前言

本篇关于Python语言接口使用 DashInfer引擎实现CPU大模型推理的是实战教学,如果想要了解相关技术原理的可以参考上篇文章:基于魔搭开源推理引擎 DashInfer实现CPU服务器大模型推理--理论篇-CSDN博客

一、环境配置

- 模型: Qwen1.5-1.8-Chat

- 推理引擎: DashInfer

- 硬件: x86, Emerald Rapids, 96 vCPU @ 3.2GHz, 16GBx24 DDR

- CPU内存32G,16核

- 服务器基础环境ubuntu22.04,torch-cpu-2.1.2,python3.10

1.1、python 依赖

dashinfer sentencepiece accelerate transformers_stream_generator tiktoken这里的主要的引擎dashinfer 我在win下pip install 一直失败,不知道是不是我环境的问题,还是本身包不支持win

还有就是protobuf好像需要3.18.0,因为我在测试出现过这个包协议错误问题,

TensorFlow官方的建议是不使用,可能会存在冲突,所以可能需要卸载

1.2、硬件支持

- x86 CPU:要求硬件至少需要支持AVX2指令集。对于第五代至强(Xeon)处理器(Emerald Rapids)、第四代至强(Xeon)处理器(Sapphire Rapids)等(对应于阿里云第8代ECS实例,如g8i),采用AMX矩阵指令加速计算。

- ARMv9 CPU:要求硬件支持SVE指令集。支持如倚天(Yitian)710等ARMv9架构处理器(对应于阿里云第8代ECS实例,如g8y),采用SVE向量指令加速计算。

1.3、数据类型

- x86 CPU:支持FP32、BF16。

- ARM Yitian710 CPU:FP32、BF16、InstantQuant(量化技术)。

二、推理实战

2.1、在单NUMA CPU上进行单NUMA推理

示例中默认使用的是单NUMA推理。 在单NUMA CPU上进行单NUMA推理不需要特殊的权限和配置。

2.2、在多NUMA CPU上进行单NUMA推理

在多NUMA节点的CPU上,若只需要1个NUMA节点进行推理,需要用numactl进行绑核。 该方式需要在docker run的时候添加--cap-add SYS_NICE --cap-add SYS_PTRACE --ipc=host参数。

使用单NUMA推理,需要将模型配置文件中的device_ids字段改成要运行的NUMA节点编号,并将multinode_mode字段设置为true。

"device_ids": [ 0 ], "multinode_mode": true,

2.3、多NUMA推理

使用多NUMA推理,需要用mpirun+numactl进行绑核,以达到最佳性能。 该方式需要在docker run的时候添加--cap-add SYS_NICE --cap-add SYS_PTRACE --ipc=host参数。

使用多NUMA推理,需要将模型配置文件中的device_ids字段改成要运行的NUMA节点编号,并将multinode_mode字段设置为true。

"device_ids": [ 0, 1 ], "multinode_mode": true,2.4、原始模型下载

## download model from modelscope original_model = { "source": "modelscope", "model_id": "qwen/Qwen1.5-1.8B-Chat", "revision": "master", "model_path": "" } def download_model(model_id, revision, source="modelscope"): """ x下载原始模型 :param model_id: :param revision: :param source: :return: """ print(f"Downloading model {model_id} (revision: {revision}) from {source}") if source == "modelscope": from modelscope import snapshot_download model_dir = snapshot_download(model_id, revision=revision) elif source == "huggingface": from huggingface_hub import snapshot_download model_dir = snapshot_download(repo_id=model_id) else: raise ValueError("Unknown source") print(f"Save model to path {model_dir}") return model_dir2.5、prompt的组织格式

start_text = "<|im_start|>" end_text = "<|im_end|>" system_msg = {"role": "system", "content": "You are a helpful assistant."} user_msg = {"role": "user", "content": ""} assistant_msg = {"role": "assistant", "content": ""} prompt_template = Template( "{{start_text}}" + "{{system_role}}\n" + "{{system_content}}" + "{{end_text}}\n" + "{{start_text}}" + "{{user_role}}\n" + "{{user_content}}" + "{{end_text}}\n" + "{{start_text}}" + "{{assistant_role}}\n")2.6、模型转换配置文件

config_qwen_v15_1_8b.json

{ "model_name": "Qwen1.5-1.8B-Chat", "model_type": "Qwen_v15", "model_path": "./dashinfer_models/", "data_type": "float32", "device_type": "CPU", "device_ids": [ 0 ], "multinode_mode": false, "engine_config": { "engine_max_length": 2048, "engine_max_batch": 8, "do_profiling": false, "num_threads": 0, "matmul_precision": "medium" }, "generation_config": { "temperature": 0.95, "early_stopping": true, "top_k": 1024, "top_p": 0.8, "repetition_penalty": 1.1, "presence_penalty": 0.0, "min_length": 0, "max_length": 2048, "no_repeat_ngram_size": 0, "eos_token_id": 151643, "seed": 1234, "stop_words_ids": [ [ 151643 ], [ 151644 ], [ 151645 ] ] }, "convert_config": { "do_dynamic_quantize_convert": false }, "quantization_config": { "activation_type": "bfloat16", "weight_type": "uint8", "SubChannel": true, "GroupSize": 256 } }2.7、完整实战代码

传参配置格式化代码

文件名我这命名为llm_utils.py

# coding: utf-8 # @Time : # @Author : # @description : from typing import Tuple class Role: USER = 'user' SYSTEM = 'system' BOT = 'bot' ASSISTANT = 'assistant' ATTACHMENT = 'attachment' default_system = 'You are a helpful assistant.' def history_to_messages(history, system: str): """ 格式化传参中的history和系统提示词 :param history: [[问题,回答],[问题,回答]] :param system: :return: """ messages = [{'role': Role.SYSTEM, 'content': system}] for h in history: messages.append({'role': Role.USER, 'content': h[0]}) messages.append({'role': Role.ASSISTANT, 'content': h[1]}) return messages def messages_to_history(messages): """ 历史聊天保留 :param messages: :return: """ assert messages[0]['role'] == Role.SYSTEM system = messages[0]['content'] history = [] for q, r in zip(messages[1::2], messages[2::2]): history.append([q['content'], r['content']]) return system, history def message_to_prompt(messages) -> str: """ 提示词模板格式化 :param messages: :return: """ prompt = "" for item in messages: im_start, im_end = "<|im_start|>", "<|im_end|>" prompt += f"\n{im_start}{item['role']}\n{item['content']}{im_end}" prompt += f"\n{im_start}assistant\n" return prompt推理实战run.py

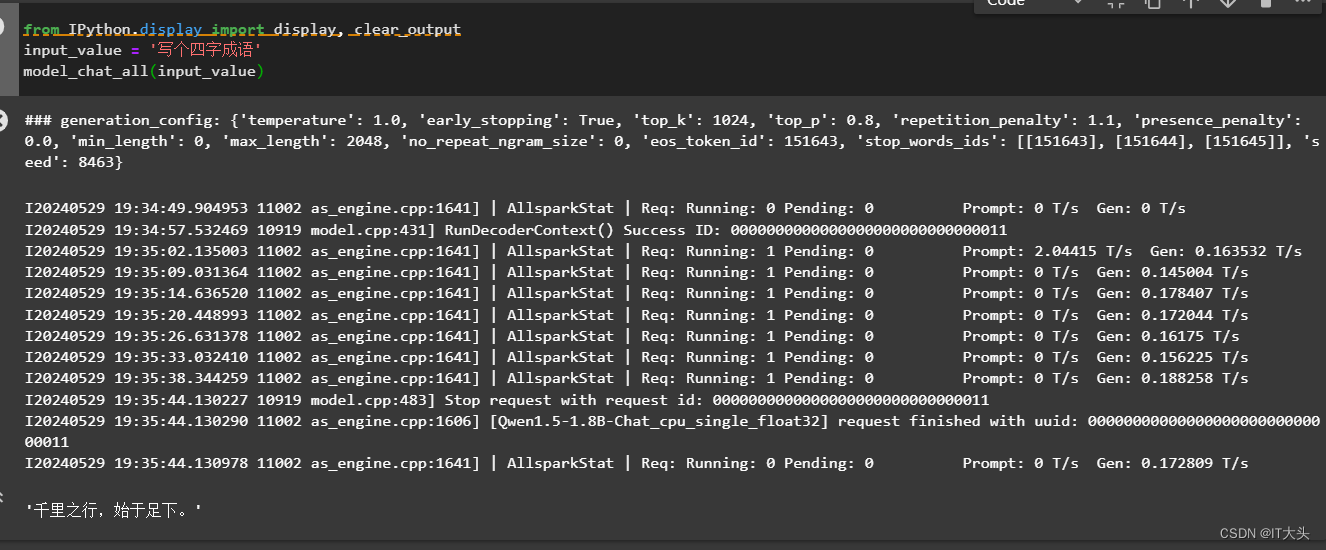

# coding: utf-8 # @Time : # @Author : # @description : import os import copy import threading import subprocess import random from typing import Optional from llm_utils import history_to_messages, Role, message_to_prompt, messages_to_history os.system("pip uninstall -y tensorflow tensorflow-estimator tensorflow-io-gcs-filesystem") os.environ["LANG"] = "C" os.environ["LC_ALL"] = "C" from dashinfer.helper import EngineHelper, ConfigManager log_lock = threading.Lock() config_file = "config_qwen_v15_1_8b.json" config = ConfigManager.get_config_from_json(config_file) def download_model(model_id, revision, source="modelscope"): """ x下载原始模型 :param model_id: :param revision: :param source: :return: """ print(f"Downloading model {model_id} (revision: {revision}) from {source}") if source == "modelscope": from modelscope import snapshot_download model_dir = snapshot_download(model_id, revision=revision) elif source == "huggingface": from huggingface_hub import snapshot_download model_dir = snapshot_download(repo_id=model_id) else: raise ValueError("Unknown source") print(f"Save model to path {model_dir}") return model_dir cmd = f"pip show dashinfer | grep 'Location' | cut -d ' ' -f 2" package_location = subprocess.run(cmd, stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=True, text=True) package_location = package_location.stdout.strip() os.environ["AS_DAEMON_PATH"] = package_location + "/dashinfer/allspark/bin" os.environ["AS_NUMA_NUM"] = str(len(config["device_ids"])) os.environ["AS_NUMA_OFFSET"] = str(config["device_ids"][0]) ## download model from modelscope original_model = { "source": "modelscope", "model_id": "qwen/Qwen1.5-1.8B-Chat", "revision": "master", "model_path": "" } original_model["model_path"] = download_model(original_model["model_id"], original_model["revision"], original_model["source"]) # GPU模型转换成CPU格式文件 engine_helper = EngineHelper(config) engine_helper.verbose = True engine_helper.init_tokenizer(original_model["model_path"]) ## convert huggingface model to dashinfer model ## only one conversion is required engine_helper.convert_model(original_model["model_path"]) # 转换,这里我记得文件不会覆盖,如果多次运行可能出现多个文件,运行后可以注释掉 engine_helper.init_engine() engine_max_batch = engine_helper.engine_config["engine_max_batch"] def model_chat(query, history=None,system='You are a helpful assistant.') : """ 生成器输出流结果 :param query: :param history: :param system: :return: """ if query is None: query = '' if history is None: history = [] messages = history_to_messages(history, system) messages.append({'role': Role.USER, 'content': query}) prompt = message_to_prompt(messages) gen_cfg = copy.deepcopy(engine_helper.default_gen_cfg) gen_cfg["seed"] = random.randint(0, 10000) request_list = engine_helper.create_request([prompt], [gen_cfg]) request = request_list[0] # request_list[0].out_text gen = engine_helper.process_one_request_stream(request) return gen def model_chat_all(query, history=None,system='You are a helpful assistant.') : """ 直接return结果,也是需要结果输出完成后才能打印 :param query: :param history: :param system: :return: """ if query is None: query = '' if history is None: history = [] messages = history_to_messages(history, system) messages.append({'role': Role.USER, 'content': query}) prompt = message_to_prompt(messages) gen_cfg = copy.deepcopy(engine_helper.default_gen_cfg) gen_cfg["seed"] = random.randint(0, 10000) request_list = engine_helper.create_request([prompt], [gen_cfg]) request = request_list[0] # request_list[0].out_text gen = engine_helper.process_one_request_stream(request) # 需要从生成器中取数据才能有结果 responses = [i for i in gen if i] # 不取数据不会返回结果 result = request_list[0].out_text return result if __name__ == '__main__': from IPython.display import display, clear_output input_value = '写个四字成语' gen = model_chat(input_value) for part in gen: # 流式查看数据 clear_output(wait=True) print(f"Input: {input_value}") print(f"Response:\n{part}")git地址:GitHub - liukangjia666/dashinfer_demo: 魔搭CPU大模型推理引擎dashinfer的测试实战代码

2.8、推理效果分析

(1)内存占用情况,我这个使用的1.8b的模型占用内存大概在8-15g左右

(2)推理速度依赖CPU的计算能力

(3)推理结果精度和GPU推理是有些区别,但整体来说影响不大

(4)json文件中的推理参数可以自定义修改,如temperature,top_p等

2.9、模型配置文件

<path_to_dashinfer>/examples/python/model_config目录下提供了一些config示例。

以下是对config中的参数说明:

- `model_name`: DashInfer模型名称,自定义; - `model_type`: DashInfer模型类型,可选项:LLaMA_v2、ChatGLM_v2、ChatGLM_v3、Qwen_v10、Qwen_v15、Qwen_v20; - `model_path`: DashInfer模型导出路径; - `data_type`: 输出的数据类型,可选项:float32; - `device_type`: 推理硬件,可选项:CPU; - `device_ids`: 用于推理的NUMA节点,可以通过Linux命令`lscpu`查看CPU的NUMA信息; - `multinode_mode`: 是否在多NUMA CPU上进行推理,可选项:true、false; - `convert_config`: 模型转换相关参数; - `do_dynamic_quantize_convert`: 是否量化权重,可选项:true、false,目前仅ARM CPU支持量化; - `engine_config`: 推理引擎参数; - `engine_max_length`: 最大推理长度,<= 11000; - `engine_max_batch`: 最大batch数; - `do_profiling`: 是否对推理过程进行profiling,可选项:true、false,若要进行profiling,需要设置`do_profiling = true`,并且设置环境变量`AS_PROFILE=ON`; - `num_threads`: 线程数,设置为单NUMA节点下的物理核数量时性能最佳,当设置的数值为0时,EngineHelper会自动解析`lscpu`的输出并设置,当设置的数值 > 0 时,采用设置的数值; - `matmul_precision`: 矩阵乘法的计算精度,可选项:high、medium,设置为high时,采用fp32进行矩阵乘法计算,设置为medium时,采用bf16进行计算; - `generation_config`: 生成参数; - `temperature`: 随机过程 temperature; - `early_stopping`: 在生成stop_words_ids后是否停止生成,可选项:true、false; - `top_k`: 采样过程,top k 参数,top_k = 0 时对全词表排序; - `top_p`: 采样过程,top p 参数,0 <= top_p <= 1.0,top_p = 0 表示不使用topp; - `repetition_penalty`: The parameter for repetition penalty. 1.0 means no penalty. - `presence_penalty`: The parameter for presence penalty. 0.0 means no penalty. - `min_length`: 输入+输出的最小长度,默认为0,不启用filter; - `max_length`: 输入+输出的最大长度; - `no_repeat_ngram_size`: 用于控制重复词生成,默认为0,If set to int > 0, all ngrams of that size can only occur once. - `eos_token_id`: EOS对应的token,取决于模型; - `seed`: 随机数seed; - `stop_words_ids`: List of token ids of stop ids. - `quantization_config`: 量化参数,当 do_dynamic_quantize_convert = true 时需要设置; - `activation_type`: 矩阵乘法的输入矩阵数据类型,可选项:bfloat16; - `weight_type`: 矩阵乘法的权重数据类型,可选项:uint8; - `SubChannel`: 是否进行权重sub-channel量化,可选项:true、false; - `GroupSize`: sub-channel量化粒度,可选项:64、128、256、512;代码开源地址:

https://github.com/modelscope/dash-infer

推理体验地址:

https://www.modelscope.cn/studios/modelscope/DashInfer-Demo

如有问题可评论区留言,或者关注下面公众号获取加群二维码进群交流