
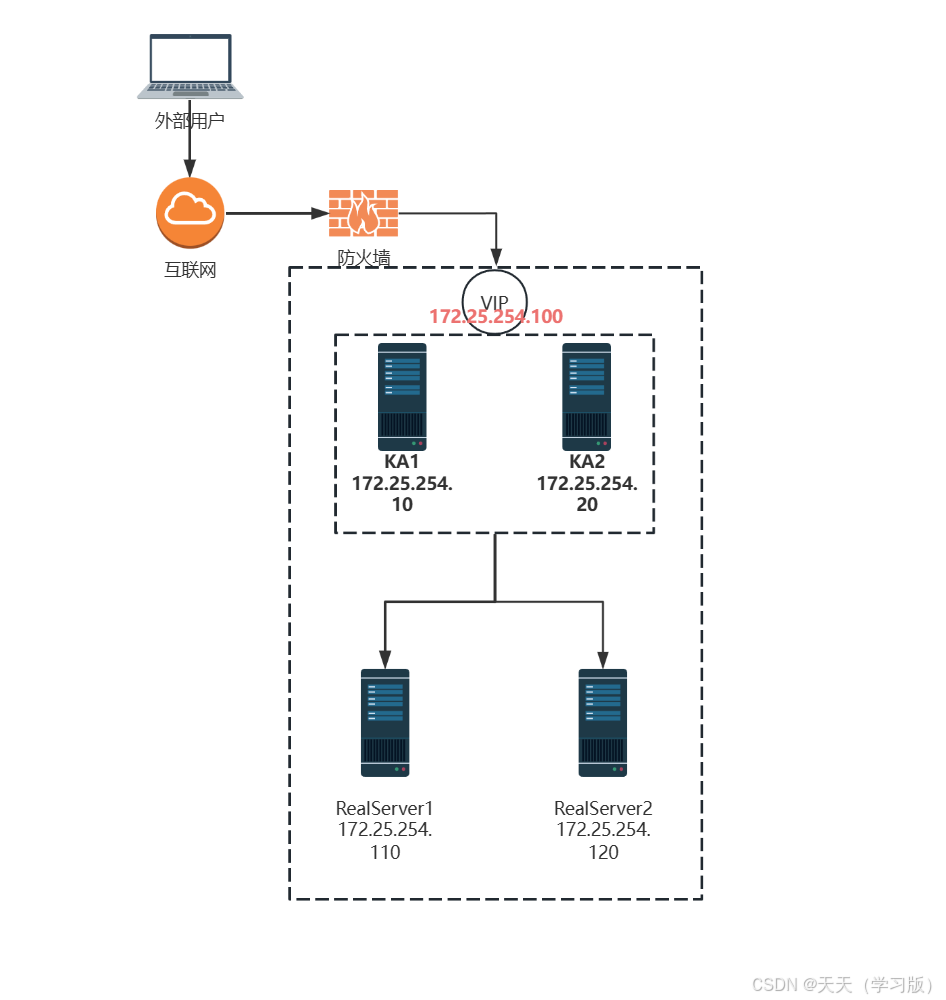

1. High-Availability Clusters

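A high-availability cluster (HA Cluster) is a server-cluster technology whose goal is to minimize service downtime. It keeps users' business applications serving requests without interruption, which reduces the impact of software, hardware, and human-caused failures on the business to a minimum. An HA cluster usually consists of several nodes (servers) that are connected over a network and jointly provide a service. The following sections describe HA clusters in detail.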

1.1 Definition and Characteristics of HA Clusters

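- Definition: a high-availability cluster is a group of hosts that runs like a single system and keeps the computing service available continuously. Through redundant deployment, failover, and similar techniques, it keeps the system running stably under high concurrency, high load, and frequent component failures.

- Characteristics:

  - High availability: redundant deployment and failover keep the system available and reduce service downtime.

  - Load balancing: user requests are distributed across multiple nodes, increasing processing capacity and reducing response time.

  - Automatic recovery: node failures are detected automatically, and the failed node's work is moved to other healthy nodes.

  - Easy to scale: nodes can be added or removed at any time as needed.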

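1.2 Key Technologies of HA Clusters

- VRRP: the Virtual Router Redundancy Protocol is one of the key technologies for building HA clusters. It elects one MASTER node that handles external requests and forwarding, while the other nodes stand by as BACKUP nodes. When the MASTER fails, a BACKUP node quickly takes over its work, so the service continues.

- Heartbeat detection: heartbeats are the main way to determine whether nodes can still reach each other. Each node sends heartbeat messages periodically; if a node receives no heartbeat from a peer within a preset time, it considers that peer failed.

- Failover: when a node fails, the system automatically moves that node's work to other healthy nodes, keeping the system and its services running.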

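1.3 VRRP (Virtual Router Redundancy Protocol)

The Virtual Router Redundancy Protocol removes the single point of failure of a statically configured default gateway.

Hardware implementations: routers, layer-3 switches

Software implementation: keepalived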

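1.3.1 VRRP Terminology

- Virtual router: Virtual Router, the logical router presented by a group of physical routers
- VRID (virtual router identifier, 1-255): uniquely identifies a virtual router
- VIP: Virtual IP
- VMAC: Virtual MAC (00-00-5e-00-01-VRID)
- Physical routers:
  - master: the active device
  - backup: the standby device
  - priority: the priority of each device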
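The VMAC format above can be illustrated with a small bash helper that derives the virtual MAC from a VRID. The function name `vrrp_vmac` is hypothetical, and the sketch assumes the RFC range of 1-255 for the VRID. Keep in mind that keepalived, by default, leaves the VIP on the interface's real MAC address (the ifconfig output later in this article shows eth0:1 with the physical MAC); the VMAC is only used when `use_vmac` is configured.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive the IPv4 VRRP virtual MAC from a VRID.
# Format: 00-00-5e-00-01-<VRID in hex>
vrrp_vmac() {
    local vrid=$1
    # reject non-numeric values and anything outside 1..255
    if ! [[ $vrid =~ ^[0-9]+$ ]] || (( 10#$vrid < 1 || 10#$vrid > 255 )); then
        echo "invalid VRID: $vrid" >&2
        return 1
    fi
    printf '00-00-5e-00-01-%02x\n' "$(( 10#$vrid ))"
}

vrrp_vmac 100   # VRID used in the examples of this article
```

For VRID 100 this prints `00-00-5e-00-01-64`.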

1.3.2 VRRP Mechanisms

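- Advertisements: heartbeat, priority, etc., sent periodically
- Working modes: preemptive, non-preemptive
- Authentication:
  - none
  - simple text authentication: pre-shared key
  - MD5
- Operating modes:
  - master/backup: a single virtual router
  - master/master: master/backup for virtual router 1 plus backup/master for virtual router 2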
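The periodic advertisements are what the BACKUP nodes use to detect a dead MASTER. As a worked example, RFC 3768 (VRRPv2, the version shown in the tcpdump captures later in this article) defines `Skew_Time = (256 - Priority) / 256` and `Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time`. The hypothetical bash function below only evaluates that formula with awk; it sketches the protocol rule, not keepalived's internal code.

```bash
#!/usr/bin/env bash
# Sketch of the VRRPv2 (RFC 3768) master-down timer of a BACKUP node.
#   Skew_Time            = (256 - Priority) / 256          (seconds)
#   Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time
# $1 = this backup's priority (1-254), $2 = advert_int in seconds
master_down_interval() {
    awk -v p="$1" -v a="$2" 'BEGIN { printf "%.6f\n", 3 * a + (256 - p) / 256 }'
}

master_down_interval 100 1   # 3.609375
master_down_interval 80 1    # 3.687500
```

With `advert_int 1`, a backup with priority 80 declares the master dead after roughly 3.69 s without advertisements. A backup with a higher priority times out slightly earlier, which is why it wins when several backups compete.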

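2. Deploying keepalived

2.1 Introduction to keepalived

Official site: http://keepalived.org/

Keepalived is open-source software based on VRRP (Virtual Router Redundancy Protocol). It is mainly used to eliminate single points of failure in network services, and it provides high availability and load balancing. Details follow.

2.1.1 Overview

- Open source and free: Keepalived is free, open-source software written in C. It is lightweight, simple to configure, and easy to manage, and it is one of the HA tools most commonly used by Internet companies.

- Scope: Keepalived provides high availability and load balancing. High availability is implemented with VRRP; load balancing is provided through the LVS (Linux Virtual Server) module.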

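2.1.2 Main Features

- High availability

  - Using VRRP, several servers form a virtual router cluster: one is the MASTER and the others are BACKUP servers.

  - When the MASTER fails, Keepalived quickly moves the virtual IP (VIP), and with it the service, to a BACKUP server, keeping the service continuous and stable.

  - This keeps users' business services available without interruption and minimizes the impact of software, hardware, and human-caused failures.

- Load balancing

  - Through the LVS module, Keepalived provides layer-4 load balancing: user requests are distributed to several backend servers to increase processing capacity and reduce response time.

  - Several scheduling algorithms are supported, such as round robin (rr), weighted round robin (wrr), and least connections (lc).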
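To make the weighted round robin (`wrr`) scheduler used in section 3.6.2 concrete, here is a bash sketch of the selection loop modeled on the pseudocode published by the LVS project: a current-weight threshold is stepped down by the gcd of all weights, and a server is chosen when its weight reaches the threshold. It is an illustration under that assumption, not the kernel's ip_vs_wrr code; all variable and function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of weighted round-robin (wrr) selection, modeled on the LVS
# project's published pseudocode. Names are hypothetical.

gcd() {                      # greatest common divisor of two integers
    local a=$1 b=$2 t
    while (( b != 0 )); do t=$(( a % b )); a=$b; b=$t; done
    echo "$a"
}

WEIGHTS=()    # weights of the real servers, set by the caller
WRR_I=-1      # index of the server selected last
WRR_CW=0      # current weight threshold
WRR_PICK=-1   # result of the most recent wrr_next call

# Select the next server and store its index in WRR_PICK (no subshell,
# so the scheduler state survives between calls).
wrr_next() {
    local n=${#WEIGHTS[@]} g=0 max=0 w
    for w in "${WEIGHTS[@]}"; do
        g=$(gcd "$g" "$w")
        if (( w > max )); then max=$w; fi
    done
    if (( max == 0 )); then WRR_PICK=-1; return 1; fi   # no usable server
    while true; do
        WRR_I=$(( (WRR_I + 1) % n ))
        if (( WRR_I == 0 )); then
            WRR_CW=$(( WRR_CW - g ))
            if (( WRR_CW <= 0 )); then WRR_CW=$max; fi
        fi
        if (( WEIGHTS[WRR_I] >= WRR_CW )); then
            WRR_PICK=$WRR_I
            return 0
        fi
    done
}

# Usage: server 0 with weight 3, server 1 with weight 1
WEIGHTS=(3 1)
for _ in 1 2 3 4 5 6 7 8; do wrr_next; printf '%s ' "$WRR_PICK"; done; echo
# prints: 0 0 0 1 0 0 0 1
```

With equal weights, as in section 3.6.2 where both real servers have weight 1, the sequence simply alternates, which matches the alternating curl responses in that test.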

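2.2 keepalived Architecture

Core user-space components:

- vrrp stack: sends the VIP (VRRP) advertisements
- checkers: health-check the real servers
- system call: runs scripts when the VRRP state changes
- SMTP: email notification component
- IPVS wrapper: generates the IPVS rules
- Netlink Reflector: manages the network interfaces
- WatchDog: monitors the processes

**Control component:** provides the parser for keepalived.conf and applies the Keepalived configuration

**I/O multiplexer:** keepalived's own thread abstraction, optimized for networking

**Memory management component:** provides access to common memory-management functions such as allocation, reallocation, and release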

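2.3 keepalived Environment Preparation

- The clocks of all nodes must be synchronized: ntp, chrony
- Disable the firewall and SELinux
- Nodes can reach each other by hostname: optional
- Using the /etc/hosts file for this is recommended: optional
- The root users of all nodes can log in to each other with key-based SSH authentication: optional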

Prepare the two web servers (rs1 and rs2) that act as real servers later on:

```
[root@rs1 ~]# yum install -y httpd
[root@rs1 ~]# echo 172.25.254.110 > /var/www/html/index.html
[root@rs1 ~]# systemctl enable --now httpd

[root@rs2 ~]# yum install -y httpd
[root@rs2 ~]# echo 172.25.254.120 > /var/www/html/index.html
[root@rs2 ~]# systemctl enable --now httpd
```

2.4 keepalived Files

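Package name: keepalived

Main binary: /usr/sbin/keepalived

Main configuration file: /etc/keepalived/keepalived.conf

Sample configuration files: /usr/share/doc/keepalived/

Unit file: /lib/systemd/system/keepalived.service

Environment file for the unit: /etc/sysconfig/keepalived

Note: on RHEL 7 you may run into the following bugs:

```
systemctl restart keepalived                            # the new configuration may not take effect
systemctl stop keepalived; systemctl start keepalived   # the process may not stop and has to be killed manually
```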

2.5 Installing keepalived

```
[root@KA1 ~]# dnf install keepalived -y
[root@KA1 ~]# systemctl start keepalived
[root@KA1 ~]# ps axf | grep keepalived
   2385 pts/0    S+     0:00  \_ grep --color=auto keepalived
   2326 ?        Ss     0:00 /usr/sbin/keepalived -D
   2327 ?        S      0:00  \_ /usr/sbin/keepalived -D
```

2.6 keepalived Configuration

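Configuration file: /etc/keepalived/keepalived.conf

Structure of the configuration file:

- GLOBAL CONFIGURATION
  - Global definitions: email settings, router_id, VRRP settings, multicast address, etc.
- VRRP CONFIGURATION
  - VRRP instance(s): defines each VRRP virtual router
- LVS CONFIGURATION
  - Virtual server group(s)
  - Virtual server(s): the VS and RSs of the LVS cluster

2.6.1 Global Configuration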

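```
global_defs {
    notification_email {
        xxx@xxx                        # recipients notified when keepalived fails over; one address per line
    }
    notification_email_from xxx@xxx    # sender address
    smtp_server 127.0.0.1              # mail server address
    smtp_connect_timeout 30            # timeout for connecting to the mail server
    router_id ka1                      # unique identifier of this keepalived host; the hostname is
                                       # recommended, but duplicate names on several nodes do no harm
    vrrp_skip_check_adv_addr           # by default every advertisement is fully checked, which costs CPU;
                                       # with this option, an advertisement from the same router as the
                                       # previous one skips the check
    vrrp_strict                        # strictly follow the VRRP protocol; with it, the service cannot start if:
                                       #   1. no VIP is configured
                                       #   2. unicast peers are configured
                                       #   3. IPv6 addresses are used with VRRP version 2
                                       # leaving this option out is recommended
    vrrp_garp_interval 0               # delay between gratuitous ARP messages; 0 means no delay
    vrrp_gna_interval 0                # delay between unsolicited neighbour advertisements (IPv6); 0 means no delay
    vrrp_mcast_group4 224.0.0.18       # IPv4 multicast group used for the VRRP advertisements
}
```

2.6.2 Virtual Router Configuration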

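```
vrrp_instance VI_1 {
    state MASTER               # initial state: MASTER or BACKUP
    interface eth0             # physical interface used by this virtual router, e.g. eth0;
                               # it may differ from the interface that carries the VIP
    virtual_router_id 100      # unique ID of the virtual router, range 1-255; it must be unique
                               # within the network, otherwise the service cannot start, and all
                               # keepalived nodes of the same virtual router must use the same value
    priority 100               # priority of this node in the virtual router, range 1-254;
                               # higher wins; each keepalived node uses a different value
    advert_int 1               # VRRP advertisement interval, default 1 s
    authentication {           # authentication
        auth_type PASS         # AH = IPsec authentication (not recommended), PASS = simple password (recommended)
        auth_pass 1111         # pre-shared key, only the first 8 characters are used;
                               # must be identical on all nodes of the same virtual router
    }
    virtual_ipaddress {        # virtual IPs; production setups may define hundreds
        172.25.254.100/24 dev eth0 label eth0:1   # the VIP; without dev, the instance's interface is used;
                                                  # without /prefix, /32 is assumed
    }
}
```

Example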

Configure ka1:

```
[root@ka1 ~]# vim /etc/keepalived/keepalived.conf
....................
global_defs {
    notification_email {
        2111683866@qq.com
    }
    notification_email_from keepalived@ka1.zty.org
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id ka1
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.0.18
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 100
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
}
[root@ka1 ~]# systemctl enable --now keepalived.service
Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.
```

Configure ka2:

```
[root@ka2 ~]# vim /etc/keepalived/keepalived.conf
....................
global_defs {
    notification_email {
        2111683866@qq.com
    }
    notification_email_from keepalived@ka1.zty.org
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id ka2
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.0.18
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 100
    priority 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
}
[root@ka2 ~]# systemctl enable --now keepalived.service
Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.
```

Test

```
# Check the interfaces
[root@ka1 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
        RX packets 2205  bytes 194696 (190.1 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 2066  bytes 233647 (228.1 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)

# Watch the multicast advertisements
[root@ka1 ~]# tcpdump -i eth0 -nn host 224.0.0.18
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
11:06:35.490328 IP 172.25.254.10 > 224.0.0.18: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20
11:06:36.491391 IP 172.25.254.10 > 224.0.0.18: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20
11:06:37.492541 IP 172.25.254.10 > 224.0.0.18: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20
11:06:38.493659 IP 172.25.254.10 > 224.0.0.18: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20
```

After keepalived is stopped on ka1, the advertisements come from ka2 instead, and the VIP appears on ka2's interface:

```
[root@ka1 ~]# systemctl stop keepalived.service
[root@ka1 ~]# tcpdump -i eth0 -nn host 224.0.0.18
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
11:08:51.288416 IP 172.25.254.20 > 224.0.0.18: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20
11:08:52.289695 IP 172.25.254.20 > 224.0.0.18: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20

[root@ka2 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.20  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fe5e:abca  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)
        RX packets 2023  bytes 179497 (175.2 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 1829  bytes 188251 (183.8 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)
```

Start keepalived on ka1 again and watch the advertisements: ka1 sends them again, and the VIP moves back to ka1:

```
[root@ka1 ~]# systemctl start keepalived.service
[root@ka1 ~]# tcpdump -i eth0 -nn host 224.0.0.18
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
11:10:20.384044 IP 172.25.254.10 > 224.0.0.18: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20
11:10:21.385090 IP 172.25.254.10 > 224.0.0.18: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20
11:10:22.385320 IP 172.25.254.10 > 224.0.0.18: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20
```

2.6.3 Making the VIP Reachable

Because vrrp_strict is set in the global configuration, keepalived installs an iptables rule that drops traffic to the VIP, so the VIP cannot be accessed. The rules can be listed with iptables -nL:

```
[root@ka2 ~]# iptables -nL
Chain INPUT (policy ACCEPT)
target     prot opt source               destination
ACCEPT     udp  --  0.0.0.0/0            0.0.0.0/0            udp dpt:53
ACCEPT     tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:53
ACCEPT     udp  --  0.0.0.0/0            0.0.0.0/0            udp dpt:67
ACCEPT     tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:67
DROP       all  --  0.0.0.0/0            0.0.0.0/0            match-set keepalived dst
```

Method 1: do not set vrrp_strict

```
global_defs {
    notification_email {
        2111683866@qq.com
    }
    notification_email_from keepalived@ka1.zty.org
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id ka1
    vrrp_skip_check_adv_addr
    #vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.0.18
}
```

Method 2: keep vrrp_strict and add vrrp_iptables (given without chain names, it stops keepalived from adding its iptables rules)

```
global_defs {
    notification_email {
        2111683866@qq.com
    }
    notification_email_from keepalived@ka1.zty.org
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id ka2
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.0.18
    vrrp_iptables
}
```

Test

Ping 172.25.254.100 from rs1:

```
[root@rs1 ~]# ping 172.25.254.100
PING 172.25.254.100 (172.25.254.100) 56(84) bytes of data.
64 bytes from 172.25.254.100: icmp_seq=1 ttl=64 time=0.252 ms
64 bytes from 172.25.254.100: icmp_seq=2 ttl=64 time=0.316 ms
64 bytes from 172.25.254.100: icmp_seq=3 ttl=64 time=0.356 ms
```

2.6.4 Enabling Logging

Edit /etc/sysconfig/keepalived (-D logs detailed messages, -S 6 sends them to the syslog facility local6):

```
[root@ka1 ~]# vim /etc/sysconfig/keepalived
[root@ka1 ~]# egrep -v "^#|^$" /etc/sysconfig/keepalived
KEEPALIVED_OPTIONS="-D -S 6"
```

Edit /etc/rsyslog.conf:

```
[root@ka1 ~]# vim /etc/rsyslog.conf
....................
local6.*    /var/log/keepalived.log
....................
```

Restart the keepalived and rsyslog services:

```
[root@ka1 ~]# systemctl restart keepalived.service rsyslog.service
[root@ka1 ~]# tail -n3 /var/log/keepalived.log
Aug 12 13:10:56 ka1 Keepalived_vrrp[6681]: Sending gratuitous ARP on eth0 for 172.25.254.100
Aug 12 13:10:56 ka1 Keepalived_vrrp[6681]: Sending gratuitous ARP on eth0 for 172.25.254.100
Aug 12 13:10:56 ka1 Keepalived_vrrp[6681]: Sending gratuitous ARP on eth0 for 172.25.254.100
```

2.6.5 Using Separate Sub-configuration Files

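In a complex production environment, /etc/keepalived/keepalived.conf becomes long and hard to manage.

The configuration of each cluster, for example each cluster's VIP settings, can be placed in its own sub-file and pulled in with the include directive.

Syntax: include /path/file

Example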

```
[root@ka1 ~]# mkdir /etc/keepalived/conf.d        # create the sub-configuration directory
[root@ka1 ~]# vim /etc/keepalived/keepalived.conf
....................
include /etc/keepalived/conf.d/*.conf              # include the sub-configuration files
....................
```

Create the sub-configuration file:

```
[root@ka1 ~]# vim /etc/keepalived/conf.d/172.25.254.100.conf
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 100
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
}
```

3. keepalived Enterprise Use Cases

3.1 Single-Master (master/slave) keepalived Architecture

3.1.1 MASTER Configuration

```
[root@ka1 ~]# vim /etc/keepalived/keepalived.conf
....................
global_defs {
    notification_email {
        2111683866@qq.com
    }
    notification_email_from keepalived@ka1.zty.org
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id ka1
    vrrp_skip_check_adv_addr
    #vrrp_strict                   # with this option enabled, the VIP cannot be reached
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.0.18
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 100
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
}
```

3.1.2 BACKUP Configuration

```
# Same as the MASTER except for three lines: router_id, state, and priority
[root@ka2 ~]# vim /etc/keepalived/keepalived.conf
....................
global_defs {
    notification_email {
        2111683866@qq.com
    }
    notification_email_from keepalived@ka1.zty.org
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id ka2
    vrrp_skip_check_adv_addr
    #vrrp_strict                   # with this option enabled, the VIP cannot be reached
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.0.18
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 100          # the same ID means the same virtual router
    priority 80                    # lower priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
}
```

3.2 Preemptive and Non-preemptive Modes

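3.2.1 Non-preemptive Mode (nopreempt)

The default is preemptive mode (preempt): when a higher-priority host comes back online, it takes the MASTER role back from the lower-priority host. As a result, the VIP moves back and forth between the keepalived hosts, which causes network jitter.

Non-preemptive mode (nopreempt) is recommended: when the higher-priority host recovers, it does not take the MASTER role back from the lower-priority host.

In non-preemptive mode, if the original host goes down, the VIP moves to the new host; if that host later goes down as well, the VIP moves back to the original host.

Note: to disable VIP preemption, state must be set to BACKUP on every keepalived server.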
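The preemption rules above can be condensed into a small decision function. This is a simplified model with a hypothetical function name: it only compares priorities, and it ignores the RFC tie-break on the higher IP address as well as preempt_delay.

```bash
#!/usr/bin/env bash
# Simplified model: what does a recovering node do when another node is
# already MASTER?  $1 = own priority, $2 = current master's priority,
# $3 = preempt | nopreempt.  Prints "takeover" or "stay".
recovering_node_action() {
    local own=$1 master=$2 mode=$3
    if [[ $mode == nopreempt ]]; then
        echo stay                 # never take the MASTER role back
    elif (( own > master )); then
        echo takeover             # default preempt mode: higher priority wins
    else
        echo stay
    fi
}

recovering_node_action 100 80 preempt     # ka1 back online, default mode
recovering_node_action 100 80 nopreempt   # ka1 back online, nopreempt
```

The first call prints `takeover`, the second `stay`, which is the behaviour the following test reproduces with real keepalived nodes.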

Example

ka1 configuration:

```
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 100
    priority 100          # higher priority
    nopreempt             # non-preemptive mode
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
}
```

ka2 configuration:

```
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 100
    priority 80           # lower priority
    nopreempt             # non-preemptive mode
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
}
```

Restart keepalived on both nodes.

Test

Check ka1's interfaces:

```
[root@ka1 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
        RX packets 17260  bytes 1331273 (1.2 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 27122  bytes 2309306 (2.2 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
```

Stop keepalived on ka1, start it again, and check the interfaces: the VIP stays on ka2.

```
[root@ka1 ~]# systemctl stop keepalived.service
[root@ka1 ~]# systemctl start keepalived.service
[root@ka1 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
        RX packets 17260  bytes 1331273 (1.2 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 27122  bytes 2309306 (2.2 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

[root@ka2 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.20  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fe5e:abca  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)
        RX packets 20712  bytes 1402829 (1.3 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 24770  bytes 1901965 (1.8 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)
```

3.2.2 Delayed Preemption (preempt_delay)

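With preempt_delay, a recovering higher-priority node waits the given number of seconds before it takes the MASTER role back. As with nopreempt, the nodes must be configured with state BACKUP for this to work.

Example

ka1 configuration:

```
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 100
    priority 100          # higher priority
    preempt_delay 5       # wait 5 seconds before preempting
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
}
```

ka2 configuration:

```
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 100
    priority 80           # lower priority
    preempt_delay 5       # wait 5 seconds before preempting
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
}
```

Test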

Check ka1's interfaces:

```
[root@ka1 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
        RX packets 17867  bytes 1375227 (1.3 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 27599  bytes 2361474 (2.2 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
```

Stop keepalived on ka1 and start it again; right afterwards, the VIP is not on ka1:

```
[root@ka1 ~]# systemctl stop keepalived.service
[root@ka1 ~]# systemctl start keepalived.service
[root@ka1 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
        RX packets 17971  bytes 1383223 (1.3 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 27692  bytes 2372082 (2.2 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
```

About 5 seconds later, check ka1 again: the VIP has come back.

```
[root@ka1 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
        RX packets 18054  bytes 1389683 (1.3 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 27843  bytes 2387092 (2.2 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
```

3.3 Unicast VRRP Advertisements

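By default, keepalived hosts exchange advertisements by multicast, which can congest the network; switching to unicast reduces network traffic.

Note: unicast cannot be used when vrrp_strict is enabled.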
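With more than two keepalived nodes, each node's unicast_peer list must contain every other node. A hypothetical bash helper (not part of keepalived) that prints the two unicast settings for one node, given its own IP and the IPs of all nodes, could look like this:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print unicast_src_ip / unicast_peer for one node.
# $1 = this node's IP, remaining arguments = IPs of all cluster nodes.
unicast_block() {
    local self=$1 ip
    shift
    printf 'unicast_src_ip %s\n' "$self"
    printf 'unicast_peer {\n'
    for ip in "$@"; do
        if [[ $ip == "$self" ]]; then continue; fi   # a node is not its own peer
        printf '    %s\n' "$ip"
    done
    printf '}\n'
}

# The lines ka1 needs in the two-node example
unicast_block 172.25.254.10 172.25.254.10 172.25.254.20
```

The output is meant to be pasted into that node's vrrp_instance block; generating it from one shared node list keeps the peer lists of all nodes consistent.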

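```
# In the vrrp_instance block of every node, set the IPs of the other hosts.
# Preferably use addresses on a dedicated heartbeat network rather than the service network.
unicast_src_ip <IPADDR>     # source IP used to send the unicast advertisements
unicast_peer {
    <IPADDR>                # IP of a peer host that receives them
    ......
}
# With vrrp_strict enabled, unicast cannot be used; otherwise the service fails to start
```

Example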

ka1 configuration:

```
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 100
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
    unicast_src_ip 172.25.254.10    # this host's IP
    unicast_peer {
        172.25.254.20               # the peer host's IP
                                    # with more keepalived nodes, add their IPs as well
    }
}
```

ka2 configuration:

```
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 100
    priority 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.100/24 dev eth0 label eth0:1
    }
    unicast_src_ip 172.25.254.20
    unicast_peer {
        172.25.254.10
    }
}
```

Capture packets to see the unicast advertisements:

```
[root@ka1 ~]# tcpdump -i eth0 -nn src host 172.25.254.10 and dst host 172.25.254.20
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
18:01:58.401212 IP 172.25.254.10 > 172.25.254.20: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20
18:01:59.402549 IP 172.25.254.10 > 172.25.254.20: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20

# after keepalived stops on ka1, ka2 sends the advertisements to ka1
[root@ka1 ~]# systemctl stop keepalived.service
[root@ka1 ~]# tcpdump -i eth0 -nn src host 172.25.254.20 and dst host 172.25.254.10
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
18:02:33.069672 IP 172.25.254.20 > 172.25.254.10: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20
18:02:34.070261 IP 172.25.254.20 > 172.25.254.10: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20
```

3.4 keepalived Notification Scripts

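When keepalived's state changes, it can run a script automatically, for example to send an email to the administrator.

By default, scripts are run as the user keepalived_script.

If this user does not exist, scripts are run as root. The user that runs the scripts can be set with the following directive:

```
global_defs {
    ......
    script_user <USER>
    ......
}
```

3.4.1 Notification Script Types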

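Script triggered when this node becomes MASTER:

```
notify_master <STRING>|<QUOTED-STRING>
```

Script triggered when this node becomes BACKUP:

```
notify_backup <STRING>|<QUOTED-STRING>
```

Script triggered when this node enters the FAULT state:

```
notify_fault <STRING>|<QUOTED-STRING>
```

Generic notification: a single script handles all three state transitions above:

```
notify <STRING>|<QUOTED-STRING>
```

Script triggered when VRRP stops:

```
notify_stop <STRING>|<QUOTED-STRING>
```

3.4.2 How the Scripts Are Called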

Add the following lines at the end of the vrrp_instance VI_1 block:

```
notify_master "/etc/keepalived/notify.sh master"
notify_backup "/etc/keepalived/notify.sh backup"
notify_fault "/etc/keepalived/notify.sh fault"
```

3.4.3 Creating the Notification Script

```bash
#!/bin/bash
# Send an email when this node changes VRRP state; $1 = master | backup | fault
mail_dest='xxxxx@qq.com'

mail_send() {
    local mail_subj="$HOSTNAME to be $1, vip moved"
    local mail_mess="$(date '+%F %T'): vrrp transition, $HOSTNAME became $1"
    echo "$mail_mess" | mail -s "$mail_subj" "$mail_dest"
}

case $1 in
    master) mail_send master ;;
    backup) mail_send backup ;;
    fault)  mail_send fault ;;
    *)      exit 1 ;;
esac
```

3.4.4 Mail Configuration

安装邮件发送工具

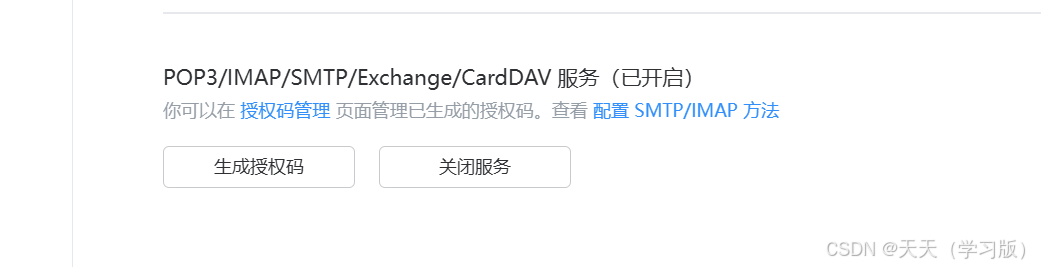

[root@ka1 + ka2 ~]# dnf install mailx -y 在 qq邮箱 中选择 账号与安全,点击 安全设置,开启POP3/IMAP/SMTP/Exchange/CardDAV 服务,生成授权码

ka服务器邮箱配置,在末尾添加

[root@ka1 + ka2 ~]# vim /etc/mail.rc set from=xxxxx@qq.com set smtp=smtp.qq.com set smtp-auth-user=xxxxx@qq.com set smtp-auth-password=生成的授权码 set smtp-auth=login set ssl-verify=ignore 发送测试邮件

[root@ka1 + ka2 ~]# echo test message |mail -s test xxxxx@qq.com 在自己的qq邮箱中查看信息

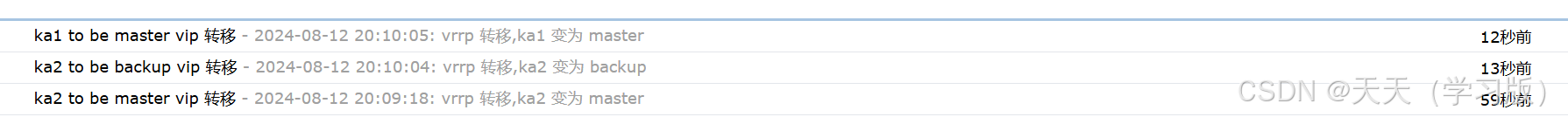

3.4.5 Practical Case: Notification Script for keepalived State Changes

Configure the following on all keepalived nodes:

```
[root@ka1 + ka2 ~]# vim /etc/keepalived/mail.sh
#!/bin/bash
# Send an email when this node changes VRRP state; $1 = master | backup | fault
mail_dest='xxxxx@qq.com'

mail_send() {
    local mail_subj="$HOSTNAME to be $1, vip moved"
    local mail_mess="$(date '+%F %T'): vrrp transition, $HOSTNAME became $1"
    echo "$mail_mess" | mail -s "$mail_subj" "$mail_dest"
}

case $1 in
    master) mail_send master ;;
    backup) mail_send backup ;;
    fault)  mail_send fault ;;
    *)      exit 1 ;;
esac
[root@ka1 + ka2 ~]# chmod +x /etc/keepalived/mail.sh
[root@ka1 + ka2 ~]# vim /etc/keepalived/keepalived.conf
....................
vrrp_instance VI_1 {
    ....................
    notify_master "/etc/keepalived/mail.sh master"
    notify_backup "/etc/keepalived/mail.sh backup"
    notify_fault "/etc/keepalived/mail.sh fault"
}
```

Test

Stop keepalived on ka1 to take it offline, then start it again:

```
[root@ka1 ~]# systemctl stop keepalived.service
[root@ka1 ~]# systemctl start keepalived.service
```

Check the received messages in QQ Mail in the browser.

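3.5 Dual-Master (master/master) keepalived Architecture

In the single-master (master/slave) architecture, only one keepalived host serves traffic at any time: that host is busy while the other sits idle, so resource utilization is low. A master/master architecture solves this.

Master/master architecture:

Two or more VIPs run on different keepalived servers, so that the servers serve web traffic in parallel and resource utilization improves.

Example

On ka1, add the following (ka1 is the BACKUP for VI_2):

```
....................
vrrp_instance VI_2 {
    state BACKUP
    interface eth0
    virtual_router_id 200
    priority 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.200/24 dev eth0 label eth0:2
    }
    unicast_src_ip 172.25.254.10
    unicast_peer {
        172.25.254.20
    }
}
```

On ka2, add the following (ka2 is the MASTER for VI_2):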

```
....................
vrrp_instance VI_2 {
    state MASTER
    interface eth0
    virtual_router_id 200
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.254.200/24 dev eth0 label eth0:2
    }
    unicast_src_ip 172.25.254.20
    unicast_peer {
        172.25.254.10
    }
}
```

Test

Check the interfaces on ka1 and ka2:

```
[root@ka1 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
        RX packets 2161  bytes 178704 (174.5 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 8346  bytes 567484 (554.1 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)

[root@ka2 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.20  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fe5e:abca  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)
        RX packets 8778  bytes 581155 (567.5 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 2071  bytes 212059 (207.0 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

eth0:2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.200  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)
```

Stop keepalived on ka2 and check ka1's interfaces: both VIPs are now on ka1.

```
[root@ka2 ~]# systemctl stop keepalived.service
[root@ka1 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
        inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
        RX packets 2312  bytes 188638 (184.2 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 8519  bytes 581816 (568.1 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)

eth0:2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.25.254.200  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
```

3.6 High Availability for IPVS

3.6.1 IPVS Configuration

3.6.1.1 Structure of a Virtual Server Definition

```
virtual_server IP port {
    ...
    real_server {
        ...
    }
    real_server {
        ...
    }
    ...
}
```

3.6.1.2 Ways to Define a virtual_server

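```
virtual_server IP port          # define the virtual host by IP address and port
virtual_server fwmark int       # use an IPVS firewall mark, for firewall-mark-based load-balancing clusters
virtual_server group string     # use a virtual server group
```

3.6.1.3 Virtual Server Options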

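```
virtual_server IP port {                     # VIP and port
    delay_loop <INT>                         # interval between health checks of the backend servers
    lb_algo rr|wrr|lc|wlc|lblc|sh|dh         # scheduling algorithm
    lb_kind NAT|DR|TUN                       # cluster type; must be upper case
    persistence_timeout <INT>                # persistent connection timeout
    protocol TCP|UDP|SCTP                    # service protocol, usually TCP
    sorry_server <IPADDR> <PORT>             # fallback server used when all RSs are down
    real_server <IPADDR> <PORT> {            # RS IP and port
        weight <INT>                         # RS weight
        notify_up <STRING>|<QUOTED-STRING>   # script run when the RS comes up
        notify_down <STRING>|<QUOTED-STRING> # script run when the RS goes down
        HTTP_GET|SSL_GET|TCP_CHECK|SMTP_CHECK|MISC_CHECK { ... }   # health-check method for this host
    }
}
# Note: closing braces must be on separate lines; two braces on one line, such as }}, cause an error
```

3.6.1.4 Application-Layer Health Checks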

Application-layer checks: HTTP_GET|SSL_GET

```
HTTP_GET|SSL_GET {
    url {
        path <URL_PATH>           # URL to check
        status_code <INT>         # response code that counts as healthy, usually 200
    }
    connect_timeout <INTEGER>     # request timeout, comparable to haproxy's timeout server
    nb_get_retry <INT>            # number of retries
    delay_before_retry <INT>      # delay before each retry
    connect_ip <IP ADDRESS>       # RS IP address the check request is sent to
    connect_port <PORT>           # RS port the check request is sent to
    bindto <IP ADDRESS>           # source address used for the check request
    bind_port <PORT>              # source port used for the check request
}
```

3.6.1.5 Transport-Layer Health Checks

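Transport-layer check: TCP_CHECK

```
TCP_CHECK {
    connect_ip <IP ADDRESS>       # RS IP address the check request is sent to
    connect_port <PORT>           # RS port the check request is sent to
    bindto <IP ADDRESS>           # source address used for the check request
    bind_port <PORT>              # source port used for the check request
    connect_timeout <INTEGER>     # request timeout, equivalent to haproxy's timeout server
}
```

3.6.2 Practical Case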

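Prepare the web servers and bind the VIP to the lo interface of each web server (LVS DR mode):

```
# arp_ignore=1: reply to ARP only for addresses configured on the receiving interface
# arp_announce=2: always use the best local address as the ARP source
# together they keep the real servers from answering ARP requests for the VIP on lo
[root@rs1 ~]# ip a a 172.25.254.100/32 dev lo
[root@rs1 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
[root@rs1 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
[root@rs1 ~]# echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
[root@rs1 ~]# echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

[root@rs2 ~]# ip a a 172.25.254.100/32 dev lo
[root@rs2 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
[root@rs2 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
[root@rs2 ~]# echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
[root@rs2 ~]# echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
```

Note: this configuration is temporary and is lost when the node reboots.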

For a permanent configuration, use the following settings:

```
[root@rs1 + rs2 ~]# vim /etc/sysconfig/network-scripts/ifcfg-lo
DEVICE=lo
IPADDR1=127.0.0.1
NETMASK1=255.0.0.0
IPADDR2=172.25.254.100
NETMASK2=255.255.255.255
NETWORK=127.0.0.0
# If you're having problems with gated making 127.0.0.0/8 a martian,
# you can change this to something else (255.255.255.255, for example)
BROADCAST=127.255.255.255
ONBOOT=yes
NAME=loopback
[root@rs1 + rs2 ~]# systemctl restart network

[root@rs1 + rs2 ~]# vim /etc/sysctl.conf
net.ipv4.conf.all.arp_ignore=1
net.ipv4.conf.all.arp_announce=2
net.ipv4.conf.lo.arp_ignore=1
net.ipv4.conf.lo.arp_announce=2
[root@rs1 + rs2 ~]# sysctl --system
```

Configure keepalived

Configuration on ka1:

```
[root@ka1 ~]# vim /etc/keepalived/keepalived.conf
....................
virtual_server 172.25.254.100 80 {
    delay_loop 6
    lb_algo wrr
    lb_kind DR
    #persistence_timeout 50
    protocol TCP
    real_server 172.25.254.110 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 2
        }
    }
    real_server 172.25.254.120 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 2
        }
    }
}
```

Configuration on ka2 (identical to ka1):

```
[root@ka2 ~]# vim /etc/keepalived/keepalived.conf
....................
virtual_server 172.25.254.100 80 {
    delay_loop 6
    lb_algo wrr
    lb_kind DR
    #persistence_timeout 50
    protocol TCP
    real_server 172.25.254.110 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 2
        }
    }
    real_server 172.25.254.120 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 2
        }
    }
}
```

Test

```
[C:\~]$ curl 172.25.254.100
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100    15  100    15    0     0   5265      0 --:--:-- --:--:-- --:--:--  7500
172.25.254.120

[C:\~]$ curl 172.25.254.100
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100    15  100    15    0     0   5995      0 --:--:-- --:--:-- --:--:--  7500
172.25.254.110
```

To make the effect visible, install ipvsadm on ka1 and ka2 and use it to view the LVS rules:

```
[root@ka1 ~]# yum install -y ipvsadm
[root@ka2 ~]# yum install -y ipvsadm
[root@ka1 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  172.25.254.100:80 wrr
  -> 172.25.254.110:80            Route   1      0          0
  -> 172.25.254.120:80            Route   1      0          0
```

Simulating failures

Stop httpd on rs1: rs1 is removed from the LVS rules and all traffic is directed to rs2.

```
# RS1 fails; traffic switches to RS2 automatically
[root@rs1 ~]# systemctl stop httpd.service        # RS1 goes down
[root@ka1 ~]# ipvsadm -Ln                         # view the LVS rules: rs1 has been removed
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  172.25.254.100:80 wrr
  -> 172.25.254.120:80            Route   1      0          0

# all traffic now goes to rs2
[C:\~]$ curl 172.25.254.100
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100    15  100    15    0     0   6004      0 --:--:-- --:--:-- --:--:--  7500
172.25.254.120

[C:\~]$ curl 172.25.254.100
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100    15  100    15    0     0   6516      0 --:--:-- --:--:-- --:--:--  7500
172.25.254.120
```

When ka1 fails, the service switches to ka2 automatically:

```
[root@ka1 ~]# systemctl stop keepalived.service
[root@ka1 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn

[root@ka2 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  172.25.254.100:80 wrr
  -> 172.25.254.110:80            Route   1      0          0
  -> 172.25.254.120:80            Route   1      0          0

# clients can still access the service
[C:\~]$ curl 172.25.254.100
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100    15  100    15    0     0   6095      0 --:--:-- --:--:-- --:--:--  7500
172.25.254.110

[C:\~]$ curl 172.25.254.100
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100    15  100    15    0     0   5709      0 --:--:-- --:--:-- --:--:--  7500
172.25.254.120
```

3.7 High Availability for Other Applications: VRRP Script

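With VRRP Script, keepalived calls an external helper script to monitor a resource and adjusts the node's priority dynamically based on the result, which provides high availability for other applications.

Reference configuration file: /usr/share/doc/keepalived/keepalived.conf.vrrp.localcheck

3.7.1 VRRP Script Configuration

This takes two steps:

Step 1: define the script

vrrp_script: a user-defined resource-monitoring script; the VRRP instance acts on its return value. It is a shared definition that several instances can reference, written as a separate block outside any VRRP instance, usually right after the global_defs block.

The script usually monitors the state of a specific application. Once the application is found to be abnormal, the MASTER node's priority is lowered below that of the BACKUP node, so that the VIP moves to the BACKUP node.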
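The priority change described above is plain arithmetic. The hypothetical function below sketches it for a non-zero weight: a negative weight is applied while the script fails and a positive one while it succeeds, as described in section 3.7.1.1. Clamping the result to 1..254, the valid VRRP priority range, is an assumption of this sketch. With the values of section 3.7.2 (priority 100, weight -30, ka2 at 80 as in the earlier examples), ka1 drops to 70 and ka2 takes over.

```bash
#!/usr/bin/env bash
# Sketch: priority a node advertises when a track_script with a non-zero
# weight is attached.  $1 = configured priority, $2 = weight (-254..254),
# $3 = script state: ok | fail.  The function name is hypothetical.
effective_priority() {
    local base=$1 weight=$2 state=$3 p=$1
    if (( weight < 0 )) && [[ $state == fail ]]; then
        p=$(( base + weight ))        # "fall": lower the priority
    elif (( weight > 0 )) && [[ $state == ok ]]; then
        p=$(( base + weight ))        # "rise": raise the priority
    fi
    # assumption: keep the result inside the VRRP priority range 1..254
    if (( p < 1 )); then p=1; fi
    if (( p > 254 )); then p=254; fi
    echo "$p"
}

ka1=$(effective_priority 100 -30 fail)   # /mnt/zty exists on ka1
ka2=80
if (( ka1 < ka2 )); then echo "VIP moves to ka2 ($ka1 < $ka2)"; fi
```

This prints `VIP moves to ka2 (70 < 80)`. The weight must be large enough to push the MASTER below the BACKUP: with weight -10, ka1 would still advertise 90 and keep the VIP.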

    vrrp_script <SCRIPT_NAME> {
        script <STRING>|<QUOTED-STRING>   # a non-zero exit status from this script triggers the OPTIONS below
        OPTIONS
    }

Call the script

track_script: uses scripts defined with vrrp_script to monitor resources. It is configured inside a VRRP instance and references a vrrp_script defined beforehand.

    track_script {
        SCRIPT_NAME_1
        SCRIPT_NAME_2
    }

3.7.1.1 Defining a VRRP script

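    vrrp_script <SCRIPT_NAME> {          # define a check script; configured outside global_defs
        script <STRING>|<QUOTED-STRING>  # shell command or path to a script
        interval <INTEGER>               # interval between runs, in seconds; default 1
        timeout <INTEGER>                # seconds after which a run is treated as failed
        weight <INTEGER:-254..254>       # default 0. If negative: when the script returns non-zero,
                                         # this value is added to the node's priority, lowering it
                                         # ("fall"). If positive: when the script returns 0, this
                                         # value is added to the node's priority, raising it ("rise").
                                         # A negative value is the usual choice.
        fall <INTEGER>                   # consecutive failures before the check is marked failed; 2 or more recommended
        rise <INTEGER>                   # consecutive successes before a failed check is marked OK again
        user USERNAME [GROUPNAME]        # user (and optional group) that runs the check script
        init_fail                        # start in the failed state; switch to OK only after the check succeeds
    }

3.7.1.2 Calling a VRRP script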

    vrrp_instance test {
        ...
        ...
        track_script {
            <SCRIPT_NAME>
        }
    }

3.7.2 Hands-on example: switching MASTER/BACKUP roles with a script

Create the script

    [root@ka1 ~]# vim /etc/keepalived/check_zty.sh
    #!/bin/bash
    # exit 0 (healthy) while /mnt/zty does not exist, exit 1 (failed) once it does
    [ ! -f "/mnt/zty" ]

    [root@ka1 mnt]# chmod +x /etc/keepalived/check_zty.sh

Configure keepalived

    [root@ka1 mnt]# vim /etc/keepalived/keepalived.conf
    vrrp_script check_file {                   # custom resource-monitoring script
        script "/etc/keepalived/check_zty.sh"  # path to the custom script
        interval 1
        weight -30
        fall 2
        rise 2
        timeout 2
    }

    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        virtual_router_id 100
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.25.254.100/24 dev eth0 label eth0:1
        }
        unicast_src_ip 172.25.254.10
        unicast_peer {
            172.25.254.20
        }
        track_script {       # call the vrrp_script
            check_file       # must match the name given to vrrp_script above
        }
    }

Test

On ka1, create the /mnt/zty file, then check the interfaces on ka1 and ka2: the VIP has moved to ka2

    [root@ka1 mnt]# touch /mnt/zty
    [root@ka1 mnt]# ll
    total 0
    -rw-r--r-- 1 root root 0 Aug 13 12:45 zty
    [root@ka1 mnt]# ifconfig
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
            inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
            RX packets 22035  bytes 2104590 (2.0 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 29079  bytes 2180967 (2.0 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    [root@ka2 ~]# ifconfig
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.20  netmask 255.255.255.0  broadcast 172.25.254.255
            inet6 fe80::20c:29ff:fe5e:abca  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)
            RX packets 27494  bytes 2450981 (2.3 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 22822  bytes 1788797 (1.7 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)

On ka1, delete the /mnt/zty file, then check the interfaces on ka1 and ka2: the VIP is back on ka1

    [root@ka1 ~]# rm -f /mnt/zty
    [root@ka1 ~]# ifconfig
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
            inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
            RX packets 22838  bytes 2178036 (2.0 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 29530  bytes 2222505 (2.1 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)

3.7.3 Hands-on example: making HAProxy highly available

First, configure HAProxy on both ka1 and ka2

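    [root@ka1 + ka2 ~]# yum install -y haproxy
    [root@ka1 + ka2 ~]# vim /etc/haproxy/haproxy.cfg
    ....................
    listen webserver
        bind 172.25.254.200:80
        mode http
        balance roundrobin
        # "inter" is in milliseconds unless a unit is given, so a 2-second interval needs the "s" suffix
        server web1 172.25.254.110:80 check inter 2s fall 2 rise 5
        server web2 172.25.254.120:80 check inter 2s fall 2 rise 5
    ....................
    [root@ka1 + ka2 ~]# systemctl enable --now haproxy.service

Enable the following kernel parameter on both ka1 and ka2. It lets HAProxy bind to 172.25.254.200 even on a node that does not currently hold the VIP. Without it, haproxy fails to start on that node; if this has already happened, restart haproxy after applying the setting.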

    [root@ka1 + ka2 ~]# vim /etc/sysctl.conf
    net.ipv4.ip_nonlocal_bind=1
    [root@ka1 + ka2 ~]# sysctl -p
    net.ipv4.ip_nonlocal_bind = 1

Write the check script on both ka1 and ka2

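    [root@ka1 + ka2 ~]# vim /etc/keepalived/haproxy.sh
    #!/bin/bash
    # signal 0 only tests whether a haproxy process exists: exit 0 if it does, non-zero otherwise
    /usr/bin/killall -0 haproxy

    [root@ka1 + ka2 ~]# chmod +x /etc/keepalived/haproxy.sh

Configure keepalived on both ka1 and ka2 (the configuration below is ka2's; the comments give ka1's values)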

    vrrp_script check_haproxy {
        script "/etc/keepalived/haproxy.sh"
        interval 1
        weight -30
        fall 2
        rise 2
        timeout 2
    }

    vrrp_instance VI_2 {
        state MASTER                   # BACKUP on ka1
        interface eth0
        virtual_router_id 200
        priority 100                   # 80 on ka1
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.25.254.200/24 dev eth0 label eth0:2
        }
        unicast_src_ip 172.25.254.20   # 172.25.254.10 on ka1
        unicast_peer {
            172.25.254.10              # 172.25.254.20 on ka1
        }
        track_script {
            check_haproxy
        }
    }

Test

Check the interfaces on ka2: the VIP is on ka2

    [root@ka2 ~]# ifconfig
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.20  netmask 255.255.255.0  broadcast 172.25.254.255
            inet6 fe80::20c:29ff:fe5e:abca  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)
            RX packets 462823  bytes 35392683 (33.7 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 871643  bytes 61399877 (58.5 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eth0:2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.200  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)

The client can access the VIP 172.25.254.200 normally

    [C:\~]$ curl 172.25.254.200
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100    15  100    15    0     0   4832      0 --:--:-- --:--:-- --:--:--  5000
    172.25.254.110
    [C:\~]$ curl 172.25.254.200
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100    15  100    15    0     0   5387      0 --:--:-- --:--:-- --:--:--  7500
    172.25.254.120

Stop the haproxy service on ka2

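    [root@ka2 ~]# systemctl stop haproxy.service

Check the interfaces: the VIP 172.25.254.200 is now on ka1, because ka2's priority has dropped from 100 to 100 - 30 = 70, below ka1's 80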

    [root@ka1 ~]# ifconfig
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.10  netmask 255.255.255.0  broadcast 172.25.254.255
            inet6 fe80::20c:29ff:fea0:1240  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)
            RX packets 48904  bytes 4821988 (4.5 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 57633  bytes 4460651 (4.2 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.100  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)

    eth0:2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.200  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 00:0c:29:a0:12:40  txqueuelen 1000  (Ethernet)

The client can still access the VIP 172.25.254.200

Start the haproxy service on ka2 again

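    [root@ka2 ~]# systemctl start haproxy.service

Check the interfaces: the VIP 172.25.254.200 is back on ka2 (keepalived preempts by default, so the higher-priority ka2 takes the VIP back)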

    [root@ka2 ~]# ifconfig
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.20  netmask 255.255.255.0  broadcast 172.25.254.255
            inet6 fe80::20c:29ff:fe5e:abca  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)
            RX packets 549617  bytes 41827491 (39.8 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 1044656  bytes 73519719 (70.1 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eth0:2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.25.254.200  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 00:0c:29:5e:ab:ca  txqueuelen 1000  (Ethernet)

The client can still access the VIP 172.25.254.200

    [C:\~]$ curl 172.25.254.200
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100    15  100    15    0     0   5563      0 --:--:-- --:--:-- --:--:--  7500
    172.25.254.110
    [C:\~]$ curl 172.25.254.200
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100    15  100    15    0     0   5852      0 --:--:-- --:--:-- --:--:--  7500
    172.25.254.120
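The haproxy.sh check used in this example relies on killall, which comes from the psmisc package and may be missing on minimal installs. The sketch below is a hedged alternative that reads the PID from a pidfile and probes it with `kill -0`, a bash builtin. It assumes haproxy writes its PID to /run/haproxy.pid, either through the `pidfile` directive in haproxy.cfg or the `-p` option of its service unit, so verify the actual path on your system. `pid_alive` is an illustrative helper name.

```shell
#!/bin/bash
# Alternative sketch for /etc/keepalived/haproxy.sh that does not need killall.
# Assumption: haproxy records its PID in /run/haproxy.pid (check your setup).
# keepalived only looks at the exit status: 0 = haproxy running, non-zero = not.

pid_alive() {
    local pidfile=$1 pid=""
    [ -s "$pidfile" ] || return 1        # missing or empty pidfile: treat as down
    read -r pid < "$pidfile" || true     # read may return non-zero without a trailing newline
    [ -n "$pid" ] || return 1
    kill -0 "$pid" 2>/dev/null           # signal 0: existence/permission check, nothing is sent
}

pid_alive /run/haproxy.pid
```

Like `killall -0`, this only proves that a process exists, not that it is serving traffic. A stale pidfile whose PID has been reused by another process would also pass.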