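Reference article: Installing k8s on Ubuntu

Steps 1 to 5 must be executed on every node!

If any command below fails or reports insufficient permissions, prepend `sudo`; if that still does not work, switch to root and try again.

1. Turn off the firewall and the swap partition (mandatory: by default kubelet will not start while swap is enabled)

If vim is not installed, install it yourself.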

切记关闭防火墙,分区,而且Ubuntu和centos防火墙关闭方式不一样 systemctl stop firewalld #centos systemctl disable firewalld #ubuntu sysemctl stop ufw systemctl disable ufw #关闭swap vim /etc/fstab 在关闭swap分区前可以使用命令

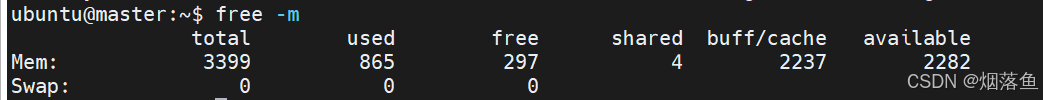

free -m查看本机是否启用了swap分区功能

如果swap这一行都是0就不用管它了,如果不是0就需要进入 fstab文件后注释掉swapfile这一行。

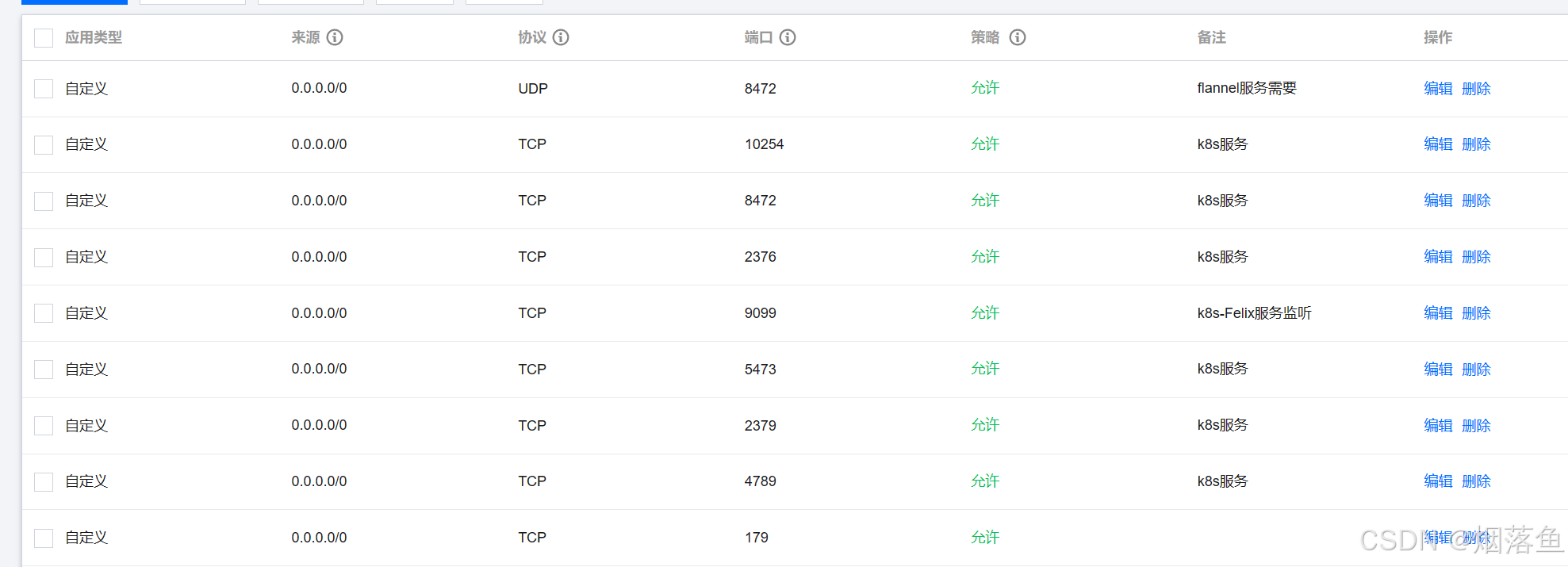
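The fstab edit above can also be scripted. The following is a minimal sketch, assuming GNU sed (the default on Ubuntu); the function name `comment_swap_entries` is made up for illustration. It only touches uncommented lines whose filesystem type is `swap` and keeps a `.bak` copy next to the file.

```shell
#!/bin/sh
# Comment out every active swap entry in an fstab-style file.
# comment_swap_entries is a hypothetical helper, not a system command.
comment_swap_entries() {
  fstab=$1
  # Keep a backup next to the original file.
  cp "$fstab" "$fstab.bak"
  # Skip lines that are already comments; prefix '#' to lines that
  # contain a whitespace-delimited "swap" field.
  sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Typical use on a node, as root (left commented so that sourcing
# this file changes nothing):
#   swapoff -a                        # turn swap off for the running system
#   comment_swap_entries /etc/fstab   # keep it off after a reboot
#   free -m                           # the Swap row should now be all zeros
```

Editing `/etc/fstab` alone only takes effect at the next boot, which is why the usage comment pairs it with `swapoff -a`.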

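Cloud servers need the relevant ports opened in their security groups.

In addition to the ports above, the ones in the figure below are also required (open them on every host):

Check the port numbers and protocols carefully. A mistake here causes baffling problems later on.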
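Once the cluster is running, reachability of the opened ports can be probed from another host. The sketch below is an assumption-heavy illustration: the port lists are taken from the upstream Kubernetes "Ports and Protocols" page rather than from this article's own port table, the function names are invented, and it relies on `bash` and coreutils `timeout` being installed. It covers TCP only; flannel's VXLAN backend additionally uses UDP 8472, which this probe cannot verify.

```shell
#!/bin/sh
# TCP ports per role, per the upstream Kubernetes documentation
# (an assumption: the article's own port table is not reproduced here).
required_tcp_ports() {
  case "$1" in
    master) echo "6443 2379 2380 10250 10257 10259" ;;
    node)   echo "10250" ;;
    *)      echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Succeed if a TCP connection to HOST:PORT opens within 3 seconds.
# Uses bash's /dev/tcp pseudo-device, so bash must be available.
check_tcp_port() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Print every TCP port of ROLE that cannot be reached on HOST.
report_closed_ports() {
  host=$1
  role=$2
  for port in $(required_tcp_ports "$role"); do
    check_tcp_port "$host" "$port" || echo "$host:$port/tcp not reachable"
  done
}

# Example (hypothetical address): report_closed_ports 203.0.113.10 master
```

A port only counts as reachable when something is listening on it, so running this before `kubeadm init` reports every port as closed even if the security group is correct.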

2. Install Docker

Install the prerequisite tools, add the GPG key and the package source, then install Docker CE:

    # Step 1: install the required system tools
    sudo apt-get update
    sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common
    # Step 2: install the GPG key
    curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
    # Step 3: add the package source
    sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
    # Step 4: update the index and install Docker CE
    sudo apt-get -y update
    sudo apt-get -y install docker-ce

Configure the container runtime:

    cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
    overlay
    br_netfilter
    EOF

    sudo modprobe overlay
    sudo modprobe br_netfilter

Set the required sysctl parameters. They persist across reboots.

    cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    EOF

    # Apply the sysctl parameters without rebooting
    sudo sysctl --system

Configure the Docker daemon:

    cat <<EOF | sudo tee /etc/docker/daemon.json
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      },
      "storage-driver": "overlay2"
    }
    EOF

Configure the Docker registry mirrors. I recommend using the same mirrors as mine, otherwise many images may fail to pull! Open the file with `sudo vim /etc/docker/daemon.json` and make it look like this:

    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      },
      "storage-driver": "overlay2",
      "registry-mirrors": [
        "http://hub-mirror.c.163.com",
        "https://docker.m.daocloud.io",
        "https://38yd698j.mirror.aliyuncs.com",
        "https://docker.mirrors.ustc.edu.cn",
        "https://registry.docker-cn.com"
      ]
    }

Restart Docker:

    sudo systemctl enable docker
    sudo systemctl daemon-reload
    sudo systemctl restart docker
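A hand-edited `daemon.json` with a stray comma is a common reason for Docker refusing to restart. As a rough sketch, the file can be checked for valid JSON before restarting. This assumes `python3` is installed; `validate_json_file` is a made-up helper name.

```shell
#!/bin/sh
# Return 0 if FILE parses as JSON, non-zero otherwise.
# validate_json_file is a hypothetical helper built on Python's json.tool.
validate_json_file() {
  python3 -m json.tool "$1" >/dev/null 2>&1
}

# Typical use on a node (left commented so that sourcing is harmless):
#   validate_json_file /etc/docker/daemon.json || echo "daemon.json is malformed"
#   sudo systemctl restart docker
#   docker info --format '{{.CgroupDriver}}'   # should report systemd if the config took effect
```

The last usage line reads back the cgroup driver Docker actually uses, which is the setting kubelet has to agree with later.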

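3. Allow iptables to see bridged traffic

Make sure the `br_netfilter` module is loaded. You can check with `lsmod | grep br_netfilter`; to load it explicitly, run `sudo modprobe br_netfilter`.

For iptables on your Linux nodes to see bridged traffic correctly, make sure `net.bridge.bridge-nf-call-iptables` is set to 1 in your sysctl configuration. For example: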

    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
    br_netfilter
    EOF

    cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    sudo sysctl --system

    # Kernel parameters: first confirm that br_netfilter is loaded.
    # It is not loaded by default; install bridge-utils first.
    apt-get install -y bridge-utils
    modprobe br_netfilter
    lsmod | grep br_netfilter
    # If apt reports that the package cannot be found, update the index first
    apt-get update -y

4. Set the hostname

Edit `/etc/hosts` (`vim /etc/hosts`) and add your public IP and hostname. The master node (control plane) is named master; the others are named node.
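The hosts entries can also be appended from a script instead of vim. This is a minimal sketch: `add_host_entry` is an invented helper, and the addresses in the usage comment are documentation-range placeholders rather than values from this article. It skips names that already have an active (uncommented) entry, so it is safe to run repeatedly.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file unless NAME already has an active entry.
# add_host_entry is a hypothetical helper.
add_host_entry() {
  hosts_file=$1
  ip=$2
  name=$3
  # An entry counts as present if NAME appears as a whole word
  # on a line before any '#' comment marker.
  if grep -Eq "^[^#]*[[:space:]]$name([[:space:]]|\$)" "$hosts_file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Typical use as root (placeholder IPs, replace with your public IPs):
#   hostnamectl set-hostname master
#   add_host_entry /etc/hosts 203.0.113.10 master
#   add_host_entry /etc/hosts 203.0.113.11 node1
```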

5、ip映射

使用命令

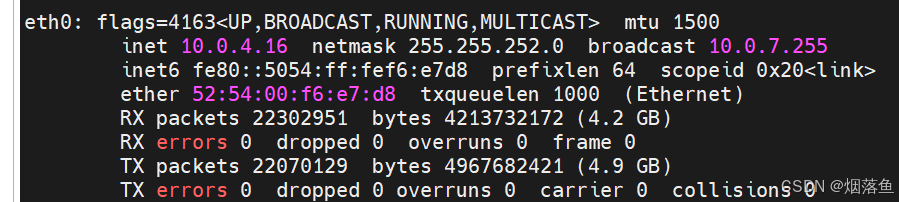

ifconfig查看自己的网卡以及内网Ip

记住自己的网卡名称以及内网IP!!!!

一般是eth0或者是ens33

比如博主的网卡名就是eth0,内网IP是10.0.4.16。

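The key to deploying k8s over the public network is that the hosts can reach each other's private addresses, which is why IP mapping is needed.

The hosts are not on the same private network, yet cluster traffic uses private IPs by default. The traffic therefore has to go through the public IPs, translated with iptables.

Every machine has to run its own pair of rules.

Taking my setup as an example, run on the master host:

    iptables -t nat -A OUTPUT -d <master public IP> -j DNAT --to-destination <master private IP>
    iptables -t nat -A OUTPUT -d <node1 private IP> -j DNAT --to-destination <node1 public IP>

Run on the node1 host:

    iptables -t nat -A OUTPUT -d <node1 public IP> -j DNAT --to-destination <node1 private IP>
    iptables -t nat -A OUTPUT -d <master private IP> -j DNAT --to-destination <master public IP>

Afterwards, ping between all hosts to check that they can reach each other!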
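The two rules per host follow one pattern: traffic to the host's own public IP is redirected to its own private IP, and traffic to a peer's private IP is redirected to that peer's public IP. A small sketch that prints the rules for review before applying them is shown below. `print_nat_rules` is an invented helper, and apart from 10.0.4.16 the addresses in the usage comment are placeholders.

```shell
#!/bin/sh
# Print the two OUTPUT-chain DNAT rules for one host.
# Arguments: own public IP, own private IP, peer private IP, peer public IP.
# print_nat_rules is a hypothetical helper; it only prints, it changes nothing.
print_nat_rules() {
  own_public=$1
  own_private=$2
  peer_private=$3
  peer_public=$4
  echo "iptables -t nat -A OUTPUT -d $own_public -j DNAT --to-destination $own_private"
  echo "iptables -t nat -A OUTPUT -d $peer_private -j DNAT --to-destination $peer_public"
}

# Example on the master (placeholder addresses except 10.0.4.16):
#   print_nat_rules 203.0.113.10 10.0.4.16 10.0.8.5 203.0.113.11
# Review the output, then apply it as root:
#   print_nat_rules 203.0.113.10 10.0.4.16 10.0.8.5 203.0.113.11 | sh
# List what is installed:
#   iptables -t nat -L OUTPUT -n --line-numbers
```

With more than one worker, the second rule is repeated once per peer. Rules added with `iptables -A` do not survive a reboot unless they are saved separately.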

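6. Install kubelet, kubeadm and kubectl on the master node

Install the base packages, add the Aliyun k8s source, then install the three packages. Worker nodes also need kubelet and kubeadm in order to run `kubeadm join` later.

    # Install the base environment
    apt-get install -y ca-certificates curl software-properties-common apt-transport-https
    curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -
    # Configure the Aliyun k8s source
    vim /etc/apt/sources.list.d/kubernetes.list
    # Add the following line to that file:
    #   deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
    # Update the package index
    apt-get update -y
    # Install kubeadm, kubectl and kubelet
    apt-get install -y kubelet=1.23.1-00 kubeadm=1.23.1-00 kubectl=1.23.1-00
    # Hold the packages so that apt upgrade skips them.
    # To upgrade them, unhold first and hold again afterwards.
    apt-mark hold kubelet kubeadm kubectl
    systemctl enable kubelet

7. Initialize the master

Create an initialization file with `vim kubeadm-config.yaml` and paste in the following content, replacing the marked value with the public IP of your own master node.

If your kubelet, kubeadm and kubectl versions differ from mine, remember to change 1.23.1 below to your own version.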

    apiVersion: kubeadm.k8s.io/v1beta3
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: MASTER_PUBLIC_IP   # replace with the public IP of your master node
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      imagePullPolicy: IfNotPresent
      name: master
      taints: null
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: 1.23.1
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
      podSubnet: 10.244.0.0/16
    scheduler: {}
    ---
    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    cgroupDriver: systemd

Use `kubeadm config images list` to see which images you need. The output usually looks like this:

    # List the images kubeadm requires
    kubeadm config images list
    # Output:
    k8s.gcr.io/kube-apiserver:v1.23.8
    k8s.gcr.io/kube-controller-manager:v1.23.8
    k8s.gcr.io/kube-scheduler:v1.23.8
    k8s.gcr.io/kube-proxy:v1.23.8
    k8s.gcr.io/pause:3.6
    k8s.gcr.io/etcd:3.5.1-0
    k8s.gcr.io/coredns/coredns:v1.8.6

Go by your own versions; they do not have to match mine exactly.

Then pull these images from a mirror in China (remember to change the versions to your own):

    # Pull from the mirror
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.23.8
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.23.8
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.23.8
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.23.8
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0
    docker pull coredns/coredns:1.8.6

Then retag the images with the names kubeadm expects. Look closely: some version tags carry a `v` prefix and some do not.

    # Retag the pulled images with the names kubeadm config requires
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.23.8 k8s.gcr.io/kube-apiserver:v1.23.8
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.23.8 k8s.gcr.io/kube-controller-manager:v1.23.8
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.23.8 k8s.gcr.io/kube-scheduler:v1.23.8
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.23.8 k8s.gcr.io/kube-proxy:v1.23.8
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 k8s.gcr.io/pause:3.6
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0 k8s.gcr.io/etcd:3.5.1-0
    docker tag coredns/coredns:1.8.6 k8s.gcr.io/coredns/coredns:v1.8.6

With the images in place, the next step is to run the initialization on the master.
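Before running the initialization, note that the pull-and-retag steps above can be driven directly from the output of `kubeadm config images list`, so the version tags never have to be typed by hand. The following is a sketch under the same naming rules as the commands above: `mirror_source` and `pull_and_tag_all` are invented names, and the coredns special case (pulled from Docker Hub without the `v` prefix) simply mirrors what the manual commands do.

```shell
#!/bin/sh
# Mirror prefix used by the manual docker pull commands.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

# Map an image name that kubeadm expects to the place it is pulled from.
# mirror_source is a hypothetical helper.
mirror_source() {
  case "$1" in
    # coredns comes from Docker Hub and its tag drops the leading "v".
    k8s.gcr.io/coredns/coredns:v*) printf 'coredns/coredns:%s\n' "${1##*:v}" ;;
    # Everything else keeps its name and tag under the mirror prefix.
    k8s.gcr.io/*) printf '%s/%s\n' "$MIRROR" "${1#k8s.gcr.io/}" ;;
    *) printf '%s\n' "$1" ;;
  esac
}

# Pull every required image from its source and retag it for kubeadm.
# Not called automatically; run pull_and_tag_all on the master.
pull_and_tag_all() {
  kubeadm config images list | while read -r image; do
    src=$(mirror_source "$image")
    docker pull "$src" && docker tag "$src" "$image"
  done
}
```

Keeping the name mapping in a separate function makes it easy to print and inspect the source names before anything is pulled.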

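    kubeadm init --config kubeadm-config.yaml

This step will keep you waiting for a long time and then fail (on a cloud host the public IP is usually not bound to any local interface, so etcd cannot listen on it). Follow the next steps one by one, but you must run the command above first: it generates a set of configuration files, so it has to fail once!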

执行命令

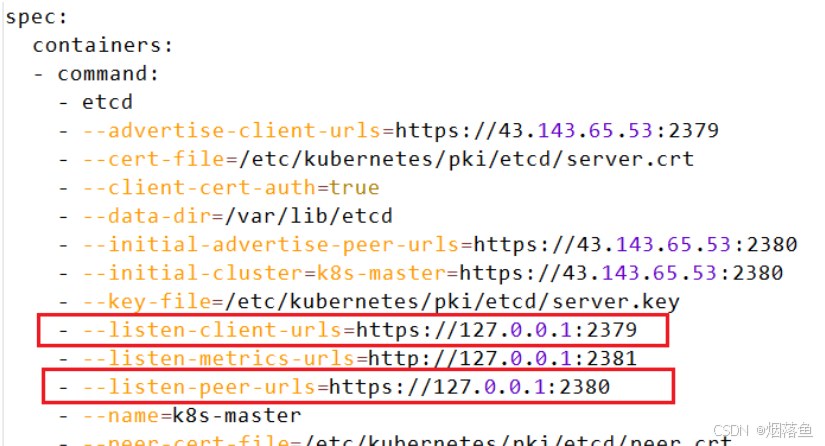

vim /etc/kubernetes/manifests/etcd.yaml这个yaml文件是必须执行过一次init命令后才会生成的,将–listen-client-urls后面的公网ip删掉,将–listen-peer-urls后面的ip改成127.0.0.1

如下所示:
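The same edit can be made non-interactively. The sketch below assumes the manifest has the layout kubeadm normally generates, with `--listen-client-urls=https://127.0.0.1:2379,https://<public IP>:2379` and `--listen-peer-urls=https://<public IP>:2380`; if your file looks different, edit it by hand instead. `patch_etcd_manifest` is an invented name, GNU sed is assumed, and the IP in the usage comment is a placeholder.

```shell
#!/bin/sh
# Remove PUBLIC_IP from --listen-client-urls and point --listen-peer-urls
# at 127.0.0.1 in a kubeadm-generated etcd manifest.
# patch_etcd_manifest is a hypothetical helper.
patch_etcd_manifest() {
  file=$1
  # Escape the dots so the IP is matched literally by sed.
  ip_re=$(printf '%s' "$2" | sed 's/\./\\./g')
  sed -i \
    -e "/--listen-client-urls=/s#,https://${ip_re}:2379##" \
    -e "/--listen-peer-urls=/s#https://${ip_re}:2380#https://127.0.0.1:2380#" \
    "$file"
}

# Typical use as root (placeholder IP):
#   cp /etc/kubernetes/manifests/etcd.yaml /root/etcd.yaml.bak
#   patch_etcd_manifest /etc/kubernetes/manifests/etcd.yaml 203.0.113.10
```

The backup in the usage comment is deliberately stored outside `/etc/kubernetes/manifests`, because kubelet treats files in that directory as static pod manifests. Other flags such as `--advertise-client-urls` are left untouched.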

执行命令,停止kubelet服务

systemctl stop kubelet通过命令找出kubelet的进程pid

netstat -anp |grep kube通过kill -9 强杀进程,这里的pid要换成刚刚查到的pid

kill -9 pid重启kubelet服务

systemctl start kubelet使用命令跳过生成配置文件,再次初始化

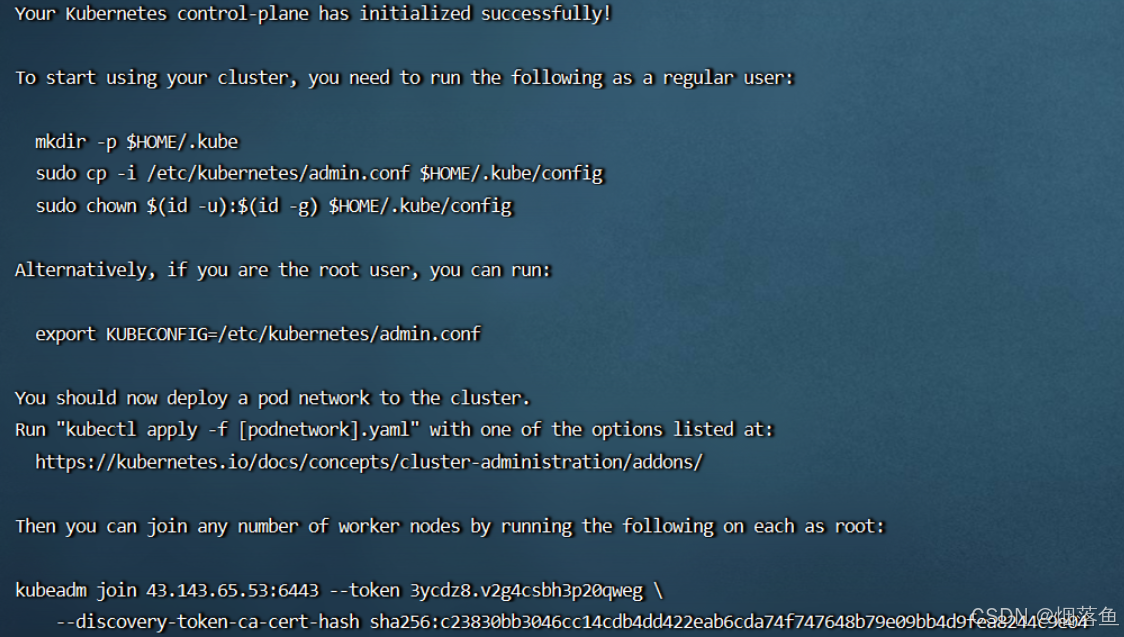

kubeadm init --config=kubeadm-config.yaml --skip-phases=preflight,certs,kubeconfig,kubelet-start,control-plane,etcd初始化成功一般很快(秒级别)

弹出以上信息就是成功了,上面有个successfully。

下面有个kubeadm join 开头的命令是让其他节点加入的指令,记得保存(默认有效期是24H)

Run on the master:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

To run kubectl commands on a node as well, copy the master's `admin.conf` to the same location on the node (`$HOME/.kube/config`), then run:

    sudo chown $(id -u):$(id -g) $HOME/.kube/config

Create the directory yourself if it does not exist.

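8. Network configuration (run on the master)

I originally used calico, but ran into a bug I could not solve and switched to flannel.

Open a new file with `vim kube-flannel.yml` and paste in the following content: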

    ---
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: psp.flannel.unprivileged
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
        seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
        apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
        apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
    spec:
      privileged: false
      volumes:
      - configMap
      - secret
      - emptyDir
      - hostPath
      allowedHostPaths:
      - pathPrefix: "/etc/cni/net.d"
      - pathPrefix: "/etc/kube-flannel"
      - pathPrefix: "/run/flannel"
      readOnlyRootFilesystem: false
      # Users and groups
      runAsUser:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      fsGroup:
        rule: RunAsAny
      # Privilege Escalation
      allowPrivilegeEscalation: false
      defaultAllowPrivilegeEscalation: false
      # Capabilities
      allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
      defaultAddCapabilities: []
      requiredDropCapabilities: []
      # Host namespaces
      hostPID: false
      hostIPC: false
      hostNetwork: true
      hostPorts:
      - min: 0
        max: 65535
      # SELinux
      seLinux:
        # SELinux is unused in CaaSP
        rule: 'RunAsAny'
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    rules:
    - apiGroups: ['extensions']
      resources: ['podsecuritypolicies']
      verbs: ['use']
      resourceNames: ['psp.flannel.unprivileged']
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes/status
      verbs:
      - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-system
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      net-conf.json: |
        {
          "Network": "10.244.0.0/16",
          "Backend": {
            "Type": "vxlan"
          }
        }
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                    - linux
          hostNetwork: true
          priorityClassName: system-node-critical
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.14.0
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.14.0
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN", "NET_RAW"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
          - name: run
            hostPath:
              path: /run/flannel
          - name: cni
            hostPath:
              path: /etc/cni/net.d
          - name: flannel-cfg
            configMap:
              name: kube-flannel-cfg

Install the flannel plugin:

    kubectl apply -f kube-flannel.yml

9. Related help

The following commands and tips may help you troubleshoot:

    kubectl get pod -A -o wide
    kubectl get node
    kubectl describe pod <pod name> -n <namespace>

There is also the cgroup driver issue; see this article: https://blog.csdn.net/weixin_48030085/article/details/131404866
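When the pod list is long, it helps to narrow it down to the pods that actually need attention. As a small sketch, the filter below reads the output of `kubectl get pod -A --no-headers` and keeps only pods whose STATUS column is neither Running nor Completed. `filter_unhealthy_pods` is an invented name, and the approach assumes the default column order (NAMESPACE, NAME, READY, STATUS).

```shell
#!/bin/sh
# Read `kubectl get pod -A --no-headers` output on stdin and print
# "namespace/name status" for every pod that is not Running or Completed.
# filter_unhealthy_pods is a hypothetical helper.
filter_unhealthy_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Typical use on the master:
#   kubectl get pod -A --no-headers | filter_unhealthy_pods
# Then inspect each reported pod:
#   kubectl describe pod <pod name> -n <namespace>
```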

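If you install the calico network plugin from a calico.yaml file, remember to specify your own network interface in the file (it is best to search CSDN for the yaml file matching your version), otherwise it will report errors. There are plenty of tutorials on this, so I will not go into it here.

Network plugins generally have version compatibility requirements, so remember to check!

When you run into a problem you do not understand, reading the logs often resolves it (this has helped me a lot):

    kubectl logs <pod name> -n <namespace>

If you have questions, feel free to leave them in the comments!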