Deploying video-retalking on an Ubuntu 22.04 server

- I. Basic Ubuntu environment setup

- II. Install the Miniconda environment

- III. Install video-retalking

- IV. Error collection

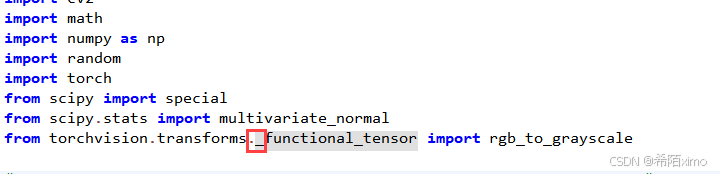

- 1. ModuleNotFoundError: No module named 'torchvision.transforms.functional_tensor'

- 2. RuntimeError: PytorchStreamReader failed reading zip archive: failed finding central directory

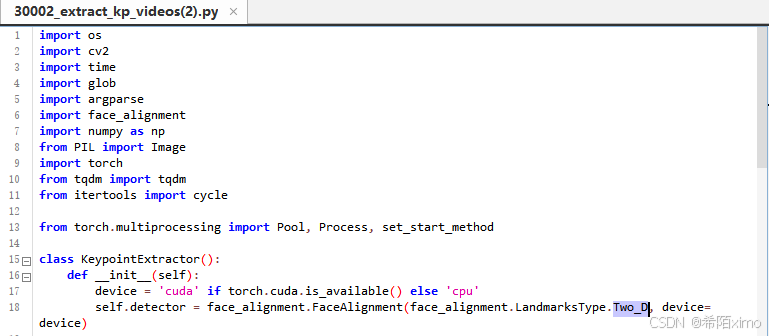

- 3. AttributeError: _2D

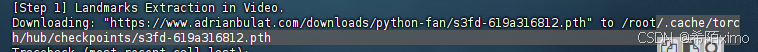

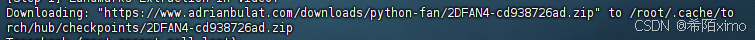

- 4. ssl.SSLError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:1007) and urllib.error.URLError: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:1007)>

- 5. ValueError: setting an array element with a sequence. The requested array has an inhomogeneous shape after 1 dimensions. The detected shape was (5,) + inhomogeneous part.

- 6. TypeError: mel() takes 0 positional arguments but 2 positional arguments (and 3 keyword-only arguments) were given

- 7. torch.OutOfMemoryError: CUDA out of memory.

- V. Deployment complete

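I. Basic Ubuntu environment setup

1. Update the package lists

- Open a terminal and run the following commands:

    sudo apt-get update
    sudo apt upgrade

- The upgrade can take quite a while; please be patient.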

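2. Install the NVIDIA GPU driver

2.1 Download the driver package from the command line with wget

    wget https://cn.download.nvidia.com/XFree86/Linux-x86_64/550.100/NVIDIA-Linux-x86_64-550.100.run

2.2 Update the package lists and install the required build tools

    sudo apt-get update
    sudo apt-get install g++
    sudo apt-get install gcc
    sudo apt-get install make

2.3 Remove any existing driver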

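    sudo apt-get remove --purge 'nvidia*'

Then disable the open-source nouveau driver, which otherwise conflicts with the NVIDIA installer:

- 1. Open the blacklist configuration with vim:

    sudo vim /etc/modprobe.d/blacklist.conf

- 2. Press i to enter insert mode and append these two lines to the end of the file:

    blacklist nouveau
    options nouveau modeset=0

- 3. Press Esc to leave insert mode, then type :wq to save and quit.

- 4. Regenerate the initramfs:

    sudo update-initramfs -u

- 5. Reboot the machine:

    sudo reboot

It takes a little while before you can reconnect to the server.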
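After the reboot, and before running the NVIDIA installer, it is worth confirming that nouveau is really out of the way. The usual shell check is `lsmod | grep nouveau` (no output means it is not loaded). The Python sketch below does the same by reading `/proc/modules`, which is where lsmod gets its data, and also checks that the two blacklist lines are present. The helper names are hypothetical.

```python
from pathlib import Path

def loaded_modules(proc_modules_text):
    """Module names from /proc/modules content (the first field of each line)."""
    return {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}

def blacklist_applied(conf_text):
    """True if the config contains both nouveau lines added above."""
    lines = {line.strip() for line in conf_text.splitlines()}
    return {"blacklist nouveau", "options nouveau modeset=0"} <= lines

if __name__ == "__main__":
    conf = Path("/etc/modprobe.d/blacklist.conf")
    modules = Path("/proc/modules")
    if conf.exists():
        print("blacklist lines present:", blacklist_applied(conf.read_text()))
    if modules.exists():
        print("nouveau still loaded:", "nouveau" in loaded_modules(modules.read_text()))
```

If nouveau is still listed, re-check the two lines and rerun `sudo update-initramfs -u` before rebooting again.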

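2.4 Install the driver

- 1. Make the installer executable:

    sudo chmod +x NVIDIA-Linux-x86_64-550.100.run

- 2. Run the installer:

    sudo ./NVIDIA-Linux-x86_64-550.100.run

Just keep pressing Enter to accept the default choices.

- 3. Check that the driver was installed successfully:

    nvidia-smi

2.5 Install CUDA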

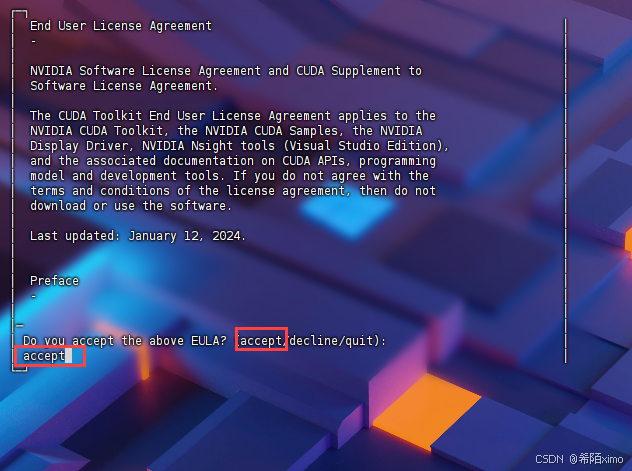

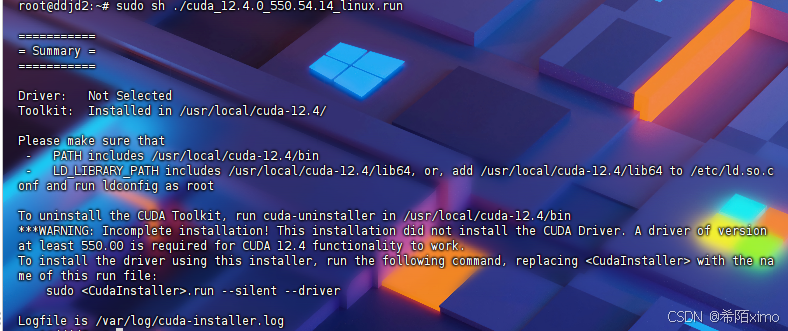

    wget https://developer.download.nvidia.com/compute/cuda/12.4.0/local_installers/cuda_12.4.0_550.54.14_linux.run

Run the installer:

    sudo sh ./cuda_12.4.0_550.54.14_linux.run

- 1. Type accept to start the installation.

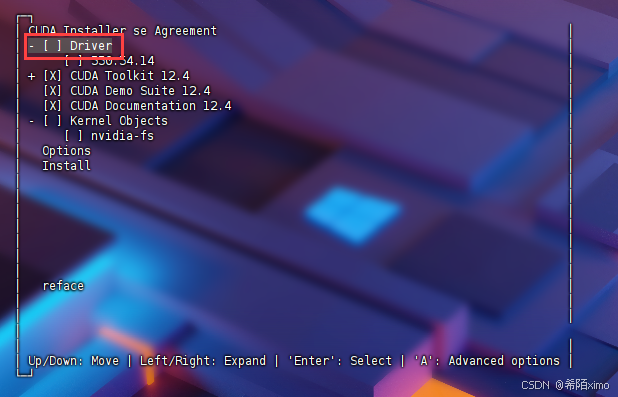

- 2. Then press Enter on the first option (the driver) to deselect it, because the driver was already installed in the previous step.

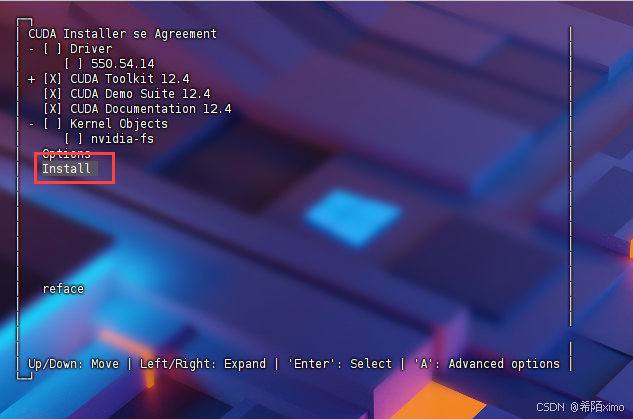

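- 3. Then select Install to start the installation.

- 4. The installation is complete.

2.6 Configure environment variables

- 1. Open the configuration file with vim:

    sudo vim ~/.bashrc

- 2. Press i to enter insert mode and append the following to the end of the file:

    export PATH="/usr/local/cuda-12.4/bin:$PATH"
    export LD_LIBRARY_PATH="/usr/local/cuda-12.4/lib64:$LD_LIBRARY_PATH"

Press Esc to leave insert mode, then type :wq to save and quit.

- 3. Reload the environment variables: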

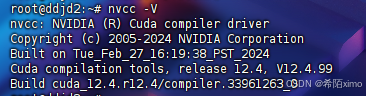

    source ~/.bashrc

- 4. Check that CUDA was installed successfully:

nvcc -V
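If you prefer to script this check, the following Python sketch looks for a `release X.Y` fragment in the `nvcc -V` output and compares it with 12.4. It assumes the output contains a line of the form `Cuda compilation tools, release 12.4, ...`; the function names are hypothetical helpers, not part of video-retalking.

```python
import re
import shutil
import subprocess

def parse_nvcc_release(output):
    """Return (major, minor) from `nvcc -V` output, or None if no 'release X.Y' is found."""
    match = re.search(r"release (\d+)\.(\d+)", output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))

def check_nvcc(expected=(12, 4)):
    """Run `nvcc -V` if it is on PATH and compare its release with `expected`."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return "nvcc not found on PATH; re-check the export lines in ~/.bashrc"
    result = subprocess.run([nvcc, "-V"], capture_output=True, text=True, check=False)
    release = parse_nvcc_release(result.stdout)
    if release != expected:
        return f"nvcc reports release {release}, expected {expected}"
    return "ok"

if __name__ == "__main__":
    print(check_nvcc())
```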

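II. Install the Miniconda environment

1. Download Miniconda3

    wget https://mirrors.cqupt.edu.cn/anaconda/miniconda/Miniconda3-py310_23.10.0-1-Linux-x86_64.sh

2. Install Miniconda3

    bash Miniconda3-py310_23.10.0-1-Linux-x86_64.sh -u

Keep pressing Enter until you are asked for the install location and to type yes.

The recommended install location is miniconda3 in your home directory (/root/miniconda3 when installing as root, which is what the PATH line in step 5 assumes).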

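3. Change into the bin folder

    cd miniconda3/bin/

4. Run pwd to get the full path

    pwd

Copy the path it prints.

5. Open your shell configuration file for editing

    vim ~/.bashrc

- Press i to enter insert mode and add the following line at the bottom (use the path you copied if it differs from /root/miniconda3/bin):

    export PATH="/root/miniconda3/bin:$PATH"

6. Reload the user environment variables

    source ~/.bashrc

7. Initialize conda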

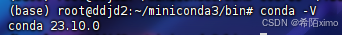

    conda init bash
    source ~/.bashrc

8. Then run conda -V; if it prints a version number, the installation succeeded.

conda -V
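If `conda -V` fails or reports an unexpected version, it helps to see which `conda` executable PATH actually resolves to. A small Python sketch with hypothetical helper names, built on the standard library's `shutil.which`:

```python
import shutil
import subprocess

def resolve_conda(path=None):
    """Full path of the `conda` the shell would run first, or None if not found.

    `path` defaults to the current PATH; pass an explicit string to inspect another one.
    """
    return shutil.which("conda", path=path)

def conda_version(conda_exe):
    """Output of `conda -V`, read from stdout and falling back to stderr."""
    result = subprocess.run([conda_exe, "-V"], capture_output=True, text=True, check=True)
    return (result.stdout or result.stderr).strip()

if __name__ == "__main__":
    exe = resolve_conda()
    if exe is None:
        print("conda not found: check the export line in ~/.bashrc, then run source ~/.bashrc")
    else:
        print(exe, "->", conda_version(exe))
```

If the printed path is not under your Miniconda directory, another conda installation comes earlier on PATH.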

9. Configure conda

- 1. Add the Tsinghua mirror channels

The commands are:

    conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/
    conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/
    conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/conda-forge/

- 2. Show channel URLs in search output

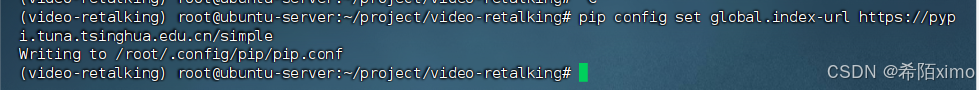

    conda config --set show_channel_urls yes

- 3. Configure the pip mirror

    pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

III. Install video-retalking

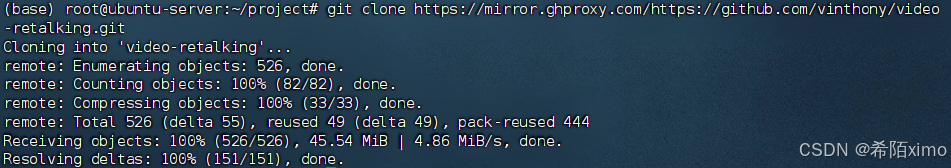

1. Clone the repository

1.1 Clone from GitHub

    git clone https://github.com/vinthony/video-retalking.git

1.2 Clone through a GitHub mirror (for servers in mainland China)

git clone https://mirror.ghproxy.com/https://github.com/vinthony/video-retalking.git

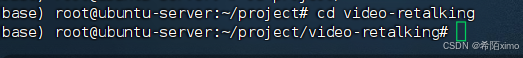

1.3 Enter the directory

cd video-retalking

2. Create a virtual environment

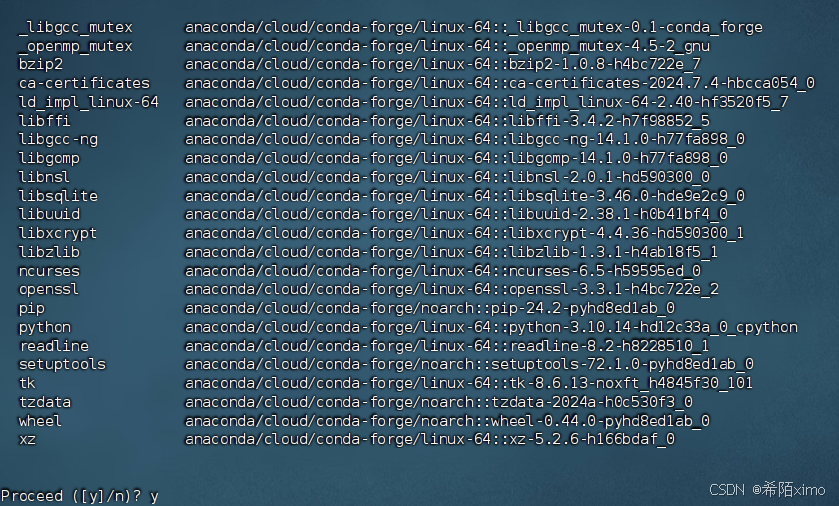

conda create -n video-retalking python=3.10

Type y to confirm the installation.

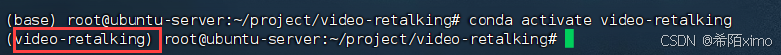

2.1 Activate the virtual environment

conda activate video-retalking

3. Install dependencies

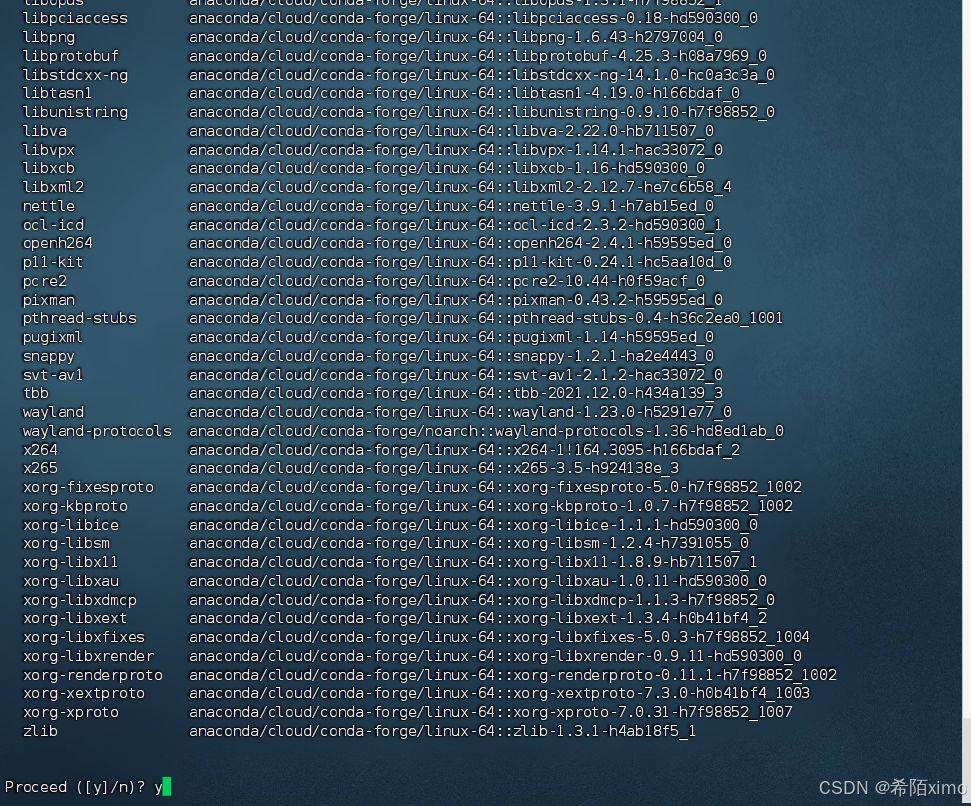

    conda install ffmpeg

Type y to confirm the installation.

3.1 Set the Tsinghua PyPI mirror

pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

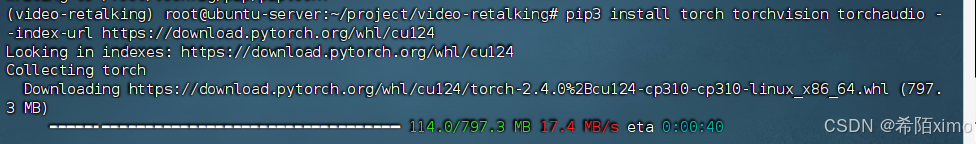

3.2 Install PyTorch built for CUDA 12.4

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124
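To confirm that the installed wheel targets CUDA 12.4 and can see the GPU, a quick Python check can be run inside the activated environment. `torch.version.cuda` (None for CPU-only builds), `torch.cuda.is_available()` and `torch.cuda.get_device_name()` are standard PyTorch attributes; the helper functions themselves are hypothetical.

```python
def cuda_major_minor(version):
    """'12.4' or '12.4.1' -> (12, 4); None (CPU-only build) -> None."""
    if not version:
        return None
    major, minor = version.split(".")[:2]
    return int(major), int(minor)

def describe_torch_cuda():
    """Collect what the installed PyTorch build reports about CUDA."""
    try:
        import torch  # present only inside the activated video-retalking env
    except ImportError:
        return {"torch": None}
    available = torch.cuda.is_available()
    return {
        "torch": torch.__version__,
        "built_for_cuda": cuda_major_minor(torch.version.cuda),
        "cuda_available": available,
        "gpu": torch.cuda.get_device_name(0) if available else None,
    }

if __name__ == "__main__":
    info = describe_torch_cuda()
    print(info)
    if info.get("built_for_cuda") not in (None, (12, 4)):
        print("warning: this torch build targets CUDA", info["built_for_cuda"])
```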

3.3安装依赖

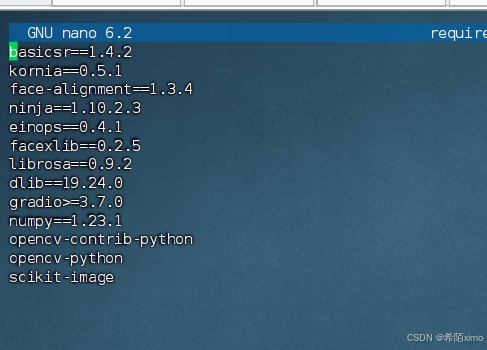

安装之前我们先修改一下requirements.txt文件的依赖版本:

编辑文件:

nano requirements.txt 粘贴以下内容:

basicsr==1.4.2 kornia==0.5.1 face-alignment==1.3.4 ninja==1.10.2.3 einops==0.4.1 facexlib==0.2.5 librosa==0.9.2 dlib==19.24.0 gradio>=3.7.0 numpy==1.23.1 opencv-contrib-python opencv-python scikit-image ctrl+x,再输入y确认,再enter确认
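Step 3.5 below installs several of these packages again (some without a pin, and face_alignment at 1.3.5), so the versions that end up installed can differ from this list. A sketch using the standard library's `importlib.metadata` to compare the pins with what is actually installed; it only understands plain `name==version` lines, and the helper names are hypothetical.

```python
import os
from importlib import metadata

def parse_pins(text):
    """Map name -> version for plain 'name==version' lines; other lines are ignored."""
    pins = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if "==" in line:
            name, version = (part.strip() for part in line.split("==", 1))
            pins[name] = version
    return pins

def find_mismatches(pins, installed_version=metadata.version):
    """Return {name: (wanted, found)} for pins whose installed version differs.

    `found` is None when the package is not installed at all.
    """
    mismatches = {}
    for name, wanted in pins.items():
        try:
            found = installed_version(name)
        except metadata.PackageNotFoundError:
            found = None
        if found != wanted:
            mismatches[name] = (wanted, found)
    return mismatches

if __name__ == "__main__" and os.path.exists("requirements.txt"):
    with open("requirements.txt", encoding="utf-8") as fh:
        for name, (wanted, found) in find_mismatches(parse_pins(fh.read())).items():
            print(f"{name}: pinned {wanted}, installed {found}")
```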

3.4 Install the dependencies

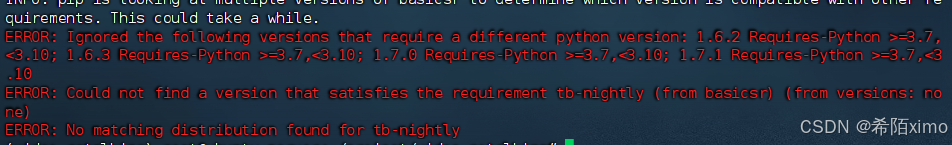

pip install -r requirements.txt

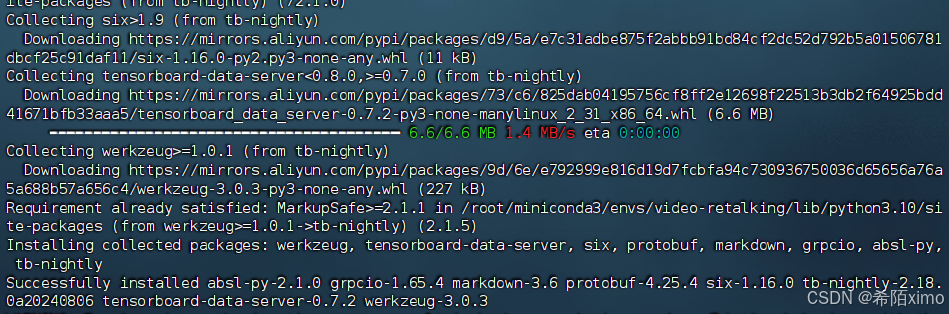

The Tsinghua mirror does not provide tb-nightly, so install it from the Aliyun mirror instead:

python -m pip install tb-nightly -i https://mirrors.aliyun.com/pypi/simple

3.5 Install the remaining dependencies individually

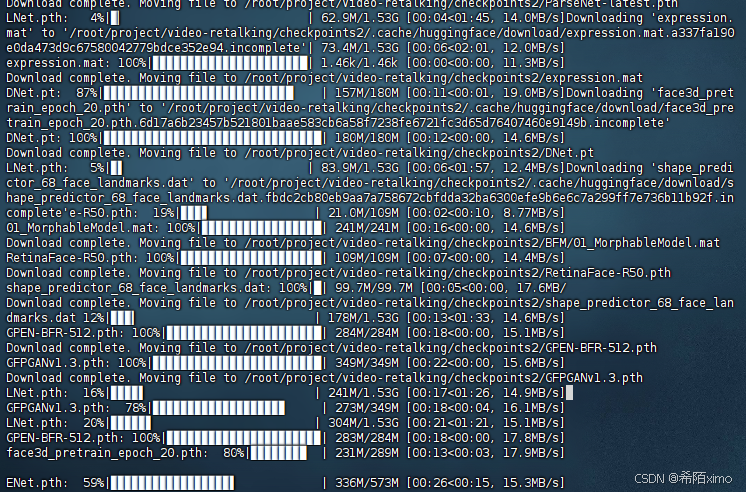

    pip install opencv-python
    pip install tqdm
    pip install scipy
    pip install scikit-image
    pip install face_alignment==1.3.5
    pip install ninja
    pip install basicsr
    pip install einops
    pip install kornia
    pip install facexlib
    pip install librosa
    pip install --upgrade pip setuptools
    pip install dlib
    pip install gradio

3.6 Download the pretrained models

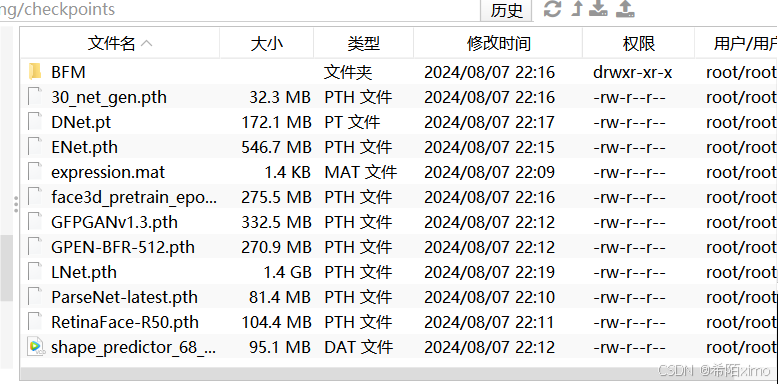

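Download the pretrained models and put them in ./checkpoints.

If you cannot reach the original download links directly (for example, without a proxy), you can download the files from my Quark cloud drive instead.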

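- Create the folder:

    mkdir checkpoints

- Hugging Face (adjust --local-dir to wherever you cloned the repository):

    pip install -U huggingface_hub
    huggingface-cli download --resume-download yachty66/video_retalking --local-dir /root/project/video-retalking/checkpoints

- Alternatively, upload the model files to the server yourself.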
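Whichever route you use, a quick listing of ./checkpoints helps catch empty or truncated files before they surface as errors later. A stdlib sketch with a hypothetical helper name; the 1 KiB threshold is an arbitrary assumption, and hidden folders (such as download metadata some tools keep next to the files) are skipped.

```python
from pathlib import Path

def summarize_checkpoints(folder="checkpoints", min_bytes=1024):
    """Return (relative name, size in bytes, suspicious?) for each file under `folder`.

    Real model weights are far larger than `min_bytes`, so a tiny or empty
    file usually means a failed or interrupted download.
    """
    root = Path(folder)
    if not root.is_dir():
        raise FileNotFoundError(f"{root} is not a directory")
    report = []
    for path in sorted(root.rglob("*")):
        rel = path.relative_to(root)
        if path.is_file() and not any(part.startswith(".") for part in rel.parts):
            size = path.stat().st_size
            report.append((rel.as_posix(), size, size < min_bytes))
    return report

if __name__ == "__main__" and Path("checkpoints").is_dir():
    for name, size, suspicious in summarize_checkpoints():
        note = "  <- check this file" if suspicious else ""
        print(f"{size / 2**20:10.1f} MiB  {name}{note}")
```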

IV. Error collection

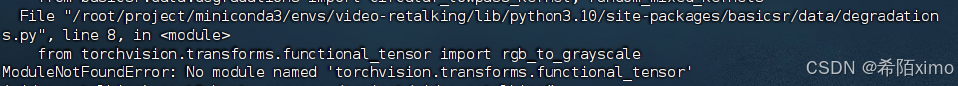

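1. ModuleNotFoundError: No module named 'torchvision.transforms.functional_tensor'

Recent torchvision releases have removed this module, but basicsr still imports rgb_to_grayscale from it. In the conda environment, edit video-retalking/lib/python3.10/site-packages/basicsr/data/degradations.py (relative to the conda envs directory, e.g. /root/miniconda3/envs/) and import rgb_to_grayscale from torchvision.transforms.functional instead, as shown below.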

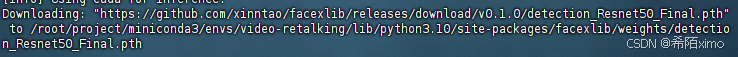

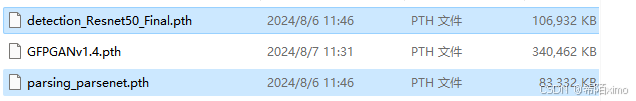

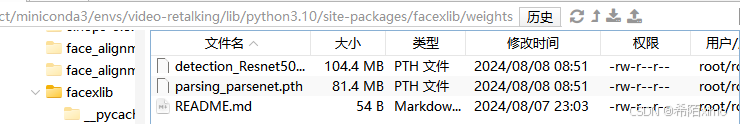
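If you would rather not edit the file by hand, a small script can make the same one-line change. This sketch assumes the import line in degradations.py reads exactly as `OLD_IMPORT` below (as in basicsr 1.4.2, to our knowledge); it finds the installed package with `importlib.util.find_spec`, which does not import basicsr (importing it is what fails), keeps a `.bak` copy, and only writes when run with `--apply`. The function names are hypothetical.

```python
import importlib.util
import sys
from pathlib import Path

OLD_IMPORT = "from torchvision.transforms.functional_tensor import rgb_to_grayscale"
NEW_IMPORT = "from torchvision.transforms.functional import rgb_to_grayscale"

def patch_degradations(path):
    """Swap the removed torchvision import for the current one.

    Returns True if the file was changed and False if OLD_IMPORT is not present
    (already patched, or a basicsr version that differs from the one assumed here).
    """
    file = Path(path)
    source = file.read_text(encoding="utf-8")
    if OLD_IMPORT not in source:
        return False
    file.with_name(file.name + ".bak").write_text(source, encoding="utf-8")  # backup first
    file.write_text(source.replace(OLD_IMPORT, NEW_IMPORT), encoding="utf-8")
    return True

def degradations_path():
    """Locate basicsr/data/degradations.py in the active environment, or None."""
    spec = importlib.util.find_spec("basicsr")
    if spec is None or spec.origin is None:
        return None
    return Path(spec.origin).parent / "data" / "degradations.py"

if __name__ == "__main__" and "--apply" in sys.argv:
    target = degradations_path()
    if target is None:
        print("basicsr is not installed in the active environment")
    else:
        print("patched" if patch_degradations(target) else "nothing to change", target)
```

Save it under any name and run it inside the activated environment with `--apply`.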

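1.1 Copy the weight files (two files) into the directory below

- These two files would also be downloaded automatically at run time, so this step is optional, but that download is very slow, so here they were copied in directly.

/root/anaconda3/envs/video_retalking/lib/python3.10/site-packages/facexlib/weights/ (adjust this to your own installation: with the Miniconda location and the video-retalking environment created above, it is /root/miniconda3/envs/video-retalking/lib/python3.10/site-packages/facexlib/weights/)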

2. RuntimeError: PytorchStreamReader failed reading zip archive: failed finding central directory

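The model file was not transferred completely, so PyTorch cannot read it as a zip archive. Download it again (and re-upload it to the server if you transfer files manually).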
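Because this error means a checkpoint is not a complete zip archive, you can scan the checkpoints folder for such files before rerunning. A sketch using the standard library's `zipfile.is_zipfile`; it assumes the checkpoints use PyTorch's zip-based format (the default for `torch.save` since PyTorch 1.6). Files in the older legacy format are reported as well, so treat a hit as "re-download if in doubt" rather than proof of corruption. The helper name is hypothetical.

```python
import zipfile
from pathlib import Path

def find_broken_checkpoints(folder, suffixes=(".pth", ".pt")):
    """Return checkpoint files under `folder` that are not readable zip archives.

    A truncated transfer loses the zip central directory stored at the end of
    the file, which is exactly what PytorchStreamReader complains about.
    """
    broken = []
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix in suffixes and not zipfile.is_zipfile(path):
            broken.append(path)
    return broken

if __name__ == "__main__" and Path("checkpoints").is_dir():
    for path in find_broken_checkpoints("checkpoints"):
        print("not a readable zip archive:", path)
```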

3. AttributeError: _2D

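Two fixes:

- Install the specific version:

    pip install face_alignment==1.3.4

- Or modify the code: face_alignment 1.4 and later renamed LandmarksType._2D to LandmarksType.TWO_D, so replace the old name wherever the code uses it.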
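For the code route, a small helper that accepts either member name keeps the code working with both face_alignment 1.3.x and newer releases. A sketch: `two_d_landmarks` is a hypothetical helper, and each `face_alignment.LandmarksType._2D` would become `two_d_landmarks(face_alignment.LandmarksType)`.

```python
from enum import IntEnum

def two_d_landmarks(landmarks_type):
    """Return the 2D member of a LandmarksType enum under its new or old name.

    Newer face_alignment releases call it TWO_D; older ones call it _2D.
    """
    for name in ("TWO_D", "_2D"):
        member = getattr(landmarks_type, name, None)
        if member is not None:
            return member
    raise AttributeError("LandmarksType has neither TWO_D nor _2D")
```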

4. ssl.SSLError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:1007) and urllib.error.URLError: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:1007)>

The automatic model download failed certificate verification. Download s3fd-619a316812.pth and 2DFAN4-cd938726ad.zip manually and put them in the folder mentioned above.
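Another candidate folder for these two files is PyTorch's hub cache: to our understanding, face_alignment 1.3.x downloads them with a helper that stores files under `torch.hub.get_dir()` plus `/checkpoints` and skips the download when the file already exists. Treat that as an assumption and compare with `python -c "import torch; print(torch.hub.get_dir())"` in your environment. The sketch below reproduces torch.hub's default directory resolution without importing torch; the function name is hypothetical.

```python
import os

def torch_hub_checkpoint_dir(env=None):
    """Folder where torch.hub caches downloaded weights, following torch.hub.get_dir():
    $TORCH_HOME, else $XDG_CACHE_HOME/torch, else ~/.cache/torch, plus 'hub/checkpoints'.
    (torch.hub.set_dir() and the deprecated TORCH_HUB variable are not handled.)
    """
    env = os.environ if env is None else env
    cache_root = env.get("XDG_CACHE_HOME", "~/.cache")
    torch_home = os.path.expanduser(env.get("TORCH_HOME", os.path.join(cache_root, "torch")))
    return os.path.join(torch_home, "hub", "checkpoints")

if __name__ == "__main__":
    print("candidate folder for s3fd-619a316812.pth and 2DFAN4-cd938726ad.zip:",
          torch_hub_checkpoint_dir())
```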

5. ValueError: setting an array element with a sequence. The requested array has an inhomogeneous shape after 1 dimensions. The detected shape was (5,) + inhomogeneous part.

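The array shape is wrong: in the original preprocess.py, POS() returns the translation t with shape (2, 1), so t[0] and t[1] are one-element arrays rather than scalars, and newer NumPy versions refuse to build trans_params = np.array([w0, h0, s, t[0], t[1]]) from such mixed elements.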
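The failure can be reproduced in isolation. This sketch uses made-up numbers instead of real landmarks and shows that the original `np.stack` leaves `t` with shape (2, 1), while the patched `flatten()` yields plain scalars; `build_trans_params` is a hypothetical wrapper around the expression from `align_img()`.

```python
import numpy as np

# Stand-in for the least-squares solution in POS(): with a column vector b,
# np.linalg.lstsq returns k with shape (8, 1), so k[3] and k[7] have shape (1,).
k = np.arange(8, dtype=float).reshape(8, 1)
sTx, sTy = k[3], k[7]

t_old = np.stack([sTx, sTy], axis=0)    # original code: shape (2, 1)
t_new = np.array([sTx, sTy]).flatten()  # patched code: shape (2,)

def build_trans_params(w0, h0, s, t):
    """Same expression as the end of align_img()."""
    return np.array([w0, h0, s, t[0], t[1]])

print(build_trans_params(256, 256, 1.5, t_new))  # five plain numbers
try:
    build_trans_params(256, 256, 1.5, t_old)
except ValueError as err:  # NumPy >= 1.24 raises; older versions only warn
    print("original version fails:", err)
```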

- Edit video-retalking/third_part/face3d/util/preprocess.py and replace its contents with the following code:

    import numpy as np
    from scipy.io import loadmat
    from PIL import Image
    import cv2
    from skimage import transform as trans
    import torch
    import warnings

    warnings.filterwarnings("ignore", category=np.VisibleDeprecationWarning)
    warnings.filterwarnings("ignore", category=FutureWarning)


    def POS(xp, x):
        npts = xp.shape[1]
        A = np.zeros([2*npts, 8])
        A[0:2*npts-1:2, 0:3] = x.transpose()
        A[0:2*npts-1:2, 3] = 1
        A[1:2*npts:2, 4:7] = x.transpose()
        A[1:2*npts:2, 7] = 1
        b = np.reshape(xp.transpose(), [2*npts, 1])
        k, _, _, _ = np.linalg.lstsq(A, b, rcond=None)
        R1 = k[0:3]
        R2 = k[4:7]
        sTx = k[3]
        sTy = k[7]
        s = (np.linalg.norm(R1) + np.linalg.norm(R2)) / 2
        # Flatten to shape (2,) so t[0] and t[1] are scalars (the fix for error 5)
        t = np.array([sTx, sTy]).flatten()
        return t, s


    def BBRegression(points, params):
        w1 = params['W1']
        b1 = params['B1']
        w2 = params['W2']
        b2 = params['B2']
        data = points.copy().reshape([5, 2])
        data_mean = np.mean(data, axis=0)
        x_mean, y_mean = data_mean
        data -= data_mean
        rms = np.sqrt(np.sum(data ** 2) / 5)
        data /= rms
        data = data.reshape([1, 10]).transpose()
        inputs = np.matmul(w1, data) + b1
        inputs = 2 / (1 + np.exp(-2 * inputs)) - 1
        inputs = np.matmul(w2, inputs) + b2
        inputs = inputs.transpose()
        x, y = inputs[:, 0] * rms + x_mean, inputs[:, 1] * rms + y_mean
        w = 224 / inputs[:, 2] * rms
        return np.array([x, y, w, w]).reshape([4])


    def img_padding(img, box):
        success = True
        bbox = box.copy()
        res = np.zeros([2*img.shape[0], 2*img.shape[1], 3])
        res[img.shape[0]//2:img.shape[0]+img.shape[0]//2, img.shape[1]//2:img.shape[1]+img.shape[1]//2] = img
        bbox[0] += img.shape[1] // 2
        bbox[1] += img.shape[0] // 2
        if bbox[0] < 0 or bbox[1] < 0:
            success = False
        return res, bbox, success


    def crop(img, bbox):
        padded_img, padded_bbox, flag = img_padding(img, bbox)
        if flag:
            crop_img = padded_img[padded_bbox[1]:padded_bbox[1] + padded_bbox[3],
                                  padded_bbox[0]:padded_bbox[0] + padded_bbox[2]]
            crop_img = cv2.resize(crop_img.astype(np.uint8), (224, 224), interpolation=cv2.INTER_CUBIC)
            scale = 224 / padded_bbox[3]
            return crop_img, scale
        return padded_img, 0


    def scale_trans(img, lm, t, s):
        imgw, imgh = img.shape[1], img.shape[0]
        M_s = np.array([[1, 0, -t[0] + imgw // 2 + 0.5], [0, 1, -imgh // 2 + t[1]]], dtype=np.float32)
        img = cv2.warpAffine(img, M_s, (imgw, imgh))
        w, h = int(imgw / s * 100), int(imgh / s * 100)
        img = cv2.resize(img, (w, h))
        lm = np.stack([lm[:, 0] - t[0] + imgw // 2, lm[:, 1] - t[1] + imgh // 2], axis=1) / s * 100
        left, up = w // 2 - 112, h // 2 - 112
        bbox = [left, up, 224, 224]
        cropped_img, scale2 = crop(img, bbox)
        assert(scale2 != 0)
        t1 = np.array([bbox[0], bbox[1]])
        scale, t2 = s / 100, np.array([t[0] - imgw / 2, t[1] - imgh / 2])
        inv = (scale / scale2, scale * t1 + t2.reshape([2]))
        return cropped_img, inv


    def align_for_lm(img, five_points):
        five_points = np.array(five_points).reshape([1, 10])
        params = loadmat('util/BBRegressorParam_r.mat')
        bbox = BBRegression(five_points, params)
        assert(bbox[2] != 0)
        bbox = np.round(bbox).astype(np.int32)
        crop_img, scale = crop(img, bbox)
        return crop_img, scale, bbox


    def resize_n_crop_img(img, lm, t, s, target_size=224., mask=None):
        w0, h0 = img.size
        w, h = int(w0 * s), int(h0 * s)
        left = int(w / 2 - target_size / 2 + (t[0] - w0 / 2) * s)
        right = left + target_size
        up = int(h / 2 - target_size / 2 + (h0 / 2 - t[1]) * s)
        below = up + target_size
        img = img.resize((w, h), resample=Image.BICUBIC)
        img = img.crop((left, up, right, below))
        if mask is not None:
            mask = mask.resize((w, h), resample=Image.BICUBIC)
            mask = mask.crop((left, up, right, below))
        lm = np.stack([lm[:, 0] - t[0] + w0 / 2, lm[:, 1] - t[1] + h0 / 2], axis=1) * s
        lm -= np.array([(w / 2 - target_size / 2), (h / 2 - target_size / 2)]).reshape([1, 2])
        return img, lm, mask


    def extract_5p(lm):
        lm_idx = np.array([31, 37, 40, 43, 46, 49, 55]) - 1
        lm5p = np.stack([lm[lm_idx[0], :], np.mean(lm[lm_idx[[1, 2]], :], 0), np.mean(lm[lm_idx[[3, 4]], :], 0),
                         lm[lm_idx[5], :], lm[lm_idx[6], :]], axis=0)
        lm5p = lm5p[[1, 2, 0, 3, 4], :]
        return lm5p


    def align_img(img, lm, lm3D, mask=None, target_size=224., rescale_factor=102.):
        """
        Return:
            transparams --numpy.array (raw_W, raw_H, scale, tx, ty)
            img_new --PIL.Image (target_size, target_size, 3)
            lm_new --numpy.array (68, 2), y direction is opposite to v direction
            mask_new --PIL.Image (target_size, target_size)

        Parameters:
            img --PIL.Image (raw_H, raw_W, 3)
            lm --numpy.array (68, 2), y direction is opposite to v direction
            lm3D --numpy.array (5, 3)
            mask --PIL.Image (raw_H, raw_W, 3)
        """
        w0, h0 = img.size
        if lm.shape[0] != 5:
            lm5p = extract_5p(lm)
        else:
            lm5p = lm

        t, s = POS(lm5p.transpose(), lm3D.transpose())
        s = rescale_factor / s

        # Ensure t is a sequence of length 2
        if not (isinstance(t, (list, np.ndarray)) and len(t) == 2):
            raise ValueError("Expected 't' to be a sequence of length 2, got {} of type {}.".format(t, type(t)))
        # Ensure s is a scalar
        if not np.isscalar(s):
            raise ValueError("Expected 's' to be a scalar, got {} of type {}.".format(s, type(s)))

        img_new, lm_new, mask_new = resize_n_crop_img(img, lm, t, s, target_size=target_size, mask=mask)
        trans_params = np.array([w0, h0, s, t[0], t[1]])
        return trans_params, img_new, lm_new, mask_new


    def estimate_norm(lm_68p, H):
        lm = extract_5p(lm_68p)
        lm[:, -1] = H - 1 - lm[:, -1]
        tform = trans.SimilarityTransform()
        src = np.array(
            [[38.2946, 51.6963], [73.5318, 51.5014], [56.0252, 71.7366],
             [41.5493, 92.3655], [70.7299, 92.2041]], dtype=np.float32)
        tform.estimate(lm, src)
        M = tform.params
        if np.linalg.det(M) == 0:
            M = np.eye(3)
        return M[0:2, :]


    def estimate_norm_torch(lm_68p, H):
        lm_68p_ = lm_68p.detach().cpu().numpy()
        M = [estimate_norm(lm_68p_[i], H) for i in range(lm_68p_.shape[0])]
        # Stack into one ndarray first; building a tensor from a list of arrays is slow
        return torch.tensor(np.array(M), dtype=torch.float32).to(lm_68p.device)

6. TypeError: mel() takes 0 positional arguments but 2 positional arguments (and 3 keyword-only arguments) were given
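The error comes from librosa 0.10, which made the parameters of `librosa.filters.mel` keyword-only, while the original `_build_mel_basis()` passed `hp.sample_rate` and `hp.n_fft` positionally. The sketch below reproduces the message with a hypothetical stand-in function (it does not import librosa); the parameter values are illustrative only.

```python
# Hypothetical stand-in with the same keyword-only layout as librosa.filters.mel
# in librosa >= 0.10: every parameter after the bare `*` must be passed by name.
def mel(*, sr, n_fft, n_mels=128, fmin=0.0, fmax=None):
    return {"sr": sr, "n_fft": n_fft, "n_mels": n_mels, "fmin": fmin, "fmax": fmax}

def call_old_style():
    """The pre-patch call shape: sr and n_fft passed positionally."""
    return mel(16000, 800, n_mels=80, fmin=55, fmax=7600)

def call_new_style():
    """The patched call shape: every argument passed by keyword."""
    return mel(sr=16000, n_fft=800, n_mels=80, fmin=55, fmax=7600)

try:
    call_old_style()
except TypeError as err:
    print(err)  # same wording as error 6
print(call_new_style())
```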

Edit video-retalking/utils/audio.py and replace its contents with the following code:

    import librosa
    import librosa.filters
    import numpy as np
    from scipy import signal
    from scipy.io import wavfile
    from .hparams import hparams as hp


    def load_wav(path, sr):
        return librosa.core.load(path, sr=sr)[0]


    def save_wav(wav, path, sr):
        wav *= 32767 / max(0.01, np.max(np.abs(wav)))
        wavfile.write(path, sr, wav.astype(np.int16))


    def save_wavenet_wav(wav, path, sr):
        # librosa.output.write_wav no longer exists (removed in librosa 0.8); write with scipy instead
        wavfile.write(path, sr, wav)


    def preemphasis(wav, k, preemphasize=True):
        if preemphasize:
            return signal.lfilter([1, -k], [1], wav)
        return wav


    def inv_preemphasis(wav, k, inv_preemphasize=True):
        if inv_preemphasize:
            return signal.lfilter([1], [1, -k], wav)
        return wav


    def get_hop_size():
        hop_size = hp.hop_size
        if hop_size is None:
            assert hp.frame_shift_ms is not None
            hop_size = int(hp.frame_shift_ms / 1000 * hp.sample_rate)
        return hop_size


    def linearspectrogram(wav):
        D = _stft(preemphasis(wav, hp.preemphasis, hp.preemphasize))
        S = _amp_to_db(np.abs(D)) - hp.ref_level_db
        if hp.signal_normalization:
            return _normalize(S)
        return S


    def melspectrogram(wav):
        D = _stft(preemphasis(wav, hp.preemphasis, hp.preemphasize))
        S = _amp_to_db(_linear_to_mel(np.abs(D))) - hp.ref_level_db
        if hp.signal_normalization:
            return _normalize(S)
        return S


    def _lws_processor():
        import lws
        return lws.lws(hp.n_fft, get_hop_size(), fftsize=hp.win_size, mode="speech")


    def _stft(y):
        if hp.use_lws:
            return _lws_processor().stft(y).T  # _lws_processor() takes no arguments
        else:
            return librosa.stft(y=y, n_fft=hp.n_fft, hop_length=get_hop_size(), win_length=hp.win_size)


    ##########################################################
    # Those are only correct when using lws!!! (This was messing with Wavenet quality for a long time!)
    def num_frames(length, fsize, fshift):
        """Compute number of time frames of spectrogram
        """
        pad = (fsize - fshift)
        if length % fshift == 0:
            M = (length + pad * 2 - fsize) // fshift + 1
        else:
            M = (length + pad * 2 - fsize) // fshift + 2
        return M


    def pad_lr(x, fsize, fshift):
        """Compute left and right padding
        """
        M = num_frames(len(x), fsize, fshift)
        pad = (fsize - fshift)
        T = len(x) + 2 * pad
        r = (M - 1) * fshift + fsize - T
        return pad, pad + r


    ##########################################################
    # Librosa correct padding
    def librosa_pad_lr(x, fsize, fshift):
        return 0, (x.shape[0] // fshift + 1) * fshift - x.shape[0]


    # Conversions
    _mel_basis = None


    def _linear_to_mel(spectrogram):
        global _mel_basis
        if _mel_basis is None:
            _mel_basis = _build_mel_basis()
        return np.dot(_mel_basis, spectrogram)


    def _build_mel_basis():
        assert hp.fmax <= hp.sample_rate // 2
        # Keyword arguments only: newer librosa rejects positional sr/n_fft (error 6)
        return librosa.filters.mel(sr=hp.sample_rate, n_fft=hp.n_fft, n_mels=hp.num_mels,
                                   fmin=hp.fmin, fmax=hp.fmax)


    def _amp_to_db(x):
        min_level = np.exp(hp.min_level_db / 20 * np.log(10))
        return 20 * np.log10(np.maximum(min_level, x))


    def _db_to_amp(x):
        return np.power(10.0, (x) * 0.05)


    def _normalize(S):
        if hp.allow_clipping_in_normalization:
            if hp.symmetric_mels:
                return np.clip((2 * hp.max_abs_value) * ((S - hp.min_level_db) / (-hp.min_level_db)) - hp.max_abs_value,
                               -hp.max_abs_value, hp.max_abs_value)
            else:
                return np.clip(hp.max_abs_value * ((S - hp.min_level_db) / (-hp.min_level_db)), 0, hp.max_abs_value)

        assert S.max() <= 0 and S.min() - hp.min_level_db >= 0
        if hp.symmetric_mels:
            return (2 * hp.max_abs_value) * ((S - hp.min_level_db) / (-hp.min_level_db)) - hp.max_abs_value
        else:
            return hp.max_abs_value * ((S - hp.min_level_db) / (-hp.min_level_db))


    def _denormalize(D):
        if hp.allow_clipping_in_normalization:
            if hp.symmetric_mels:
                return (((np.clip(D, -hp.max_abs_value, hp.max_abs_value) + hp.max_abs_value)
                         * -hp.min_level_db / (2 * hp.max_abs_value)) + hp.min_level_db)
            else:
                return ((np.clip(D, 0, hp.max_abs_value) * -hp.min_level_db / hp.max_abs_value) + hp.min_level_db)

        if hp.symmetric_mels:
            return (((D + hp.max_abs_value) * -hp.min_level_db / (2 * hp.max_abs_value)) + hp.min_level_db)
        else:
            return ((D * -hp.min_level_db / hp.max_abs_value) + hp.min_level_db)

7. torch.OutOfMemoryError: CUDA out of memory.

Tried to allocate 1.22 GiB. GPU 0 has a total capacity of 23.64 GiB of which 689.00 MiB is free. Process 307533 has 1.70 GiB memory in use. Process 417650 has 2.03 GiB memory in use. Process 572252 has 13.39 GiB memory in use. Including non-PyTorch memory, this process has 5.83 GiB memory in use. Of the allocated memory 5.31 GiB is allocated by PyTorch, and 60.29 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True to avoid fragmentation. See documentation for Memory Management (https://pytorch.org/docs/stable/notes/cuda.html#environment-variables)

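There was not enough free GPU memory (this job needs about 8 GB). The card has 23.64 GiB in total, but other processes were already holding most of it, so only 689 MiB was free. Stop those processes or run on a GPU with enough free memory.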

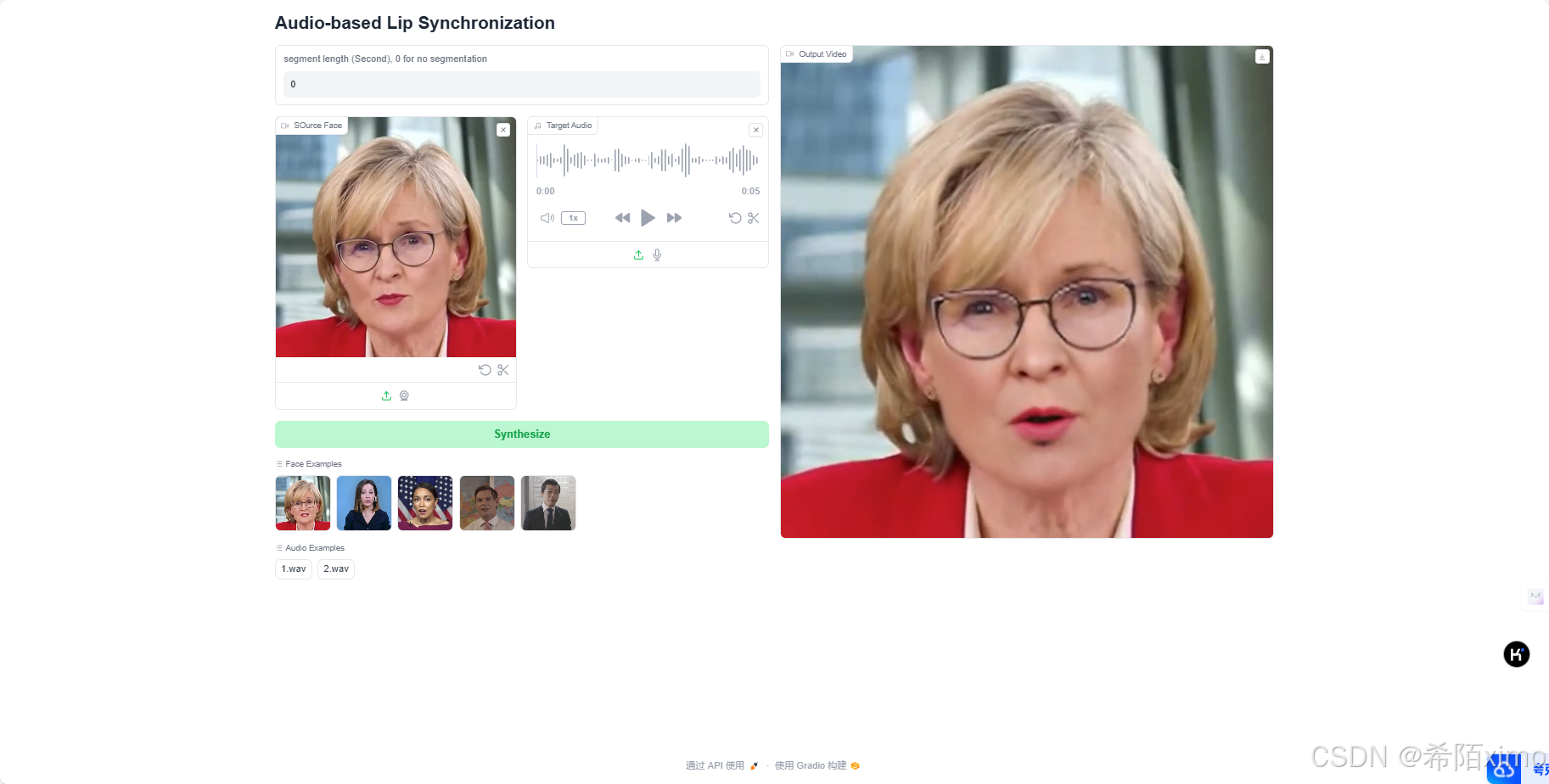

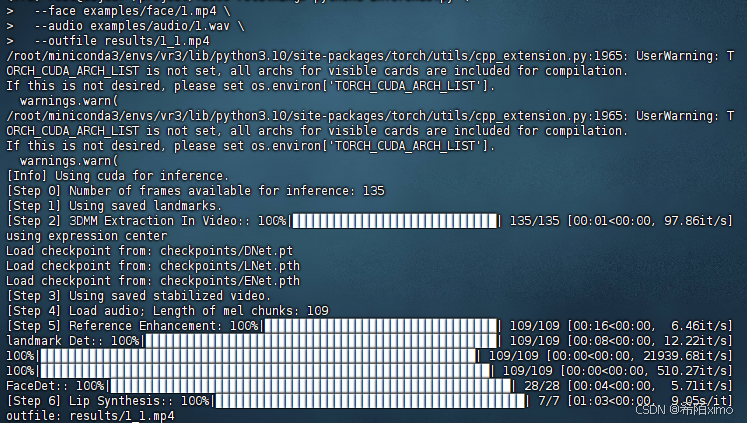
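Before rerunning, you can check which GPU currently has enough free memory. A sketch that queries nvidia-smi's CSV output (`--query-gpu` with `--format=csv,noheader,nounits`, which reports memory in MiB) and applies the roughly 8 GB figure this guide gives; the 8 GiB threshold and the helper names are assumptions.

```python
import shutil
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=index,memory.total,memory.free,name",
         "--format=csv,noheader,nounits"]

def parse_gpu_memory(csv_text):
    """Parse rows like '0, 24564, 689, <name>'; the name goes last in case it contains commas."""
    gpus = []
    for row in csv_text.strip().splitlines():
        index, total, free, name = (field.strip() for field in row.split(",", 3))
        gpus.append({"index": int(index), "total_mib": int(total),
                     "free_mib": int(free), "name": name})
    return gpus

def gpus_with_free_memory(gpus, need_gib=8):
    """Indices of GPUs that currently have at least `need_gib` GiB free."""
    return [gpu["index"] for gpu in gpus if gpu["free_mib"] >= need_gib * 1024]

if __name__ == "__main__" and shutil.which("nvidia-smi"):
    output = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    print("GPUs with >= 8 GiB free:", gpus_with_free_memory(parse_gpu_memory(output)))
```

A GPU index from the result can then be selected for the run with the standard `CUDA_VISIBLE_DEVICES=<index>` environment variable.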

V. Deployment complete

5.1 Run an example to test the installation

    python3 inference.py \
      --face examples/face/1.mp4 \
      --audio examples/audio/1.wav \
      --outfile results/1_1.mp4

- This needs at least 8 GB of GPU memory.

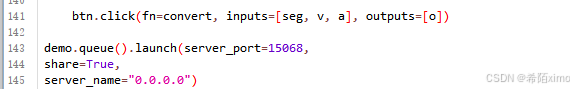
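The same test run can be started from Python, for example to pin it to one GPU. This sketch only uses the three flags shown above; `CUDA_VISIBLE_DEVICES` is the standard CUDA variable for choosing a GPU, and `PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True` is the allocator setting PyTorch's own out-of-memory message suggests (it reduces fragmentation but cannot free memory held by other processes). The helper names are hypothetical.

```python
import os
import subprocess

def build_inference_command(face, audio, outfile, python="python3"):
    """Argument list for the repository's inference.py, using the flags shown above."""
    return [python, "inference.py", "--face", face, "--audio", audio, "--outfile", outfile]

def build_env(gpu=None, base=None):
    """Copy of the environment with the allocator hint and optional GPU pinning."""
    env = dict(os.environ if base is None else base)
    env.setdefault("PYTORCH_CUDA_ALLOC_CONF", "expandable_segments:True")
    if gpu is not None:
        env["CUDA_VISIBLE_DEVICES"] = str(gpu)
    return env

if __name__ == "__main__" and os.path.exists("inference.py"):
    os.makedirs("results", exist_ok=True)
    cmd = build_inference_command("examples/face/1.mp4", "examples/audio/1.wav", "results/1_1.mp4")
    subprocess.run(cmd, env=build_env(), check=True)
```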

5.2 Change the web UI port

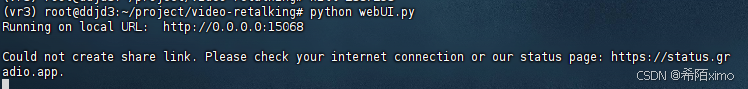

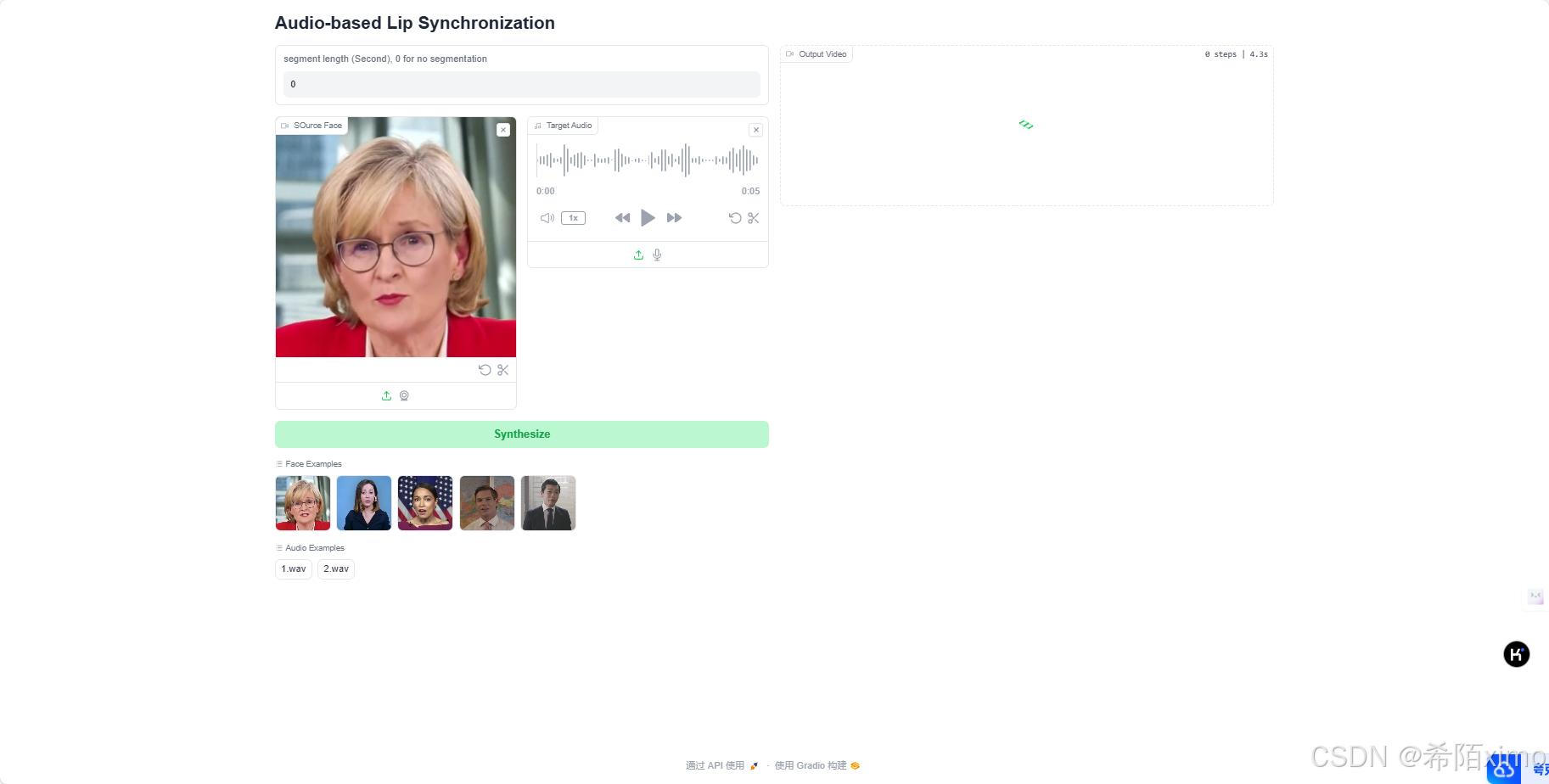
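When choosing the new port, it helps to confirm that nothing is already bound to it. A stdlib sketch with hypothetical helper names; 7860 is Gradio's usual default port, and, assuming the repository's web UI is a Gradio app, the chosen number is what you would pass as `server_port` to `launch()` (Gradio also reads the `GRADIO_SERVER_PORT` environment variable).

```python
import socket

def is_port_free(port, host="0.0.0.0"):
    """True if a TCP socket can bind to (host, port) at this moment."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True

def first_free_port(start=7860, stop=7960, host="0.0.0.0"):
    """First bindable port in [start, stop), or None if all are taken."""
    for port in range(start, stop):
        if is_port_free(port, host):
            return port
    return None

if __name__ == "__main__":
    print("suggested port:", first_free_port())
```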

5.3 Open the web UI in a browser

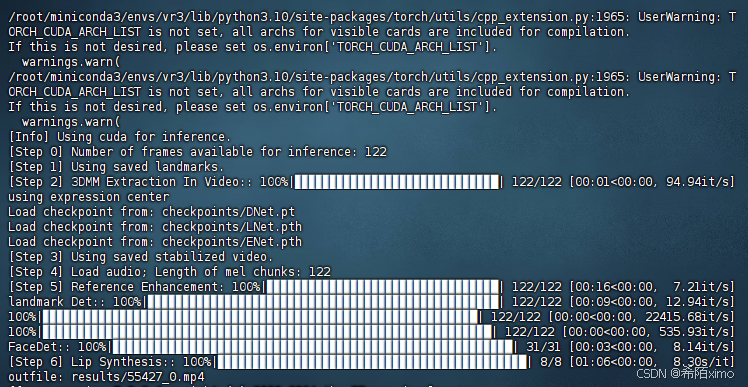

5.4 Terminal output

5.5 Successful output