
I. Component Deployment

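| Role | Hostname | IP address |
| --- | --- | --- |
| master | master01 | 192.168.80.100 |
| master | master02 | 192.168.80.101 |
| master | master03 | 192.168.80.106 |
| node | node01 | 192.168.80.102 |
| node | node02 | 192.168.80.103 |
| load balancer (from the earlier setup) | nginx+keepalived01 | 192.168.80.104 (keepalived MASTER) |
| load balancer (from the earlier setup) | nginx+keepalived02 | 192.168.80.105 (keepalived BACKUP) |

The control-plane endpoint used later, 192.168.80.200, is the keepalived virtual IP (VIP) shared by the two load balancers.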

II. Environment Initialization

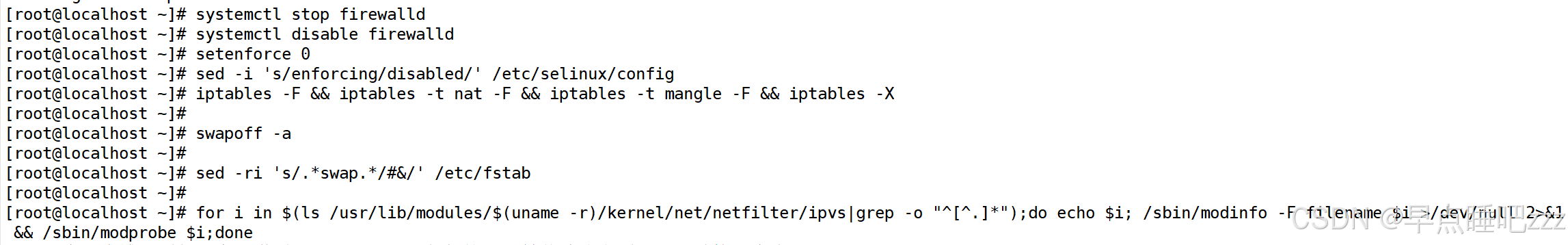

On all nodes, flush the firewall rules and disable firewalld, SELinux, and swap:

    systemctl stop firewalld
    systemctl disable firewalld
    setenforce 0
    sed -i 's/enforcing/disabled/' /etc/selinux/config
    iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
    swapoff -a                            # swap must be turned off
    sed -ri 's/.*swap.*/#&/' /etc/fstab   # turn swap off permanently; in sed, & stands for the whole matched text
    # load the ip_vs modules
    for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs | grep -o "^[^.]*"); do
      echo $i
      /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i
    done
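The `sed -ri 's/.*swap.*/#&/'` command above comments out every line containing "swap", including lines that are already commented, so running it a second time turns `#` into `##`. A minimal sketch of an idempotent alternative, assuming GNU sed; the function name `comment_out_swap` is hypothetical:

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that already start with '#' are skipped, so re-running it does not
# stack up "##" prefixes. Requires GNU sed (-r, -i and \s).
comment_out_swap() {
  local fstab_file="${1:-/etc/fstab}"
  # Select uncommented lines that contain a whitespace-delimited "swap"
  # field (the filesystem-type column) and prepend '#' to each of them.
  sed -ri '/^[^#]*\sswap\s/ s/^/#/' "$fstab_file"
}
```

For example, `swapoff -a && comment_out_swap /etc/fstab`; afterwards `free -h` should report no swap.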

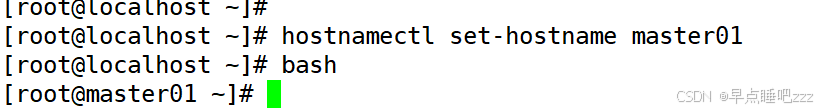

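Set the hostname, running the matching command on each node:

    hostnamectl set-hostname master01   # on master01
    hostnamectl set-hostname master02   # on master02
    hostnamectl set-hostname master03   # on master03
    hostnamectl set-hostname node01     # on node01
    hostnamectl set-hostname node02     # on node02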

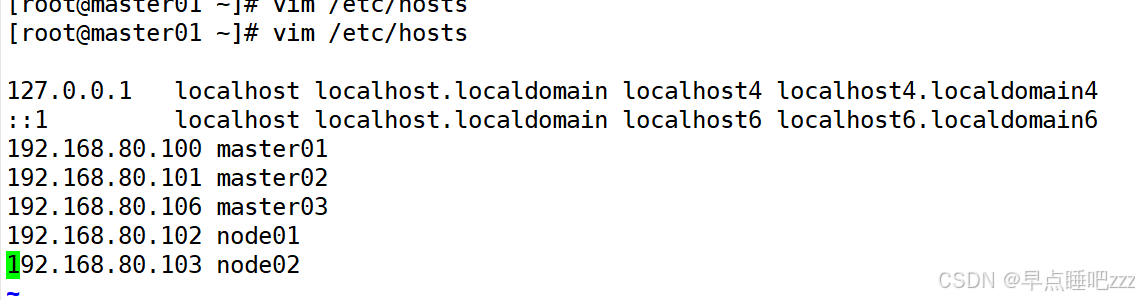

On all nodes, add the cluster hosts to /etc/hosts (`vim /etc/hosts`):

    192.168.80.100 master01
    192.168.80.101 master02
    192.168.80.106 master03
    192.168.80.102 node01
    192.168.80.103 node02
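Editing /etc/hosts by hand on five machines is easy to get wrong. As a sketch, the same entries can be appended non-interactively; the helper name `add_cluster_hosts` is hypothetical, and it only appends lines that are not already present verbatim, so it is safe to re-run:

```shell
# Hypothetical helper: append the cluster host entries to a hosts file,
# skipping any "IP hostname" line that is already present verbatim.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" entry
  for entry in \
    "192.168.80.100 master01" \
    "192.168.80.101 master02" \
    "192.168.80.106 master03" \
    "192.168.80.102 node01" \
    "192.168.80.103 node02"; do
    # -x: whole-line match; -F: fixed string, so dots are not regex wildcards
    grep -qxF "$entry" "$hosts_file" || echo "$entry" >> "$hosts_file"
  done
}
```

Run `add_cluster_hosts` (defaults to /etc/hosts) on every node.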

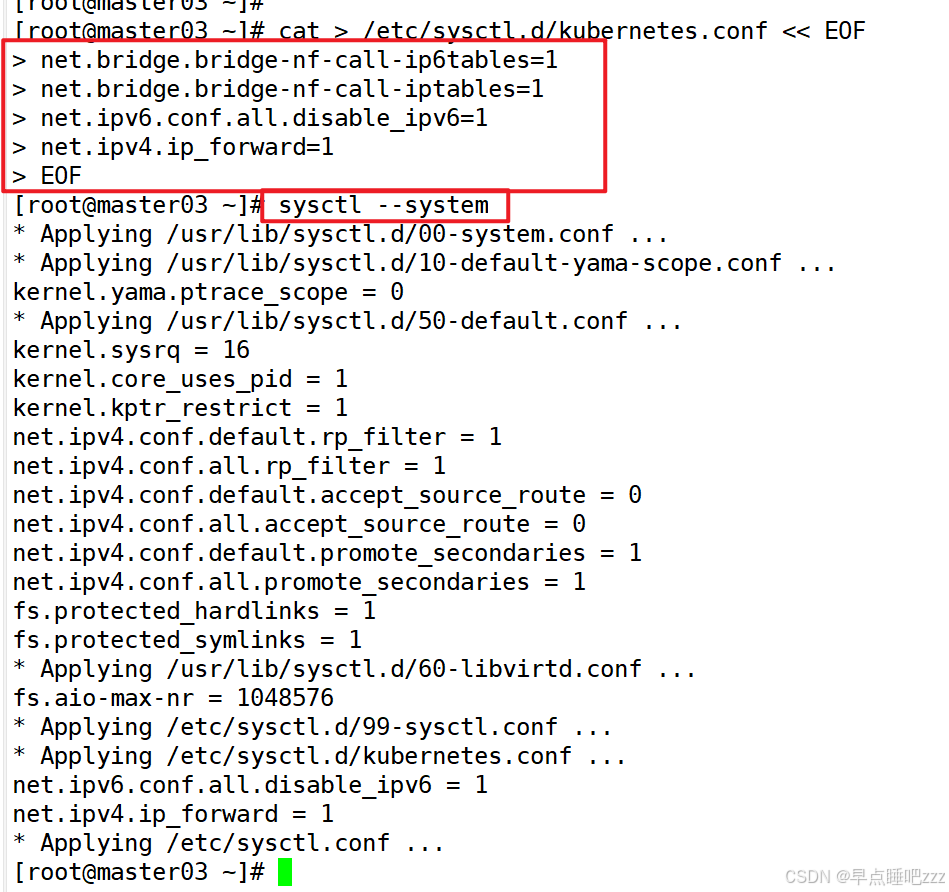

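Tune the kernel parameters:

    cat > /etc/sysctl.d/kubernetes.conf << EOF
    # enable bridge netfilter so bridged traffic is passed to the iptables chains
    net.bridge.bridge-nf-call-ip6tables=1
    net.bridge.bridge-nf-call-iptables=1
    # disable IPv6
    net.ipv6.conf.all.disable_ipv6=1
    # enable IPv4 forwarding
    net.ipv4.ip_forward=1
    EOF
    # apply the parameters
    sysctl --system

The net.bridge.* keys only exist once the br_netfilter kernel module is loaded; if `sysctl --system` reports it cannot find them, run `modprobe br_netfilter` first.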
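To double-check the drop-in file before or after `sysctl --system`, a small read-only check can confirm that the forwarding and bridge keys are set to 1. This is a sketch with a hypothetical name, `check_sysctl_file`; it only inspects the file, while the live kernel values can be read with `sysctl -n <key>`:

```shell
# Hypothetical helper: confirm that a sysctl drop-in file sets each required
# key to the expected value. Comment lines are ignored and spaces around '='
# are tolerated. Prints any missing or mismatched keys and returns 1 if any.
check_sysctl_file() {
  local file="$1" status=0 pair key want got
  for pair in \
    net.bridge.bridge-nf-call-iptables=1 \
    net.bridge.bridge-nf-call-ip6tables=1 \
    net.ipv4.ip_forward=1; do
    key="${pair%%=*}"
    want="${pair#*=}"
    # If a key appears more than once, the last assignment wins (as in sysctl).
    got=$(awk -F= -v k="$key" '
      /^[ \t]*#/ { next }
      { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
      $1 == k { v = $2 }
      END { print v }' "$file")
    if [ "$got" != "$want" ]; then
      echo "missing or wrong: $key (expected $want, got '$got')"
      status=1
    fi
  done
  return "$status"
}
```

For example: `check_sysctl_file /etc/sysctl.d/kubernetes.conf`.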

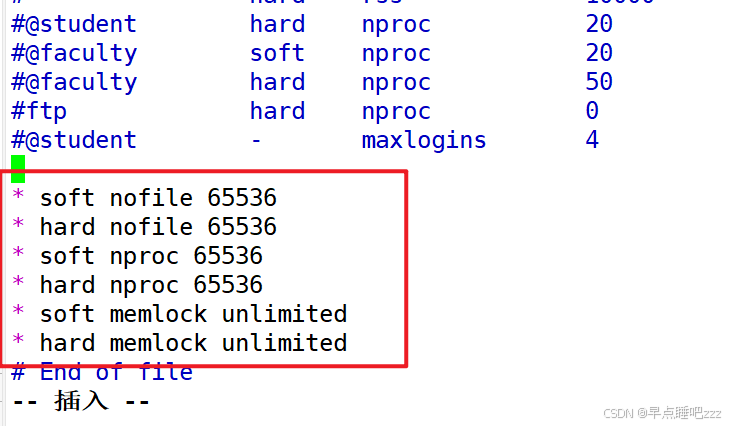

On all nodes, raise the Linux resource limits by adding these lines to /etc/security/limits.conf (`vim /etc/security/limits.conf`):

    * soft nofile 65536
    * hard nofile 65536
    * soft nproc 65536
    * hard nproc 65536
    * soft memlock unlimited
    * hard memlock unlimited
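Instead of editing limits.conf with vim, the same six lines can be written to a drop-in file under /etc/security/limits.d/, which pam_limits reads after limits.conf. This matters on CentOS 7, whose stock limits.d/20-nproc.conf sets a lower soft nproc limit. A sketch, where the function name and the file name 99-k8s.conf are hypothetical choices (the name is meant to sort after 20-nproc.conf):

```shell
# Hypothetical helper: write the resource limits from this step to a
# pam_limits drop-in file. Overwriting the whole file keeps it idempotent.
write_k8s_limits() {
  local target="${1:-/etc/security/limits.d/99-k8s.conf}"
  cat > "$target" <<'EOF'
* soft nofile 65536
* hard nofile 65536
* soft nproc 65536
* hard nproc 65536
* soft memlock unlimited
* hard memlock unlimited
EOF
}
```

The limits apply to new login sessions; check with `ulimit -n` and `ulimit -u` after logging in again.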

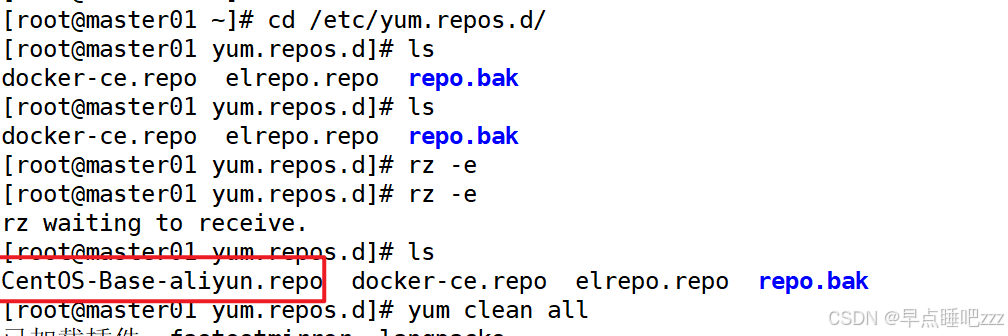

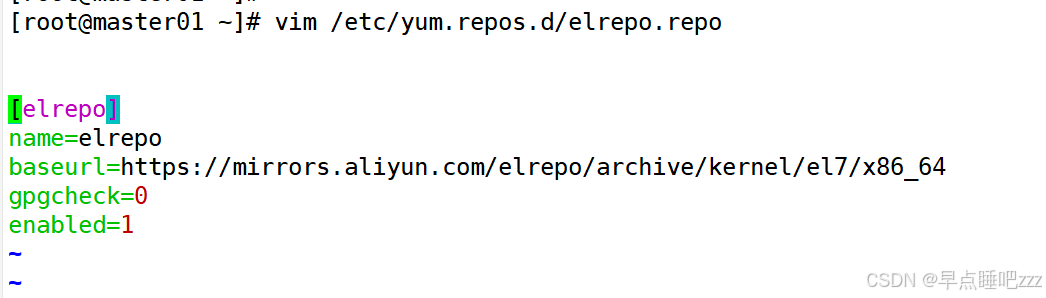

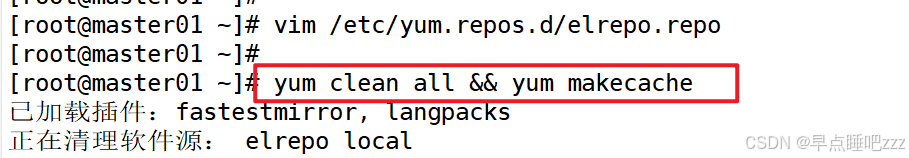

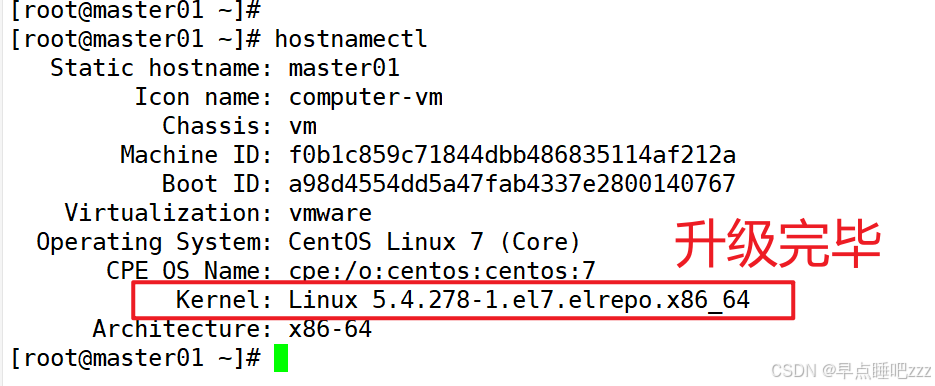

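Configure the elrepo mirror on all servers (`vim /etc/yum.repos.d/elrepo.repo`):

    [elrepo]
    name=elrepo
    baseurl=https://mirrors.aliyun.com/elrepo/archive/kernel/el7/x86_64
    gpgcheck=0
    enabled=1

Then move the local repo file aside, upload the Aliyun online repo file, and upgrade the kernel:

    cd /etc/yum.repos.d/
    mv local.repo repo.bak/
    # (upload the Aliyun online repo file here)
    yum clean all
    # upgrade the kernel on every node in the cluster
    yum install -y kernel-lt kernel-lt-devel
    # make the new kernel the default boot entry
    grub2-set-default 0
    # reboot the operating system
    reboot
    # check which kernel version is now in effect
    hostnamectl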
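`uname -r` prints the running kernel release directly. To turn the post-reboot check into a pass/fail test, a small version comparison helps. A sketch using GNU `sort -V`; the function name `kernel_at_least` and the 4.19 threshold in the usage line are hypothetical examples, not requirements stated in this guide:

```shell
# Hypothetical helper: succeed if kernel release $1 is at least version $2.
# Only the part before the first '-' is compared, e.g. "5.4.278" from
# "5.4.278-1.el7.elrepo.x86_64". Uses GNU sort -V (version sort).
kernel_at_least() {
  local current="${1%%-*}" wanted="$2"
  # If the wanted version sorts first (or ties), current >= wanted.
  [ "$(printf '%s\n%s\n' "$wanted" "$current" | sort -V | head -n1)" = "$wanted" ]
}
```

For example: `kernel_at_least "$(uname -r)" 4.19 && echo "new kernel active"`.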

III. Deploy Docker and the specified version of kubeadm on all nodes

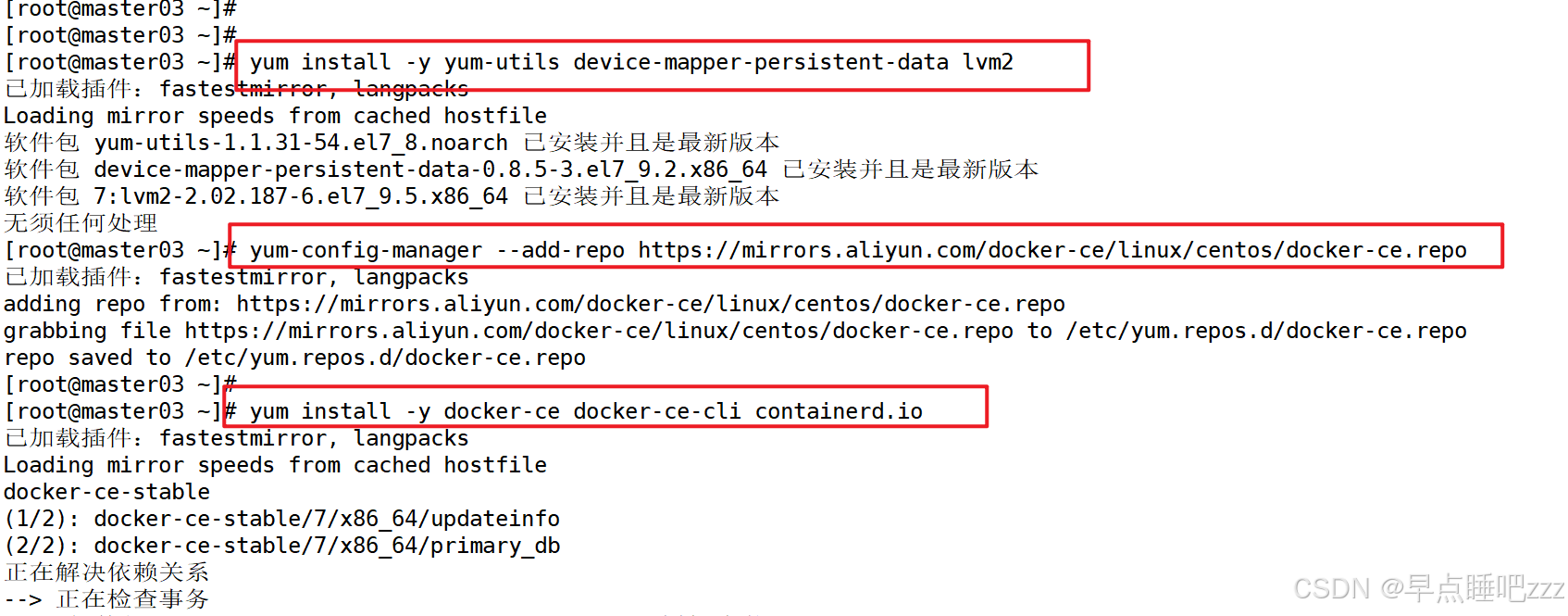

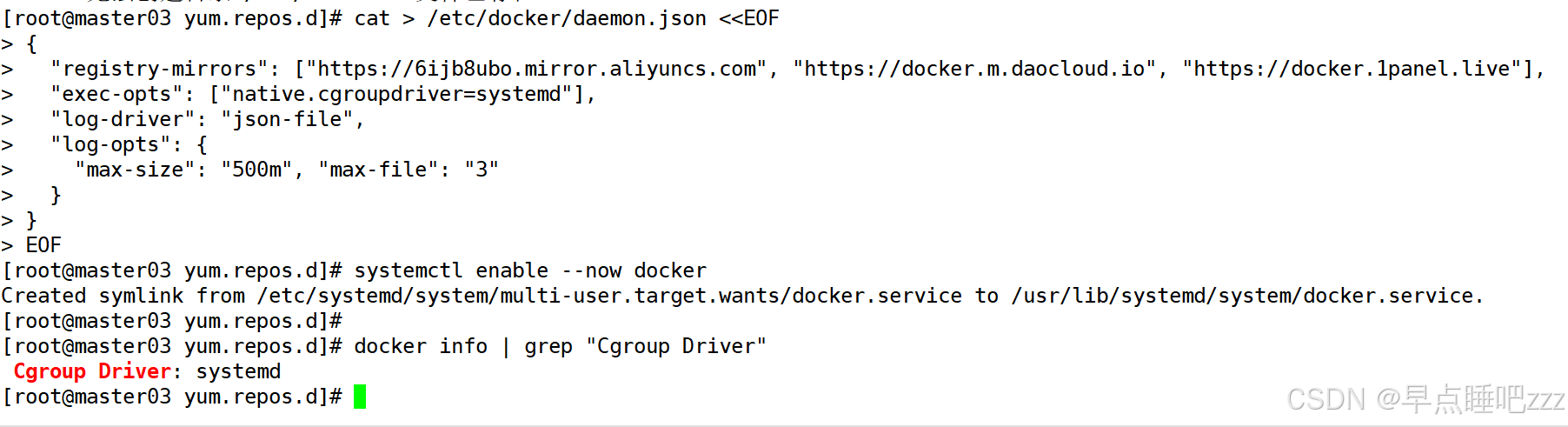

    yum install -y yum-utils device-mapper-persistent-data lvm2
    yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    yum install -y docker-ce docker-ce-cli containerd.io
    mkdir /etc/docker
    cat > /etc/docker/daemon.json <<EOF
    {
      "registry-mirrors": ["https://6ijb8ubo.mirror.aliyuncs.com", "https://docker.m.daocloud.io", "https://docker.1panel.live"],
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "500m", "max-file": "3"
      }
    }
    EOF

Docker uses the systemd-managed cgroup driver for resource control, because compared with cgroupfs, systemd is simpler and more mature and stable for limiting CPU, memory, and other resources. Container logs are stored in json-file format, up to 500 MB per file and 3 files per container. Docker writes them under /var/lib/docker/containers/, and kubelet links each Pod's container logs into /var/log/containers/, which makes them easy for ELK and similar logging systems to collect.

    systemctl daemon-reload
    systemctl restart docker.service
    systemctl enable docker.service
    docker info | grep "Cgroup Driver"

Expected output:

    Cgroup Driver: systemd
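The `docker info | grep` check above is read by eye. A sketch that turns it into a pass/fail check, for example on each node before running kubeadm; both function names are hypothetical:

```shell
# Hypothetical helper: read `docker info` output on stdin and print the
# value of the "Cgroup Driver" field (e.g. systemd or cgroupfs).
cgroup_driver() {
  awk -F': ' '/Cgroup Driver:/ { print $2; exit }'
}

# Hypothetical check: fail loudly if Docker is not using the systemd driver.
require_systemd_driver() {
  local driver
  driver=$(docker info 2>/dev/null | cgroup_driver)
  if [ "$driver" != "systemd" ]; then
    echo "docker cgroup driver is '$driver', expected 'systemd'" >&2
    return 1
  fi
}
```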

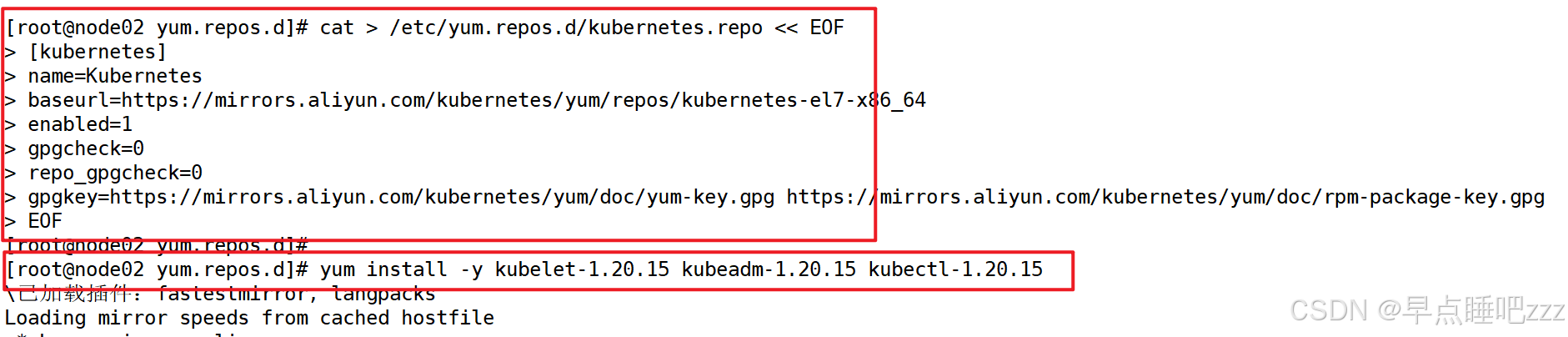

IV. Install kubeadm, kubelet, and kubectl on all nodes

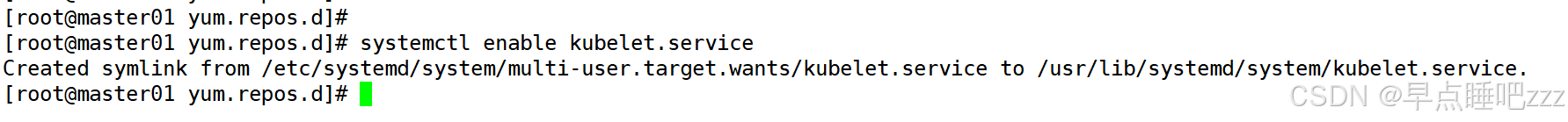

Define the Kubernetes repo and install the pinned versions:

    cat > /etc/yum.repos.d/kubernetes.repo << EOF
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    yum install -y kubelet-1.20.15 kubeadm-1.20.15 kubectl-1.20.15
    # start kubelet at boot
    systemctl enable kubelet.service

After a kubeadm installation, the Kubernetes components all run as Pods, i.e. as containers underneath, and kubelet is the service that starts them, so kubelet must be enabled at boot.
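The rest of this guide assumes all three packages are at 1.20.15. A sketch that checks `rpm -q` output for that; the function name `k8s_versions_match` is hypothetical, and it accepts any package release suffix after the version:

```shell
# Hypothetical helper: read `rpm -q kubelet kubeadm kubectl` output on stdin
# and succeed only if all three packages are at the wanted version ($1).
# A "package X is not installed" line counts as a failure.
k8s_versions_match() {
  local wanted="$1" line count=0
  while IFS= read -r line; do
    [ -z "$line" ] && continue
    count=$((count + 1))
    case "$line" in
      kubelet-"$wanted"-*|kubeadm-"$wanted"-*|kubectl-"$wanted"-*) ;;
      *) echo "unexpected package: $line" >&2; return 1 ;;
    esac
  done
  # Expect exactly the three packages.
  [ "$count" -eq 3 ]
}
```

For example: `rpm -q kubelet kubeadm kubectl | k8s_versions_match 1.20.15 && echo "versions OK"`.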

V. High-Availability Configuration

For the full nginx + keepalived setup, refer to my earlier blog post.

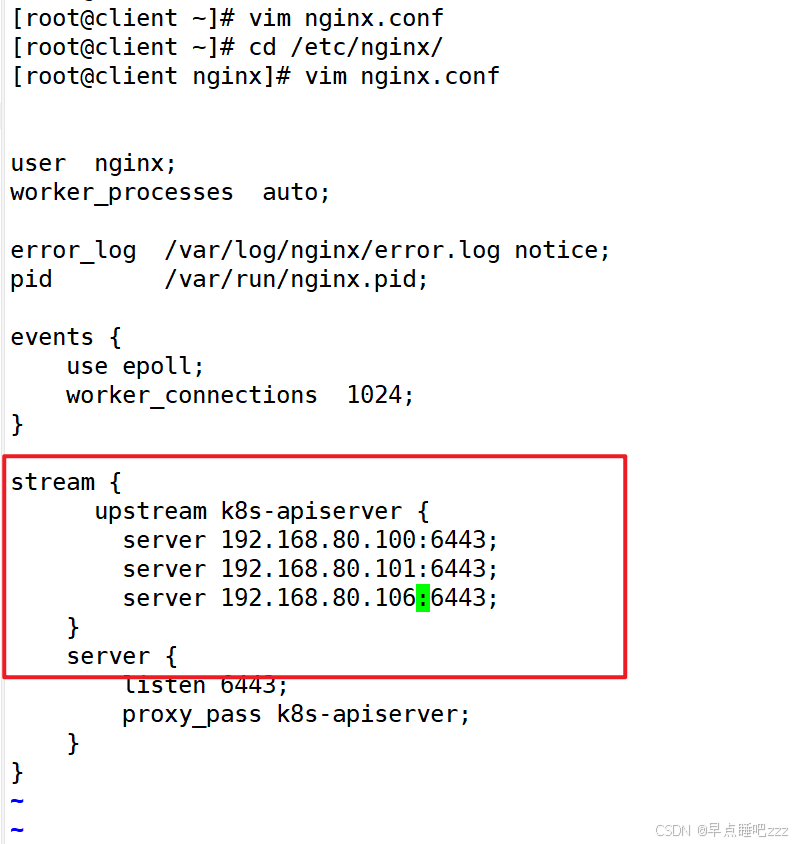

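On both load-balancer servers, edit nginx.conf so that the apiserver upstream lists all three masters, then restart nginx:

    vim nginx.conf
    # in the upstream block for the apiserver:
    server 192.168.80.100:6443;
    server 192.168.80.101:6443;
    server 192.168.80.106:6443;

    systemctl restart nginx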
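The step shows only the `server` lines. As a sketch, assuming nginx is built with the stream module and proxies TCP port 6443 on the load-balancer nodes, a helper can print the whole `stream` block from a list of master IPs. The function name and the upstream name `k8s-apiserver` are hypothetical; the output belongs at the top level of nginx.conf (not inside `http {}`) and should be merged with any existing `stream` block rather than added as a second one:

```shell
# Hypothetical helper: print an nginx "stream" block that load-balances
# TCP port 6443 across the master IPs given as arguments.
render_apiserver_stream() {
  local ip
  echo "stream {"
  echo "    upstream k8s-apiserver {"
  for ip in "$@"; do
    echo "        server ${ip}:6443;"
  done
  echo "    }"
  echo "    server {"
  echo "        listen 6443;"
  echo "        proxy_pass k8s-apiserver;"
  echo "    }"
  echo "}"
}
```

For example, `render_apiserver_stream 192.168.80.100 192.168.80.101 192.168.80.106`; after editing nginx.conf, `nginx -t` validates the configuration before `systemctl restart nginx`.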

VI. Deploy the K8S Cluster

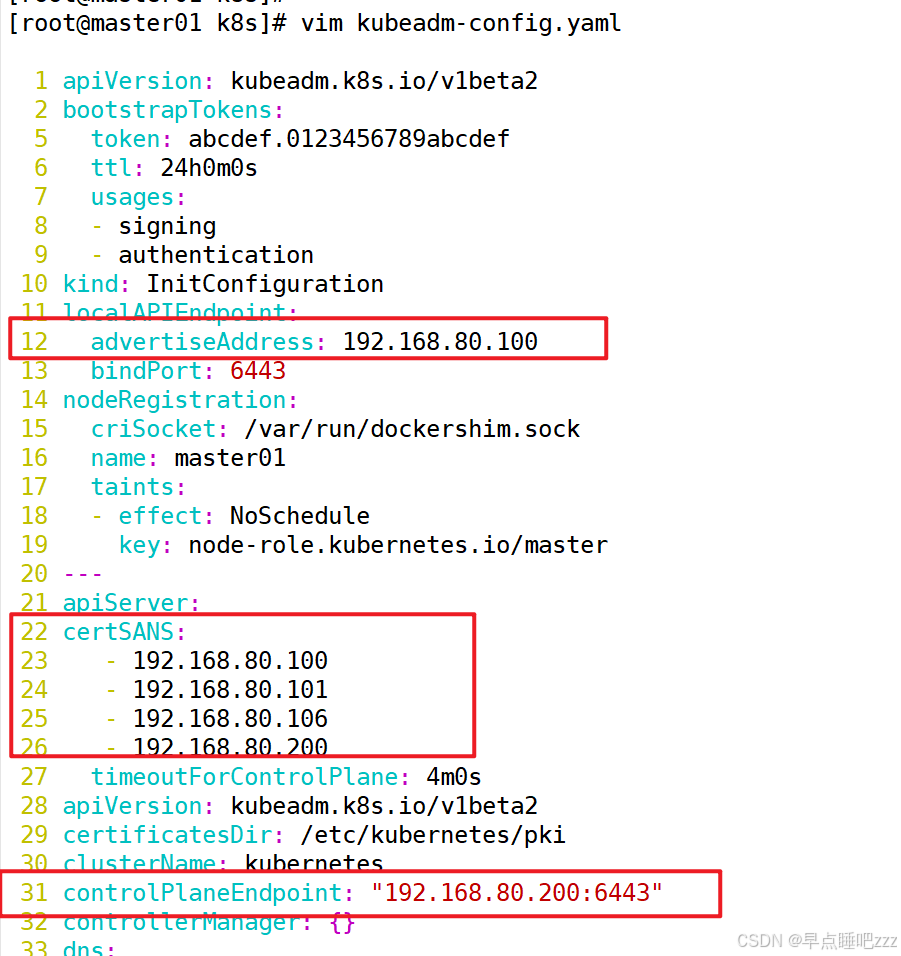

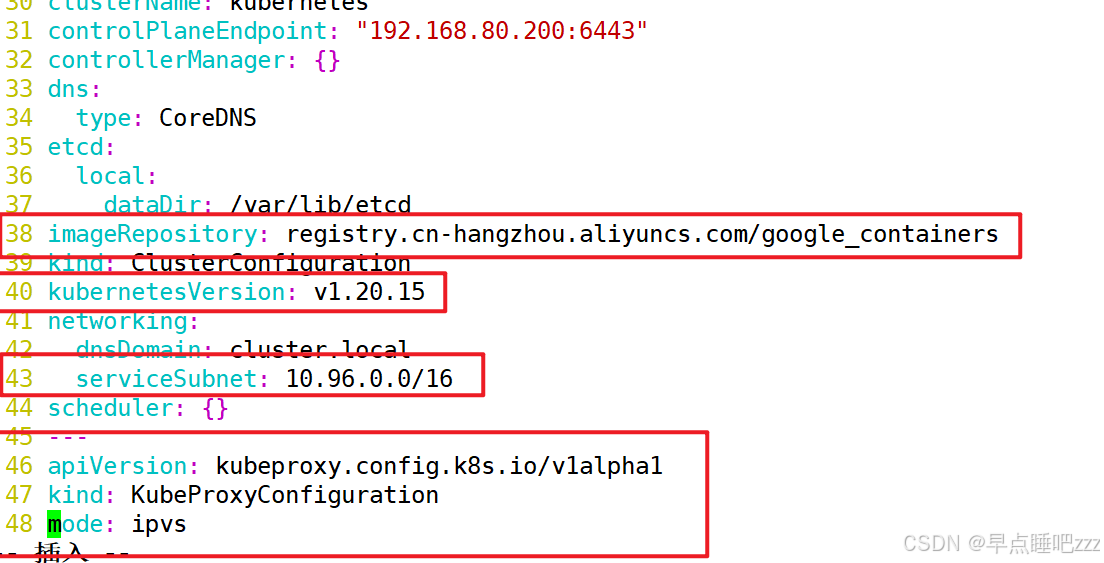

1. On master01

    cd /opt
    mkdir k8s/
    cd k8s
    kubeadm config print init-defaults > kubeadm-config.yaml
    vim kubeadm-config.yaml

Edit the generated file as follows (line numbers refer to the generated kubeadm-config.yaml):

    # line 12: change to this host's address
      advertiseAddress: 192.168.80.100
    # line 22: add under apiServer
      certSANs:
      - 192.168.80.100
      - 192.168.80.101
      - 192.168.80.106
      - 192.168.80.200
    # line 31: add
    controlPlaneEndpoint: "192.168.80.200:6443"
    # line 38: change
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    # line 40: change
    kubernetesVersion: v1.20.15
    # line 43: change
      serviceSubnet: 10.96.0.0/16
    # line 44: add
      podSubnet: "10.244.0.0/16"
    # line 46: append a kube-proxy section that switches it to IPVS mode
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: ipvs

The field name is `certSANs` (lowercase s at the end). The podSubnet must match the Network in kube-flannel.yml, which is 10.244.0.0/16 by default. The complete file then reads:

    apiVersion: kubeadm.k8s.io/v1beta2
    kind: InitConfiguration
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    localAPIEndpoint:
      advertiseAddress: 192.168.80.100
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      name: master01
      taints:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    ---
    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    apiServer:
      certSANs:
      - 192.168.80.100
      - 192.168.80.101
      - 192.168.80.106
      - 192.168.80.200
      timeoutForControlPlane: 4m0s
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controlPlaneEndpoint: "192.168.80.200:6443"
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kubernetesVersion: v1.20.15
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/16
      podSubnet: 10.244.0.0/16
    scheduler: {}
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: ipvs

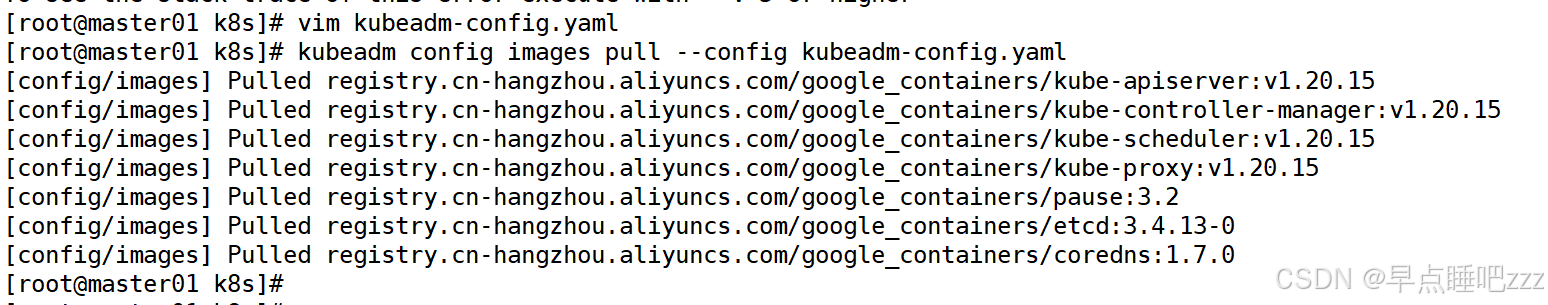

Pull the images online:

    kubeadm config images pull --config kubeadm-config.yaml

2. On master02 and master03

    cd /opt
    mkdir k8s

3. On master01

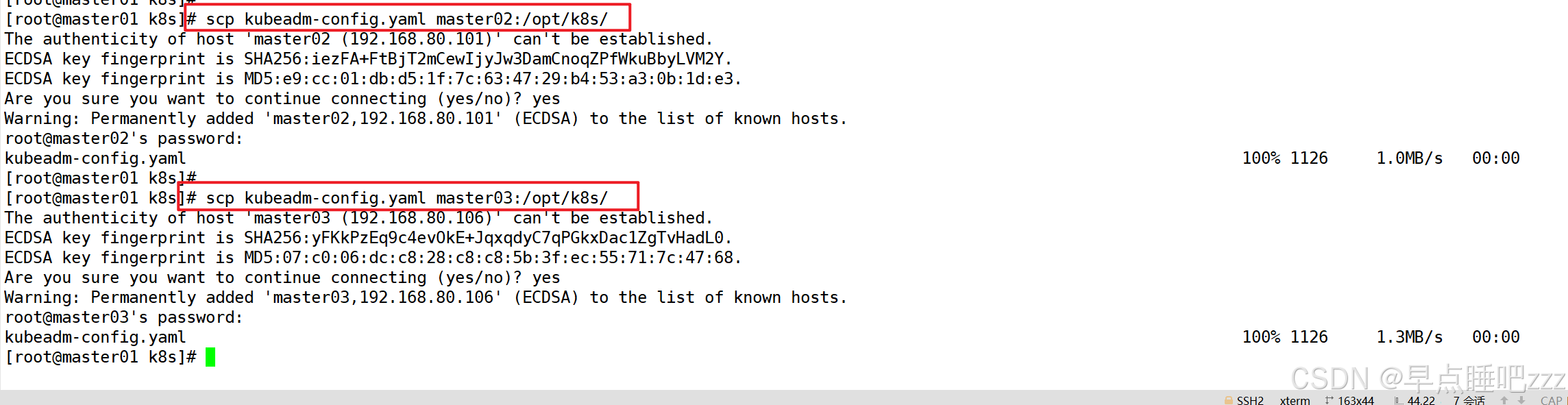

Copy the config file to the other masters:

    scp kubeadm-config.yaml master02:/opt/k8s/
    scp kubeadm-config.yaml master03:/opt/k8s/

4. On master02 and master03

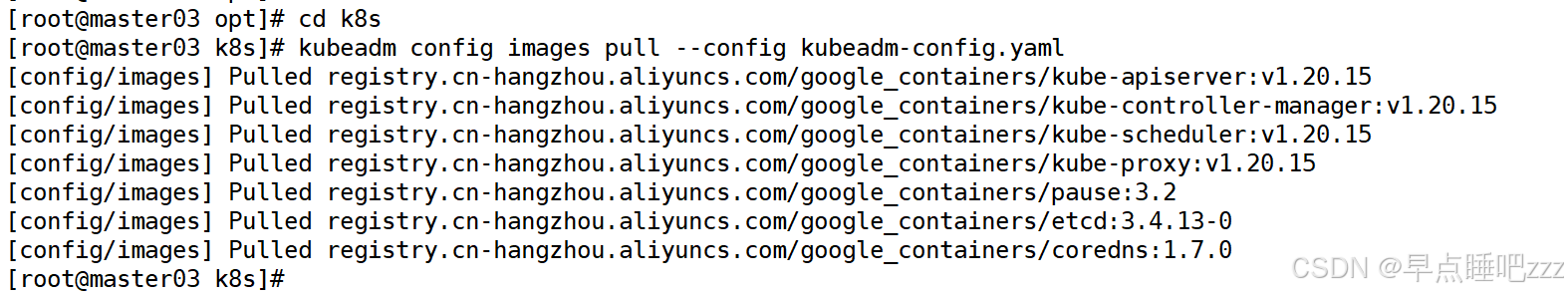

    cd /opt/k8s
    kubeadm config images pull --config kubeadm-config.yaml

5. On master01

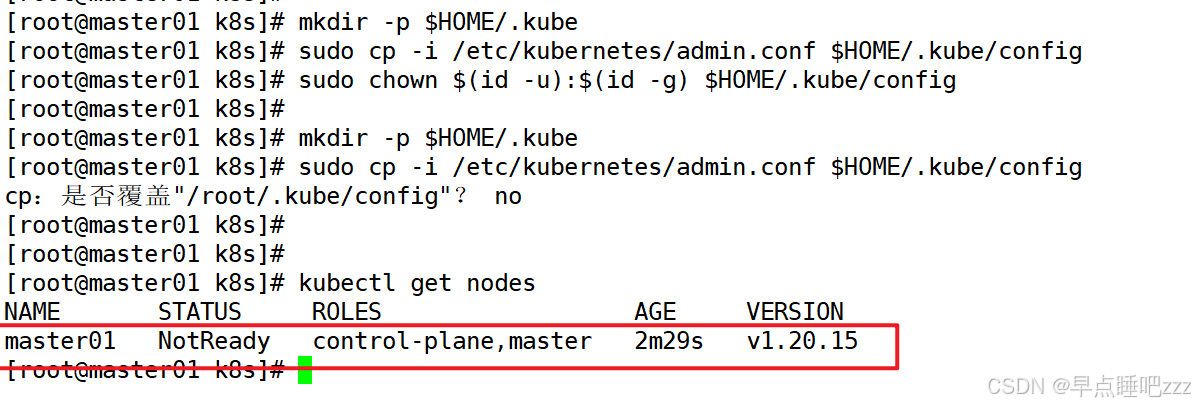

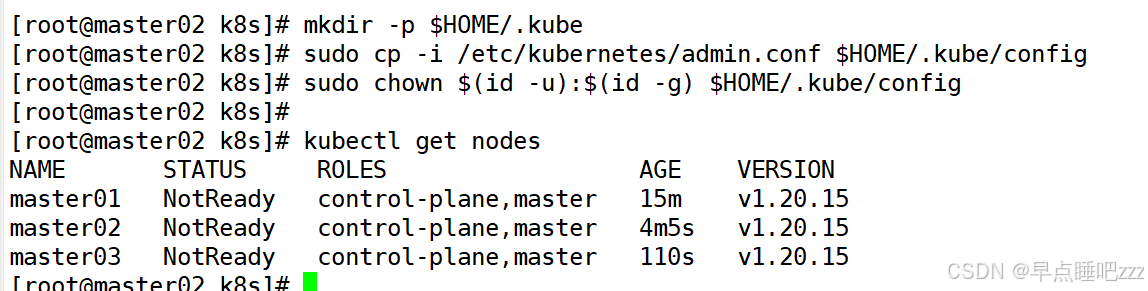

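Initialize the first master:

    kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log
    # --upload-certs uploads the control-plane certificates to the cluster, so masters that join later can fetch them automatically
    # tee kubeadm-init.log keeps a copy of the output in a log file
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    kubectl get nodes

The uploaded certificates are deleted after two hours. If the other masters join later than that, run `kubeadm init phase upload-certs --upload-certs` on master01 to upload them again and obtain a new certificate key.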
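Clients reach the API server through the VIP 192.168.80.200, so the certificate generated by `kubeadm init` must list it (and the master IPs) as Subject Alternative Names, or TLS verification fails. A sketch of a check over `openssl x509 -noout -text` output; the function name `check_apiserver_sans` is hypothetical:

```shell
# Hypothetical helper: read `openssl x509 -noout -text` output on stdin and
# report which of the given IPs are missing from the Subject Alternative
# Name list. Returns 1 if any IP is missing.
check_apiserver_sans() {
  local sans ip status=0
  # Put each comma-separated SAN entry on its own line, without indentation,
  # so "IP Address:192.168.80.10" cannot match "IP Address:192.168.80.100".
  sans=$(tr ',' '\n' | sed 's/^[[:space:]]*//')
  for ip in "$@"; do
    if ! printf '%s\n' "$sans" | grep -qxF "IP Address:${ip}"; then
      echo "missing SAN: ${ip}"
      status=1
    fi
  done
  return "$status"
}
```

For example: `openssl x509 -noout -text -in /etc/kubernetes/pki/apiserver.crt | check_apiserver_sans 192.168.80.100 192.168.80.101 192.168.80.106 192.168.80.200`.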

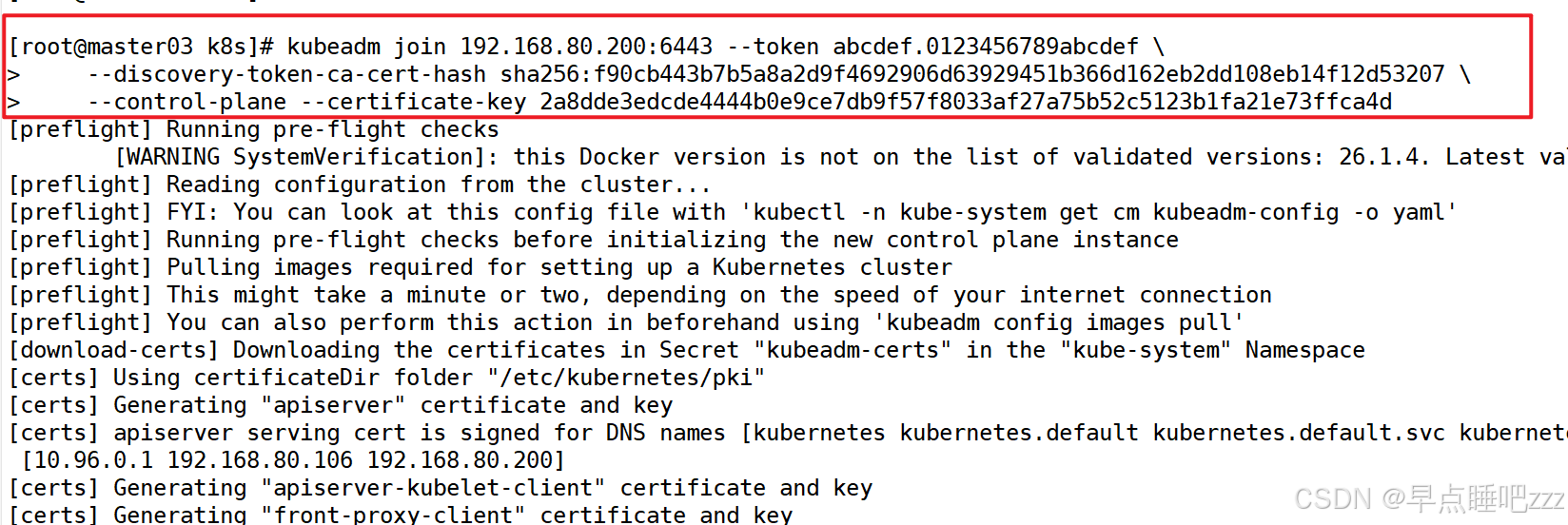

6. On master02 and master03

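Join the other masters to the cluster as control-plane nodes. The token, hash, and certificate key come from kubeadm-init.log; replace the placeholder with the `--certificate-key` value printed there:

    kubeadm join 192.168.80.200:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:f90cb443b7b5a8a2d9f4692906d63929451b366d162eb2dd108eb14f12d53207 \
        --control-plane --certificate-key <certificate key from kubeadm-init.log>
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    kubectl get nodes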
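Rather than copying the join commands out of the terminal by hand, they can be pulled from kubeadm-init.log. A sketch, assuming the log contains the usual backslash-continued `kubeadm join` commands (with `--upload-certs`, one for control-plane nodes and one for workers); the function name `extract_join_commands` is hypothetical. If the bootstrap token has expired (its ttl is 24h in the config above), `kubeadm token create --print-join-command` prints a fresh worker join command.

```shell
# Hypothetical helper: print each "kubeadm join ..." command found in a
# kubeadm init log as a single line, joining backslash-continued lines.
extract_join_commands() {
  local log="${1:-kubeadm-init.log}"
  awk '
    /kubeadm join/ { cmd = ""; collecting = 1 }
    collecting {
      line = $0
      sub(/^[ \t]+/, "", line)            # drop leading indentation
      cont = (line ~ /\\[ \t]*$/)         # 1 if the line ends with a backslash
      sub(/[ \t]*\\[ \t]*$/, "", line)    # strip the trailing " \"
      cmd = (cmd == "" ? line : (cmd " " line))
      if (!cont) { print cmd; collecting = 0 }
    }
  ' "$log"
}
```

For example, `extract_join_commands /opt/k8s/kubeadm-init.log`; the line containing `--control-plane` is for master02/master03, the other for node01/node02.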

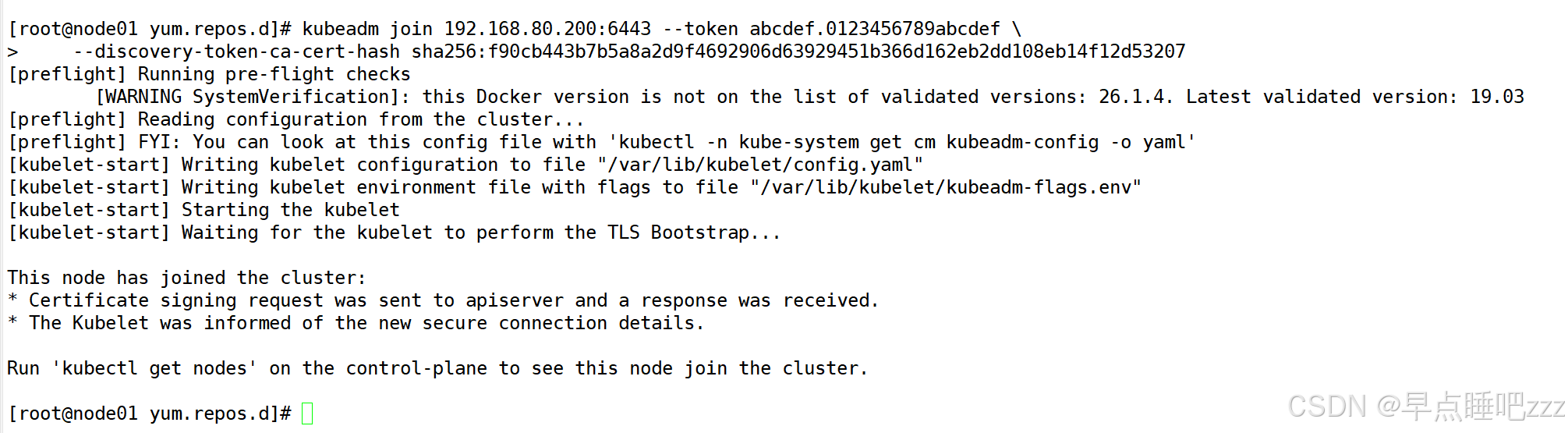

7. On node01 and node02

    kubeadm join 192.168.80.200:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:f90cb443b7b5a8a2d9f4692906d63929451b366d162eb2dd108eb14f12d53207

VII. Deploy the flannel network plugin on all nodes

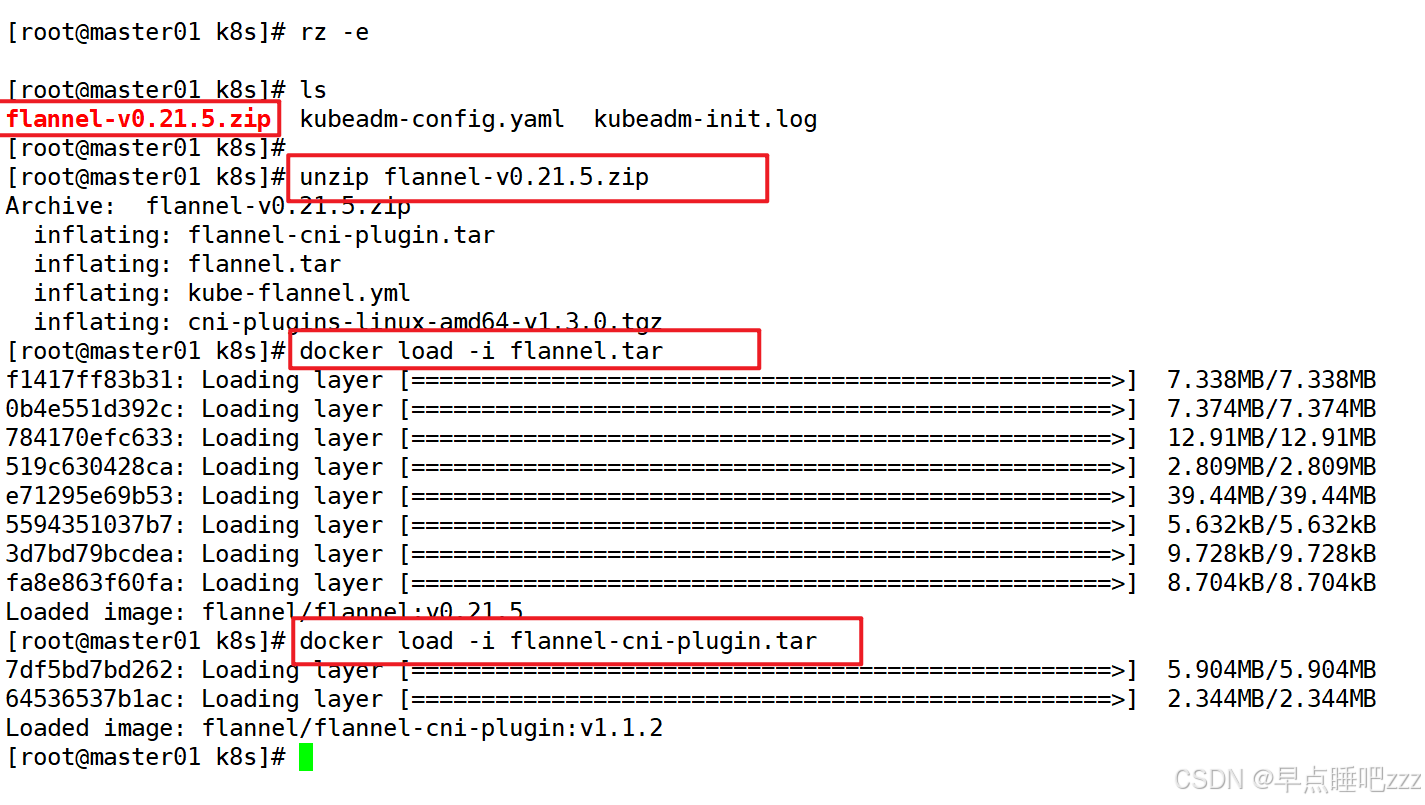

1. On master01

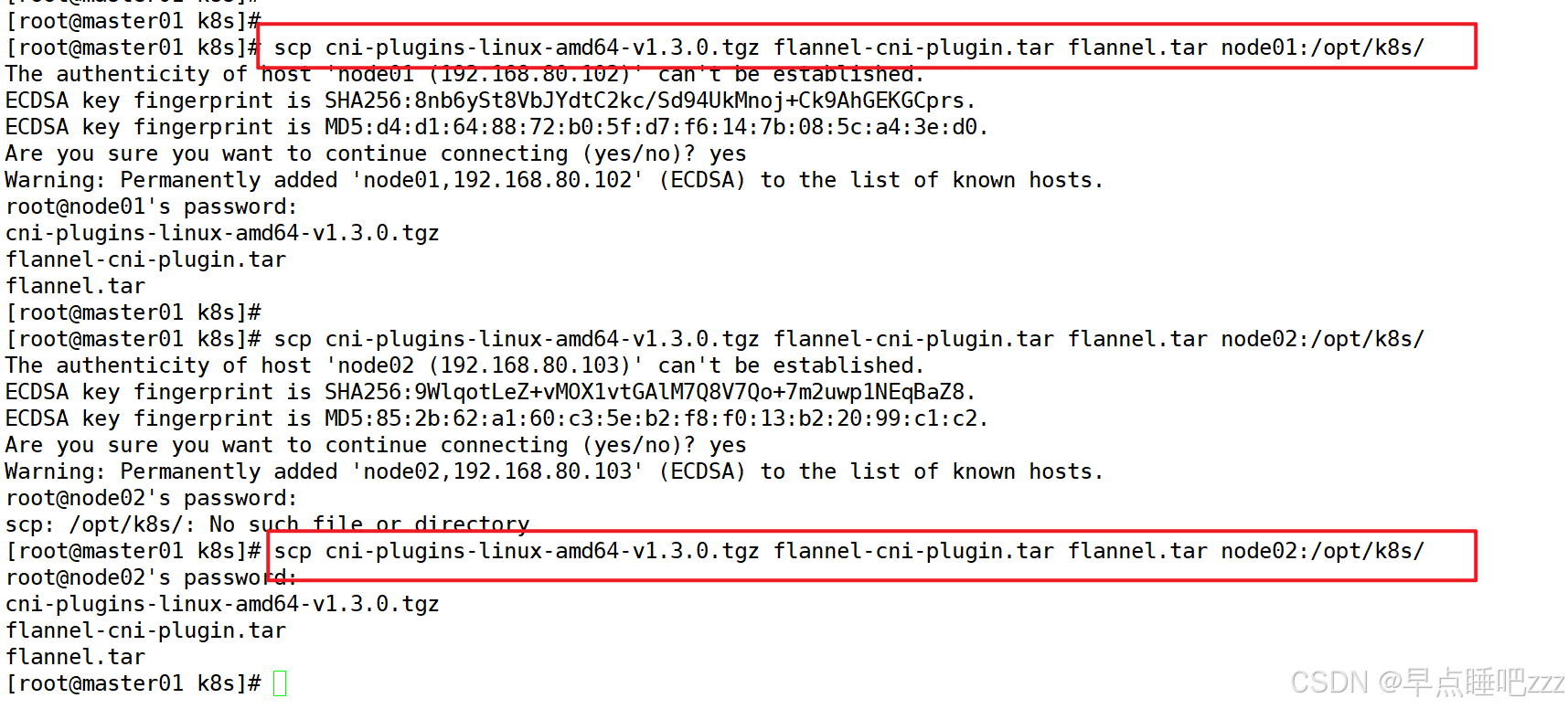

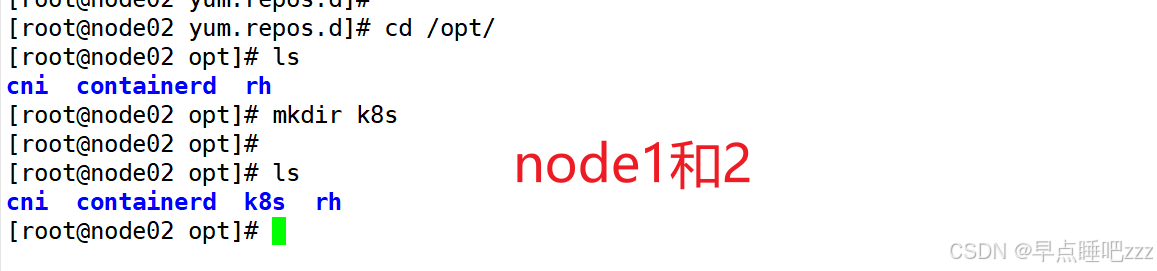

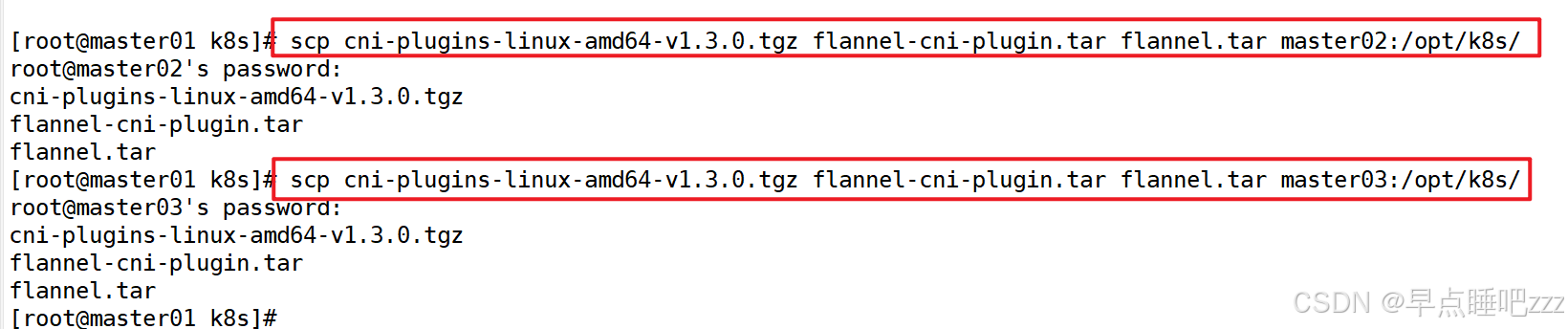

On master01, upload the flannel-v0.21.5.zip archive to /opt/k8s/, then unpack it and load the images:

    cd /opt/k8s/
    # (upload flannel-v0.21.5.zip here)
    unzip flannel-v0.21.5.zip
    docker load -i flannel.tar
    docker load -i flannel-cni-plugin.tar

On the two worker nodes, create the target directory:

    cd /opt
    mkdir k8s

Back on master01, copy the files to every other node:

    scp cni-plugins-linux-amd64-v1.3.0.tgz flannel-cni-plugin.tar flannel.tar master02:/opt/k8s/
    scp cni-plugins-linux-amd64-v1.3.0.tgz flannel-cni-plugin.tar flannel.tar master03:/opt/k8s/
    scp cni-plugins-linux-amd64-v1.3.0.tgz flannel-cni-plugin.tar flannel.tar node01:/opt/k8s/
    scp cni-plugins-linux-amd64-v1.3.0.tgz flannel-cni-plugin.tar flannel.tar node02:/opt/k8s/
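The four `scp` commands differ only in the host name, so they can be collapsed into one loop. A sketch, assuming passwordless SSH from master01 and that /opt/k8s already exists on every target (as set up above); the function name and the `DRY_RUN` switch are hypothetical:

```shell
# Hypothetical helper: copy the flannel/CNI files from master01 to every
# other node in one loop. Set DRY_RUN=1 to print the commands instead.
distribute_flannel_files() {
  local host
  local files="cni-plugins-linux-amd64-v1.3.0.tgz flannel-cni-plugin.tar flannel.tar"
  for host in master02 master03 node01 node02; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo scp $files "${host}:/opt/k8s/"
    else
      # $files is unquoted on purpose so it splits into three arguments
      scp $files "${host}:/opt/k8s/" || return 1
    fi
  done
}
```

Run it from /opt/k8s on master01; `DRY_RUN=1 distribute_flannel_files` shows what would be copied first.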

Then go back to master01.

2. On all other nodes

On every other node, go to /opt/k8s and load the images:

    cd /opt/k8s
    docker load -i flannel.tar
    docker load -i flannel-cni-plugin.tar

3. On all servers

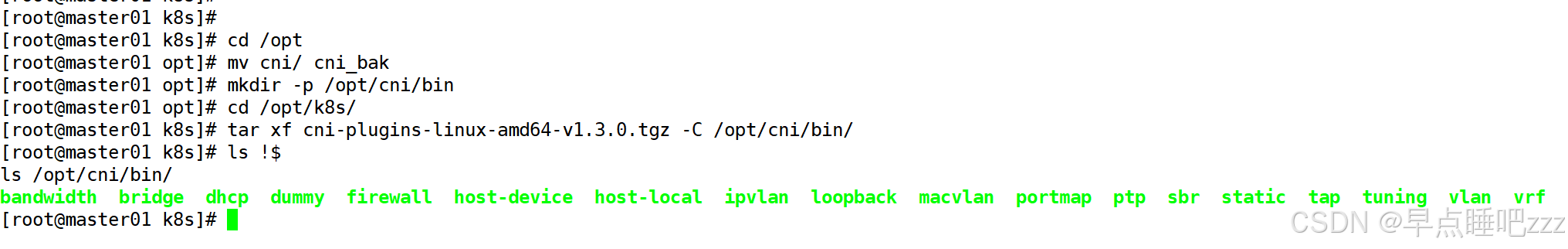

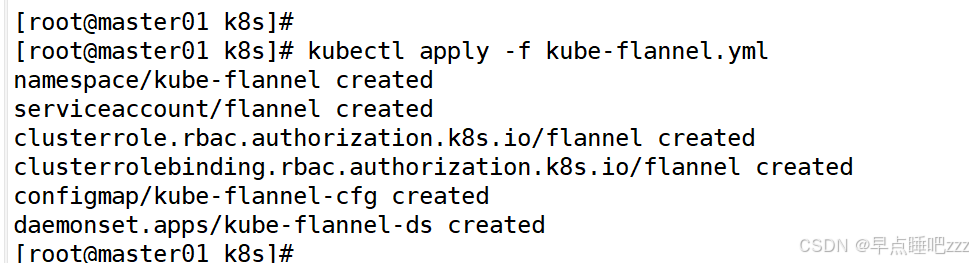

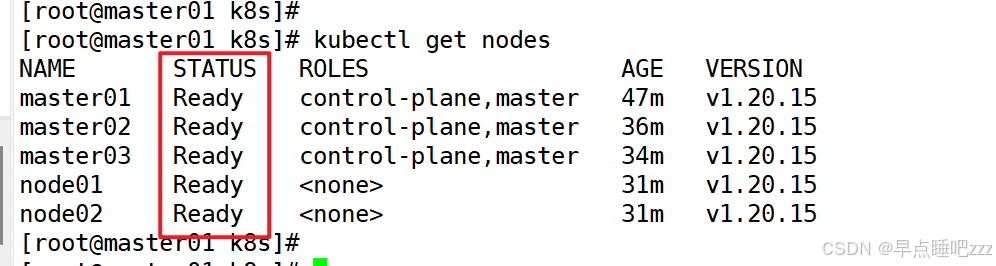

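On all servers, back up the existing CNI directory and install the CNI plugins:

    cd /opt
    mv cni/ cni_bak
    mkdir -p /opt/cni/bin
    cd /opt/k8s/
    tar xf cni-plugins-linux-amd64-v1.3.0.tgz -C /opt/cni/bin/
    ls /opt/cni/bin/

On master01, create the flannel resources and check the nodes:

    kubectl apply -f kube-flannel.yml   # create the resources
    kubectl get nodes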
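After `kubectl apply -f kube-flannel.yml`, nodes move from NotReady to Ready once the flannel Pods are running. A sketch that lists the nodes that are not Ready yet, reading `kubectl get nodes --no-headers` output; the function name `not_ready_nodes` is hypothetical:

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS column is not exactly "Ready".
# Returns 0 only if at least one node is listed and all of them are Ready.
not_ready_nodes() {
  awk '
    { total++ }
    $2 != "Ready" { print $1; bad++ }
    END { exit (total == 0 || bad > 0) ? 1 : 0 }
  '
}
```

A simple wait loop: `until kubectl get nodes --no-headers | not_ready_nodes >/dev/null; do sleep 10; done`.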

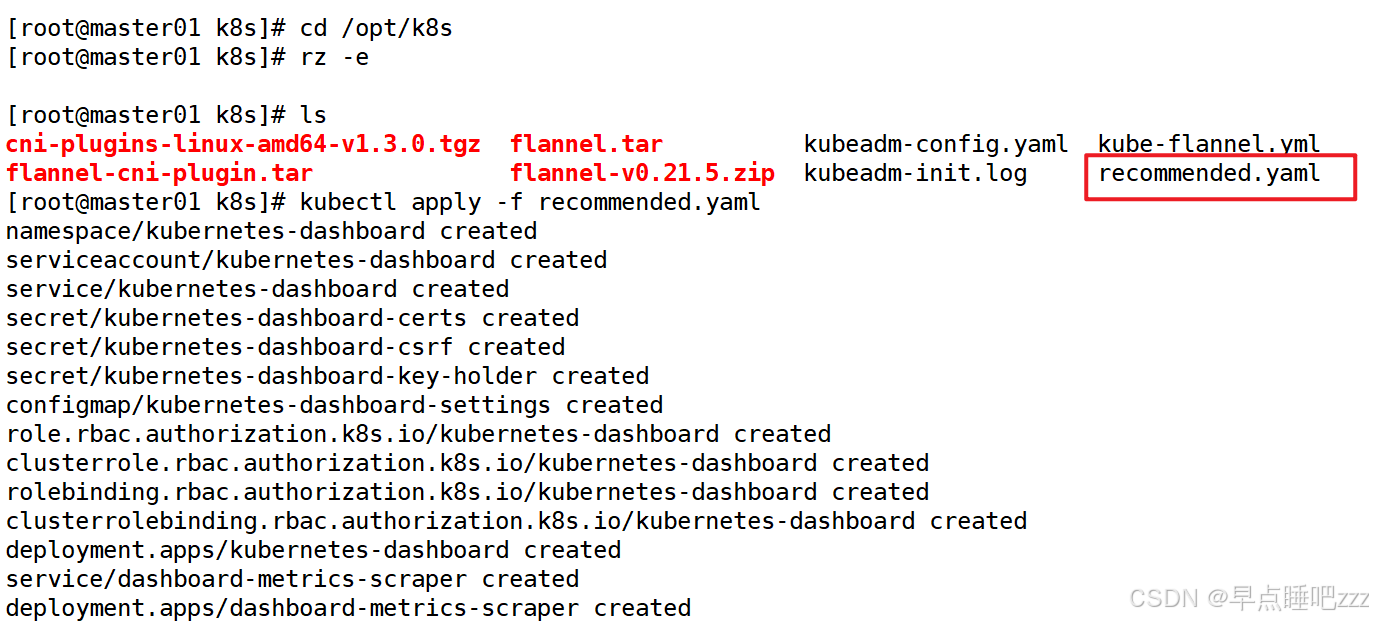

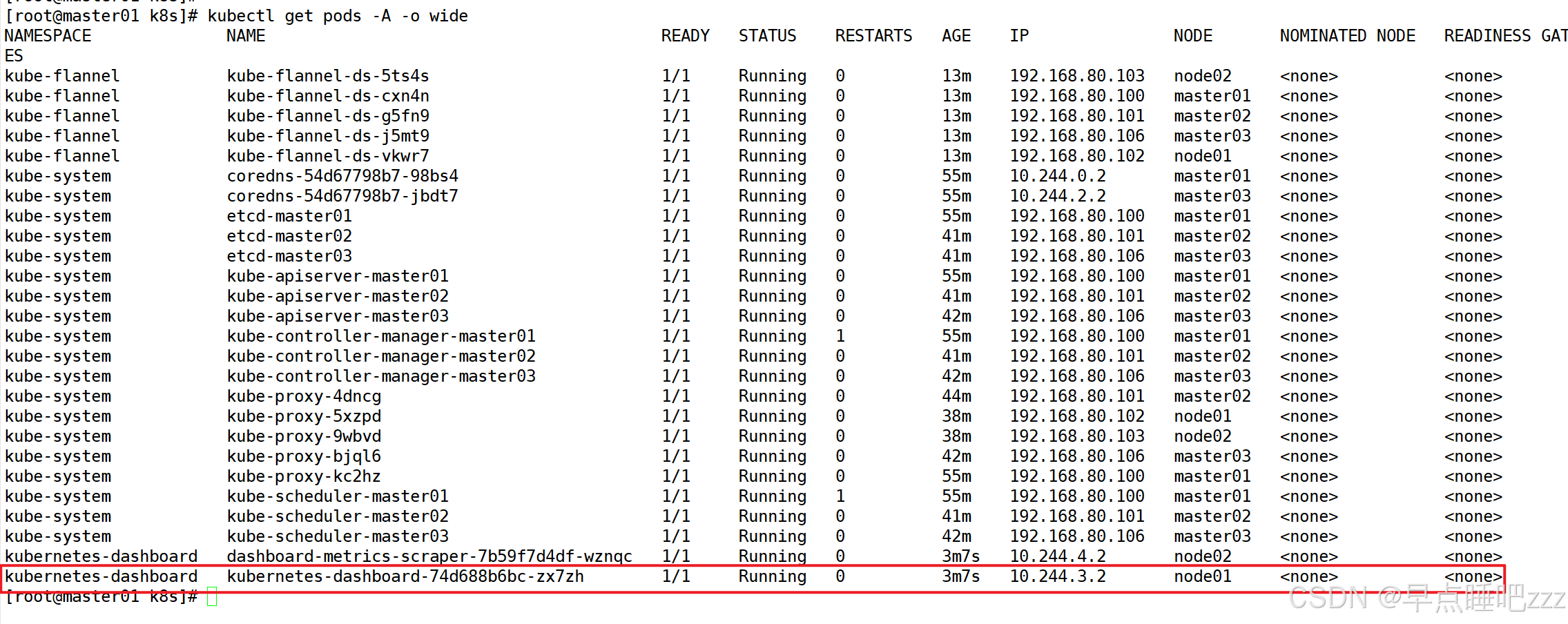

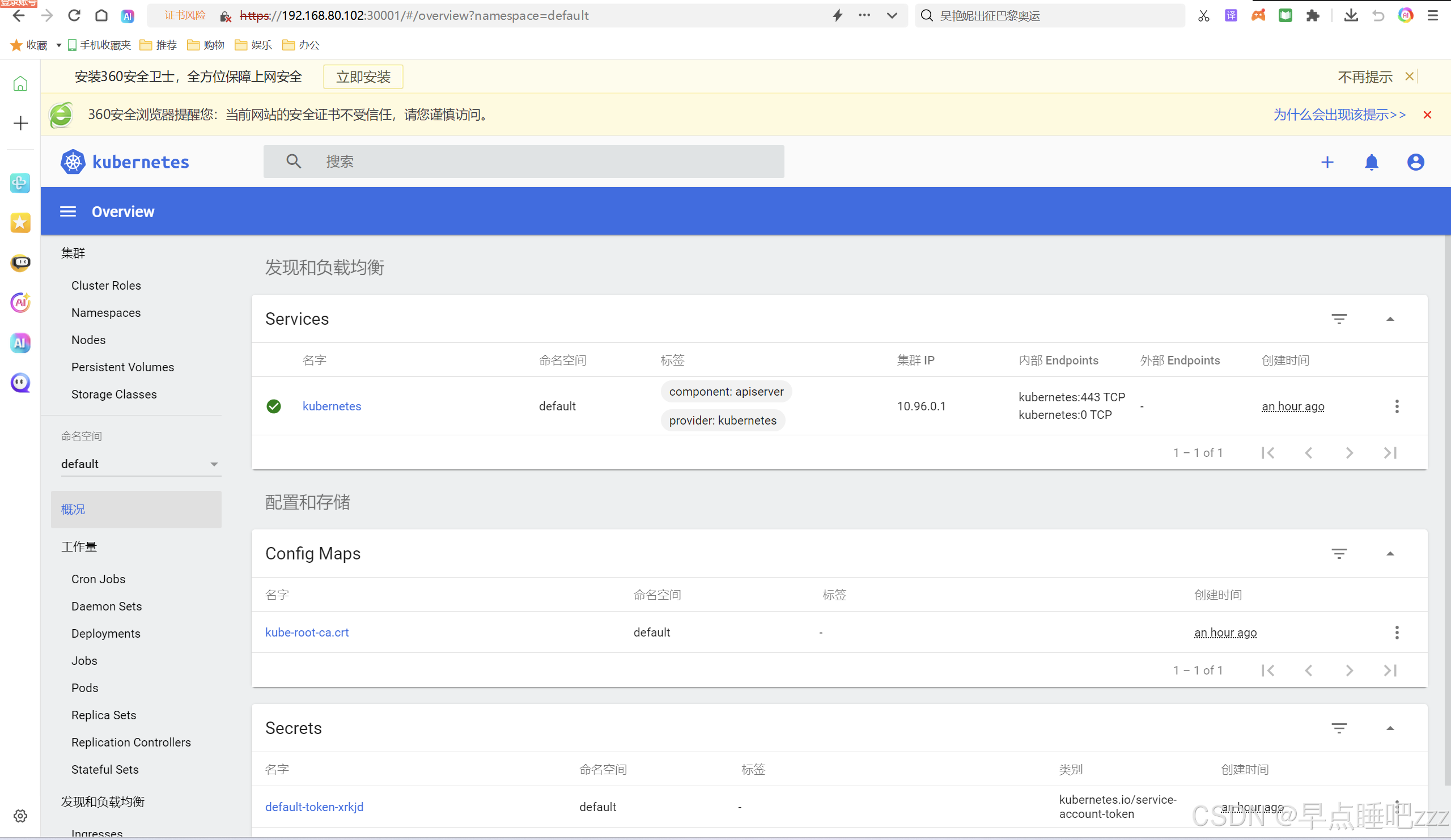

VIII. Deploy the Dashboard web UI

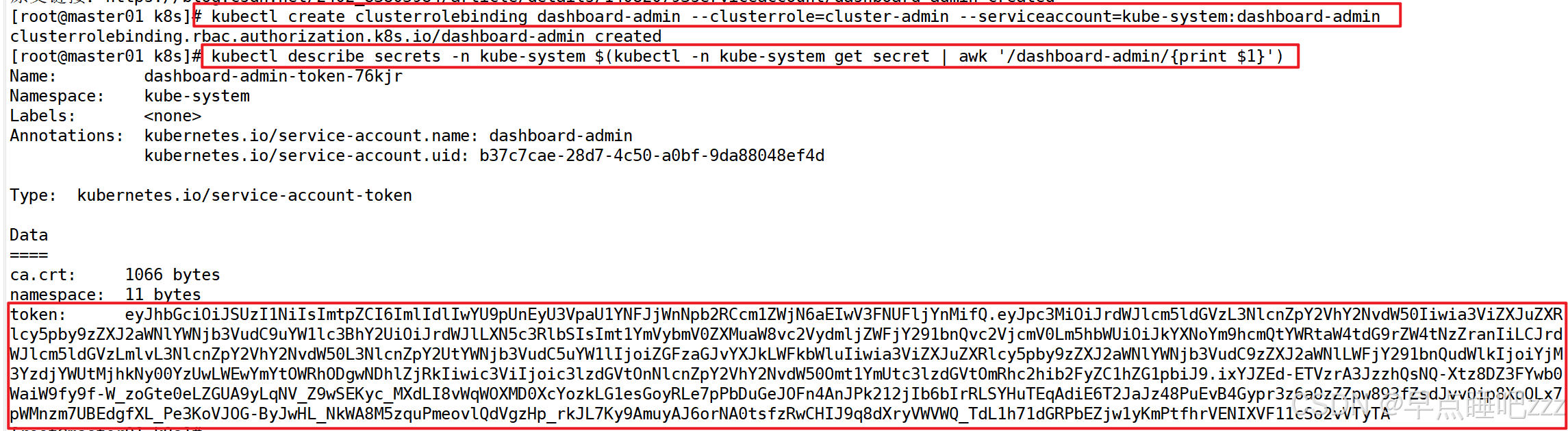

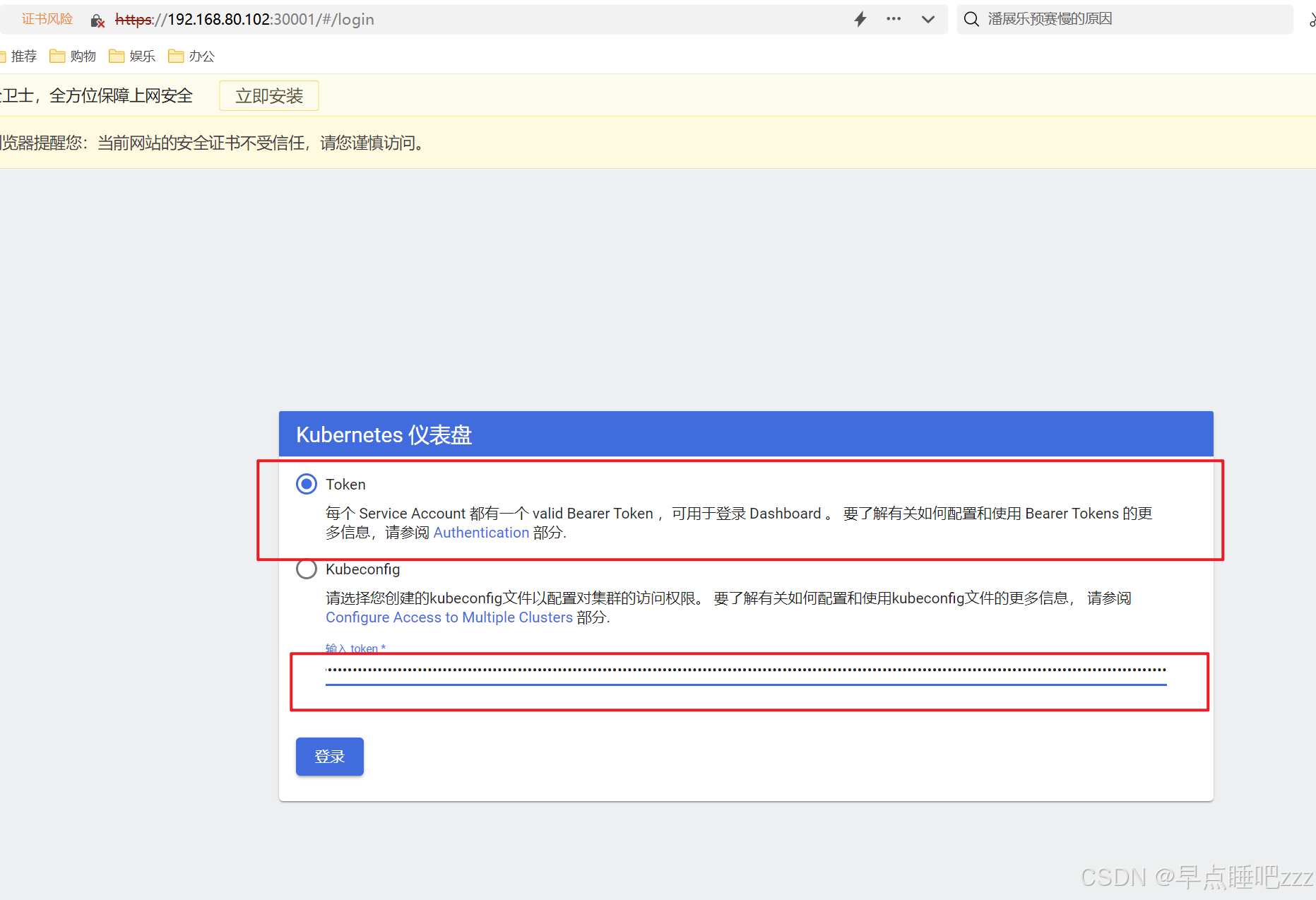

On master01, upload the recommended.yaml file to /opt/k8s, then:

    cd /opt/k8s
    kubectl apply -f recommended.yaml
    # create a service account and bind it to the built-in cluster-admin cluster role
    kubectl create serviceaccount dashboard-admin -n kube-system
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
    # show the account's token; copy it, it is needed later to log in
    kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
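`kubectl describe secrets` prints the whole Secret. A sketch that prints only the token value so it can be copied cleanly; the function name `extract_token` is hypothetical. On 1.20 a token Secret is still created automatically for the service account, and `kubectl -n kube-system get secret <name> -o jsonpath='{.data.token}' | base64 -d` is an equivalent route.

```shell
# Hypothetical helper: read `kubectl describe secrets ...` output on stdin
# and print only the value of the "token:" field.
extract_token() {
  awk '$1 == "token:" { print $2; exit }'
}
```

For example: `kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') | extract_token`.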

How do you fix components showing Unhealthy (`kubectl get cs`) in a kubeadm-deployed k8s cluster?

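    cd /etc/kubernetes/manifests
    vim kube-controller-manager.yaml
    vim kube-scheduler.yaml

On all three masters, comment out the `- --port=0` line in both files (so it reads `#- --port=0`), then restart kubelet and check again:

    systemctl restart kubelet.service
    kubectl get cs

IX. Renew the k8s certificates (extend their validity)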

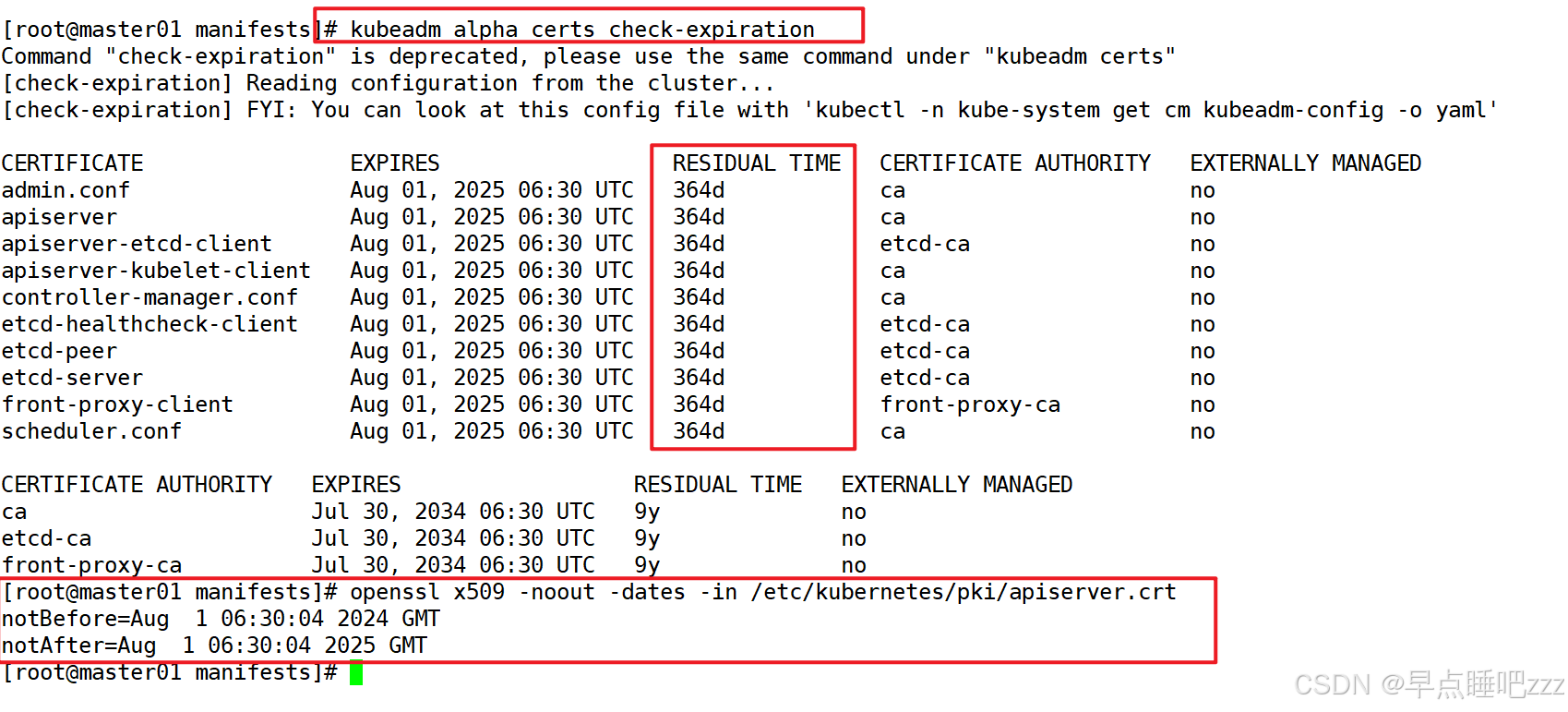

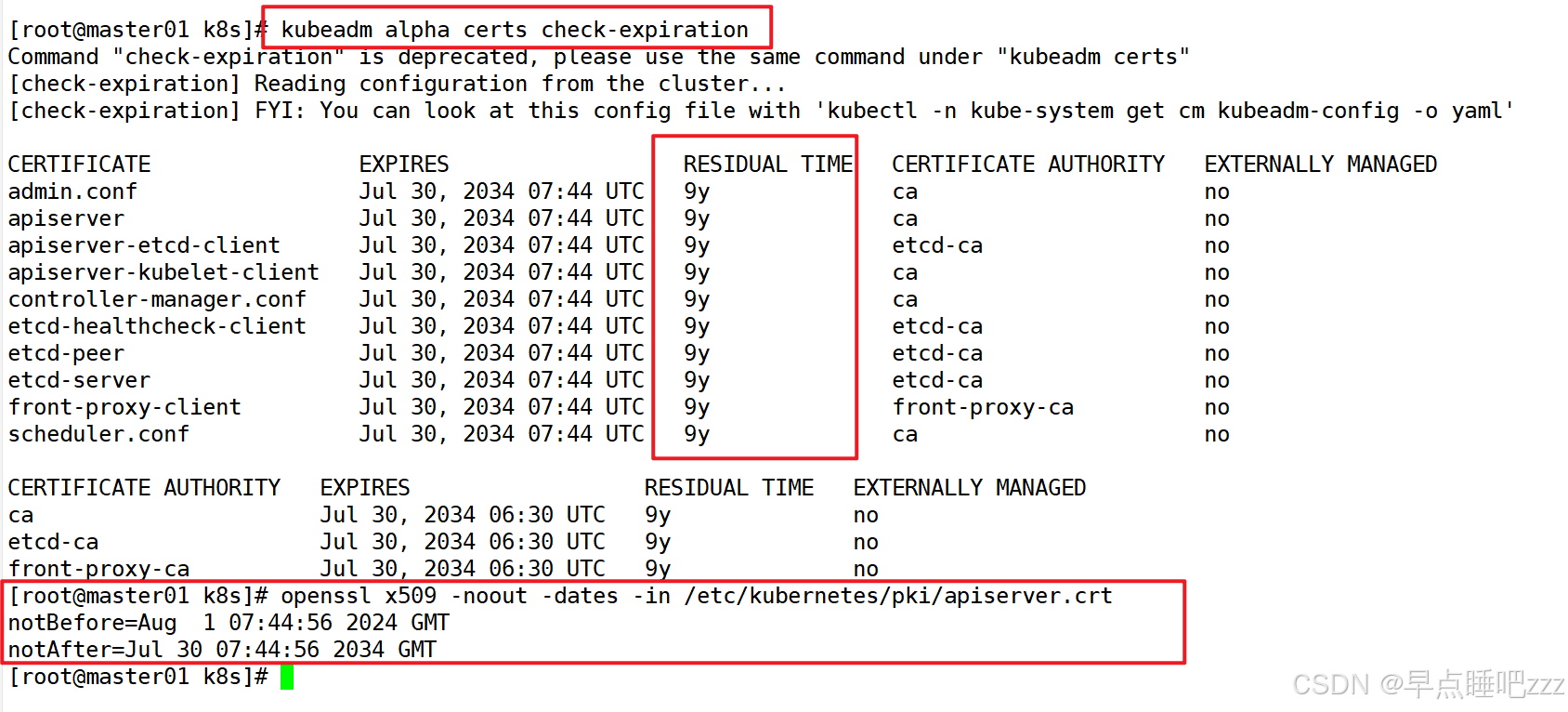

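Check how long the certificates are valid:

    kubeadm alpha certs check-expiration
    openssl x509 -noout -dates -in /etc/kubernetes/pki/apiserver.crt

On v1.20 the same subcommands are also available without `alpha`, as `kubeadm certs ...`.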
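For monitoring, it can help to turn the `notAfter` date into a number of days. A sketch, assuming GNU `date -d` can parse OpenSSL's date format (it does for the usual "Mon DD HH:MM:SS YYYY GMT" form); the function name `days_until_expiry` and its optional "now" argument are hypothetical:

```shell
# Hypothetical helper: given the output of `openssl x509 -noout -enddate`
# (e.g. "notAfter=Jan  1 00:00:00 2030 GMT"), print the whole days left
# until expiry. An optional second argument fixes "now" as epoch seconds.
days_until_expiry() {
  local enddate="${1#notAfter=}" now="${2:-$(date +%s)}" end
  end=$(date -d "$enddate" +%s) || return 1
  echo $(( (end - now) / 86400 ))
}
```

For example: `days_until_expiry "$(openssl x509 -noout -enddate -in /etc/kubernetes/pki/apiserver.crt)"`.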

Method 1: extend the certificate validity with a script

On master01:

    cd /opt/k8s/
    # (upload the update-kubeadm-cert.sh script here)
    chmod +x update-kubeadm-cert.sh
    ./update-kubeadm-cert.sh all

Method 2: renew with kubeadm

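    kubeadm alpha certs renew all --config kubeadm-config.yaml        # renew all certificates for another year
    kubeadm init phase kubeconfig all --config kubeadm-config.yaml    # regenerate the kubeconfig files

Then restart so the renewed certificates are used:

    systemctl restart kubelet

The restart method differs between binary-installed and kubeadm-installed clusters. For a kubeadm installation, restart the control-plane static Pods by moving their manifests out and, once the containers have stopped, moving them back:

    mv /etc/kubernetes/manifests/*.yaml /tmp/
    mv /tmp/*.yaml /etc/kubernetes/manifests/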
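The `mv ... /tmp/` round trip above has two pitfalls: moving the files back immediately may not give kubelet time to stop the Pods, and `mv /tmp/*.yaml` also moves any unrelated YAML files sitting in /tmp into the manifests directory. A sketch that parks the manifests in a dedicated directory and waits in between; the function name, the park directory, and the 30-second default are hypothetical choices:

```shell
# Hypothetical helper: bounce the kubeadm static Pods so they reload the
# renewed certificates. Moving the manifests out makes kubelet stop the
# control-plane Pods; moving them back makes kubelet start them again.
bounce_static_pods() {
  local manifest_dir="${MANIFEST_DIR:-/etc/kubernetes/manifests}"
  local park_dir="${PARK_DIR:-/root/k8s-manifests-parked}"
  local wait_seconds="${WAIT_SECONDS:-30}"
  mkdir -p "$park_dir" || return 1
  mv "$manifest_dir"/*.yaml "$park_dir"/ || return 1
  # Give kubelet time to notice the change and stop the Pods.
  sleep "$wait_seconds"
  mv "$park_dir"/*.yaml "$manifest_dir"/
}
```

Run it on each master in turn, then confirm the control plane came back with `kubectl get pods -n kube-system`.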