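The discounted prices, expense reimbursement and related information shown in this lab are valid only during the Arm AI Developer Experience Camp (【Arm AI 开发体验创造营】) event and expire afterward. Please check current prices before purchasing or trying the services yourself. Thanks also to Arm mentor Liliya for guidance on this blog post. Event page: https://marketing.csdn.net/p/a11ba7c4ee98e52253c8608a085424be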

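1 Preface

In conventional embedded software development, the application is first compiled on the host PC into an image that can run on the embedded chip. That image is then flashed to the development board with the appropriate programming and debugging tools, and only then can you verify that the application behaves correctly.

The traditional embedded workflow therefore depends on a physical development board. Sometimes you don't have a board yet. Sometimes you are targeting newly released processors (for example Arm® Cortex®-M55, Cortex-M85, or the Ethos™-U series NPUs) whose hardware is scarce and whose boards take a long time to obtain. Is there still a way to develop software for these platforms?

There is. It is the powerful tool this lab manual introduces: **Arm Virtual Hardware.** With the Arm Virtual Hardware platform we can build practical, interesting tools and demos that support everyday development.

Meanwhile, AI applications are emerging rapidly and the technology ecosystem keeps maturing. It seems reasonable to expect that AI will soon spread across many industries, and that using it will become easier and more widely accessible.

This article presents a project that combines Arm Virtual Hardware with speech recognition and connects to the Kimi AI large language model, delivering a new kind of intelligent voice interaction. The project is described in detail below.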

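2 Introduction to Arm Virtual Hardware

Arm Virtual Hardware (AVH) provides an Ubuntu Linux image with Arm development tools for IoT, machine learning and embedded applications. These include Arm Compiler, FVP models and other tools for Cortex-M series processors, to help developers get started quickly.

Arm Virtual Hardware is offered free of charge for a limited time for evaluation purposes, for example to evaluate automated testing in CI/CD, MLOps and DevOps workflows. To subscribe to and use this version of Arm Virtual Hardware, you must accept the free beta license terms in the product's end-user license agreement.

The figure below gives a technical overview of the Arm Virtual Hardware product. For more background, see the Arm Virtual Hardware product page and the product technical documentation.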

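3 Project Overview

3.1 Introduction

This lab builds a new intelligent voice interaction experience on Arm Virtual Hardware. It uses Baidu AI speech recognition and connects to the Kimi AI large language model.

Its core features are:

- Online AI speech recognition.

- Online AIGC text processing.

- Online AI speech synthesis.

3.2 AI Services

The core of this project is a set of cloud AI services that provide the basic AI capabilities: speech recognition, speech synthesis and AIGC text processing.

Specifically, the project uses two providers:

- Baidu's speech technology, for speech recognition and speech synthesis.
- The Kimi AI large language model, for AIGC text processing.

Each of these services is briefly introduced below.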

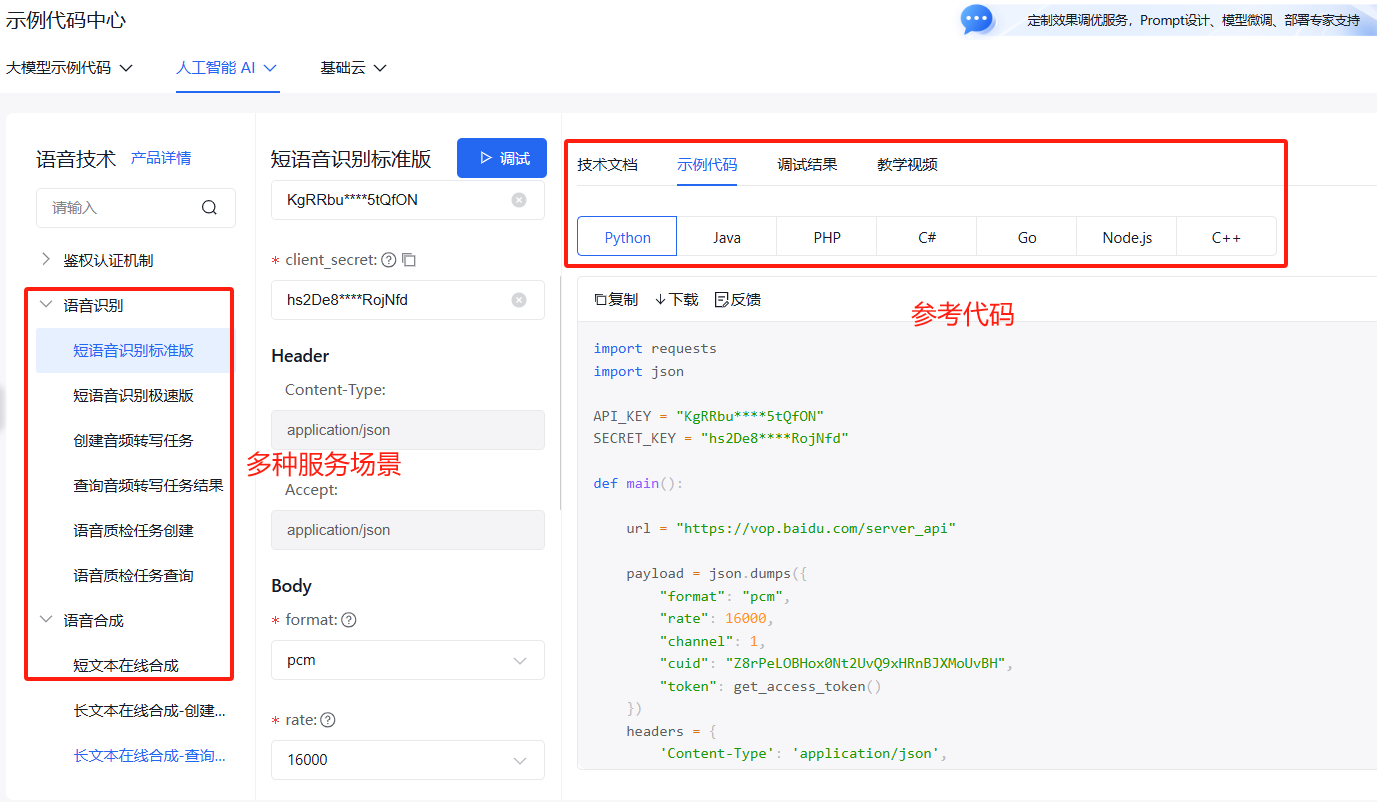

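3.2.1 Baidu Speech Technology

Baidu's speech technology services fall into three categories: speech recognition, speech synthesis and call-center speech.

Baidu offers modules for many application scenarios, listed in the table below, so you can pick whichever fits your use case. This project uses short speech recognition and long-text online synthesis.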

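| Service | Description |
|---|---|
| **Speech recognition** | Uses an industry-leading streaming end-to-end model that jointly models speech and language to transcribe speech into text quickly and accurately. Supports voice interaction in mobile apps, speech content analysis, robot dialogue and more. |
| Short speech recognition | Accurately transcribes speech clips of up to 60 seconds. Suited to short interactions such as voice input on phones, smart voice interaction, voice commands and voice search. |
| Short speech recognition (express edition) | Uses a newer decoding technique. API recognition is more than 5× faster, taking only about one tenth of the audio duration, for a smoother voice-interaction experience. |
| Real-time speech recognition | Connects over the WebSocket protocol and returns recognition results while audio is still being uploaded. Suited to long dictation, audio/video subtitles, live-stream moderation, meeting minutes and similar scenarios. |
| Audio file transcription (16 kHz) | Transcribes large batches of audio files to text asynchronously. Suited to subtitle production, batch call-quality inspection, meeting summaries and recording analysis. Results are typically returned within 12 hours. |
| EasyDL speech recognition | Lets you train a custom language model to improve recognition accuracy for your specific scenario. |
| **Speech synthesis** | Based on deep learning, provides highly human-like, natural-sounding speech synthesis with both online and offline invocation. Covers voice-broadcast needs such as reading apps, order announcements and smart hardware. |
| Short-text online synthesis | A REST API over HTTP that converts text into a playable audio file. Supports text of up to 1024 GBK bytes. |
| Long-text online synthesis | Synthesizes text of up to 100,000 characters in one request and returns the audio asynchronously. Offers several high-quality voices and turns very long text into stable, natural audio. Suited to audiobooks, news broadcasts and similar uses. |
| Offline speech synthesis | Performs text-to-speech on the device (phone apps, story machines, robots and other smart hardware) with no or weak network connectivity, giving a stable, consistent, natural synthesis experience. |
| **Call-center speech** | Consists of the call-center speech solution and call-center audio file transcription. Used for intelligent voice IVR, automated outbound calls, customer-service quality inspection and similar scenarios. |
| Audio file transcription (8 kHz) | Transcribes large batches of audio files to text asynchronously. Suited to batch call-quality inspection, meeting summaries and recording analysis. Results are typically returned within 12 hours. |
| Call-center speech solution | An MRCP server that integrates two capabilities: 8 kHz call-center speech recognition (ASR) and speech synthesis (TTS) with call-center-specific voices. |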

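Baidu provides detailed API guides and development documentation for each of these speech services, which makes them quick to integrate. See Baidu's speech technology documentation for further references.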
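Short speech recognition only accepts clips of up to 60 seconds, so it can help to check a pre-recorded file before uploading it. The minimal sketch below estimates the duration of a headerless PCM file from its size.

It rests on a few assumptions:

- The `.raw` test files are 16-bit PCM. The article does not state their format.
- The sample rate and channel count are passed in as parameters. The 16 kHz mono values in the usage note are likewise an assumption.
- The helper names are hypothetical.

The duration is rounded up so that borderline clips are rejected rather than accepted.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Baidu short speech recognition accepts clips of up to 60 seconds. */
#define SHORT_ASR_MAX_MS 60000u

/* Duration in milliseconds (rounded up) of a headerless PCM clip with
 * 16-bit samples. sample_rate and channels describe the recording; they
 * are assumptions supplied by the caller, not read from the file. */
static uint32_t pcm_duration_ms(uint32_t bytes, uint32_t sample_rate, uint32_t channels)
{
    assert(sample_rate > 0 && channels > 0);
    uint64_t bytes_per_second = (uint64_t)sample_rate * channels * 2u; /* 2 bytes per sample */
    return (uint32_t)(((uint64_t)bytes * 1000u + bytes_per_second - 1) / bytes_per_second);
}

/* True if the clip is short enough for short speech recognition. */
static bool fits_short_asr(uint32_t bytes, uint32_t sample_rate, uint32_t channels)
{
    return pcm_duration_ms(bytes, sample_rate, channels) <= SHORT_ASR_MAX_MS;
}
```

At 16 kHz, 16-bit mono, one second of audio is 32,000 bytes. The 60-second limit therefore corresponds to 1,920,000 bytes of raw audio.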

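3.2.2 Kimi AI Large Language Model

Kimi is an intelligent assistant launched on October 9, 2023 by Beijing Moonshot AI Technology Co., Ltd. (北京月之暗面科技有限公司). It is described as the world's first intelligent assistant able to take 200,000 Chinese characters of input, and closed testing of a 2-million-character lossless context has begun. Kimi at one point echoed ChatGPT's ability to move markets: its popularity sent a group of "Kimi concept stocks" soaring on the secondary market.

Kimi has six main functions:

- long-text summarization and generation
- web search
- data processing
- code writing
- user interaction
- translation

Its main use cases include translating and understanding academic papers, assisting with legal analysis, and quickly understanding API documentation.

This project mainly uses text processing in the user-interaction scenario. Building further scenarios on the same interface would be straightforward. For more about Kimi and how to use it, see its official website.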

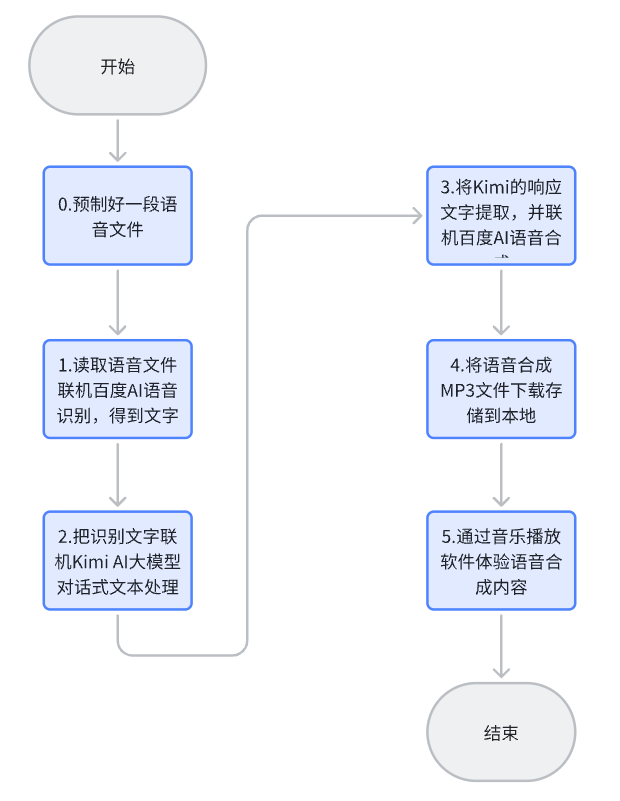

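3.3 Core Flowchart

The core flow of this project is shown in the flowchart below.

As the flowchart shows:

1. A speech file is prepared.
2. It is sent online for speech recognition, and Arm Virtual Hardware receives the recognized text locally.
3. That text is sent online to Kimi for AIGC text processing, and Arm Virtual Hardware receives the text response locally.
4. The response is sent online for speech synthesis, and Arm Virtual Hardware generates a speech file locally. An online MP3 copy is also available in the cloud.

Note that this lab runs and is debugged remotely on Arm Virtual Hardware, so it implements only the core functionality:

- Speech input comes from pre-recorded audio files rather than live capture.
- The synthesized output is a standard MP3 file that you can play with any audio player on Windows to hear the result.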

4 案例项目实现过程

4.1 Porting RT-Thread

To port RT-Thread, refer to my earlier blog post, or follow the official RT-Thread documentation center and do it yourself. The details are not repeated here.

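4.2 Porting the Remote File System

The remote file system gives programs running on Arm Virtual Hardware convenient, precise access to files stored on the host side.

To cover the file operations this project needs, we implemented the following interfaces:

- open
- close
- seek
- tell (current offset)
- read/write
- file size

Apart from the file-size helper, their usage mirrors the corresponding standard C library functions: fopen, fclose, fseek, ftell, fread and fwrite.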
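Each of these calls becomes a small JSON request to the host-side server. The field names below are taken from the rpc_fs client and the Python server in Section 5.

The sketch formats the requests with `snprintf` only to make the wire format visible. The real client builds them with cJSON and sends `cJSON_Print` output, so its whitespace differs.

A few points to keep in mind:

- The `fmt_*` helpers are hypothetical.
- Names are assumed to contain no characters that need JSON escaping.
- `fwrite` is omitted. It adds a `length` field and a hex-encoded `contents` field.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical formatters for the remote file-system requests.
 * Field names follow the rpc_fs client; strings are assumed to need no JSON escaping. */
static int fmt_fopen(char *out, size_t n, const char *name, const char *mode)
{
    assert(out != NULL && name != NULL && mode != NULL);
    return snprintf(out, n, "{\"operation\":\"fopen\",\"name\":\"%s\",\"mode\":\"%s\"}",
                    name, mode);
}

/* whence is sent as a bare number: 0 = SEEK_SET, 1 = SEEK_CUR, 2 = SEEK_END */
static int fmt_fseek(char *out, size_t n, long offset, int whence)
{
    return snprintf(out, n, "{\"operation\":\"fseek\",\"offset\":%ld,\"whence\":%d}",
                    offset, whence);
}

/* Ask the server for up to `length` bytes from the current position */
static int fmt_fread(char *out, size_t n, size_t length)
{
    return snprintf(out, n, "{\"operation\":\"fread\",\"length\":%zu}", length);
}

/* Requests without parameters: "ftell" and "fclose" */
static int fmt_simple(char *out, size_t n, const char *operation)
{
    return snprintf(out, n, "{\"operation\":\"%s\"}", operation);
}
```

`whence` travels as a plain number, and the Python server passes it straight to `file.seek()`. The scheme therefore relies on SEEK_SET/SEEK_CUR/SEEK_END having the values 0/1/2 on both sides. That holds for common C libraries and for Python's `os.SEEK_*`. The test program in Section 5 prints these three constants, presumably to confirm exactly that.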

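Section 5 shows the full implementation.

The remote file system is built mainly on the virtual socket (VSocket) interface of Arm Virtual Hardware. This interface makes it easy to integrate standard socket networking and quickly build all kinds of network functions on top of it.

With this remote file system interface, code running inside Arm Virtual Hardware can operate on files just as it would on a local file system. For example, rpc_fs_fread/rpc_fs_fwrite take the same arguments as the native fread/fwrite.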
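The requests and replies are JSON text, so the bytes moved by rpc_fs_fread/rpc_fs_fwrite cannot be embedded raw. The client hex-encodes them at two characters per byte, and the Python server uses `binascii.hexlify`/`unhexlify`.

The minimal, self-contained sketch below shows that encoding with two safeguards:

- The encoder writes a terminating NUL. The original `byte_array_to_hex_string` does not, so its caller has to add one.
- The decoder accepts both the lowercase digits `binascii.hexlify` emits and uppercase, and it rejects malformed input instead of silently producing zeros.

The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Encode n bytes as 2*n uppercase hex characters plus a terminating NUL.
 * The caller frees the result; returns NULL if allocation fails. */
static char *hex_encode(const uint8_t *data, size_t n)
{
    static const char digits[] = "0123456789ABCDEF";
    assert(data != NULL || n == 0);
    char *out = malloc(2 * n + 1);          /* +1 for the terminator */
    if (out == NULL) {
        return NULL;
    }
    for (size_t i = 0; i < n; i++) {
        out[2 * i]     = digits[data[i] >> 4];
        out[2 * i + 1] = digits[data[i] & 0x0F];
    }
    out[2 * n] = '\0';
    return out;
}

/* Value of one hex digit, or -1 if c is not a hex digit */
static int hex_value(char c)
{
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    return -1;
}

/* Decode hex_len hex characters into out (capacity cap bytes).
 * Returns the number of bytes written, or -1 on odd length,
 * an invalid digit, or insufficient capacity. */
static long hex_decode(const char *hex, size_t hex_len, uint8_t *out, size_t cap)
{
    if (hex_len % 2 != 0 || hex_len / 2 > cap) {
        return -1;
    }
    for (size_t i = 0; i < hex_len; i += 2) {
        int hi = hex_value(hex[i]);
        int lo = hex_value(hex[i + 1]);
        if (hi < 0 || lo < 0) {
            return -1;
        }
        out[i / 2] = (uint8_t)((hi << 4) | lo);
    }
    return (long)(hex_len / 2);
}
```

Hex doubles the payload. This is why the client sizes its receive buffer at roughly `exp_len * 2 + 100` bytes for an fread reply.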

This neatly solves the problem of feeding speech files into the program.

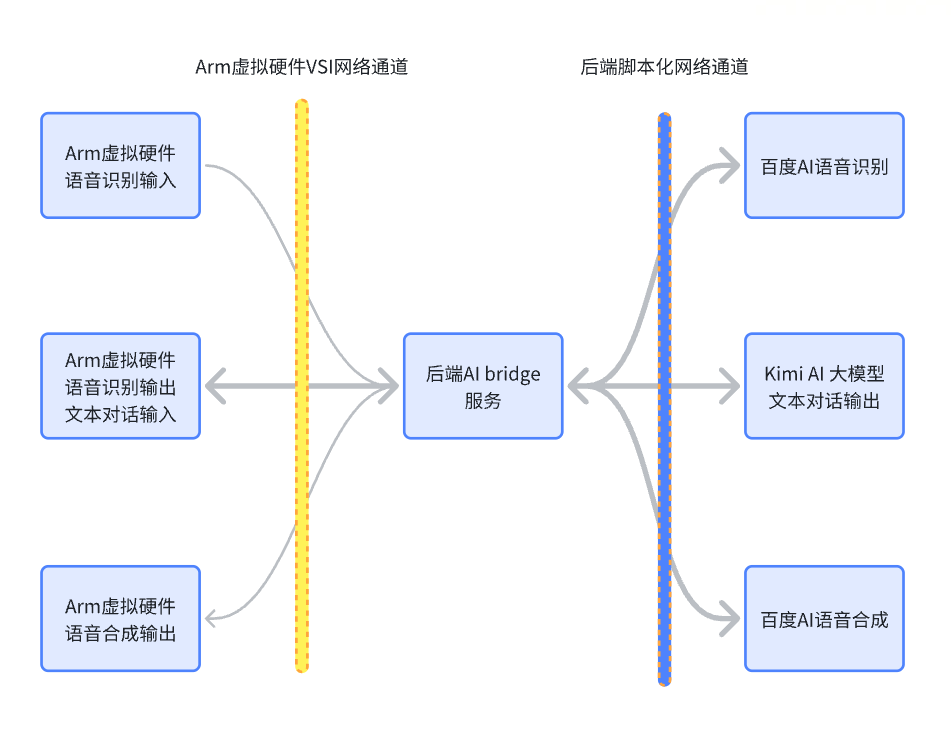

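4.3 Remote Invocation of AI Services

The AI services here are Baidu speech recognition and speech synthesis, plus Kimi text generation.

Like the remote file system, this part builds a complete service chain on top of VSI socket communication, as shown in the reference diagram below: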

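4.4 Difficulties Encountered

We ran into several difficulties along the way:

- Socket communication over VSI: the API provided by the Arm Virtual Hardware platform is not fully compatible with the BSD socket API, so there were quite a few pitfalls early on.

- Chunk size in the remote file system: because it runs over sockets, memory limits cap the length of a single read or write. Chunks that are too large exhaust memory and crash the program, while chunks that are too small turn the transfer into a bottleneck.

- Timeouts: integrating the AI services is slow because every call goes to a backend service, which makes timeout and error handling complicated.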
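For the timeout problem, one common approach on the host (BSD socket) build path is to put a receive timeout on the socket. A lost UDP reply or a stalled AI service then produces an error instead of blocking forever. The sketch below uses the POSIX `SO_RCVTIMEO` option, and the helper names are hypothetical.

On Arm Virtual Hardware, the IoT Socket API appears to offer an equivalent option (`IOT_SOCKET_SO_RCVTIMEO`, set via `iotSocketSetOpt`). Check `iot_socket.h`, and whether the VSocket implementation honors that option, before relying on it.

```c
#define _POSIX_C_SOURCE 200809L
#include <arpa/inet.h>
#include <assert.h>
#include <errno.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/time.h>
#include <unistd.h>

/* Apply a receive timeout (in milliseconds) to a socket. */
static int set_recv_timeout_ms(int sock, long ms)
{
    struct timeval tv;
    tv.tv_sec = ms / 1000;
    tv.tv_usec = (ms % 1000) * 1000;
    return setsockopt(sock, SOL_SOCKET, SO_RCVTIMEO, &tv, sizeof(tv));
}

/* Wait for one datagram and NUL-terminate it (replies are JSON text).
 * Returns its length, or -1 on error; errno is EAGAIN/EWOULDBLOCK
 * when the timeout expired without any data. */
static ssize_t recv_with_timeout(int sock, char *buf, size_t cap, long ms)
{
    assert(buf != NULL && cap > 0);
    if (set_recv_timeout_ms(sock, ms) < 0) {
        return -1;
    }
    ssize_t n = recvfrom(sock, buf, cap - 1, 0, NULL, NULL);
    if (n >= 0) {
        buf[n] = '\0';
    }
    return n;
}
```

The AI calls are slow, so a `kimi_ai` or `baidu_tts` exchange would need a much longer timeout than a remote file-system call. The exact values are a tuning choice.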

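5 Core Code

5.1 Remote File System Logic

The client side runs on Arm Virtual Hardware and is written in C. It consists of two parts: a header that declares the interface, and a source file that implements it.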

```c
/* rpc_fs.h */
#ifndef __RPC_FS_H__
#define __RPC_FS_H__

#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>

typedef int RPC_FILE;

int rpc_fs_init(void);
int rpc_fs_deinit(void);

/* FILE *fopen(const char *pathname, const char *mode); */
RPC_FILE *rpc_fs_fopen(const char *pathname, const char *mode);
/* int fseek(FILE *stream, long offset, int whence); */
int rpc_fs_fseek(RPC_FILE *stream, long offset, int whence);
/* long ftell(FILE *stream); */
long rpc_fs_ftell(RPC_FILE *stream);
/* size_t fread(void *ptr, size_t size, size_t nmemb, FILE *stream); */
size_t rpc_fs_fread(void *ptr, size_t size, size_t nmemb, RPC_FILE *stream);
/* size_t fwrite(const void *ptr, size_t size, size_t nmemb, FILE *stream); */
size_t rpc_fs_fwrite(const void *ptr, size_t size, size_t nmemb, RPC_FILE *stream);
/* int fclose(FILE *stream); */
int rpc_fs_fclose(RPC_FILE *file);
int rpc_fs_fsize(const char *file_name);

#endif
```

```c
/* rpc_fs.c */
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <stdint.h>
#include <unistd.h>
#include "cJSON.h"
#include "rpc_fs.h"

#if (CFG_AWS_IOT_SOCKET_ENABLE)
#include "iot_socket.h"
#define AF_INET                     IOT_SOCKET_AF_INET
#define SOCK_STREAM                 IOT_SOCKET_SOCK_STREAM
#define SOCK_DGRAM                  IOT_SOCKET_SOCK_DGRAM
#define socket(af, type, protocol)  iotSocketCreate(af, type, protocol)
#define listen(fd, backlog)         iotSocketListen(fd, backlog)
#define read(fd, buf, len)          iotSocketRecv(fd, buf, len)
#define write(fd, buf, len)         iotSocketSend(fd, buf, len)
#define close(fd)                   iotSocketClose(fd)
#define INET_ADDRSTRLEN             128
#else
#include <sys/socket.h>
#include <netinet/in.h>
#include <arpa/inet.h>
#endif

#define TCP_MODE        0
#define SERVER_IP       "127.0.0.1"
#define SERVER_IP_BYTES {0x7F, 0x00, 0x00, 0x01} // special form required by the AVH socket API
#if (TCP_MODE)
#define SERVER_PORT     55555
#else
#define SERVER_PORT     55556
#endif

static int g_sock = -1;
static int g_fd = 1;
#if !(CFG_AWS_IOT_SOCKET_ENABLE)
static struct sockaddr_in g_serv_addr;
#endif

int rpc_fs_init(void)
{
#if (CFG_AWS_IOT_SOCKET_ENABLE)
#if (TCP_MODE)
    if ((g_sock = iotSocketCreate(AF_INET, IOT_SOCKET_SOCK_STREAM, IOT_SOCKET_IPPROTO_TCP)) < 0) {
#else
    if ((g_sock = iotSocketCreate(AF_INET, IOT_SOCKET_SOCK_DGRAM, IOT_SOCKET_IPPROTO_UDP)) < 0) {
#endif
        return -1;
    }
#else
    // Use a stream socket in TCP mode and a datagram socket otherwise
    if ((g_sock = socket(AF_INET, (TCP_MODE) ? SOCK_STREAM : SOCK_DGRAM, 0)) < 0) {
        return -1;
    }
    memset(&g_serv_addr, 0, sizeof(g_serv_addr));
    g_serv_addr.sin_family = AF_INET;
    g_serv_addr.sin_port = htons(SERVER_PORT);
    g_serv_addr.sin_addr.s_addr = inet_addr(SERVER_IP);
#endif

#if (TCP_MODE)
#if (CFG_AWS_IOT_SOCKET_ENABLE)
    uint8_t ip_array[] = SERVER_IP_BYTES;
    uint32_t ip_len = sizeof(ip_array);
    if (iotSocketConnect(g_sock, (const uint8_t *)ip_array, ip_len, SERVER_PORT) < 0) {
        close(g_sock);
        return -1;
    }
#else
    if (connect(g_sock, (struct sockaddr *)&g_serv_addr, sizeof(g_serv_addr)) < 0) {
        close(g_sock);
        printf("connect fail\n");
        return -1;
    }
#endif
#endif
    return g_sock;
}

int rpc_fs_deinit(void)
{
    close(g_sock);
    g_sock = -1;
    return 0;
}

/* Send one JSON request and wait for the JSON reply.
 * exp_len: expected payload size in bytes (used by fread to size the receive buffer).
 * The request object `in` is always freed. Returns the parsed reply (the caller
 * frees it) when its "status" is "ok", otherwise NULL. */
cJSON *rpc_fs_cJSON_exhcange(cJSON *in, int exp_len)
{
    char *json_string = cJSON_Print(in);
    if (json_string) {
#if (DEBUG_MODE)
        if (strlen(json_string) <= 2048) {
            printf(">>> %s\n", json_string);
        }
#endif
#if (CFG_AWS_IOT_SOCKET_ENABLE)
#if (TCP_MODE)
        iotSocketSend(g_sock, json_string, strlen(json_string));
#else
        uint8_t ip_array[] = SERVER_IP_BYTES;
        uint32_t ip_len = sizeof(ip_array);
        iotSocketSendTo(g_sock, json_string, strlen(json_string),
                        (const uint8_t *)ip_array, ip_len, SERVER_PORT);
#endif
#else
#if (TCP_MODE)
        send(g_sock, json_string, strlen(json_string), 0);
#else
        sendto(g_sock, json_string, strlen(json_string), 0,
               (struct sockaddr *)&g_serv_addr, sizeof(g_serv_addr));
#endif
#endif
        free(json_string);
    }
    // Release the request object
    cJSON_Delete(in);

    char buffer[1024] = {0};
    int recv_size = sizeof(buffer);
    char *p_buffer = buffer;
    cJSON *rsp_ret = NULL;
    cJSON *rsp_root = NULL;
    cJSON *status = NULL;

    if (exp_len > recv_size) {
        // fread replies carry hex text (2 characters per byte) plus JSON overhead
        recv_size = exp_len * 2 + 100;
        p_buffer = (char *)malloc(recv_size);
        if (!p_buffer) {
            printf("error buffer\n");
            return NULL;
        }
    }

    // Leave room for the terminator so the reply can be parsed as a string
#if (CFG_AWS_IOT_SOCKET_ENABLE)
    uint8_t ip_recv[64];
    uint16_t port = 0;
    uint32_t ip_len = sizeof(ip_recv);
#if (TCP_MODE)
    int ret = iotSocketRecv(g_sock, p_buffer, recv_size - 1);
#else
    int ret = iotSocketRecvFrom(g_sock, p_buffer, recv_size - 1, ip_recv, &ip_len, &port);
#endif
#else
#if (TCP_MODE)
    int ret = recv(g_sock, p_buffer, recv_size - 1, 0);
#else
    int ret = recvfrom(g_sock, p_buffer, recv_size - 1, 0, NULL, NULL);
#endif
#endif
    if (ret < 0) {
        goto exit_entry;
    }
    p_buffer[ret] = '\0';
#if (DEBUG_MODE)
    if (p_buffer == buffer) {
        printf("<<< %s\n", p_buffer);
    }
#endif

    rsp_root = cJSON_Parse(p_buffer);
    status = cJSON_GetObjectItem(rsp_root, "status");
    if (!cJSON_IsString(status) || strcmp(status->valuestring, "ok")) {
        printf("status err: %s\n", cJSON_IsString(status) ? status->valuestring : "(invalid reply)");
        cJSON_Delete(rsp_root);
        goto exit_entry;
    }
    rsp_ret = rsp_root;

exit_entry:
    if (p_buffer != buffer) {
        free(p_buffer);
        p_buffer = NULL;
    }
    return rsp_ret;
}

/* FILE *fopen(const char *pathname, const char *mode); */
RPC_FILE *rpc_fs_fopen(const char *pathname, const char *mode)
{
    RPC_FILE *file = NULL;
    cJSON *root = cJSON_CreateObject();
    // Add the key/value pairs to the request object
    cJSON_AddItemToObject(root, "name", cJSON_CreateString(pathname));
    cJSON_AddItemToObject(root, "operation", cJSON_CreateString("fopen"));
    cJSON_AddItemToObject(root, "mode", cJSON_CreateString(mode));
    cJSON *rsp_root = rpc_fs_cJSON_exhcange(root, 0);
    if (rsp_root) {
        // The server keeps a single open file, so a fixed dummy handle is returned
        file = (RPC_FILE *)&g_fd;
        cJSON_Delete(rsp_root);
    }
    return file;
}

/* int fseek(FILE *stream, long offset, int whence); */
int rpc_fs_fseek(RPC_FILE *stream, long offset, int whence)
{
    cJSON *root = cJSON_CreateObject();
    cJSON_AddItemToObject(root, "operation", cJSON_CreateString("fseek"));
    cJSON_AddItemToObject(root, "offset", cJSON_CreateNumber(offset));
    cJSON_AddItemToObject(root, "whence", cJSON_CreateNumber(whence));
    cJSON *rsp_root = rpc_fs_cJSON_exhcange(root, 0);
    if (rsp_root) {
        cJSON_Delete(rsp_root);
        return 0;
    }
    return -1;
}

/* long ftell(FILE *stream); */
long rpc_fs_ftell(RPC_FILE *stream)
{
    long result = -1;
    cJSON *root = cJSON_CreateObject();
    cJSON_AddItemToObject(root, "operation", cJSON_CreateString("ftell"));
    cJSON *rsp_root = rpc_fs_cJSON_exhcange(root, 0);
    if (rsp_root) {
        cJSON *offset = cJSON_GetObjectItem(rsp_root, "offset");
        if (cJSON_IsNumber(offset)) {
            result = offset->valueint;  // read the value before the reply is freed
        }
        cJSON_Delete(rsp_root);
    }
    return result;
}

// Convert a single hex character to its integer value
unsigned char hex_char_to_int(char c)
{
    if (c >= '0' && c <= '9') {
        return c - '0';
    } else if (c >= 'a' && c <= 'f') {
        return c - 'a' + 10;
    } else if (c >= 'A' && c <= 'F') {
        return c - 'A' + 10;
    }
    return 0; // invalid character: default to 0
}

// Convert a hex string to a byte array
void hex_string_to_byte_array(const char *hex_string, unsigned char *byte_array)
{
    size_t len = strlen(hex_string);
    size_t i;
    for (i = 0; i + 1 < len; i += 2) {
        byte_array[i / 2] = (hex_char_to_int(hex_string[i]) << 4) +
                            hex_char_to_int(hex_string[i + 1]);
    }
}

// Expand binary data into a readable hex string of twice the length, e.g. 0x12AB --> "12AB".
// No terminator is written; the caller must add one.
void byte_array_to_hex_string(uint8_t *psIHex, int32_t iHexLen, char *psOAsc)
{
    static const char szMapTable[17] = {"0123456789ABCDEF"};
    int32_t iCnt, index;
    unsigned char ChTemp;

    for (iCnt = 0; iCnt < iHexLen; iCnt++) {
        ChTemp = (unsigned char)psIHex[iCnt];
        index = (ChTemp / 16) & 0x0F;
        psOAsc[2 * iCnt] = szMapTable[index];
        index = ChTemp & 0x0F;
        psOAsc[2 * iCnt + 1] = szMapTable[index];
    }
}

/* size_t fread(void *ptr, size_t size, size_t nmemb, FILE *stream); */
size_t rpc_fs_fread(void *ptr, size_t size, size_t nmemb, RPC_FILE *stream)
{
    int read_size = size * nmemb;
    size_t got = 0;
    cJSON *root = cJSON_CreateObject();
    cJSON_AddItemToObject(root, "operation", cJSON_CreateString("fread"));
    cJSON_AddItemToObject(root, "length", cJSON_CreateNumber(read_size));
    cJSON *rsp_root = rpc_fs_cJSON_exhcange(root, read_size);
    if (!rsp_root) {
        return 0;
    }
    cJSON *contents = cJSON_GetObjectItem(rsp_root, "contents");
    if (cJSON_IsString(contents)) {
        // Two hex characters per byte; fewer bytes may come back at end of file
        got = strlen(contents->valuestring) / 2;
        hex_string_to_byte_array(contents->valuestring, ptr);
    }
    cJSON_Delete(rsp_root);
    return size ? got / size : 0;  // like fread, count whole items
}

/* size_t fwrite(const void *ptr, size_t size, size_t nmemb, FILE *stream); */
size_t rpc_fs_fwrite(const void *ptr, size_t size, size_t nmemb, RPC_FILE *stream)
{
    int write_size = size * nmemb;
    // Two hex characters per byte, plus the string terminator
    char *contents_hex_string = malloc(write_size * 2 + 1);
    if (!contents_hex_string) {
        return 0;
    }
    byte_array_to_hex_string((uint8_t *)ptr, write_size, contents_hex_string);
    contents_hex_string[write_size * 2] = '\0';

    cJSON *root = cJSON_CreateObject();
    cJSON_AddItemToObject(root, "operation", cJSON_CreateString("fwrite"));
    cJSON_AddItemToObject(root, "contents", cJSON_CreateString(contents_hex_string));
    cJSON_AddItemToObject(root, "length", cJSON_CreateNumber(write_size));
    free(contents_hex_string);  // cJSON keeps its own copy
    cJSON *rsp_root = rpc_fs_cJSON_exhcange(root, 0);
    if (rsp_root) {
        cJSON_Delete(rsp_root);
        return nmemb;
    }
    return 0;
}

/* int fclose(FILE *stream); */
int rpc_fs_fclose(RPC_FILE *file)
{
    cJSON *root = cJSON_CreateObject();
    cJSON_AddItemToObject(root, "operation", cJSON_CreateString("fclose"));
    cJSON *rsp_root = rpc_fs_cJSON_exhcange(root, 0);
    if (rsp_root) {
        cJSON_Delete(rsp_root);
        return 0;
    }
    return -1;
}

/* File size: open, seek to the end, read the offset, close */
int rpc_fs_fsize(const char *file_name)
{
    RPC_FILE *file = rpc_fs_fopen(file_name, "rb+");
    if (!file) {
        return -1;
    }
    rpc_fs_fseek(file, 0, SEEK_END);
    int offset = (int)rpc_fs_ftell(file);
    rpc_fs_fclose(file);
    return offset;
}

#ifndef MAIN
int rpc_fs_main(int argc, const char *argv[])
#else
int main(int argc, const char *argv[])
#endif
{
    int offset = 0;
    char buffer[1024] = {0};
    const char *file_name = "123.txt";
    const char *write_request = "WRITE test.txt";
    RPC_FILE *file = NULL;

    if (argc > 1) {
        file_name = argv[1];
    }

    rpc_fs_init();
    // whence is forwarded to Python's seek(), which expects 0/1/2
    printf("%d %d %d\n", SEEK_SET, SEEK_CUR, SEEK_END);

    int size = rpc_fs_fsize("123.txt");
    printf("size: %d\n", size);
    size = rpc_fs_fsize("456.txt");
    printf("size: %d\n", size);

    file = rpc_fs_fopen(file_name, "rb+");
    if (!file) {
        printf("open %s fail\n", file_name);
        rpc_fs_deinit();
        return -1;
    }
    offset = (int)rpc_fs_ftell(file);
    printf("offset1: %d\n", offset);

    rpc_fs_fseek(file, 10, SEEK_CUR);
    offset = (int)rpc_fs_ftell(file);
    printf("offset2: %d\n", offset);
    rpc_fs_fread(buffer, 1, 10, file);
    printf("buf: %s\n", buffer);

    rpc_fs_fseek(file, 5, SEEK_SET);
    offset = (int)rpc_fs_ftell(file);
    printf("offset3: %d\n", offset);
    rpc_fs_fread(buffer, 1, 10, file);
    printf("buf: %s\n", buffer);

    rpc_fs_fseek(file, 5, SEEK_SET);
    offset = (int)rpc_fs_ftell(file);
    printf("offset4: %d\n", offset);
    rpc_fs_fwrite(write_request, 1, 10, file);

    rpc_fs_fseek(file, 5, SEEK_SET);
    offset = (int)rpc_fs_ftell(file);
    printf("offset5: %d\n", offset);
    rpc_fs_fread(buffer, 1, 10, file);
    printf("buf: %s\n", buffer);

    rpc_fs_fclose(file);
    rpc_fs_deinit();
    return 0;
}
```

On the host side, a Python script acts as the remote file system server. Reference code:

```python
import binascii
import json
import socket

debug_mode = False
tcp_mode = False

# Server IP address and port numbers
SERVER_IP_ADDRESS = "127.0.0.1"
TCP_PORT_NO = 55555
UDP_PORT_NO = 55556

# Create the server socket (UDP unless tcp_mode is enabled) and bind it
if tcp_mode:
    serverSock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server_address = (SERVER_IP_ADDRESS, TCP_PORT_NO)
else:
    serverSock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server_address = (SERVER_IP_ADDRESS, UDP_PORT_NO)
print(f"Starting up on {server_address[0]} port {server_address[1]}")
serverSock.bind(server_address)

file_fd = None

if tcp_mode:
    # Wait for the single client connection
    serverSock.listen(1)
    print("Waiting for a connection...")
    connection, client_address = serverSock.accept()
    print(client_address)

while True:
    # 65535 bytes is large enough for any UDP datagram
    if tcp_mode:
        data = connection.recv(65535)
        if not data:
            break  # client closed the connection
    else:
        data, addr = serverSock.recvfrom(65535)

    # Requests are JSON objects whose fields depend on "operation", e.g.
    #   {"operation": "fopen", "name": "test.txt", "mode": "rb+"}
    #   {"operation": "fseek", "offset": 1, "whence": 0}
    #   {"operation": "fread", "length": 10}
    #   {"operation": "fwrite", "length": 10, "contents": "<hex string>"}
    #   {"operation": "ftell"} / {"operation": "fclose"}
    try:
        parsed_data = json.loads(data)
        if debug_mode:
            print("<<<", parsed_data)
        operation = parsed_data["operation"]
        if operation == "fopen":
            file_fd = open(parsed_data["name"], parsed_data["mode"])
            rsp = {"status": "ok"}
        elif operation == "fseek":
            file_fd.seek(parsed_data["offset"], parsed_data["whence"])
            rsp = {"status": "ok"}
        elif operation == "ftell":
            rsp = {"status": "ok", "offset": file_fd.tell()}
        elif operation == "fread":
            contents = file_fd.read(parsed_data["length"])
            # Hex-encode the bytes so they can travel inside a JSON string
            rsp = {"status": "ok",
                   "contents": binascii.hexlify(contents).decode("utf-8")}
        elif operation == "fwrite":
            file_fd.write(binascii.unhexlify(parsed_data["contents"]))
            rsp = {"status": "ok"}
        elif operation == "fclose":
            file_fd.close()
            file_fd = None
            rsp = {"status": "ok"}
        else:
            rsp = {"status": "unknown operation"}
    except Exception as e:
        # Report errors as JSON too, so the client can still parse the reply
        rsp = {"status": str(e)}

    rsp_content = json.dumps(rsp)
    if debug_mode:
        print(">>> " + rsp_content)
    if tcp_mode:
        connection.sendall(rsp_content.encode("utf-8"))
    else:
        serverSock.sendto(rsp_content.encode("utf-8"), addr)
```

Project example screenshot:

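5.2 Calling Remote AI Services from the Virtual Hardware

This part relies on the VSocket feature that is unique to Arm Virtual Hardware. For details, see the Arm Virtual Hardware documentation: https://arm-software.github.io/AVH/main/simulation/html/group__arm__vsocket.html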
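One concrete difference from BSD sockets concerns how the server address is passed. `iotSocketSendTo` and `iotSocketConnect` take it as a raw byte array plus its length and a separate port, rather than a `struct sockaddr_in`. The commented `iotSocketSendTo` prototype in the code shows this.

That is why the code keeps `SERVER_IP` and a hand-written `SERVER_IP_BYTES` side by side. A small parser could derive the bytes from the string so the two cannot drift apart. `ipv4_to_bytes` below is a hypothetical helper. It uses `sscanf` rather than `inet_pton` in case the embedded C library does not provide the latter.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* Parse dotted-quad IPv4 text into the 4-byte form taken by the IoT socket
 * calls, e.g. "127.0.0.1" -> {0x7F, 0x00, 0x00, 0x01}.
 * Returns 0 on success, -1 if the text is not a plain IPv4 address. */
static int ipv4_to_bytes(const char *text, uint8_t out[4])
{
    unsigned int a, b, c, d;
    char extra;

    assert(text != NULL && out != NULL);
    /* The trailing %c must not match anything: it catches junk such as "1.2.3.4x" */
    if (sscanf(text, "%u.%u.%u.%u%c", &a, &b, &c, &d, &extra) != 4) {
        return -1;
    }
    if (a > 255 || b > 255 || c > 255 || d > 255) {
        return -1;
    }
    out[0] = (uint8_t)a;
    out[1] = (uint8_t)b;
    out[2] = (uint8_t)c;
    out[3] = (uint8_t)d;
    return 0;
}
```

In the client, this could replace the hard-coded array: fill `uint8_t ip_array[4]` with `ipv4_to_bytes(SERVER_IP, ip_array)`, then make the existing `iotSocketSendTo` call with `sizeof(ip_array)` as the length.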

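The code follows the classic client/server model: the cloud side acts as the server, and the device side acts as the client.

The core idea of the device-side AI logic is to build network capability on VSI and exchange data with the AI bridge service using a custom JSON format.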
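In `ai_bridge_baidu_stt`, the audio file is read in `MAX_READ_BLOCK_SIZE` blocks and each block is base64-encoded separately. Each block is then sent as one `baidu_stt` message carrying `cur_part`, `max_part`, `file_len`, `cur_len` and the base64 text.

Both block sizes in the code, 12 * 768 = 9216 and 24 * 1024 = 24576 bytes, are multiples of 3. Our reading, which the article does not state, is that this is what makes per-block encoding safe:

- Base64 maps every 3 input bytes to 4 characters.
- So when every block except the last is a multiple of 3 bytes, the concatenated pieces equal the base64 of the whole file.
- `=` padding then only appears at the very end.

The sketch below checks the chunk arithmetic and this property. `b64_encode` is a generic RFC 4648 encoder standing in for the project's `base64_encode_tail`, whose internals are not shown. `plan_chunks` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BASE64_LEN(len) (((len) + 2) / 3 * 4)   /* same formula as the client */

/* Standard base64 (RFC 4648) with '=' padding; out needs BASE64_LEN(n) + 1 bytes. */
static size_t b64_encode(const uint8_t *in, size_t n, char *out)
{
    static const char tbl[] =
        "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
    size_t o = 0;
    for (size_t i = 0; i < n; i += 3) {
        uint32_t v = (uint32_t)in[i] << 16;
        if (i + 1 < n) v |= (uint32_t)in[i + 1] << 8;
        if (i + 2 < n) v |= in[i + 2];
        out[o++] = tbl[(v >> 18) & 0x3F];
        out[o++] = tbl[(v >> 12) & 0x3F];
        out[o++] = (i + 1 < n) ? tbl[(v >> 6) & 0x3F] : '=';
        out[o++] = (i + 2 < n) ? tbl[v & 0x3F] : '=';
    }
    out[o] = '\0';
    return o;
}

/* How a file of `size` bytes splits into read blocks of `block` bytes */
struct chunk_plan {
    size_t cnt;       /* number of full blocks */
    size_t left;      /* bytes in the final partial block (0 if none) */
    size_t max_part;  /* total number of messages to send */
};

static struct chunk_plan plan_chunks(size_t size, size_t block)
{
    assert(block > 0);
    struct chunk_plan p;
    p.cnt = size / block;
    p.left = size % block;
    p.max_part = p.cnt + (p.left != 0);
    return p;
}
```

With the RT-Thread setting, each full block becomes BASE64_LEN(9216) = 12288 characters of base64. Together with the other JSON fields, that has to fit into a single UDP datagram.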

Device-side C code:

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <stdint.h>
#include <unistd.h>
#include "cJSON.h"
#include "rpc_fs.h"
#include "base64.h"

#if (CFG_AWS_IOT_SOCKET_ENABLE)
#include "iot_socket.h"
#define AF_INET                     IOT_SOCKET_AF_INET
#define SOCK_STREAM                 IOT_SOCKET_SOCK_STREAM
#define SOCK_DGRAM                  IOT_SOCKET_SOCK_DGRAM
#define socket(af, type, protocol)  iotSocketCreate(af, type, protocol)
#define listen(fd, backlog)         iotSocketListen(fd, backlog)
#define read(fd, buf, len)          iotSocketRecv(fd, buf, len)
#define write(fd, buf, len)         iotSocketSend(fd, buf, len)
#define close(fd)                   iotSocketClose(fd)
#define INET_ADDRSTRLEN             128
#else
#include <sys/socket.h>
#include <netinet/in.h>
#include <arpa/inet.h>
#endif

#ifndef DEBUG_MODE
#define DEBUG_MODE 0
#endif

#define TCP_MODE        0
#define SERVER_IP       "127.0.0.1"
#define SERVER_IP_BYTES {0x7F, 0x00, 0x00, 0x01} // special form required by the AVH socket API
#if (TCP_MODE)
#define SERVER_PORT     55557
#else
#define SERVER_PORT     55558
#endif

#ifdef __RTTHREAD__
#include <rtthread.h>
#define MAX_READ_BLOCK_SIZE (12 * 768)
#define MAX_SEND_BLOCK_LEN  (10 * 1024)
extern int cmd_free(int argc, char **argv);
#define malloc(n)           rt_malloc(n)
#define free(p)             rt_free(p)
#define cmd_free(argc, argv)
#else
#define cmd_free(argc, argv) // maybe it isn't the best !!!
#define MAX_READ_BLOCK_SIZE (24 * 1024)
#define MAX_SEND_BLOCK_LEN  (40 * 1024)
#endif

#define BASE64_LEN(len)             (((len) + 2) / 3 * 4)
#define MAX_READ_BLOCK_BASE64_SIZE  BASE64_LEN(MAX_READ_BLOCK_SIZE)

static int g_sock = -1;
static int g_fd = 1;
#if !(CFG_AWS_IOT_SOCKET_ENABLE)
static struct sockaddr_in g_serv_addr;
#endif

/* Debug helper: 16 bytes per row with offset, hex and ASCII columns */
int log_hexdump(const char *title, const unsigned char *data, int len)
{
    char str[160], octet[10];
    int ofs, i, k, d;
    const unsigned char *buf = (const unsigned char *)data;
    const char dimm[] = "+------------------------------------------------------------------------------+";

    printf("%s (%d bytes):\r\n", title, len);
    printf("%s\r\n", dimm);
    printf("| Offset : 00 01 02 03 04 05 06 07 08 09 0A 0B 0C 0D 0E 0F 0123456789ABCDEF |\r\n");
    printf("%s\r\n", dimm);

    for (ofs = 0; ofs < len; ofs += 16) {
        d = snprintf(str, sizeof(str), "| %08X: ", ofs);
        for (i = 0; i < 16; i++) {
            if ((i + ofs) < len) {
                snprintf(octet, sizeof(octet), "%02X ", buf[ofs + i]);
            } else {
                snprintf(octet, sizeof(octet), "   ");
            }
            d += snprintf(&str[d], sizeof(str) - d, "%s", octet);
        }
        d += snprintf(&str[d], sizeof(str) - d, " ");
        k = d;
        for (i = 0; i < 16; i++) {
            if ((i + ofs) < len) {
                str[k++] = (0x20 <= buf[ofs + i] && buf[ofs + i] <= 0x7E) ? buf[ofs + i] : '.';
            } else {
                str[k++] = ' ';
            }
        }
        str[k] = '\0';
        printf("%s |\r\n", str);
    }
    printf("%s\r\n", dimm);
    return 0;
}

/* Debug helper: print the data as one continuous hex string */
int log_hexdump2(const char *title, const unsigned char *data, int len)
{
    int i = 0;
    printf("%s: ", title);
    for (i = 0; i < len; i++) {
        printf("%02X", data[i]);
    }
    printf("\n");
    return 0;
}

static int g_ai_bridge_inited = 0;

int ai_bridge_init(void)
{
    if (g_ai_bridge_inited) {
        return 0;
    }
#if (CFG_AWS_IOT_SOCKET_ENABLE)
#if (TCP_MODE)
    if ((g_sock = iotSocketCreate(AF_INET, IOT_SOCKET_SOCK_STREAM, IOT_SOCKET_IPPROTO_TCP)) < 0) {
#else
    if ((g_sock = iotSocketCreate(AF_INET, IOT_SOCKET_SOCK_DGRAM, IOT_SOCKET_IPPROTO_UDP)) < 0) {
#endif
        return -1;
    }
#else
    // Use a stream socket in TCP mode and a datagram socket otherwise
    if ((g_sock = socket(AF_INET, (TCP_MODE) ? SOCK_STREAM : SOCK_DGRAM, 0)) < 0) {
        return -1;
    }
    memset(&g_serv_addr, 0, sizeof(g_serv_addr));
    g_serv_addr.sin_family = AF_INET;
    g_serv_addr.sin_port = htons(SERVER_PORT);
    g_serv_addr.sin_addr.s_addr = inet_addr(SERVER_IP);
#endif

#if (TCP_MODE)
#if (CFG_AWS_IOT_SOCKET_ENABLE)
    uint8_t ip_array[] = SERVER_IP_BYTES;
    uint32_t ip_len = sizeof(ip_array);
    if (iotSocketConnect(g_sock, (const uint8_t *)ip_array, ip_len, SERVER_PORT) < 0) {
        close(g_sock);
        return -1;
    }
#else
    if (connect(g_sock, (struct sockaddr *)&g_serv_addr, sizeof(g_serv_addr)) < 0) {
        close(g_sock);
        printf("connect fail\n");
        return -1;
    }
#endif
#endif
    g_ai_bridge_inited = 1;
    return g_sock;
}

int ai_bridge_deinit(void)
{
    close(g_sock);
    g_sock = -1;
    g_ai_bridge_inited = 0;
    return 0;
}

/* Send one JSON request to the AI bridge and parse its reply.
 * The request `in` is always freed. Returns the reply (the caller frees it)
 * if its "status" is "ok", otherwise NULL. */
static cJSON *ai_bridge_cJSON_exhcange(cJSON *in)
{
    char *json_string = cJSON_Print(in);
    if (json_string) {
#if (DEBUG_MODE)
        if (strlen(json_string) <= 2048) {
            printf(">>> %s\n", json_string);
        } else {
            char tmp[1024] = {0};
            memcpy(tmp, json_string, sizeof(tmp) - 1);
            printf("tmp: %s\n", tmp);
        }
#endif
#if (CFG_AWS_IOT_SOCKET_ENABLE)
#if (TCP_MODE)
        iotSocketSend(g_sock, json_string, strlen(json_string));
#else
        uint8_t ip_array[] = SERVER_IP_BYTES;
        uint32_t ip_len = sizeof(ip_array);
        iotSocketSendTo(g_sock, json_string, strlen(json_string),
                        (const uint8_t *)ip_array, ip_len, SERVER_PORT);
#endif
#else
#if (TCP_MODE)
        send(g_sock, json_string, strlen(json_string), 0);
#else
        sendto(g_sock, json_string, strlen(json_string), 0,
               (struct sockaddr *)&g_serv_addr, sizeof(g_serv_addr));
#endif
#endif
        free(json_string);
    }
    // Release the request object
    cJSON_Delete(in);

    // Leave room for the terminator so the reply can be parsed as a string
    char buffer[2048] = {0};
#if (CFG_AWS_IOT_SOCKET_ENABLE)
    uint8_t ip_recv[64] = {0};
    uint16_t port = 0;
    uint32_t ip_len = sizeof(ip_recv);
#if (TCP_MODE)
    int ret = iotSocketRecv(g_sock, buffer, sizeof(buffer) - 1);
#else
    int ret = iotSocketRecvFrom(g_sock, buffer, sizeof(buffer) - 1, ip_recv, &ip_len, &port);
#endif
#else
#if (TCP_MODE)
    int ret = recv(g_sock, buffer, sizeof(buffer) - 1, 0);
#else
    int ret = recvfrom(g_sock, buffer, sizeof(buffer) - 1, 0, NULL, NULL);
#endif
#endif
    if (ret < 0) {
        return NULL;
    }
    buffer[ret] = '\0';
#if (DEBUG_MODE)
    printf("<<< %s\n", buffer);
#endif

    cJSON *rsp_root = cJSON_Parse(buffer);
    if (!rsp_root) {
        printf("error null rsp\n");
        return NULL;
    }
    cJSON *status = cJSON_GetObjectItem(rsp_root, "status");
    if (!cJSON_IsString(status) || strcmp(status->valuestring, "ok")) {
        printf("status err: %s\n", cJSON_IsString(status) ? status->valuestring : "(missing)");
        cJSON_Delete(rsp_root);
        return NULL;
    }
    cmd_free(0, NULL);
    return rsp_root;
}

/* Speech to text: read the audio file block by block through rpc_fs,
 * base64-encode each block and send it as one "baidu_stt" message.
 * Only one block is buffered at a time; the reply to the last
 * message carries the recognized text. */
int ai_bridge_baidu_stt(const char *audio_file, char *speech_rsp)
{
    int i = 0;
    int ret = -1;
    int size, cnt, left, max_part;
    char *buffer = NULL;
    char *p = NULL;
    RPC_FILE *file = NULL;
    cJSON *root = NULL;
    cJSON *rsp_root = NULL;

    ai_bridge_init();
    rpc_fs_init();

    size = rpc_fs_fsize(audio_file);
    // Each block is encoded in place, so the buffer must hold its base64 form
    buffer = (char *)malloc(MAX_READ_BLOCK_BASE64_SIZE + 1);
    if (size <= 0 || !buffer) {
        printf("bad audio file or no enough memory !\n");
        goto cleanup;
    }
    p = buffer;

    // Full blocks plus an optional partial block
    cnt = size / MAX_READ_BLOCK_SIZE;
    left = size % MAX_READ_BLOCK_SIZE;
    max_part = (left != 0) ? cnt + 1 : cnt;
#if (DEBUG_MODE)
    printf("size: %d %d %d %d %d %d\n", size, BASE64_LEN(size), MAX_READ_BLOCK_SIZE, left, cnt, max_part);
#endif

    file = rpc_fs_fopen(audio_file, "rb+");
    if (!file) {
        goto cleanup;
    }
    for (i = 0; i < cnt; i++) {
        rpc_fs_fread(p, 1, MAX_READ_BLOCK_SIZE, file);
        cmd_free(0, NULL);
        // log_hexdump("first", (const unsigned char *)p, MAX_READ_BLOCK_SIZE);
        base64_encode_tail((const uint8_t *)p, MAX_READ_BLOCK_SIZE, p);
        *(p + BASE64_LEN(MAX_READ_BLOCK_SIZE)) = '\0';

        root = cJSON_CreateObject();
        cJSON_AddItemToObject(root, "operation", cJSON_CreateString("baidu_stt"));
        cJSON_AddItemToObject(root, "cur_part", cJSON_CreateNumber(i + 1));
        cJSON_AddItemToObject(root, "max_part", cJSON_CreateNumber(max_part));
        cJSON_AddItemToObject(root, "file_len", cJSON_CreateNumber(size));
        cJSON_AddItemToObject(root, "cur_len", cJSON_CreateNumber((int)strlen(p)));
        cJSON_AddItemToObject(root, "base64", cJSON_CreateString(p));
        rsp_root = ai_bridge_cJSON_exhcange(root);
        // Keep only the reply to the last part
        if (i + 1 != max_part) {
            cJSON_Delete(rsp_root);
            rsp_root = NULL;
        }
    }

    // The final partial block
    if (left != 0) {
        rpc_fs_fread(p, 1, left, file);
        base64_encode_tail((const uint8_t *)p, left, p);
        *(p + BASE64_LEN(left)) = '\0';

        root = cJSON_CreateObject();
        cJSON_AddItemToObject(root, "operation", cJSON_CreateString("baidu_stt"));
        cJSON_AddItemToObject(root, "cur_part", cJSON_CreateNumber(i + 1));
        cJSON_AddItemToObject(root, "max_part", cJSON_CreateNumber(max_part));
        cJSON_AddItemToObject(root, "file_len", cJSON_CreateNumber(size));
        cJSON_AddItemToObject(root, "cur_len", cJSON_CreateNumber((int)strlen(p)));
        cJSON_AddItemToObject(root, "base64", cJSON_CreateString(p));
        rsp_root = ai_bridge_cJSON_exhcange(root);
    }

    if (rsp_root) {
        cJSON *speech_rsp_obj = cJSON_GetObjectItem(rsp_root, "speech_rsp");
        if (cJSON_IsString(speech_rsp_obj)) {
            printf("text req: %s\n", speech_rsp_obj->valuestring);
            strcpy(speech_rsp, speech_rsp_obj->valuestring);
            ret = 0;
        }
        cJSON_Delete(rsp_root);
    }

cleanup:
    free(buffer);
    if (file) {
        rpc_fs_fclose(file);
    }
    rpc_fs_deinit();
    ai_bridge_deinit();
    return ret;
}

/* Variant: base64-encode the whole file first, then send the text in
 * MAX_SEND_BLOCK_LEN slices. Needs memory for the entire encoded file. */
int ai_bridge_baidu_stt_normal(const char *audio_file, char *speech_rsp)
{
    int i;
    int ret = -1;
    char *buffer = NULL;
    char *p = NULL;
    RPC_FILE *file = NULL;

    rpc_fs_init();
    int size = rpc_fs_fsize(audio_file);
    int base64_len = BASE64_LEN(size);
    buffer = (char *)malloc(base64_len + 1);
    if (size <= 0 || !buffer) {
        printf("bad audio file or no enough memory !\n");
        free(buffer);
        rpc_fs_deinit();
        return -1;
    }
    p = buffer;
    int cnt = size / MAX_READ_BLOCK_SIZE;
    int left = size % MAX_READ_BLOCK_SIZE;

    file = rpc_fs_fopen(audio_file, "rb+");
    for (i = 0; i < cnt; i++) {
        rpc_fs_fread(p, 1, MAX_READ_BLOCK_SIZE, file);
        cmd_free(0, NULL);
        base64_encode_tail((const uint8_t *)p, MAX_READ_BLOCK_SIZE, p);
        p += MAX_READ_BLOCK_BASE64_SIZE;
    }
    if (left != 0) {
        rpc_fs_fread(p, 1, left, file);
        base64_encode_tail((const uint8_t *)p, left, p);
        p += BASE64_LEN(left);
    }
    *p = '\0';  // terminate even when size is an exact multiple of the block size
    rpc_fs_fclose(file);
    rpc_fs_deinit();

    ai_bridge_init();

    // Send the encoded text in slices of MAX_SEND_BLOCK_LEN characters
    int total_len = (int)strlen(buffer);
    int max_part = 0;
    cJSON *root = NULL;
    cJSON *rsp_root = NULL;

    p = buffer;
    cnt = total_len / MAX_SEND_BLOCK_LEN;
    left = total_len % MAX_SEND_BLOCK_LEN;
    max_part = (left != 0) ? cnt + 1 : cnt;

    for (i = 0; i < cnt; i++) {
        // Temporarily terminate the slice so cJSON copies only this part
        char c_bak = *(p + MAX_SEND_BLOCK_LEN);
        *(p + MAX_SEND_BLOCK_LEN) = '\0';
        root = cJSON_CreateObject();
        cJSON_AddItemToObject(root, "operation", cJSON_CreateString("baidu_stt"));
        cJSON_AddItemToObject(root, "cur_part", cJSON_CreateNumber(i + 1));
        cJSON_AddItemToObject(root, "max_part", cJSON_CreateNumber(max_part));
        cJSON_AddItemToObject(root, "file_len", cJSON_CreateNumber(size));
        cJSON_AddItemToObject(root, "base64", cJSON_CreateString(p));
        *(p + MAX_SEND_BLOCK_LEN) = c_bak;
        p += MAX_SEND_BLOCK_LEN;
        rsp_root = ai_bridge_cJSON_exhcange(root);
        if (i + 1 != max_part) {
            cJSON_Delete(rsp_root);
            rsp_root = NULL;
        }
    }

    // The last slice is already terminated at the end of the buffer
    if (left != 0) {
        root = cJSON_CreateObject();
        cJSON_AddItemToObject(root, "operation", cJSON_CreateString("baidu_stt"));
        cJSON_AddItemToObject(root, "cur_part", cJSON_CreateNumber(i + 1));
        cJSON_AddItemToObject(root, "max_part", cJSON_CreateNumber(max_part));
        cJSON_AddItemToObject(root, "file_len", cJSON_CreateNumber(size));
        cJSON_AddItemToObject(root, "base64", cJSON_CreateString(p));
        free(buffer);  // cJSON keeps its own copy; release memory before waiting
        buffer = NULL;
        rsp_root = ai_bridge_cJSON_exhcange(root);
    }
    free(buffer);

    if (rsp_root) {
        cJSON *speech_rsp_obj = cJSON_GetObjectItem(rsp_root, "speech_rsp");
        if (cJSON_IsString(speech_rsp_obj)) {
            printf("text req: %s\n", speech_rsp_obj->valuestring);
            strcpy(speech_rsp, speech_rsp_obj->valuestring);
            ret = 0;
        }
        cJSON_Delete(rsp_root);
    }
    ai_bridge_deinit();
    return ret;
}

/* Text to speech: the bridge writes the MP3 to audio_file and returns an online URL */
int ai_bridge_baidu_tts(char *text_req, const char *audio_file, char *speech_url)
{
    cJSON *root = NULL;
    cJSON *rsp_root = NULL;
    int ret = -1;

    root = cJSON_CreateObject();
    cJSON_AddItemToObject(root, "operation", cJSON_CreateString("baidu_tts"));
    cJSON_AddItemToObject(root, "text_req", cJSON_CreateString(text_req));
    cJSON_AddItemToObject(root, "audio_file", cJSON_CreateString(audio_file));

    ai_bridge_init();
    rsp_root = ai_bridge_cJSON_exhcange(root);
    if (rsp_root) {
        cJSON *speech_url_obj = cJSON_GetObjectItem(rsp_root, "speech_url");
        if (cJSON_IsString(speech_url_obj)) {
            // Errors are reported inside the URL field as "err_msg: ..."
            char *p = strstr(speech_url_obj->valuestring, "err_msg: ");
            if (p) {
                printf("something went wrong: %s\n", p + 9);
            } else {
                printf("speech online url (copy it to your web browser, you can enjoy it): %s\n",
                       speech_url_obj->valuestring);
                printf("speech local mp3 file: %s\n", audio_file);
                strcpy(speech_url, speech_url_obj->valuestring);
                ret = 0;
            }
        }
        cJSON_Delete(rsp_root);
    }
    ai_bridge_deinit();
    return ret;
}

/* Send the recognized text to Kimi and copy back its text response */
int ai_bridge_kimi_ai(char *text_req, char *text_rsp)
{
    cJSON *root = NULL;
    cJSON *rsp_root = NULL;
    int ret = -1;

    root = cJSON_CreateObject();
    cJSON_AddItemToObject(root, "operation", cJSON_CreateString("kimi_ai"));
    cJSON_AddItemToObject(root, "text_req", cJSON_CreateString(text_req));

    ai_bridge_init();
    rsp_root = ai_bridge_cJSON_exhcange(root);
    if (rsp_root) {
        cJSON *text_rsp_obj = cJSON_GetObjectItem(rsp_root, "text_rsp");
        if (cJSON_IsString(text_rsp_obj)) {
            printf("text rsp: %s\n", text_rsp_obj->valuestring);
            strcpy(text_rsp, text_rsp_obj->valuestring);
            ret = 0;
        }
        cJSON_Delete(rsp_root);
    }
    ai_bridge_deinit();
    return ret;
}

/* STT -> Kimi -> TTS; stops at the first failing stage */
int ai_bridge_main(int argc, const char *argv[])
{
    char speech_rsp[1024] = {0};
    char ai_text_rsp[2048] = {0};
    char speech_url[1024] = {0};
    const char *audio_file_in = "123.txt";
    const char *audio_file_out = "456.txt";

    if (argc > 2) {
        audio_file_in = argv[1];
        audio_file_out = argv[2];
    } else {
        printf("error input\n");
        return -1;
    }

    printf("\n");
    printf("baidu AI STT (audio file: %s) ... please wait ...\n", audio_file_in);
    if (ai_bridge_baidu_stt(audio_file_in, speech_rsp) != 0) {
        printf("STT failed\n");
        return -1;
    }

    printf("\n");
    printf("kimi AI LLM AIGC ... please wait ...\n");
    if (ai_bridge_kimi_ai(speech_rsp, ai_text_rsp) != 0) {
        printf("Kimi request failed\n");
        return -1;
    }

    printf("\n");
    printf("baidu AI TTS ... please wait ...\n");
    if (ai_bridge_baidu_tts(ai_text_rsp, audio_file_out, speech_url) != 0) {
        return -1;
    }
    printf("\n");
    //ai_bridge_baidu_stt(audio_file_out, speech_rsp);
    return 0;
}

int main_thread_ai_test(void)
{
    //rpc_fs_main(1, NULL);
    printf("\n");
    printf("============ TEST PART 1 ============\n");
    //ai_tell_me_a_story
    const char *argv2[] = {
        "ai", "./ai/test2.raw", "./ai/test2-1.mp3",
    };
    ai_bridge_main(3, argv2);

    printf("\n");
    printf("============ TEST PART 2 ============\n");
    //ai_tell_me_a_joke
    const char *argv3[] = {
        "ai", "./ai/test3.raw", "./ai/test3-1.mp3",
    };
    ai_bridge_main(3, argv3);

    printf("exit this demo application by [CTRL + C] !!!\n");
    return 0;
}

#ifdef AI_MAIN
int main(int argc, const char *argv[])
{
    if (argc == 1) {
        main_thread_ai_test();
    } else {
        ai_bridge_main(argc, argv);
    }
    return 0;
}
#endif

#ifdef __RTTHREAD__
static void ai_tell_me_a_joke_thread(void *arg)
{
    static const char *argv_in[] = {
        "ai", "./ai/test3.raw", "./ai/test3-1.mp3",
    };
    ai_bridge_main(3, argv_in);
}

int ai_tell_me_a_joke(int argc, char **argv)
{
    // Run the whole STT -> Kimi -> TTS chain in its own thread (8 KB stack)
    rt_thread_t tid = rt_thread_create("ai+1", ai_tell_me_a_joke_thread, NULL, 8192,
                                       RT_MAIN_THREAD_PRIORITY / 2, 10);
    if (tid != RT_NULL) {
        rt_thread_startup(tid);
    }
    return 0;
}
MSH_CMD_EXPORT(ai_tell_me_a_joke, tell me a joke from kimi AI);

static void ai_tell_me_a_story_thread(void *arg)
{
    static const char *argv_in[] = {
        "ai", "./ai/test2.raw", "./ai/test2-1.mp3",
    };
    ai_bridge_main(3, argv_in);
}

int ai_tell_me_a_story(int argc, char **argv)
{
    rt_thread_t tid = rt_thread_create("ai+2", ai_tell_me_a_story_thread, NULL, 8192,
                                       RT_MAIN_THREAD_PRIORITY / 2, 10);
    if (tid != RT_NULL) {
        rt_thread_startup(tid);
    }
    return 0;
}
MSH_CMD_EXPORT(ai_tell_me_a_story, tell me a story from kimi AI);
#endif
```

Test code entry point:

```c
int ai_bridge_main(int argc, const char *argv[])
{
    char speech_rsp[1024] = {0};
    char ai_text_rsp[2048] = {0};
    char speech_url[1024] = {0};
    const char *audio_file_in = "123.txt";
    const char *audio_file_out = "456.txt";

    if (argc > 2) {
        audio_file_in = argv[1];
        audio_file_out = argv[2];
    } else {
        printf("error input\n");
        return -1;
    }

    printf("\n");
    printf("baidu AI STT (audio file: %s) ... please wait ...\n", audio_file_in);
    ai_bridge_baidu_stt(audio_file_in, speech_rsp);

    printf("\n");
    printf("kimi AI LLM AIGC ... please wait ...\n");
    ai_bridge_kimi_ai(speech_rsp, ai_text_rsp);

    printf("\n");
    printf("baidu AI TTS ... please wait ...\n");
    ai_bridge_baidu_tts(ai_text_rsp, audio_file_out, speech_url);
    printf("\n");

    //ai_bridge_baidu_stt(audio_file_out, speech_rsp);

    return 0;
}

int main_thread_ai_test(void)
{
    //rpc_fs_main(1, NULL);

    printf("\n");
    printf("============ TEST PART 1 ============\n");
    //ai_tell_me_a_story
    const char *argv2[] = {
        "ai",
        "./ai/test2.raw",
        "./ai/test2-1.mp3",
    };
    ai_bridge_main(3, argv2);

    printf("\n");
    printf("============ TEST PART 2 ============\n");
    //ai_tell_me_a_joke
    const char *argv3[] = {
        "ai",
        "./ai/test3.raw",
        "./ai/test3-1.mp3",
    };
    ai_bridge_main(3, argv3);

    printf("exit this demo application by [CTRL + C] !!!\n");

    return 0;
}
```

5.3 AI Backend Bridge Logic

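The following is the Python script that runs on the cloud server. It covers Baidu AI speech recognition and speech synthesis as well as text conversation with the Kimi AI large model; the `operation` field of each incoming JSON request determines which AI service is called.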

For the program logic diagram, see Section 4.

```python
import base64
import json
import os
import re
import socket
import time
import urllib.parse
import urllib.request

import requests
from openai import OpenAI

debug_mode = False

# Baidu AI application credentials: replace with your own API Key / Secret Key
API_KEY = "<your Baidu API Key>"
SECRET_KEY = "<your Baidu Secret Key>"


def get_access_token():
    """
    Generate an access token from the AK/SK pair.
    :return: the access_token, or the string "None" on error
    """
    url = "https://aip.baidubce.com/oauth/2.0/token"
    params = {"grant_type": "client_credentials", "client_id": API_KEY, "client_secret": SECRET_KEY}
    return str(requests.post(url, params=params).json().get("access_token"))


def get_file_content_as_base64(path, urlencoded=False):
    """
    Read a file and return its base64 encoding.
    :param path: file path
    :param urlencoded: whether to URL-encode the result
    :return: base64-encoded content
    """
    with open(path, "rb") as f:
        content = base64.b64encode(f.read()).decode("utf8")
        if urlencoded:
            content = urllib.parse.quote_plus(content)
    return content


def speech_decode_by_pcm_online(audio_data_base64, audio_file_size):
    """Baidu short speech recognition: base64 16 kHz mono PCM -> text (or the error message)."""
    url = "https://vop.baidu.com/server_api"
    # "speech" can also be produced with get_file_content_as_base64("C:\fakepath\test.pcm", False)
    payload = json.dumps({
        "format": "pcm",
        "rate": 16000,
        "channel": 1,
        "cuid": "P4Dz9FMQiJpA47ztMBc4QdWsBFeAhW55",
        "token": get_access_token(),
        "speech": audio_data_base64,
        "len": audio_file_size,  # size of the raw audio in bytes (before base64)
    })
    headers = {"Content-Type": "application/json", "Accept": "application/json"}
    result = requests.request("POST", url, headers=headers, data=payload).json()
    ret_result = result.get("err_msg") or "unknown error"
    if ret_result == "success.":
        ret_result = result["result"][0]
    if debug_mode:
        print(ret_result)
    return ret_result


def speech_decode_by_file_online(audio_file):
    # read the speech file, convert it to base64, and recognize it
    audio_data_base64 = get_file_content_as_base64(audio_file)
    return speech_decode_by_pcm_online(audio_data_base64, os.stat(audio_file).st_size)


def baidu_tts(text_req, audio_file):
    """Short-text synthesis (kept for reference; the server loop uses baidu_tts_plus)."""
    url = "https://tsn.baidu.com/text2audio"
    payload = ("tex=" + urllib.parse.quote_plus(text_req) + "&tok=" + get_access_token()
               + "&cuid=DfV47ziDDbsSE6a5nUarA4BDZGuXzrQB&ctp=1&lan=zh&spd=5&pit=5&vol=5&per=1&aue=4")
    headers = {"Content-Type": "application/x-www-form-urlencoded", "Accept": "*/*"}
    response = requests.request("POST", url, headers=headers, data=payload)
    if debug_mode:
        print(payload)
        print(response)
    if response:
        # the body is binary audio, so write bytes rather than text
        with open(audio_file, "wb") as f:
            f.write(response.content)


def baidu_tts_plus_query(task_id):
    """Query a long-text synthesis task: speech URL on success, error text on failure, None while running."""
    url = "https://aip.baidubce.com/rpc/2.0/tts/v1/query?access_token=" + get_access_token()
    payload = json.dumps({"task_ids": [task_id]})
    headers = {"Content-Type": "application/json", "Accept": "application/json"}
    response = requests.request("POST", url, headers=headers, data=payload)
    if debug_mode:
        print(response.encoding)
        print(response.text)
    task_info = response.json()["tasks_info"][0]
    task_status = task_info["task_status"]
    if task_status == "Success":
        return task_info["task_result"]["speech_url"]
    if task_status == "Failure":
        err_msg = task_info["task_result"]["err_msg"]
        # the error text embeds a JSON object; extract it and return its "errmsg" field
        match = re.search(r"\{.*?\}", err_msg)
        if match is None:
            return err_msg
        try:
            return json.loads(match.group(0))["errmsg"]
        except (json.JSONDecodeError, KeyError) as e:
            print("Error decoding JSON:", e)
            return None
    return None  # task still running


def baidu_tts_plus(text_req, audio_file, max_cnt=20):
    """Long-text synthesis: create a task, poll it, download the MP3; return its URL or 'err_msg: ...'."""
    url = "https://aip.baidubce.com/rpc/2.0/tts/v1/create?access_token=" + get_access_token()
    payload = json.dumps({
        "text": text_req,
        "format": "mp3-16k",
        "voice": 0,
        "lang": "zh",
        "speed": 5,
        "pitch": 5,
        "volume": 5,
        "enable_subtitle": 0,
    })
    headers = {"Content-Type": "application/json", "Accept": "application/json"}
    if debug_mode:
        print(url)
    rsp_json = requests.request("POST", url, headers=headers, data=payload).json()
    if debug_mode:
        print(rsp_json)
    if "error_msg" in rsp_json:
        return "err_msg: " + rsp_json["error_msg"]

    task_id = rsp_json["task_id"]
    for _ in range(max_cnt):  # poll at most max_cnt times, about 1 s apart
        url = baidu_tts_plus_query(task_id)
        if url is None:
            time.sleep(1)
            continue
        if not url.startswith(("http://", "https://")):
            # same prefix the C client searches for with strstr()
            return "err_msg: " + url
        try:
            data = urllib.request.urlopen(url).read()
            with open(audio_file, "wb") as code:
                code.write(data)
            return url
        except Exception as e:
            print(e)  # download failed; retry within the same budget
            time.sleep(1)
    return "err_msg: query response timeout"


# Kimi (Moonshot AI) offers an OpenAI-compatible API; replace api_key with your own key
client = OpenAI(
    api_key="<your Moonshot API key>",
    base_url="https://api.moonshot.cn/v1",
)


def ai_get_answer_from_kimi(message):
    try:
        completion = client.chat.completions.create(
            model="moonshot-v1-8k",
            messages=[{"role": "user", "content": message}],
            temperature=0.3,
        )
        answer = completion.choices[0].message.content
        if debug_mode:
            print("*" * 30)
            print(answer)
        return answer
    except Exception as e:
        print(e)
        return "Error response now !"


tcp_mode = False

# IP address and ports the server listens on
SERVER_IP_ADDRESS = "127.0.0.1"
TCP_PORT_NO = 55557
UDP_PORT_NO = 55558

if tcp_mode:
    serverSock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server_address = (SERVER_IP_ADDRESS, TCP_PORT_NO)
    print(f"Starting up on {server_address[0]} port {server_address[1]}")
    serverSock.bind(server_address)
    # wait for a single incoming connection
    serverSock.listen(1)
    print("Waiting for a connection...")
    connection, client_address = serverSock.accept()
    print(connection)
    print(client_address)
else:
    # create a UDP socket and bind it to the given IP address and port
    serverSock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    print(f"Starting up on {SERVER_IP_ADDRESS} port {UDP_PORT_NO}")
    serverSock.bind((SERVER_IP_ADDRESS, UDP_PORT_NO))

base64_data = ""
while True:
    if tcp_mode:
        data = connection.recv(102400)
    else:
        data, addr = serverSock.recvfrom(102400)

    try:
        # request example: {"operation": "kimi_ai", "text_req": "..."}
        parsed_data = json.loads(data)
        if debug_mode:
            print("<<<", end=" ")
            print(parsed_data)
        operation = parsed_data["operation"]
        if operation == "baidu_stt":
            cur_part = parsed_data["cur_part"]
            max_part = parsed_data["max_part"]
            if cur_part == 1:
                base64_data = ""  # first part: start a new audio buffer
            base64_data += parsed_data["base64"]
            if cur_part != max_part:
                rsp = {"status": "ok"}
            else:
                text_result = speech_decode_by_pcm_online(base64_data, parsed_data["file_len"])
                rsp = {"status": "ok", "speech_rsp": text_result}
        elif operation == "kimi_ai":
            text_rsp = ai_get_answer_from_kimi(parsed_data["text_req"])
            rsp = {"status": "ok", "text_rsp": text_rsp}
        elif operation == "baidu_tts":
            speech_url = baidu_tts_plus(parsed_data["text_req"], parsed_data["audio_file"])
            rsp = {"status": "ok", "speech_url": speech_url}
        else:
            rsp = {"status": "unknown operation"}
    except Exception as e:
        rsp = {"status": str(e)}

    # json.dumps escapes quotes and newlines in the AI output, so the C side always gets valid JSON
    rsp_content = json.dumps(rsp, ensure_ascii=False)
    if debug_mode:
        print(">>> " + rsp_content)
    if tcp_mode:
        connection.sendall(rsp_content.encode("utf-8"))
    else:
        serverSock.sendto(rsp_content.encode("utf-8"), addr)
    if debug_mode:
        print("")
```

Readers interested in the full source code can refer to the repository address given at the end of this article.

6 Functional Testing

6.1 Building the Project

This project is built with Makefile + cbuild; the top-level Makefile wraps the individual operations.

Run `make help` to print the help message, which shows at a glance which commands are available:

```
ubuntu@arm-43ecd4886e664dffa086d3a69133a5e5:~/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI$ make help
make help -> Show this help msg.
make install -> Install python third-party package
make build -> Build thie demo.
make clean -> Clean object files.
make run -> Run this demo.
make debug -> Build & run this demo.
make all -> Source & clean & build & run all together.
make stop -> Stop to run this demo.
```

Run `make clean` to remove the intermediate build files, then `make build` to compile the project. The few compiler warnings can be ignored:

```
ubuntu@arm-43ecd4886e664dffa086d3a69133a5e5:~/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI$ make clean
Clean ...
rm -rf ./Objects/
rm -rf out
ubuntu@arm-43ecd4886e664dffa086d3a69133a5e5:~/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI$ make build
Building ...
cbuild --packs ./AVH-FVP_MPS2_Cortex-M85.cprj
info cbuild: Build Invocation 1.2.0 (C) 2022 ARM
(cbuildgen): Build Process Manager 1.3.0 (C) 2022 Arm Ltd. and Contributors
M650: Command completed successfully.
(cbuildgen): Build Process Manager 1.3.0 (C) 2022 Arm Ltd. and Contributors
M652: Generated file for project build: '/home/ubuntu/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI/Objects/CMakeLists.txt'
-- The ASM compiler identification is ARMClang
-- Found assembler: /opt/armcompiler/bin/armclang
-- Configuring done
-- Generating done
-- Build files have been written to: /home/ubuntu/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI/Objects
[59/59] Linking C executable image.axf
"/home/ubuntu/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI/RTE/Device/ARMCM85/ARMCM85_ac6.sct", line 31 (column 7): Warning: L6314W: No section matches pattern *(.bss.noinit).
Program Size: Code=54510 RO-data=6154 RW-data=512 ZI-data=233004
Finished: 0 information, 1 warning and 0 error messages.
info cbuild: build finished successfully!
```

At this point, the axf file for the Arm platform has been built.
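The `Program Size` line printed by the linker can be turned into a rough memory budget. A minimal sketch, assuming the usual armlink reading of these fields: flash holds code, read-only data and the initial values of RW data, while RAM holds RW data plus zero-initialized (ZI) data. The helper `memory_footprint` is hypothetical, and the flash figure is an estimate, since armlink may compress the RW initial values.

```python
import re


def memory_footprint(program_size_line):
    """Estimate flash and RAM usage from armlink's 'Program Size' summary line."""
    fields = {name: int(value)
              for name, value in re.findall(r"([A-Za-z-]+)=(\d+)", program_size_line)}
    # Flash: code + read-only data + initial values of RW data (copied to RAM at startup)
    flash = fields["Code"] + fields["RO-data"] + fields["RW-data"]
    # RAM: initialized RW data + zero-initialized data
    ram = fields["RW-data"] + fields["ZI-data"]
    return {"flash_bytes": flash, "ram_bytes": ram}


print(memory_footprint("Program Size: Code=54510 RO-data=6154 RW-data=512 ZI-data=233004"))
# {'flash_bytes': 61176, 'ram_bytes': 233516}
```

For this build that is roughly 60 KB of flash and 228 KB of RAM, with the large ZI figure dominating the RAM side.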

6.2 Verifying the Complete Experiment Flow

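Because this experiment also runs a backend Python script, some Python dependencies must be installed before running the demo. Run `make install` in the console to install them, as in the following example: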

```
ubuntu@arm-43ecd4886e664dffa086d3a69133a5e5:~/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI$ make install
Installing ...
Requirement already satisfied: python-matrix-runner~=1.0 in /home/ubuntu/.local/lib/python3.8/site-packages (from -r requirements.txt (line 5)) (1.1.0)
Requirement already satisfied: junit-xml~=1.9 in /home/ubuntu/.local/lib/python3.8/site-packages (from -r requirements.txt (line 6)) (1.9)
Collecting openai~=1.35.3
  Using cached openai-1.35.5-py3-none-any.whl (327 kB)
Requirement already satisfied: lxml~=4.6 in /home/ubuntu/.local/lib/python3.8/site-packages (from python-matrix-runner~=1.0->-r requirements.txt (line 5)) (4.9.4)
Requirement already satisfied: ansicolors~=1.1 in /home/ubuntu/.local/lib/python3.8/site-packages (from python-matrix-runner~=1.0->-r requirements.txt (line 5)) (1.1.8)
Requirement already satisfied: psutil~=5.8 in /home/ubuntu/.local/lib/python3.8/site-packages (from python-matrix-runner~=1.0->-r requirements.txt (line 5)) (5.9.8)
Requirement already satisfied: junitparser~=2.2 in /home/ubuntu/.local/lib/python3.8/site-packages (from python-matrix-runner~=1.0->-r requirements.txt (line 5)) (2.8.0)
Requirement already satisfied: colorama~=0.4 in /usr/lib/python3/dist-packages (from python-matrix-runner~=1.0->-r requirements.txt (line 5)) (0.4.3)
Requirement already satisfied: colorlog~=6.6 in /home/ubuntu/.local/lib/python3.8/site-packages (from python-matrix-runner~=1.0->-r requirements.txt (line 5)) (6.8.2)
Requirement already satisfied: allpairspy~=2.5 in /home/ubuntu/.local/lib/python3.8/site-packages (from python-matrix-runner~=1.0->-r requirements.txt (line 5)) (2.5.1)
Requirement already satisfied: filelock~=3.4 in /home/ubuntu/.local/lib/python3.8/site-packages (from python-matrix-runner~=1.0->-r requirements.txt (line 5)) (3.13.3)
Requirement already satisfied: parameterized~=0.8 in /home/ubuntu/.local/lib/python3.8/site-packages (from python-matrix-runner~=1.0->-r requirements.txt (line 5)) (0.9.0)
Requirement already satisfied: tabulate~=0.8 in /home/ubuntu/.local/lib/python3.8/site-packages (from python-matrix-runner~=1.0->-r requirements.txt (line 5)) (0.9.0)
Requirement already satisfied: six in /usr/lib/python3/dist-packages (from junit-xml~=1.9->-r requirements.txt (line 6)) (1.14.0)
Requirement already satisfied: anyio<5,>=3.5.0 in /home/ubuntu/.local/lib/python3.8/site-packages (from openai~=1.35.3->-r requirements.txt (line 7)) (4.3.0)
Requirement already satisfied: typing-extensions<5,>=4.7 in /home/ubuntu/.local/lib/python3.8/site-packages (from openai~=1.35.3->-r requirements.txt (line 7)) (4.11.0)
Requirement already satisfied: httpx<1,>=0.23.0 in /home/ubuntu/.local/lib/python3.8/site-packages (from openai~=1.35.3->-r requirements.txt (line 7)) (0.27.0)
Requirement already satisfied: distro<2,>=1.7.0 in /home/ubuntu/.local/lib/python3.8/site-packages (from openai~=1.35.3->-r requirements.txt (line 7)) (1.9.0)
Requirement already satisfied: pydantic<3,>=1.9.0 in /home/ubuntu/.local/lib/python3.8/site-packages (from openai~=1.35.3->-r requirements.txt (line 7)) (2.7.4)
Requirement already satisfied: tqdm>4 in /usr/local/lib/python3.8/dist-packages (from openai~=1.35.3->-r requirements.txt (line 7)) (4.66.4)
Requirement already satisfied: sniffio in /home/ubuntu/.local/lib/python3.8/site-packages (from openai~=1.35.3->-r requirements.txt (line 7)) (1.3.1)
Requirement already satisfied: future in /home/ubuntu/.local/lib/python3.8/site-packages (from junitparser~=2.2->python-matrix-runner~=1.0->-r requirements.txt (line 5)) (1.0.0)
Requirement already satisfied: idna>=2.8 in /usr/lib/python3/dist-packages (from anyio<5,>=3.5.0->openai~=1.35.3->-r requirements.txt (line 7)) (2.8)
Requirement already satisfied: exceptiongroup>=1.0.2; python_version < "3.11" in /home/ubuntu/.local/lib/python3.8/site-packages (from anyio<5,>=3.5.0->openai~=1.35.3->-r requirements.txt (line 7)) (1.2.1)
Requirement already satisfied: certifi in /usr/lib/python3/dist-packages (from httpx<1,>=0.23.0->openai~=1.35.3->-r requirements.txt (line 7)) (2019.11.28)
Requirement already satisfied: httpcore==1.* in /home/ubuntu/.local/lib/python3.8/site-packages (from httpx<1,>=0.23.0->openai~=1.35.3->-r requirements.txt (line 7)) (1.0.5)
Requirement already satisfied: pydantic-core==2.18.4 in /home/ubuntu/.local/lib/python3.8/site-packages (from pydantic<3,>=1.9.0->openai~=1.35.3->-r requirements.txt (line 7)) (2.18.4)
Requirement already satisfied: annotated-types>=0.4.0 in /home/ubuntu/.local/lib/python3.8/site-packages (from pydantic<3,>=1.9.0->openai~=1.35.3->-r requirements.txt (line 7)) (0.7.0)
Requirement already satisfied: h11<0.15,>=0.13 in /home/ubuntu/.local/lib/python3.8/site-packages (from httpcore==1.*->httpx<1,>=0.23.0->openai~=1.35.3->-r requirements.txt (line 7)) (0.14.0)
Installing collected packages: openai
Successfully installed openai-1.35.5
```

Run `make run` to launch the freshly built axf file:

```
ubuntu@arm-43ecd4886e664dffa086d3a69133a5e5:~/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI$ make run
Running ...
make[1]: Entering directory '/home/ubuntu/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI'
make[1]: Leaving directory '/home/ubuntu/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI'
python rpc_fs/rpc_fs_server.py & python ai/ai_bridge_server.py & /opt/VHT/bin/FVP_MPS2_Cortex-M85 --stat --simlimit 80000000 -f ./vht_config.txt out/image.axf
Starting up on 127.0.0.1 port 55556
telnetterminal2: Listening for serial connection on port 5000
telnetterminal1: Listening for serial connection on port 5001
telnetterminal0: Listening for serial connection on port 5002
Starting up on 127.0.0.1 port 55558

 \ | /
- RT -     Thread Operating System
 / | \     5.2.0 build Jun 26 2024 07:23:00
 2006 - 2024 Copyright by RT-Thread team
```

If the following message appears after startup, the Python dependencies need to be reinstalled (run `make install` again):

```
ubuntu@arm-43ecd4886e664dffa086d3a69133a5e5:~/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI$ make run
Running ...
make[1]: Entering directory '/home/ubuntu/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI'
make[1]: Leaving directory '/home/ubuntu/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI'
python rpc_fs/rpc_fs_server.py & python ai/ai_bridge_server.py & /opt/VHT/bin/FVP_MPS2_Cortex-M85 --stat --simlimit 80000000 -f ./vht_config.txt out/image.axf
Starting up on 127.0.0.1 port 55556
Traceback (most recent call last):
  File "ai/ai_bridge_server.py", line 248, in <module>
    import openai
ModuleNotFoundError: No module named 'openai'
```

The core command that runs the demo above is `/opt/VHT/bin/FVP_MPS2_Cortex-M85 --stat --simlimit 80000000 -f ./vht_config.txt out/image.axf`.

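To help developers understand it, here is a brief explanation. The command invokes FVP_MPS2_Cortex-M85, the FVP model of the Cortex-M85 processor included in the Arm Virtual Hardware image. The FVP model simulates a hardware development board, so an executable that would normally run on a physical Arm board runs directly on the cloud server. The parameters are explained below: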

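- `FVP_MPS2_Cortex-M85`: the name of the Cortex-M85 FVP model being invoked.
- `--stat`: print run statistics when the simulation stops.
- `--simlimit 80000000`: the upper limit of the simulation, 80000000 seconds of simulated time; if the user does not exit manually, the simulation stops automatically once this limit is reached.
- `out/image.axf`: the executable that contains the AI application described in this case.
- `-f ./vht_config.txt`: the configuration file the FVP model uses at runtime.

Run `FVP_MPS2_Cortex-M85 -l` to list all configurable parameters of the Cortex-M85 FVP model together with their default (initial) values. Users can adjust these parameters as needed to obtain different application behavior.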
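When the model is launched from a Python test script rather than from the Makefile, it helps to build the command line as an argument list so each parameter above stays explicit. A minimal sketch: `build_fvp_command` and `run_fvp` are hypothetical helpers, and the only flags used are the ones explained above (`--stat`, `--simlimit`, `-f`); `run_fvp` only works inside the Arm Virtual Hardware image where the model binary exists.

```python
import shlex
import subprocess

FVP_MODEL = "/opt/VHT/bin/FVP_MPS2_Cortex-M85"


def build_fvp_command(axf="out/image.axf", config="./vht_config.txt",
                      simlimit=80000000, stat=True, model=FVP_MODEL):
    """Assemble the FVP command line used by `make run` as an argument list."""
    cmd = [model]
    if stat:
        cmd.append("--stat")                  # print statistics when the simulation stops
    if simlimit is not None:
        cmd += ["--simlimit", str(simlimit)]  # stop after this many simulated seconds
    cmd += ["-f", config]                     # model configuration file
    cmd.append(axf)                           # application image to load
    return cmd


def run_fvp(**kwargs):
    """Launch the model (requires the Arm Virtual Hardware image)."""
    return subprocess.run(build_fvp_command(**kwargs), check=False)


print(shlex.join(build_fvp_command()))
# /opt/VHT/bin/FVP_MPS2_Cortex-M85 --stat --simlimit 80000000 -f ./vht_config.txt out/image.axf
```

Passing a list to `subprocess.run` avoids shell quoting issues; `shlex.join` (Python 3.8+) is used here only to print the equivalent shell command.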

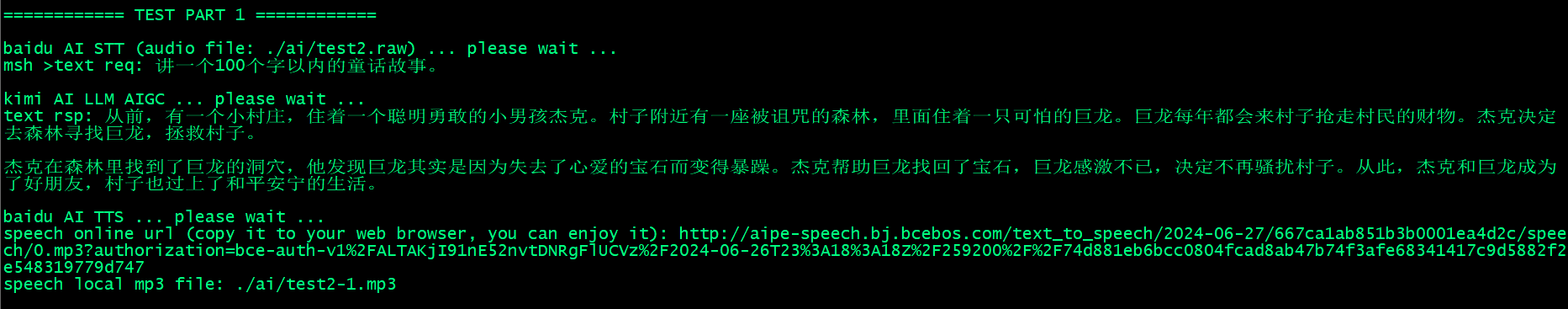

6.3 Sample Run

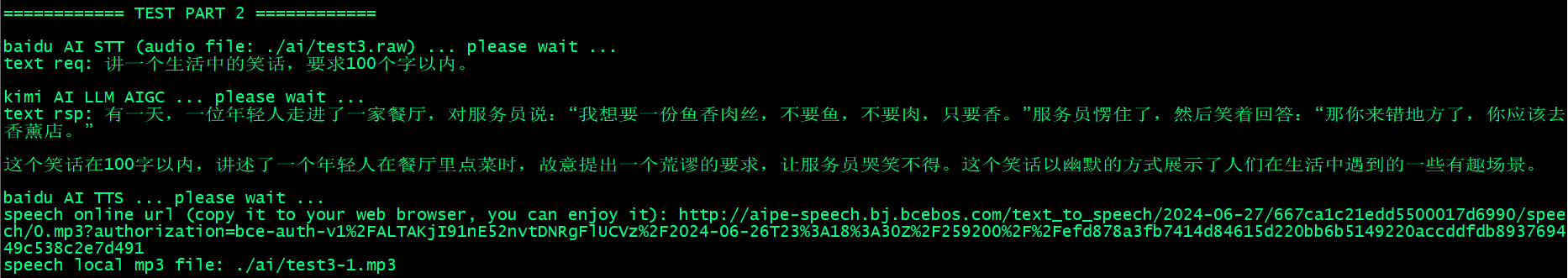

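Since this is only a demonstration of the core functionality, the program runs two demo cases as soon as it starts:

- Demo test case 1 (`./ai/test2.raw`): ask for a fairy tale of no more than 100 characters ("讲一个100个字以内的童话故事。").
- Demo test case 2 (`./ai/test3.raw`): ask for a joke from everyday life, in no more than 100 characters.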

```
ubuntu@arm-43ecd4886e664dffa086d3a69133a5e5:~/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI$ make run
Running ...
make[1]: Entering directory '/home/ubuntu/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI'
make[1]: Leaving directory '/home/ubuntu/new/AVH-RTTHREAD-DEMO-RECAN/rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI'
python rpc_fs/rpc_fs_server.py & python ai/ai_bridge_server.py & /opt/VHT/bin/FVP_MPS2_Cortex-M85 --stat --simlimit 80000000 -f ./vht_config.txt out/image.axf
Starting up on 127.0.0.1 port 55556
telnetterminal2: Listening for serial connection on port 5000
telnetterminal1: Listening for serial connection on port 5001
telnetterminal0: Listening for serial connection on port 5002
Starting up on 127.0.0.1 port 55558

 \ | /
- RT -     Thread Operating System
 / | \     5.2.0 build Jun 26 2024 07:23:00
 2006 - 2024 Copyright by RT-Thread team

============ TEST PART 1 ============

baidu AI STT (audio file: ./ai/test2.raw) ... please wait ...
msh >text req: 讲一个100个字以内的童话故事。

kimi AI LLM AIGC ... please wait ...
text rsp: 从前,有一个小村庄,住着一个聪明勇敢的小男孩杰克。村子附近有一座被诅咒的森林,里面住着一只可怕的巨龙。巨龙每年都会来村子抢走村民的财物。杰克决定去森林寻找巨龙,拯救村子。 杰克在森林里找到了巨龙的洞穴,他发现巨龙其实是因为失去了心爱的宝石而变得暴躁。杰克帮助巨龙找回了宝石,巨龙感激不已,决定不再骚扰村子。从此,杰克和巨龙成为了好朋友,村子也过上了和平安宁的生活。

baidu AI TTS ... please wait ...
speech online url (copy it to your web browser, you can enjoy it): http://aipe-speech.bj.bcebos.com/text_to_speech/2024-06-27/667ca1ab851b3b0001ea4d2c/speech/0.mp3?authorization=bce-auth-v1%2FALTAKjI91nE52nvtDNRgFlUCVz%2F2024-06-26T23%3A18%3A18Z%2F259200%2F%2F74d881eb6bcc0804fcad8ab47b74f3afe68341417c9d5882f2e548319779d747
speech local mp3 file: ./ai/test2-1.mp3

============ TEST PART 2 ============

baidu AI STT (audio file: ./ai/test3.raw) ... please wait ...
text req: 讲一个生活中的笑话,要求100个字以内。

kimi AI LLM AIGC ... please wait ...
text rsp: 有一天,一位年轻人走进了一家餐厅,对服务员说:“我想要一份鱼香肉丝,不要鱼,不要肉,只要香。”服务员愣住了,然后笑着回答:“那你来错地方了,你应该去香薰店。” 这个笑话在100字以内,讲述了一个年轻人在餐厅里点菜时,故意提出一个荒谬的要求,让服务员哭笑不得。这个笑话以幽默的方式展示了人们在生活中遇到的一些有趣场景。

baidu AI TTS ... please wait ...
speech online url (copy it to your web browser, you can enjoy it): http://aipe-speech.bj.bcebos.com/text_to_speech/2024-06-27/667ca1c21edd5500017d6990/speech/0.mp3?authorization=bce-auth-v1%2FALTAKjI91nE52nvtDNRgFlUCVz%2F2024-06-26T23%3A18%3A30Z%2F259200%2F%2Fefd878a3fb7414d84615d220bb6b5149220accddfdb893769449c538c2e7d491
speech local mp3 file: ./ai/test3-1.mp3

exit this demo application by [CTRL + C] !!!
^C
Stopping simulation...

Info: /OSCI/SystemC: Simulation stopped by user.

--- FVP_MPS2_Cortex_M85 statistics: -------------------------------------------
Simulated time : 135.714300s
User time : 37.656389s
System time : 0.096905s
Wall time : 39.105570s
Performance index : 3.47
cpu0 : 86.76 MIPS ( 3392712504 Inst)
cpu1 : 0.00 MIPS ( 0 Inst)
Total : 86.76 MIPS ( 3392712504 Inst)
Memory highwater mark : 0x17ef3000 bytes ( 0.374 GB )
-------------------------------------------------------------------------------
^C
Simulation received signal 2
Terminating simulation...
make: *** [Makefile:42: run] Interrupt
```

6.4 Notes on Verification Testing

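- Some free and paid APIs enforce rate limits, and running too many tests in a short period produces errors. For example, the Kimi AIGC text API allowed at most 3 requests per minute for the account used here; exceeding that returns an error such as: `{'message': 'Your account cmh3xxxxxxfu40ssp2g request reached max request: 3, please try again after 1 seconds', 'type': 'rate_limit_reached_error'}`

- At peak times network requests can be slow, so please be patient. If there is no response for a long time (for example, more than 3 minutes), press CTRL+C and restart the test.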
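One way to stay under a per-minute quota like the one above is to throttle on the server side before each Kimi request. The sketch below is a sliding-window limiter that allows at most `max_calls` calls in any `window_s`-second window and sleeps until a slot frees up. It is a hypothetical helper, not part of the repository; the 3-per-minute default only reflects the limit observed for the author's account, and real quotas depend on the account tier. The clock and sleep functions are injectable so the logic can be tested without waiting.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most max_calls calls in any window of window_s seconds."""

    def __init__(self, max_calls=3, window_s=60.0, clock=time.monotonic):
        self.max_calls = max_calls
        self.window_s = window_s
        self.clock = clock
        self.calls = deque()  # timestamps of recent calls, oldest first

    def wait_time(self):
        """Seconds to wait before another call is allowed (0.0 if allowed now)."""
        now = self.clock()
        while self.calls and now - self.calls[0] >= self.window_s:
            self.calls.popleft()  # drop calls that have left the window
        if len(self.calls) < self.max_calls:
            return 0.0
        return self.window_s - (now - self.calls[0])

    def acquire(self, sleep=time.sleep):
        """Block until a call is allowed, then record it."""
        while True:
            delay = self.wait_time()
            if delay <= 0:
                break
            sleep(delay)
        self.calls.append(self.clock())


kimi_limiter = SlidingWindowLimiter(max_calls=3, window_s=60.0)
# In the server's "kimi_ai" branch one could call kimi_limiter.acquire()
# right before ai_get_answer_from_kimi(text_req).
```

Blocking in the server delays the reply to the C client, so the client-side wait must tolerate up to one window of extra latency; returning an error status immediately is the alternative if that is not acceptable.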

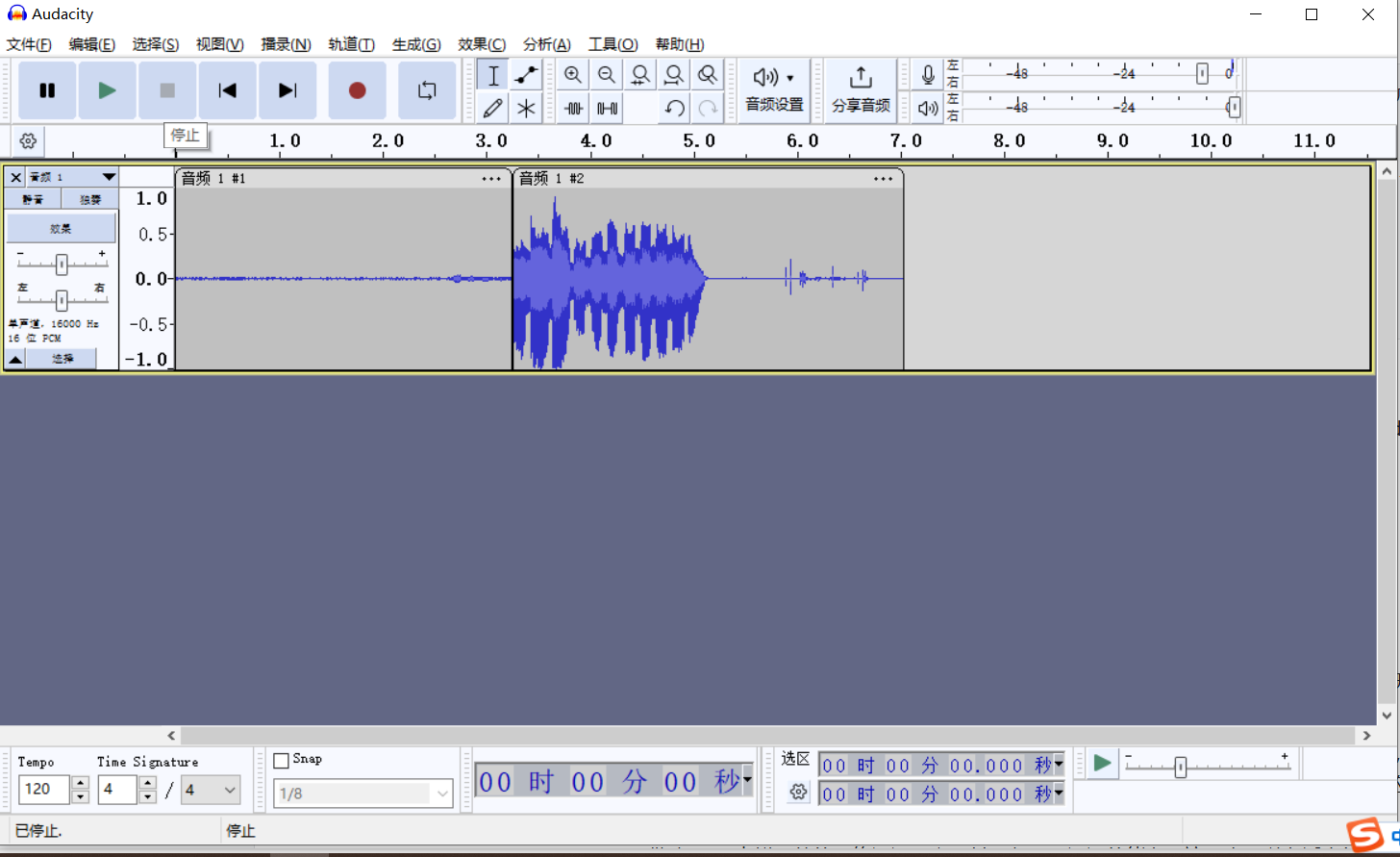

6.5 Speech Input and Output Used for Verification

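The speech input files used in this case are located in the `ai` directory (`./ai/test2.raw` and `./ai/test3.raw` in the test code). They were prepared with Audacity, which can record audio in the format required by Baidu AI speech recognition (16 kHz PCM).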
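Because the recognition request declares `"format": "pcm"`, `"rate": 16000` and `"channel": 1`, the `.raw` input files must be header-less 16 kHz mono PCM. If a recording was saved as WAV instead, Python's standard `wave` module can strip the header and check the format before the file is used. A minimal sketch, assuming 16-bit samples (my understanding of what Baidu's short speech API expects for PCM; this article does not state the sample width); `wav_to_raw_pcm` and `pcm_duration_seconds` are hypothetical helpers, and the 60-second cap comes from the short speech recognition entry in the table of Section 3.2.1.

```python
import io
import wave

SAMPLE_RATE = 16000   # matches "rate": 16000 in speech_decode_by_pcm_online()
CHANNELS = 1          # matches "channel": 1
SAMPLE_WIDTH = 2      # assumption: 16-bit samples
MAX_SECONDS = 60      # short speech recognition accepts up to 60 s of audio


def pcm_duration_seconds(num_bytes):
    """Duration of raw PCM audio in seconds."""
    return num_bytes / (SAMPLE_RATE * CHANNELS * SAMPLE_WIDTH)


def wav_to_raw_pcm(wav_bytes):
    """Strip the WAV header and return raw PCM, rejecting formats the request does not declare."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as w:
        fmt = (w.getframerate(), w.getnchannels(), w.getsampwidth())
        if fmt != (SAMPLE_RATE, CHANNELS, SAMPLE_WIDTH):
            raise ValueError(f"expected 16 kHz mono 16-bit audio, got {fmt}")
        frames = w.readframes(w.getnframes())
    if pcm_duration_seconds(len(frames)) > MAX_SECONDS:
        raise ValueError("audio is longer than 60 s")
    return frames
```

For example, `open("./ai/my_request.raw", "wb").write(wav_to_raw_pcm(open("my_request.wav", "rb").read()))` would produce a new input file (both file names are hypothetical). The byte count of the result is what the C client reports as `file_len`.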

7 Further Thoughts

7.1 Advantages of Arm Virtual Hardware

A few points I would like to add:

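- The Arm Virtual Hardware platform offers a very convenient workflow for developing, compiling, debugging, and running/verifying code, which benefits both developers and companies in the development and production phases.

- Compared with other isolated chip development platforms, Arm Virtual Hardware comes with a fairly complete set of software packages, such as RTOS, networking, and security packages, which can be reused with quick configuration; this also noticeably improves the development workflow.

- Arm Virtual Hardware's networking support gives it access to the public internet, so it can be extended into many network-based applications; this is a promising highlight for development.

- With RT-Thread and AI capabilities on board, a wide variety of AI scenarios can be supported; readers are welcome to imagine more application scenarios.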

7.2 Lessons Learned During the Experiment

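- AI applications really are everywhere: almost any scenario you can think of is already covered by some AI application, which greatly increases what companies can build into their products;

- Linking multiple AI applications together is likely a trend for future AI products; a single AI function no longer satisfies users, and multi-service integration is more attractive;

- Python scripts are very convenient: no compilation, quick deployment, and friendly interfaces, which is hard for compiled languages to match;

- When you run into a problem, analyze it calmly and troubleshoot step by step, without overlooking even the smallest clue.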

7.3 Future Iterations and Outlook

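Of course, the current implementation still has some issues that need follow-up work, for example:

- Speech recognition is slow;

- The speech recognition success rate;

- The speech files used as recognition input must be in 16 kHz PCM format;

- Network requests occasionally time out without a response;

- Interaction across multiple AI platforms is not very smooth.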

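These are core problems worth digging into further, and readers are encouraged to explore and experiment with them; you are also very welcome to discuss them with me if you run into questions.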

On the other hand, I think this experimental project still has a lot of potential. Looking ahead, I see the following points:

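- Speech recognition generally comes in two modes: remote online recognition and local offline recognition. This project uses online recognition, which comes at the cost of slower recognition; in the long run, considering both project cost and user experience, offline recognition is likely the better solution. With a core such as the Cortex-M85 on Arm Virtual Hardware, there is enough capability to run speech recognition locally on the device; for a related model example, see https://github.com/ARM-software/AVH-TFLmicrospeech . With a sufficiently rich self-trained corpus, the recognition success rate and responsiveness could improve substantially.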

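- As the on-device AI capabilities of Arm cores and similar platforms mature, we can see the great potential of edge AI. If the storage footprint and memory consumption of trained models can be brought under control, on-device inference of large AI models could open up brand-new market opportunities, and many more interesting AI devices are on the way.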

8 References

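- Main code repository for this case: https://github.com/recan-li/AVH-RTTHREAD-DEMO-RECAN ; the FVP port of the project and its code are in the rt-thread/bsp/arm/AVH-FVP_MPS2_Cortex-M85-AI directory.

- Arm Virtual Hardware product introduction

- Arm Virtual Hardware help documentation

- Arm Virtual Hardware developer resources

- [Chinese technical guide] Arm Virtual Hardware practice topic 1: product subscription guide (Baidu AI Cloud edition)

- [Chinese technical guide] Arm Virtual Hardware practice topic 2: getting started with Arm Virtual Hardware FVP models

- [Chinese live video course] Accelerate AI development: get started with Arm Virtual Hardware in 1 hour

- Arm Community WeChat official account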