Stable Diffusion webUI Configuration Guide

This post describes how to deploy Stable Diffusion locally and generate images in the style you want.

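1. Environment setup

- Platform: Linux Ubuntu 18.04.5 LTS

- Language: Python 3.9

- CUDA: CUDA 11.7

The Python environment can be set up with either conda or pip. If you would rather not use the environment provided here, or you are on Python 3.10 or later, you can skip the steps below.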
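Before installing anything, it can help to confirm that the interpreter and the CUDA build of PyTorch match the versions listed above. The following is a minimal sketch rather than part of the original setup: the helper names `python_ok` and `cuda_report` are made up for illustration, and the CUDA part only reports something useful if `torch` is already installed.

```python
import importlib.util
import sys


def python_ok(version=sys.version_info, minimum=(3, 9)):
    """Return True if the interpreter is at least `minimum` (major, minor)."""
    return tuple(version[:2]) >= tuple(minimum)


def cuda_report():
    """Describe the CUDA build of torch, or say that torch is missing."""
    if importlib.util.find_spec("torch") is None:
        return "torch is not installed"
    import torch  # imported lazily so the script also runs without torch

    return "torch %s, built for CUDA %s, GPU available: %s" % (
        torch.__version__,
        torch.version.cuda,          # None for CPU-only builds
        torch.cuda.is_available(),
    )


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    print(cuda_report())
```

For the setup in this post, the report would be expected to mention CUDA 11.7; a different value suggests that another PyTorch build is installed.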

(1) pip environment [optional]

The contents of requirements.txt are as follows:

    accelerate==0.21.0
    aenum==3.1.15
    aiofiles==23.2.1
    aiohttp==3.9.5
    aiosignal==1.3.1
    altair==5.3.0
    antlr4-python3-runtime==4.9.3
    anyio==3.7.1
    async-timeout==4.0.3
    attrs==23.2.0
    blendmodes==2022
    certifi==2024.2.2
    chardet==5.2.0
    charset-normalizer==2.0.4
    clean-fid==0.1.35
    click==8.1.7
    clip==1.0
    cmake==3.29.2
    contourpy==1.2.1
    cycler==0.12.1
    deprecation==2.1.0
    diskcache==5.6.3
    einops==0.4.1
    exceptiongroup==1.2.1
    facexlib==0.3.0
    fastapi==0.94.0
    ffmpy==0.3.2
    filelock==3.14.0
    filterpy==1.4.5
    fonttools==4.51.0
    frozenlist==1.4.1
    fsspec==2024.3.1
    ftfy==6.2.0
    gitdb==4.0.11
    GitPython==3.1.32
    gradio==3.41.2
    gradio_client==0.5.0
    h11==0.12.0
    httpcore==0.15.0
    httpx==0.24.1
    huggingface-hub==0.23.0
    idna==3.7
    imageio==2.34.1
    importlib_resources==6.4.0
    inflection==0.5.1
    Jinja2==3.1.3
    jsonmerge==1.8.0
    jsonschema==4.22.0
    jsonschema-specifications==2023.12.1
    kiwisolver==1.4.5
    kornia==0.6.7
    lark==1.1.2
    lazy_loader==0.4
    lightning-utilities==0.11.2
    lit==18.1.4
    llvmlite==0.42.0
    MarkupSafe==2.1.5
    matplotlib==3.8.4
    mkl-fft==1.3.1
    mkl-random==1.2.2
    mkl-service==2.4.0
    mpmath==1.3.0
    multidict==6.0.5
    networkx==3.2.1
    numba==0.59.1
    numpy==1.26.2
    nvidia-cublas-cu11==11.10.3.66
    nvidia-cublas-cu12==12.1.3.1
    nvidia-cuda-cupti-cu11==11.7.101
    nvidia-cuda-cupti-cu12==12.1.105
    nvidia-cuda-nvrtc-cu11==11.7.99
    nvidia-cuda-nvrtc-cu12==12.1.105
    nvidia-cuda-runtime-cu11==11.7.99
    nvidia-cuda-runtime-cu12==12.1.105
    nvidia-cudnn-cu11==8.5.0.96
    nvidia-cudnn-cu12==8.9.2.26
    nvidia-cufft-cu11==10.9.0.58
    nvidia-cufft-cu12==11.0.2.54
    nvidia-curand-cu11==10.2.10.91
    nvidia-curand-cu12==10.3.2.106
    nvidia-cusolver-cu11==11.4.0.1
    nvidia-cusolver-cu12==11.4.5.107
    nvidia-cusparse-cu11==11.7.4.91
    nvidia-cusparse-cu12==12.1.0.106
    nvidia-nccl-cu11==2.14.3
    nvidia-nccl-cu12==2.20.5
    nvidia-nvjitlink-cu12==12.4.127
    nvidia-nvtx-cu11==11.7.91
    nvidia-nvtx-cu12==12.1.105
    omegaconf==2.2.3
    open-clip-torch==2.20.0
    opencv-python==4.9.0.80
    orjson==3.10.2
    packaging==24.0
    pandas==2.2.2
    piexif==1.1.3
    Pillow==9.5.0
    pillow-avif-plugin==1.4.3
    pip==23.3.1
    protobuf==3.20.0
    psutil==5.9.5
    pydantic==1.10.15
    pydub==0.25.1
    pyparsing==3.1.2
    python-dateutil==2.9.0.post0
    python-multipart==0.0.9
    pytorch-lightning==1.9.4
    pytz==2024.1
    PyWavelets==1.6.0
    PyYAML==6.0.1
    referencing==0.35.1
    regex==2024.4.28
    requests==2.31.0
    resize-right==0.0.2
    rpds-py==0.18.0
    safetensors==0.4.2
    scikit-image==0.21.0
    scipy==1.13.0
    semantic-version==2.10.0
    sentencepiece==0.2.0
    setuptools==68.2.2
    six==1.16.0
    smmap==5.0.1
    sniffio==1.3.1
    spandrel==0.1.6
    starlette==0.26.1
    sympy==1.12
    tifffile==2024.4.24
    timm==0.9.16
    tokenizers==0.13.3
    tomesd==0.1.3
    toolz==0.12.1
    torch==2.0.1
    torchaudio==2.0.2
    torchdiffeq==0.2.3
    torchmetrics==1.3.2
    torchsde==0.2.6
    torchvision==0.15.2
    tqdm==4.66.2
    trampoline==0.1.2
    transformers==4.30.2
    triton==2.0.0
    typing_extensions==4.11.0
    tzdata==2024.1
    urllib3==2.1.0
    uvicorn==0.29.0
    wcwidth==0.2.13
    websockets==11.0.3
    wheel==0.41.2
    yarl==1.9.4
    zipp==3.18.1

Shell command to install:

    pip install -r requirements.txt

(2) conda environment [optional]

The contents of requirements.txt are as follows:

    # This file may be used to create an environment using:
    # $ conda create --name <env> --file <this file>
    # platform: linux-64
    _libgcc_mutex=0.1=main
    _openmp_mutex=5.1=1_gnu
    accelerate=0.21.0=pypi_0
    aenum=3.1.15=pypi_0
    aiofiles=23.2.1=pypi_0
    aiohttp=3.9.5=pypi_0
    aiosignal=1.3.1=pypi_0
    altair=5.3.0=pypi_0
    antlr4-python3-runtime=4.9.3=pypi_0
    anyio=3.7.1=pypi_0
    async-timeout=4.0.3=pypi_0
    attrs=23.2.0=pypi_0
    blas=1.0=mkl
    blendmodes=2022=pypi_0
    bzip2=1.0.8=h5eee18b_6
    ca-certificates=2024.3.11=h06a4308_0
    certifi=2024.2.2=py39h06a4308_0
    chardet=5.2.0=pypi_0
    charset-normalizer=3.3.2=pypi_0
    clean-fid=0.1.35=pypi_0
    click=8.1.7=pypi_0
    clip=1.0=pypi_0
    cmake=3.29.2=pypi_0
    contourpy=1.2.1=pypi_0
    cuda-cudart=11.7.99=0
    cuda-cupti=11.7.101=0
    cuda-libraries=11.7.1=0
    cuda-nvrtc=11.7.99=0
    cuda-nvtx=11.7.91=0
    cuda-runtime=11.7.1=0
    cudatoolkit=11.1.1=ha002fc5_10
    cudnn=8.9.2.26=cuda11_0
    cycler=0.12.1=pypi_0
    deprecation=2.1.0=pypi_0
    diskcache=5.6.3=pypi_0
    einops=0.4.1=pypi_0
    exceptiongroup=1.2.1=pypi_0
    facexlib=0.3.0=pypi_0
    fastapi=0.94.0=pypi_0
    ffmpeg=4.3=hf484d3e_0
    ffmpy=0.3.2=pypi_0
    filelock=3.14.0=pypi_0
    filterpy=1.4.5=pypi_0
    fonttools=4.51.0=pypi_0
    freetype=2.12.1=h4a9f257_0
    frozenlist=1.4.1=pypi_0
    fsspec=2024.3.1=pypi_0
    ftfy=6.2.0=pypi_0
    gitdb=4.0.11=pypi_0
    gitpython=3.1.32=pypi_0
    gmp=6.2.1=h295c915_3
    gnutls=3.6.15=he1e5248_0
    gradio=3.41.2=pypi_0
    gradio-client=0.5.0=pypi_0
    h11=0.12.0=pypi_0
    httpcore=0.15.0=pypi_0
    httpx=0.24.1=pypi_0
    huggingface-hub=0.23.0=pypi_0
    idna=3.7=py39h06a4308_0
    imageio=2.34.1=pypi_0
    importlib-resources=6.4.0=pypi_0
    inflection=0.5.1=pypi_0
    intel-openmp=2021.4.0=h06a4308_3561
    jinja2=3.1.3=pypi_0
    jpeg=9e=h5eee18b_1
    jsonmerge=1.8.0=pypi_0
    jsonschema=4.22.0=pypi_0
    jsonschema-specifications=2023.12.1=pypi_0
    kiwisolver=1.4.5=pypi_0
    kornia=0.6.7=pypi_0
    lame=3.100=h7b6447c_0
    lark=1.1.2=pypi_0
    lazy-loader=0.4=pypi_0
    lcms2=2.12=h3be6417_0
    ld_impl_linux-64=2.38=h1181459_1
    lerc=3.0=h295c915_0
    libcublas=11.10.3.66=0
    libcufft=10.7.2.124=h4fbf590_0
    libcufile=1.9.1.3=0
    libcurand=10.3.5.147=0
    libcusolver=11.4.0.1=0
    libcusparse=11.7.4.91=0
    libdeflate=1.17=h5eee18b_1
    libffi=3.3=he6710b0_2
    libgcc-ng=11.2.0=h1234567_1
    libgomp=11.2.0=h1234567_1
    libiconv=1.16=h5eee18b_3
    libidn2=2.3.4=h5eee18b_0
    libnpp=11.7.4.75=0
    libnvjpeg=11.8.0.2=0
    libpng=1.6.39=h5eee18b_0
    libstdcxx-ng=11.2.0=h1234567_1
    libtasn1=4.19.0=h5eee18b_0
    libtiff=4.5.1=h6a678d5_0
    libunistring=0.9.10=h27cfd23_0
    libwebp-base=1.3.2=h5eee18b_0
    lightning-utilities=0.11.2=pypi_0
    lit=18.1.4=pypi_0
    llvmlite=0.42.0=pypi_0
    lz4-c=1.9.4=h6a678d5_1
    markupsafe=2.1.5=pypi_0
    matplotlib=3.8.4=pypi_0
    mkl=2021.4.0=h06a4308_640
    mkl-service=2.4.0=py39h7f8727e_0
    mkl_fft=1.3.1=py39hd3c417c_0
    mkl_random=1.2.2=py39h51133e4_0
    mpmath=1.3.0=pypi_0
    multidict=6.0.5=pypi_0
    ncurses=6.4=h6a678d5_0
    nettle=3.7.3=hbbd107a_1
    networkx=3.2.1=pypi_0
    numba=0.59.1=pypi_0
    numpy=1.26.2=pypi_0
    nvidia-cublas-cu11=11.10.3.66=pypi_0
    nvidia-cublas-cu12=12.1.3.1=pypi_0
    nvidia-cuda-cupti-cu11=11.7.101=pypi_0
    nvidia-cuda-cupti-cu12=12.1.105=pypi_0
    nvidia-cuda-nvrtc-cu11=11.7.99=pypi_0
    nvidia-cuda-nvrtc-cu12=12.1.105=pypi_0
    nvidia-cuda-runtime-cu11=11.7.99=pypi_0
    nvidia-cuda-runtime-cu12=12.1.105=pypi_0
    nvidia-cudnn-cu11=8.5.0.96=pypi_0
    nvidia-cudnn-cu12=8.9.2.26=pypi_0
    nvidia-cufft-cu11=10.9.0.58=pypi_0
    nvidia-cufft-cu12=11.0.2.54=pypi_0
    nvidia-curand-cu11=10.2.10.91=pypi_0
    nvidia-curand-cu12=10.3.2.106=pypi_0
    nvidia-cusolver-cu11=11.4.0.1=pypi_0
    nvidia-cusolver-cu12=11.4.5.107=pypi_0
    nvidia-cusparse-cu11=11.7.4.91=pypi_0
    nvidia-cusparse-cu12=12.1.0.106=pypi_0
    nvidia-nccl-cu11=2.14.3=pypi_0
    nvidia-nccl-cu12=2.20.5=pypi_0
    nvidia-nvjitlink-cu12=12.4.127=pypi_0
    nvidia-nvtx-cu11=11.7.91=pypi_0
    nvidia-nvtx-cu12=12.1.105=pypi_0
    omegaconf=2.2.3=pypi_0
    open-clip-torch=2.20.0=pypi_0
    opencv-python=4.9.0.80=pypi_0
    openh264=2.1.1=h4ff587b_0
    openjpeg=2.4.0=h3ad879b_0
    openssl=1.1.1w=h7f8727e_0
    orjson=3.10.2=pypi_0
    packaging=24.0=pypi_0
    pandas=2.2.2=pypi_0
    piexif=1.1.3=pypi_0
    pillow=9.5.0=pypi_0
    pillow-avif-plugin=1.4.3=pypi_0
    pip=23.3.1=py39h06a4308_0
    protobuf=3.20.0=pypi_0
    psutil=5.9.5=pypi_0
    pydantic=1.10.15=pypi_0
    pydub=0.25.1=pypi_0
    pyparsing=3.1.2=pypi_0
    python=3.9.0=hdb3f193_2
    python-dateutil=2.9.0.post0=pypi_0
    python-multipart=0.0.9=pypi_0
    pytorch-cuda=11.7=h778d358_5
    pytorch-lightning=1.9.4=pypi_0
    pytorch-mutex=1.0=cuda
    pytz=2024.1=pypi_0
    pywavelets=1.6.0=pypi_0
    pyyaml=6.0.1=pypi_0
    readline=8.2=h5eee18b_0
    referencing=0.35.1=pypi_0
    regex=2024.4.28=pypi_0
    requests=2.31.0=py39h06a4308_1
    resize-right=0.0.2=pypi_0
    rpds-py=0.18.0=pypi_0
    safetensors=0.4.2=pypi_0
    scikit-image=0.21.0=pypi_0
    scipy=1.13.0=pypi_0
    semantic-version=2.10.0=pypi_0
    sentencepiece=0.2.0=pypi_0
    setuptools=68.2.2=py39h06a4308_0
    six=1.16.0=pyhd3eb1b0_1
    smmap=5.0.1=pypi_0
    sniffio=1.3.1=pypi_0
    spandrel=0.1.6=pypi_0
    sqlite=3.45.3=h5eee18b_0
    starlette=0.26.1=pypi_0
    sympy=1.12=pypi_0
    tifffile=2024.4.24=pypi_0
    timm=0.9.16=pypi_0
    tk=8.6.12=h1ccaba5_0
    tokenizers=0.13.3=pypi_0
    tomesd=0.1.3=pypi_0
    toolz=0.12.1=pypi_0
    torch=2.0.1=pypi_0
    torchaudio=2.0.2=pypi_0
    torchdiffeq=0.2.3=pypi_0
    torchmetrics=1.3.2=pypi_0
    torchsde=0.2.6=pypi_0
    torchvision=0.15.2=pypi_0
    tqdm=4.66.2=pypi_0
    trampoline=0.1.2=pypi_0
    transformers=4.30.2=pypi_0
    triton=2.0.0=pypi_0
    typing-extensions=4.11.0=pypi_0
    typing_extensions=4.9.0=py39h06a4308_1
    tzdata=2024.1=pypi_0
    urllib3=2.2.1=pypi_0
    uvicorn=0.29.0=pypi_0
    wcwidth=0.2.13=pypi_0
    websockets=11.0.3=pypi_0
    wheel=0.41.2=py39h06a4308_0
    xz=5.4.6=h5eee18b_1
    yarl=1.9.4=pypi_0
    zipp=3.18.1=pypi_0
    zlib=1.2.13=h5eee18b_1
    zstd=1.5.5=hc292b87_2

Shell command to install:

    conda install --yes --file requirements.txt

Entries whose build string is pypi_0 were installed through pip, so conda cannot resolve them from its channels; install those with pip instead.

2. Configuring the Stable Diffusion webUI project

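(1) Clone the project

The version cloned here is v1.9.3. If the clone is interrupted, download the source archive instead and extract it into the target directory.

    git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

Then switch to the project root with the command cd stable-diffusion-webui.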
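Because this post targets v1.9.3, one way to end up on exactly that version is to pass the tag to `git clone --branch`, which accepts tag names as well as branches. The sketch below only builds and prints the command; `clone_command` is a hypothetical helper, and it assumes the release is tagged `v1.9.3` in the upstream repository.

```python
import shlex

REPO_URL = "https://github.com/AUTOMATIC1111/stable-diffusion-webui.git"


def clone_command(tag="v1.9.3", url=REPO_URL):
    """Build a git command that clones the repository at a given tag."""
    return ["git", "clone", "--branch", tag, url]


if __name__ == "__main__":
    # Print the command so it can be reviewed or pasted into a shell.
    print(shlex.join(clone_command()))
```

To run it from Python rather than copy it, the list can be handed to `subprocess.run(clone_command(), check=True)`.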

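(2) Pre-installation [optional, recommended]

- Switch to the third-party packages folder

    cd stable-diffusion-webui/repositories

Install open_clip (v2.24.0) and generative-models ahead of time. The commands for an offline install of open_clip are:

    git clone https://github.com/mlfoundations/open_clip.git
    cd open_clip
    python setup.py build install

The commands for an offline install of generative-models are:

    git clone https://github.com/Stability-AI/generative-models.git
    cd generative-models
    python setup.py build install

If fetching any other package is interrupted during the installation steps below, download it locally and install it from there. In my case only the two packages above were interrupted.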
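After building the two packages, a quick way to see whether they landed in the active environment is to ask Python whether their modules can be found. This is a small sketch under assumptions: `missing_modules` is a hypothetical helper, and the import names `open_clip` and `sgm` (the package name that generative-models installs under, as far as I know) should be checked against what your build actually produced.

```python
import importlib.util

# Assumed import names: open_clip for open_clip, sgm for generative-models.
EXPECTED_MODULES = ("open_clip", "sgm")


def missing_modules(names=EXPECTED_MODULES):
    """Return the top-level module names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("missing:", ", ".join(missing) if missing else "none")
```

An empty result means both modules are visible to the interpreter that will later run launch.py.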

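(3) Run the script

- Method 1:

Running bash start.sh (in the upstream repository the launcher script is named webui.sh) sets up the environment inside the project root: it creates a venv folder there that holds the packages of the Python environment.

To use the Python from that environment, you have to call the interpreter inside that folder. For example:

In a conda or pip environment you would normally run python launch.py; to use the environment in the venv folder you need to run ./venv/bin/python launch.py from the project root instead.

I find this cumbersome: the environment is hard to reuse and running Python is inconvenient, so from here on I use the second method (below).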
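The choice between the two interpreters can be made explicit in a few lines. The sketch below assumes the Linux venv layout described above (`venv/bin/python` under the project root); `interpreter_for` is a hypothetical helper, not something the project provides.

```python
from pathlib import Path


def interpreter_for(project_root="."):
    """Return the venv interpreter if it exists, otherwise plain "python"."""
    candidate = Path(project_root) / "venv" / "bin" / "python"
    # Fall back to whatever "python" resolves to in the active conda/pip env.
    return str(candidate) if candidate.exists() else "python"


if __name__ == "__main__":
    print(interpreter_for("."), "launch.py")
```

Run from the project root, this prints the command line that matches whichever environment is present.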

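- Method 2:

Run python launch.py. If the script finds that some packages are missing, it installs them on the fly; it is better to install them ahead of time as described in the sections above, otherwise a network problem can abort the run.

In my run of the command (after one inference pass), the red text in the output appears because I had not enabled public sharing, which is off by default: "if you want it, go to webui.py and change the first line of shared.demo.launch() to share=True".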
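As an alternative to editing the source, the webui launcher accepts a `--share` command-line flag that turns on Gradio's public share link, and a `--port` flag for the server port. The helper `launch_args` below is hypothetical and only assembles the argument list, as a sketch of how the two options combine.

```python
def launch_args(share=False, port=None, python="python"):
    """Assemble the command line for launch.py with optional flags."""
    args = [python, "launch.py"]
    if share:
        args.append("--share")            # ask Gradio for a public link
    if port is not None:
        args += ["--port", str(port)]     # override the default port
    return args


if __name__ == "__main__":
    print(" ".join(launch_args(share=True)))
```

Keeping the flag on the command line leaves the checked-out source unmodified, which makes later updates of the repository simpler.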

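(4) Download the pretrained weights

The models directory of the project contains a folder for checkpoints (models/Stable-diffusion/). Download v1-5-pruned-emaonly.ckpt from https://hf-mirror.com/LarryAIDraw/v1-5-pruned-emaonly/tree/main and place it in that folder. If you want more realistic portraits, also download the chilloutmix_NiPrunedFp32Fix.safetensors model file from https://hf-mirror.com/92john/chilloutmix_NiPrunedFp32Fix.safetensors/tree/main into models/Stable-diffusion/, then select the corresponding model in the web interface described next.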
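To confirm that the downloaded files ended up where the web interface looks for them, the checkpoint folder can be listed. This is a minimal sketch: `list_checkpoints` is a hypothetical helper, and it assumes checkpoints live in `models/Stable-diffusion/` under the project root with a `.ckpt` or `.safetensors` extension.

```python
from pathlib import Path

CHECKPOINT_SUFFIXES = {".ckpt", ".safetensors"}


def list_checkpoints(project_root="."):
    """Return the sorted checkpoint file names in models/Stable-diffusion."""
    model_dir = Path(project_root) / "models" / "Stable-diffusion"
    if not model_dir.is_dir():
        return []
    return sorted(
        path.name
        for path in model_dir.iterdir()
        if path.is_file() and path.suffix in CHECKPOINT_SUFFIXES
    )


if __name__ == "__main__":
    for name in list_checkpoints("."):
        print(name)
```

If a file you downloaded is not listed, it was probably saved to a different folder or under a different extension.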

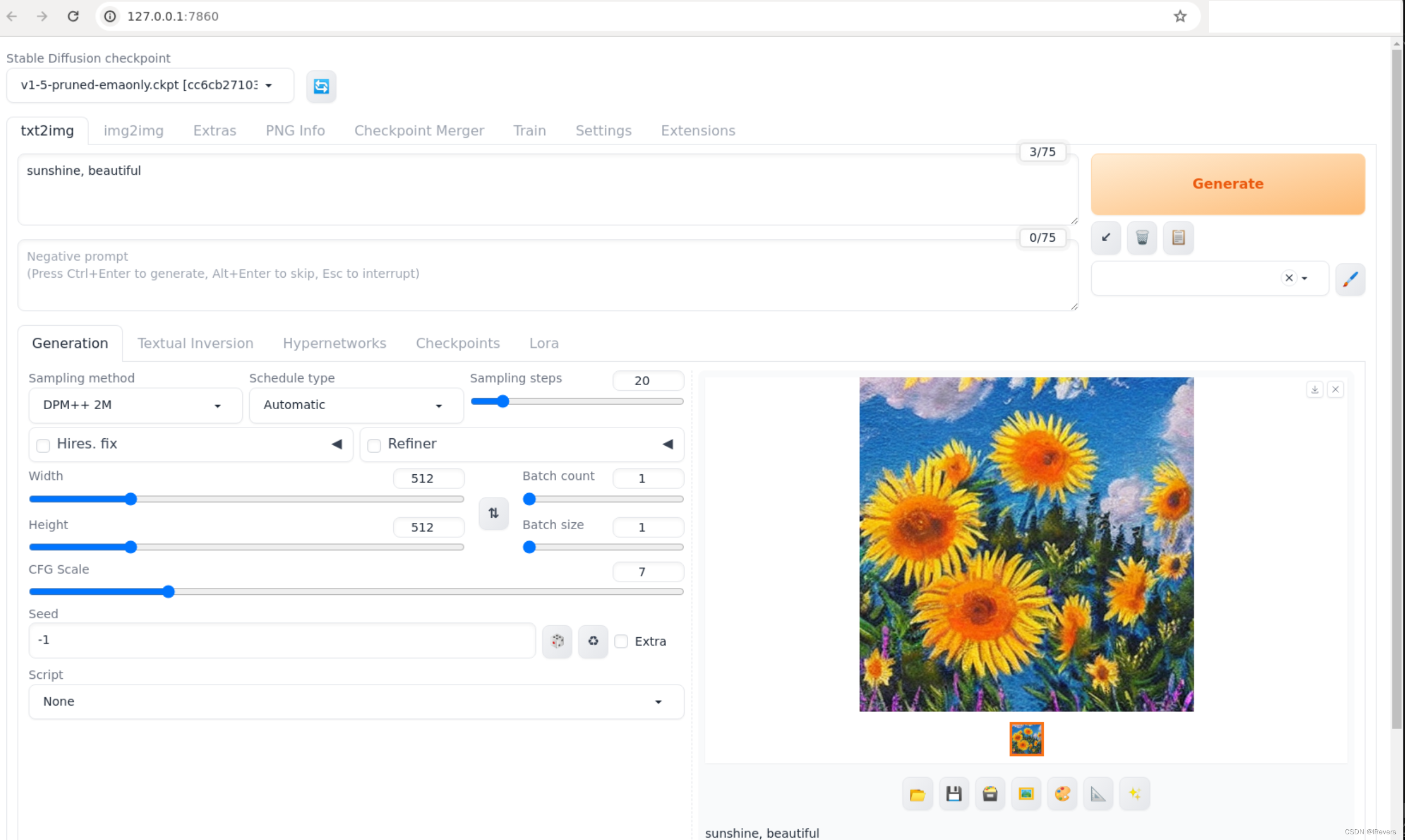

Open local port 7860 in a browser; the interface looks like this (with the downloaded model selected):

An example test image:

3. Problems encountered

- Problem 1

version libcudnn_ops_infer.so.8 not defined in file libcudnn_ops_infer.so.8 with link time reference.

Solution: run conda install -c conda-forge cudnn on the command line to install cudnn and cudatoolkit.
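After reinstalling, it may be worth checking which cuDNN version PyTorch actually loads. `torch.backends.cudnn.version()` returns an integer code (or `None` when cuDNN is not found); the sketch below decodes it under the assumption of the cuDNN 8.x scheme, major*1000 + minor*100 + patch. Both helper names are hypothetical.

```python
import importlib.util


def decode_cudnn_version(code):
    """Split a cuDNN 8.x version code such as 8500 into (major, minor, patch)."""
    major, rest = divmod(code, 1000)
    minor, patch = divmod(rest, 100)
    return major, minor, patch


def cudnn_report():
    """Describe the cuDNN version torch loads, if torch is installed."""
    if importlib.util.find_spec("torch") is None:
        return "torch is not installed"
    import torch  # imported lazily so the script also runs without torch

    code = torch.backends.cudnn.version()
    if code is None:
        return "torch could not find cuDNN"
    return "cuDNN %d.%d.%d" % decode_cudnn_version(code)


if __name__ == "__main__":
    print(cudnn_report())
```

For example, the code 8500 decodes to 8.5.0 and 8902 to 8.9.2, the two cuDNN versions that appear in the package lists above.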

- Problem 2

OSError: Can't load tokenizer for 'openai/clip-vit-large-patch14'. If you were trying to load it from 'https://huggingface.co/models', make sure you don't have a local directory with the same name. Otherwise, make sure 'openai/clip-vit-large-patch14' is the correct path to a directory containing all relevant files for a CLIPTokenizer tokenizer.

Solution: download the files from https://huggingface.co/openai/clip-vit-large-patch14/tree/main into the stable-diffusion-webui/openai directory (create it if it does not exist).
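Whichever source the files come from, a quick check that the tokenizer files are in place can save a failed launch. The sketch assumes the files end up in `openai/clip-vit-large-patch14` under the project root, which is the relative path named in the error message, and checks only `vocab.json` and `merges.txt`, the two vocabulary files a `CLIPTokenizer` loads. `missing_tokenizer_files` is a hypothetical helper.

```python
from pathlib import Path

# The two vocabulary files CLIPTokenizer reads from a local directory.
REQUIRED_FILES = ("vocab.json", "merges.txt")


def missing_tokenizer_files(project_root="."):
    """Return the required tokenizer files that are absent locally."""
    tokenizer_dir = Path(project_root) / "openai" / "clip-vit-large-patch14"
    return [name for name in REQUIRED_FILES if not (tokenizer_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_tokenizer_files(".")
    print("missing:", ", ".join(missing) if missing else "none")
```

An empty result only shows that the vocabulary files exist; the model weights and config files in the same repository are still needed for the text encoder itself.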

Mirror command for users in mainland China (no .git suffix needed):

    git clone https://hf-mirror.com/openai/clip-vit-large-patch14

- Problem 3

cannot import name 'COMMON_SAFE_ASCII_CHARACTERS' from 'charset_normalizer.constant'.

Solution: install chardet with pip install chardet

4. References

[1] https://zhuanlan.zhihu.com/p/611519270

[2] https://blog.csdn.net/weixin_40735291/article/details/129333599

[3] https://github.com/pytorch/pytorch/issues/104591

[4] https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/11507

[5] https://blog.csdn.net/weixin_47037450/article/details/129616415