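Today we're working through a hands-on RTMPose keypoint detection project, and I'll show you how to install MMDetection and MMPose.

The project uses triangle-ruler keypoint detection as its example scenario and combines OpenMMLab's open-source object detection library MMDetection, keypoint detection library MMPose, and model deployment library MMDeploy to walk through the full project workflow:

Dataset: annotate the dataset with Labelme, then convert the annotations to MS COCO format

Object detection: train Faster R-CNN and RTMDet-Tiny detection models separately, visualize the training logs, evaluate on the test set, and run prediction on images and camera feeds

Keypoint detection: train an RTMPose-S keypoint detection model, visualize the training logs, evaluate on the test set, and run prediction on images, videos, and camera feeds

On-device deployment: convert the model to ONNX format and run inference on the device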
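To make the dataset step above a bit more concrete, here is a minimal sketch of turning Labelme records into an MS COCO keypoint dictionary. It is not the conversion script shipped with the project: the function name `labelme_to_coco`, the label names in the usage note, and the rule "a point belongs to the box that contains it" are all my assumptions for illustration.

```python
def labelme_to_coco(labelme_records, box_label, keypoint_names):
    """Convert parsed Labelme JSON dicts into one COCO keypoint dict.

    Assumption: each object is a Labelme 'rectangle' shape labelled
    `box_label`, and each keypoint is a 'point' shape whose label is one
    of `keypoint_names` and which lies inside that rectangle.
    """
    coco = {
        "images": [],
        "annotations": [],
        "categories": [{
            "id": 1,
            "name": box_label,
            "keypoints": list(keypoint_names),
            "skeleton": [],
        }],
    }
    ann_id = 1
    for image_id, rec in enumerate(labelme_records, start=1):
        coco["images"].append({
            "id": image_id,
            "file_name": rec["imagePath"],
            "height": rec["imageHeight"],
            "width": rec["imageWidth"],
        })
        shapes = rec["shapes"]
        boxes = [s for s in shapes
                 if s["shape_type"] == "rectangle" and s["label"] == box_label]
        points = [s for s in shapes if s["shape_type"] == "point"]
        for box in boxes:
            (x1, y1), (x2, y2) = box["points"]
            left, top = min(x1, x2), min(y1, y2)
            w, h = abs(x2 - x1), abs(y2 - y1)
            # Index the points that fall inside this box by label.
            inside = {
                p["label"]: p["points"][0] for p in points
                if left <= p["points"][0][0] <= left + w
                and top <= p["points"][0][1] <= top + h
            }
            keypoints, labelled = [], 0
            for name in keypoint_names:
                if name in inside:
                    x, y = inside[name]
                    keypoints += [x, y, 2]  # 2 = labelled and visible
                    labelled += 1
                else:
                    keypoints += [0, 0, 0]  # 0 = not labelled
            coco["annotations"].append({
                "id": ann_id,
                "image_id": image_id,
                "category_id": 1,
                "bbox": [left, top, w, h],  # COCO uses [x, y, width, height]
                "area": w * h,
                "iscrowd": 0,
                "keypoints": keypoints,
                "num_keypoints": labelled,
            })
            ann_id += 1
    return coco
```

In practice you would `json.load` every Labelme file in the annotation folder, pass the list in, and `json.dump` the result. The keypoint names (for example `("tip", "lobe")`) are placeholders and must match the labels you actually used in Labelme.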

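I. Introduction

Ear acupoints are an important part of acupuncture in traditional Chinese medicine, and locating them accurately matters for both acupuncture treatment and health monitoring. Traditional ear acupoint detection relies on manual marking, which is inefficient and prone to human error. With the development of deep learning and computer vision, image-based automatic acupoint detection has gradually become a research hotspot.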

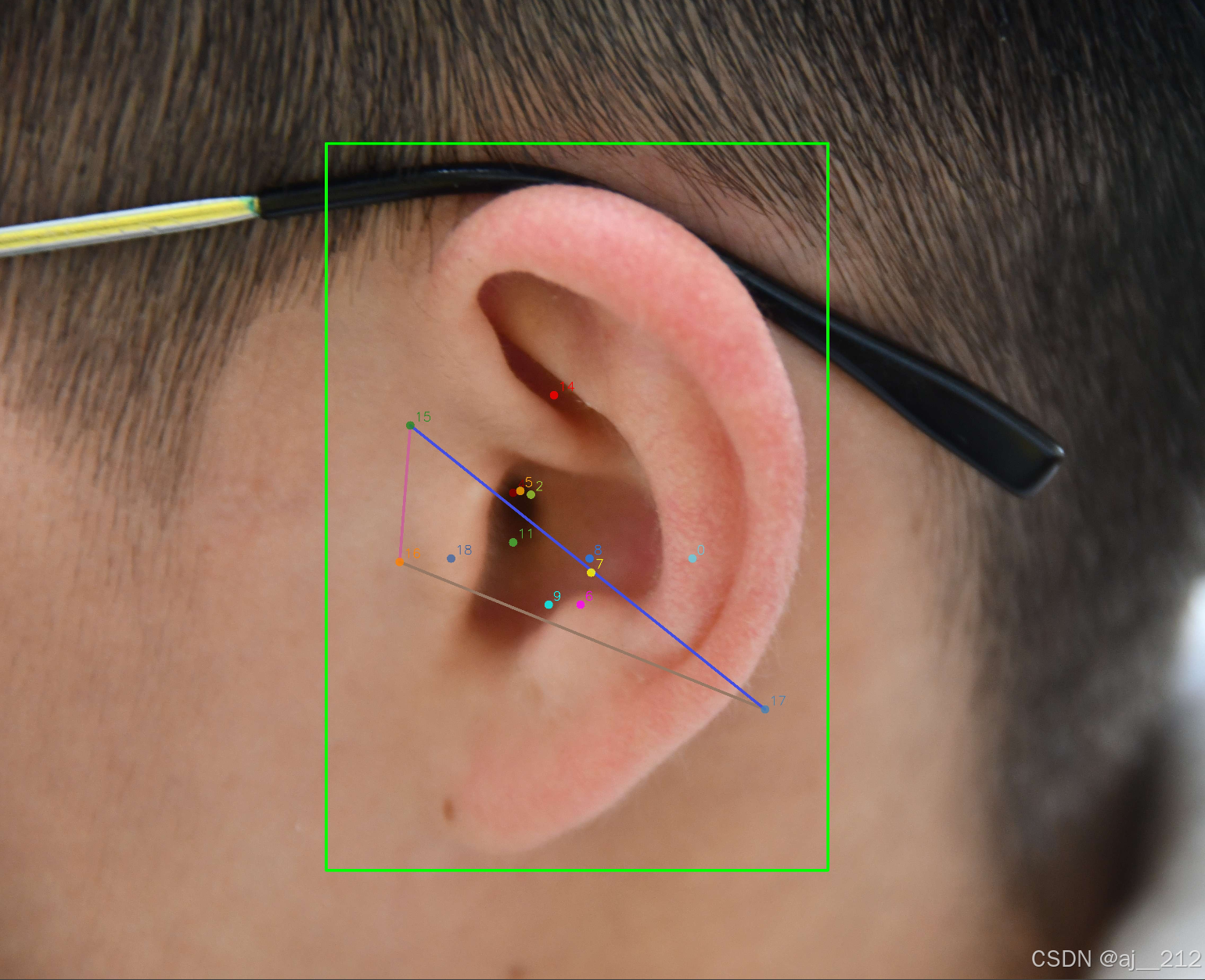

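RTMPose is a deep-learning-based pose estimation model with high accuracy and robustness. This article presents an ear acupoint keypoint detection method that combines RTMPose with object detection, using advanced image processing algorithms to detect and locate ear acupoints automatically.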
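Combining a detector with a pose model usually means a top-down pipeline: the detector finds the ear's box, the box is enlarged a little and stretched to the pose model's input aspect ratio, and that region is what the keypoint model sees. Below is a rough sketch of the box-adjustment step only. The function name `expand_box`, the 192x256 input size, and the 1.25 padding factor are assumptions for illustration, not values read from this project's config.

```python
def expand_box(box, input_wh=(192, 256), padding=1.25):
    """Enlarge a detector box and match it to the pose model's aspect ratio.

    box:      (x1, y1, x2, y2) from the detector.
    input_wh: (width, height) of the pose model input (assumed value).
    padding:  scale factor applied around the box center (assumed value).
    """
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    w, h = (x2 - x1) * padding, (y2 - y1) * padding

    # Grow the shorter side so that w / h equals the model's aspect ratio.
    aspect = input_wh[0] / input_wh[1]
    if w > h * aspect:
        h = w / aspect
    else:
        w = h * aspect

    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
```

The returned box can extend past the image border. A real pipeline would fill the missing area when warping the crop rather than clipping the box, because clipping would break the aspect ratio again.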

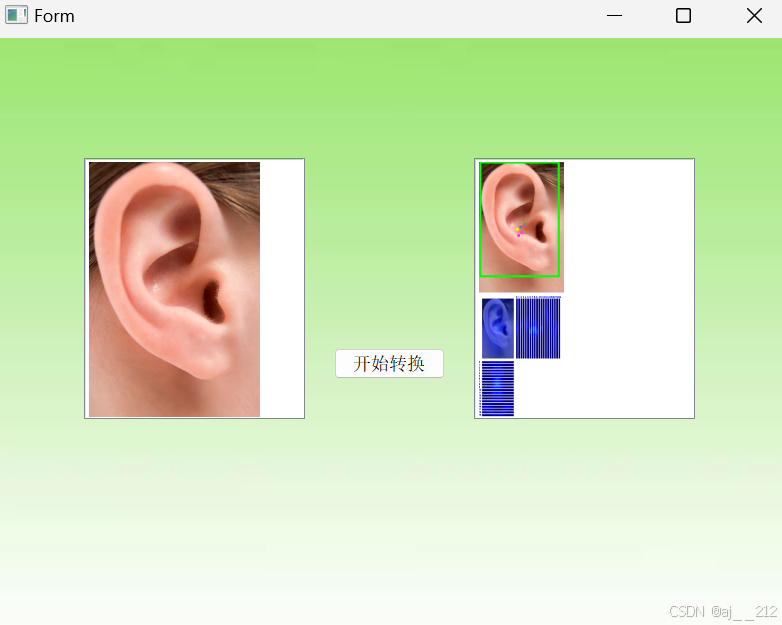

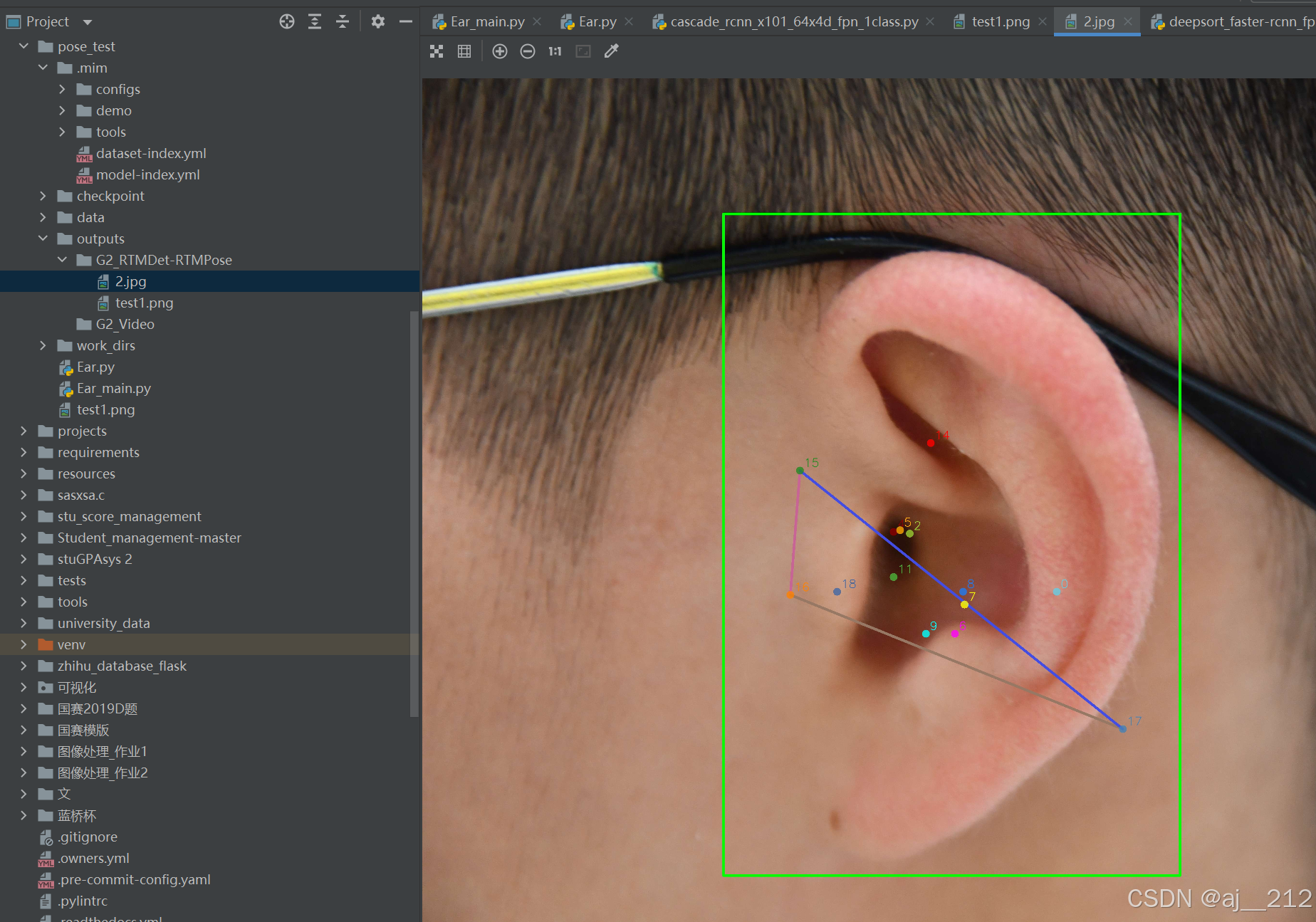

Final result of the completed project

Project source code (Baidu Netdisk, help yourself): https://pan.baidu.com/s/1QFFr4UBwaYePyIOFkgh5mw?pwd=4211

Extraction code: 4211

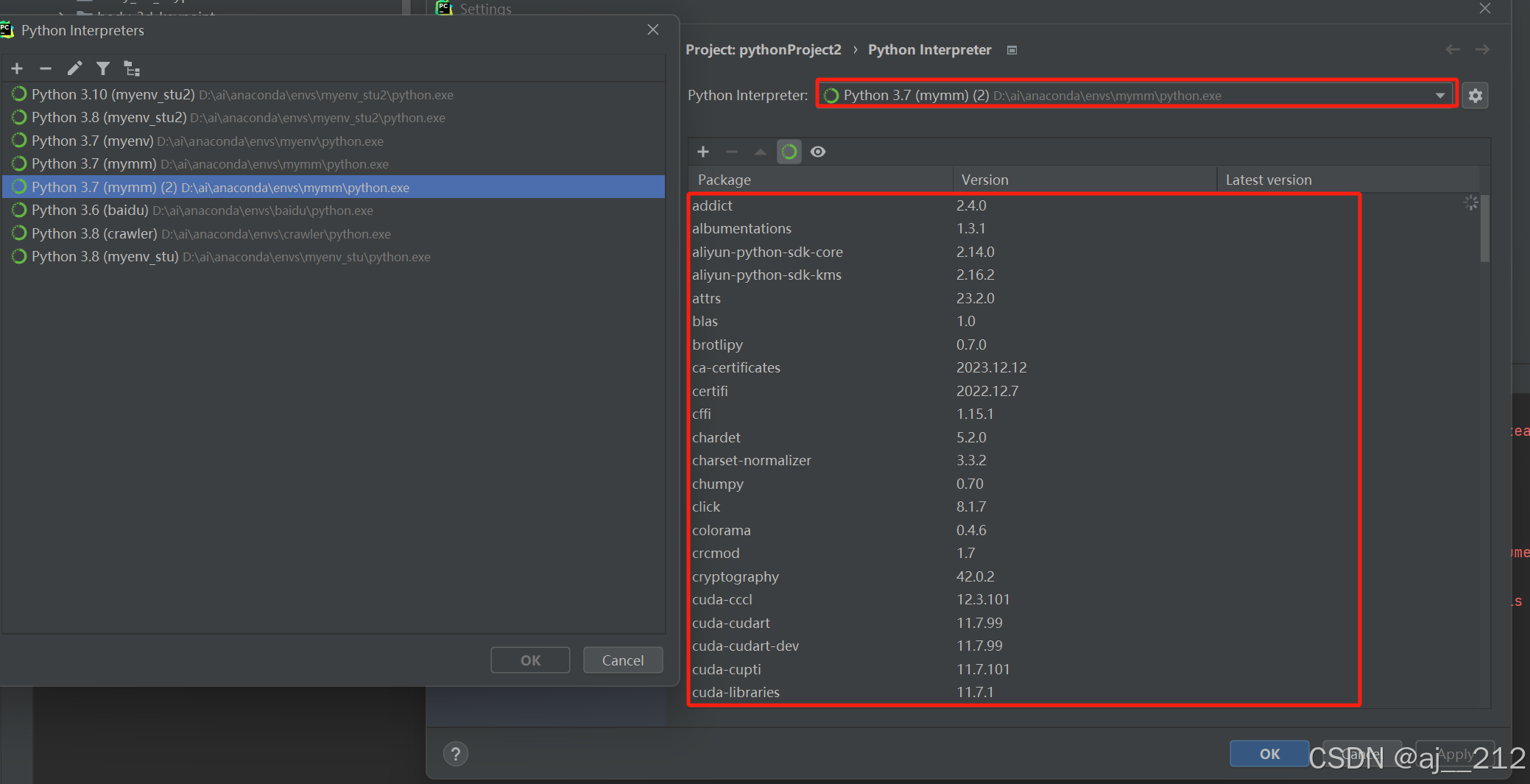

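II. Set up the required environment first

Set up the required environment first

Set up the required environment first

Important things get said three times!!!!

For environment setup, see my earlier tutorial: https://blog.csdn.net/m0_74194018/article/details/140844116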
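Before moving on, it can help to confirm that the packages are actually visible in the current Python environment. Here is a small sketch using only the standard library. The package list in the usage note is my guess at what this project needs, so adjust it to match the tutorial you followed.

```python
from importlib import metadata


def installed_versions(package_names):
    """Return {name: version string, or None if the package is missing}."""
    versions = {}
    for name in package_names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Assumed package list; edit to match your own setup.
    wanted = ["torch", "mmengine", "mmcv", "mmdet", "mmpose", "PyQt5"]
    for name, version in installed_versions(wanted).items():
        print(f"{name:10s} {version or 'NOT INSTALLED'}")
```

Anything reported as not installed should be fixed before you try to run the project.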

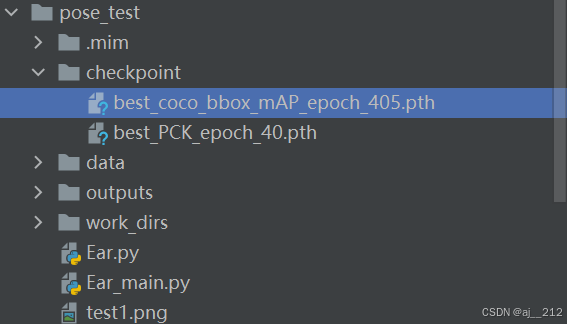

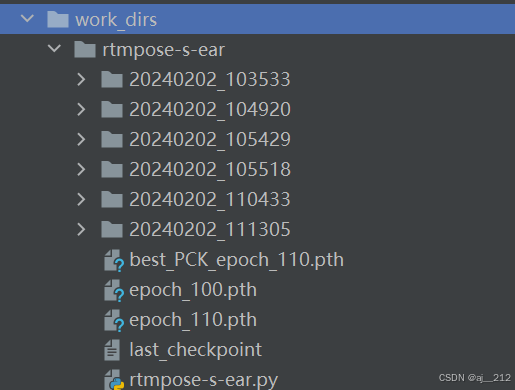

Open the project; its directory structure is shown below. (I've already trained the checkpoint weight files for you; training took 7749 hours.)

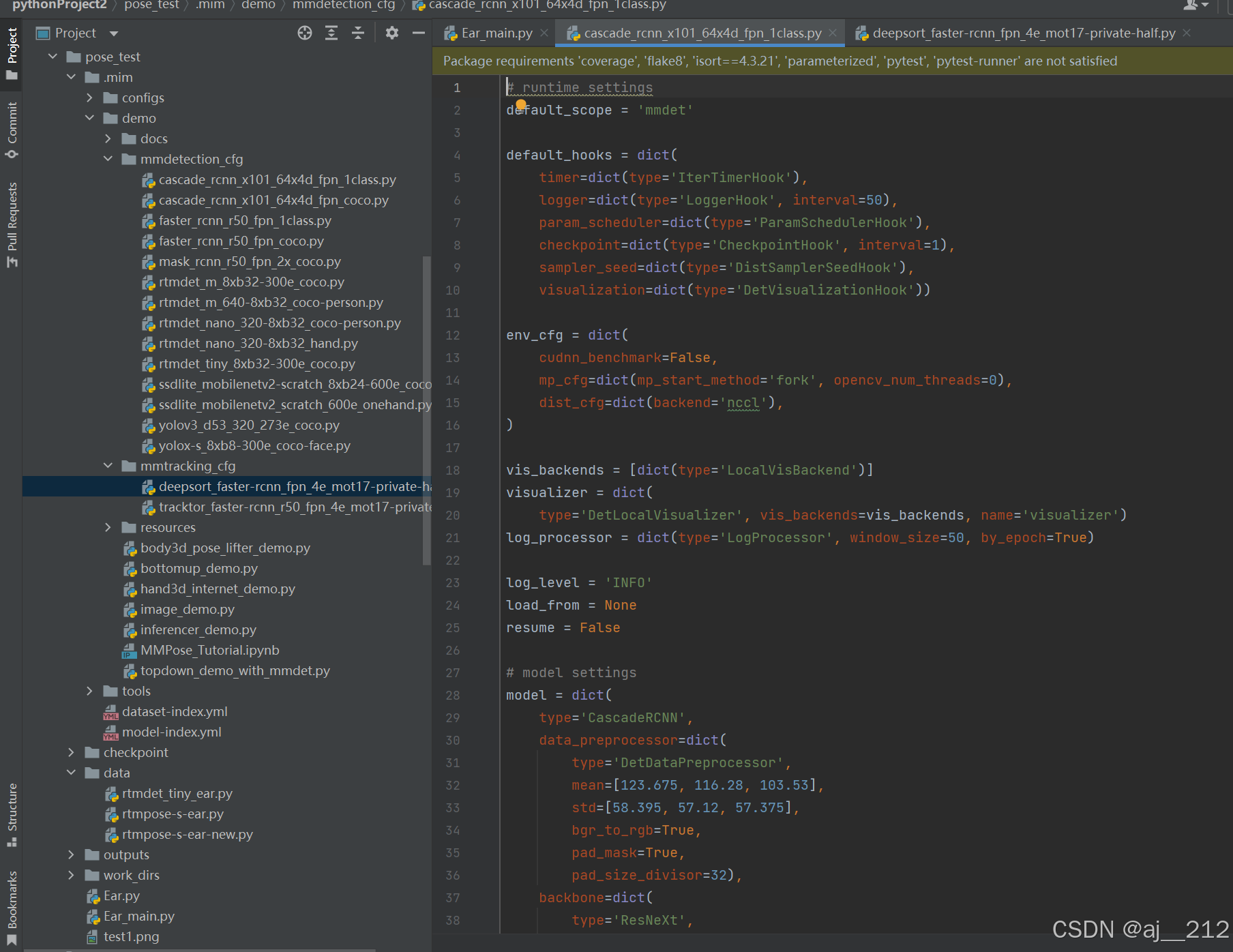

III. I've also prepared the config files, so you don't need to edit them by hand.
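You don't have to change the configs, but it is still handy to peek at a value or two, such as the detector type or `num_classes`. The OpenMMLab libraries load configs through MMEngine's `Config.fromfile`. For a flat config that does not inherit from `_base_` files, a plain-Python sketch like the one below also works, because such a file is ordinary Python. The helper name `read_flat_config` and the file path in the usage note are hypothetical.

```python
import runpy


def read_flat_config(path):
    """Execute a flat, pure-Python config file and return its variables.

    Assumption: the file has no `_base_` inheritance and needs no imports,
    so running it as a normal Python script is enough.
    """
    namespace = runpy.run_path(path)
    # Drop Python's own bookkeeping names such as __name__ and __file__.
    return {k: v for k, v in namespace.items() if not k.startswith("__")}
```

For example, `read_flat_config("configs/mmdetection_cfg.py")["model"]["type"]` would return the detector class name, assuming that is where you saved the file.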

mmdetection_cfg

```python
# runtime settings
default_scope = 'mmdet'

default_hooks = dict(
    timer=dict(type='IterTimerHook'),
    logger=dict(type='LoggerHook', interval=50),
    param_scheduler=dict(type='ParamSchedulerHook'),
    checkpoint=dict(type='CheckpointHook', interval=1),
    sampler_seed=dict(type='DistSamplerSeedHook'),
    visualization=dict(type='DetVisualizationHook'))

env_cfg = dict(
    cudnn_benchmark=False,
    mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),
    dist_cfg=dict(backend='nccl'),
)

vis_backends = [dict(type='LocalVisBackend')]
visualizer = dict(
    type='DetLocalVisualizer', vis_backends=vis_backends, name='visualizer')
log_processor = dict(type='LogProcessor', window_size=50, by_epoch=True)

log_level = 'INFO'
load_from = None
resume = False

# model settings
model = dict(
    type='CascadeRCNN',
    data_preprocessor=dict(
        type='DetDataPreprocessor',
        mean=[123.675, 116.28, 103.53],
        std=[58.395, 57.12, 57.375],
        bgr_to_rgb=True,
        pad_mask=True,
        pad_size_divisor=32),
    backbone=dict(
        type='ResNeXt',
        depth=101,
        groups=64,
        base_width=4,
        num_stages=4,
        out_indices=(0, 1, 2, 3),
        frozen_stages=1,
        norm_cfg=dict(type='BN', requires_grad=True),
        style='pytorch',
        init_cfg=dict(
            type='Pretrained', checkpoint='open-mmlab://resnext101_64x4d')),
    neck=dict(
        type='FPN',
        in_channels=[256, 512, 1024, 2048],
        out_channels=256,
        num_outs=5),
    rpn_head=dict(
        type='RPNHead',
        in_channels=256,
        feat_channels=256,
        anchor_generator=dict(
            type='AnchorGenerator',
            scales=[8],
            ratios=[0.5, 1.0, 2.0],
            strides=[4, 8, 16, 32, 64]),
        bbox_coder=dict(
            type='DeltaXYWHBBoxCoder',
            target_means=[.0, .0, .0, .0],
            target_stds=[1.0, 1.0, 1.0, 1.0]),
        loss_cls=dict(
            type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),
        loss_bbox=dict(type='SmoothL1Loss', beta=1.0 / 9.0, loss_weight=1.0)),
    roi_head=dict(
        type='CascadeRoIHead',
        num_stages=3,
        stage_loss_weights=[1, 0.5, 0.25],
        bbox_roi_extractor=dict(
            type='SingleRoIExtractor',
            roi_layer=dict(type='RoIAlign', output_size=7, sampling_ratio=0),
            out_channels=256,
            featmap_strides=[4, 8, 16, 32]),
        bbox_head=[
            dict(
                type='Shared2FCBBoxHead',
                in_channels=256,
                fc_out_channels=1024,
                roi_feat_size=7,
                num_classes=1,
                bbox_coder=dict(
                    type='DeltaXYWHBBoxCoder',
                    target_means=[0., 0., 0., 0.],
                    target_stds=[0.1, 0.1, 0.2, 0.2]),
                reg_class_agnostic=True,
                loss_cls=dict(
                    type='CrossEntropyLoss',
                    use_sigmoid=False,
                    loss_weight=1.0),
                loss_bbox=dict(type='SmoothL1Loss', beta=1.0,
                               loss_weight=1.0)),
            dict(
                type='Shared2FCBBoxHead',
                in_channels=256,
                fc_out_channels=1024,
                roi_feat_size=7,
                num_classes=1,
                bbox_coder=dict(
                    type='DeltaXYWHBBoxCoder',
                    target_means=[0., 0., 0., 0.],
                    target_stds=[0.05, 0.05, 0.1, 0.1]),
                reg_class_agnostic=True,
                loss_cls=dict(
                    type='CrossEntropyLoss',
                    use_sigmoid=False,
                    loss_weight=1.0),
                loss_bbox=dict(type='SmoothL1Loss', beta=1.0,
                               loss_weight=1.0)),
            dict(
                type='Shared2FCBBoxHead',
                in_channels=256,
                fc_out_channels=1024,
                roi_feat_size=7,
                num_classes=1,
                bbox_coder=dict(
                    type='DeltaXYWHBBoxCoder',
                    target_means=[0., 0., 0., 0.],
                    target_stds=[0.033, 0.033, 0.067, 0.067]),
                reg_class_agnostic=True,
                loss_cls=dict(
                    type='CrossEntropyLoss',
                    use_sigmoid=False,
                    loss_weight=1.0),
                loss_bbox=dict(type='SmoothL1Loss', beta=1.0, loss_weight=1.0))
        ]),
    # model training and testing settings
    train_cfg=dict(
        rpn=dict(
            assigner=dict(
                type='MaxIoUAssigner',
                pos_iou_thr=0.7,
                neg_iou_thr=0.3,
                min_pos_iou=0.3,
                match_low_quality=True,
                ignore_iof_thr=-1),
            sampler=dict(
                type='RandomSampler',
                num=256,
                pos_fraction=0.5,
                neg_pos_ub=-1,
                add_gt_as_proposals=False),
            allowed_border=0,
            pos_weight=-1,
            debug=False),
        rpn_proposal=dict(
            nms_pre=2000,
            max_per_img=2000,
            nms=dict(type='nms', iou_threshold=0.7),
            min_bbox_size=0),
        rcnn=[
            dict(
                assigner=dict(
                    type='MaxIoUAssigner',
                    pos_iou_thr=0.5,
                    neg_iou_thr=0.5,
                    min_pos_iou=0.5,
                    match_low_quality=False,
                    ignore_iof_thr=-1),
                sampler=dict(
                    type='RandomSampler',
                    num=512,
                    pos_fraction=0.25,
                    neg_pos_ub=-1,
                    add_gt_as_proposals=True),
                pos_weight=-1,
                debug=False),
            dict(
                assigner=dict(
                    type='MaxIoUAssigner',
                    pos_iou_thr=0.6,
                    neg_iou_thr=0.6,
                    min_pos_iou=0.6,
                    match_low_quality=False,
                    ignore_iof_thr=-1),
                sampler=dict(
                    type='RandomSampler',
                    num=512,
                    pos_fraction=0.25,
                    neg_pos_ub=-1,
                    add_gt_as_proposals=True),
                pos_weight=-1,
                debug=False),
            dict(
                assigner=dict(
                    type='MaxIoUAssigner',
                    pos_iou_thr=0.7,
                    neg_iou_thr=0.7,
                    min_pos_iou=0.7,
                    match_low_quality=False,
                    ignore_iof_thr=-1),
                sampler=dict(
                    type='RandomSampler',
                    num=512,
                    pos_fraction=0.25,
                    neg_pos_ub=-1,
                    add_gt_as_proposals=True),
                pos_weight=-1,
                debug=False)
        ]),
    test_cfg=dict(
        rpn=dict(
            nms_pre=1000,
            max_per_img=1000,
            nms=dict(type='nms', iou_threshold=0.7),
            min_bbox_size=0),
        rcnn=dict(
            score_thr=0.05,
            nms=dict(type='nms', iou_threshold=0.5),
            max_per_img=100)))

# dataset settings
dataset_type = 'CocoDataset'
data_root = 'data/coco/'

train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(type='Resize', scale=(1333, 800), keep_ratio=True),
    dict(type='RandomFlip', prob=0.5),
    dict(type='PackDetInputs')
]
test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='Resize', scale=(1333, 800), keep_ratio=True),
    # If you don't have a gt annotation, delete the pipeline
    dict(type='LoadAnnotations', with_bbox=True),
    dict(
        type='PackDetInputs',
        meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',
                   'scale_factor'))
]
train_dataloader = dict(
    batch_size=2,
    num_workers=2,
    persistent_workers=True,
    sampler=dict(type='DefaultSampler', shuffle=True),
    batch_sampler=dict(type='AspectRatioBatchSampler'),
    dataset=dict(
        type=dataset_type,
        data_root=data_root,
        ann_file='annotations/instances_train2017.json',
        data_prefix=dict(img='train2017/'),
        filter_cfg=dict(filter_empty_gt=True, min_size=32),
        pipeline=train_pipeline))
val_dataloader = dict(
    batch_size=1,
    num_workers=2,
    persistent_workers=True,
    drop_last=False,
    sampler=dict(type='DefaultSampler', shuffle=False),
    dataset=dict(
        type=dataset_type,
        data_root=data_root,
        ann_file='annotations/instances_val2017.json',
        data_prefix=dict(img='val2017/'),
        test_mode=True,
        pipeline=test_pipeline))
test_dataloader = val_dataloader

val_evaluator = dict(
    type='CocoMetric',
    ann_file=data_root + 'annotations/instances_val2017.json',
    metric='bbox',
    format_only=False)
test_evaluator = val_evaluator
```

mmtracking_cfg

```python
model = dict(
    detector=dict(
        type='FasterRCNN',
        backbone=dict(
            type='ResNet',
            depth=50,
            num_stages=4,
            out_indices=(0, 1, 2, 3),
            frozen_stages=1,
            norm_cfg=dict(type='BN', requires_grad=True),
            norm_eval=True,
            style='pytorch',
            init_cfg=dict(
                type='Pretrained', checkpoint='torchvision://resnet50')),
        neck=dict(
            type='FPN',
            in_channels=[256, 512, 1024, 2048],
            out_channels=256,
            num_outs=5),
        rpn_head=dict(
            type='RPNHead',
            in_channels=256,
            feat_channels=256,
            anchor_generator=dict(
                type='AnchorGenerator',
                scales=[8],
                ratios=[0.5, 1.0, 2.0],
                strides=[4, 8, 16, 32, 64]),
            bbox_coder=dict(
                type='DeltaXYWHBBoxCoder',
                target_means=[0.0, 0.0, 0.0, 0.0],
                target_stds=[1.0, 1.0, 1.0, 1.0],
                clip_border=False),
            loss_cls=dict(
                type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),
            loss_bbox=dict(
                type='SmoothL1Loss', beta=0.1111111111111111,
                loss_weight=1.0)),
        roi_head=dict(
            type='StandardRoIHead',
            bbox_roi_extractor=dict(
                type='SingleRoIExtractor',
                roi_layer=dict(
                    type='RoIAlign', output_size=7, sampling_ratio=0),
                out_channels=256,
                featmap_strides=[4, 8, 16, 32]),
            bbox_head=dict(
                type='Shared2FCBBoxHead',
                in_channels=256,
                fc_out_channels=1024,
                roi_feat_size=7,
                num_classes=1,
                bbox_coder=dict(
                    type='DeltaXYWHBBoxCoder',
                    target_means=[0.0, 0.0, 0.0, 0.0],
                    target_stds=[0.1, 0.1, 0.2, 0.2],
                    clip_border=False),
                reg_class_agnostic=False,
                loss_cls=dict(
                    type='CrossEntropyLoss',
                    use_sigmoid=False,
                    loss_weight=1.0),
                loss_bbox=dict(type='SmoothL1Loss', loss_weight=1.0))),
        train_cfg=dict(
            rpn=dict(
                assigner=dict(
                    type='MaxIoUAssigner',
                    pos_iou_thr=0.7,
                    neg_iou_thr=0.3,
                    min_pos_iou=0.3,
                    match_low_quality=True,
                    ignore_iof_thr=-1),
                sampler=dict(
                    type='RandomSampler',
                    num=256,
                    pos_fraction=0.5,
                    neg_pos_ub=-1,
                    add_gt_as_proposals=False),
                allowed_border=-1,
                pos_weight=-1,
                debug=False),
            rpn_proposal=dict(
                nms_pre=2000,
                max_per_img=1000,
                nms=dict(type='nms', iou_threshold=0.7),
                min_bbox_size=0),
            rcnn=dict(
                assigner=dict(
                    type='MaxIoUAssigner',
                    pos_iou_thr=0.5,
                    neg_iou_thr=0.5,
                    min_pos_iou=0.5,
                    match_low_quality=False,
                    ignore_iof_thr=-1),
                sampler=dict(
                    type='RandomSampler',
                    num=512,
                    pos_fraction=0.25,
                    neg_pos_ub=-1,
                    add_gt_as_proposals=True),
                pos_weight=-1,
                debug=False)),
        test_cfg=dict(
            rpn=dict(
                nms_pre=1000,
                max_per_img=1000,
                nms=dict(type='nms', iou_threshold=0.7),
                min_bbox_size=0),
            rcnn=dict(
                score_thr=0.05,
                nms=dict(type='nms', iou_threshold=0.5),
                max_per_img=100)),
        init_cfg=dict(
            type='Pretrained',
            checkpoint='https://download.openmmlab.com/mmtracking/'
            'mot/faster_rcnn/faster-rcnn_r50_fpn_4e_mot17-half-64ee2ed4.pth')),
    type='DeepSORT',
    motion=dict(type='KalmanFilter', center_only=False),
    reid=dict(
        type='BaseReID',
        backbone=dict(
            type='ResNet',
            depth=50,
            num_stages=4,
            out_indices=(3, ),
            style='pytorch'),
        neck=dict(type='GlobalAveragePooling', kernel_size=(8, 4), stride=1),
        head=dict(
            type='LinearReIDHead',
            num_fcs=1,
            in_channels=2048,
            fc_channels=1024,
            out_channels=128,
            num_classes=380,
            loss=dict(type='CrossEntropyLoss', loss_weight=1.0),
            loss_pairwise=dict(
                type='TripletLoss', margin=0.3, loss_weight=1.0),
            norm_cfg=dict(type='BN1d'),
            act_cfg=dict(type='ReLU')),
        init_cfg=dict(
            type='Pretrained',
            checkpoint='https://download.openmmlab.com/mmtracking/'
            'mot/reid/tracktor_reid_r50_iter25245-a452f51f.pth')),
    tracker=dict(
        type='SortTracker',
        obj_score_thr=0.5,
        reid=dict(
            num_samples=10,
            img_scale=(256, 128),
            img_norm_cfg=None,
            match_score_thr=2.0),
        match_iou_thr=0.5,
        momentums=None,
        num_tentatives=2,
        num_frames_retain=100))

dataset_type = 'MOTChallengeDataset'
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

train_pipeline = [
    dict(type='LoadMultiImagesFromFile', to_float32=True),
    dict(type='SeqLoadAnnotations', with_bbox=True, with_track=True),
    dict(
        type='SeqResize',
        img_scale=(1088, 1088),
        share_params=True,
        ratio_range=(0.8, 1.2),
        keep_ratio=True,
        bbox_clip_border=False),
    dict(type='SeqPhotoMetricDistortion', share_params=True),
    dict(
        type='SeqRandomCrop',
        share_params=False,
        crop_size=(1088, 1088),
        bbox_clip_border=False),
    dict(type='SeqRandomFlip', share_params=True, flip_ratio=0.5),
    dict(
        type='SeqNormalize',
        mean=[123.675, 116.28, 103.53],
        std=[58.395, 57.12, 57.375],
        to_rgb=True),
    dict(type='SeqPad', size_divisor=32),
    dict(type='MatchInstances', skip_nomatch=True),
    dict(
        type='VideoCollect',
        keys=[
            'img', 'gt_bboxes', 'gt_labels', 'gt_match_indices',
            'gt_instance_ids'
        ]),
    dict(type='SeqDefaultFormatBundle', ref_prefix='ref')
]
test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='MultiScaleFlipAug',
        img_scale=(1088, 1088),
        flip=False,
        transforms=[
            dict(type='Resize', keep_ratio=True),
            dict(type='RandomFlip'),
            dict(
                type='Normalize',
                mean=[123.675, 116.28, 103.53],
                std=[58.395, 57.12, 57.375],
                to_rgb=True),
            dict(type='Pad', size_divisor=32),
            dict(type='ImageToTensor', keys=['img']),
            dict(type='VideoCollect', keys=['img'])
        ])
]

data_root = 'data/MOT17/'
data = dict(
    samples_per_gpu=2,
    workers_per_gpu=2,
    train=dict(
        type='MOTChallengeDataset',
        visibility_thr=-1,
        ann_file='data/MOT17/annotations/half-train_cocoformat.json',
        img_prefix='data/MOT17/train',
        ref_img_sampler=dict(
            num_ref_imgs=1,
            frame_range=10,
            filter_key_img=True,
            method='uniform'),
        pipeline=train_pipeline),
    val=dict(
        type='MOTChallengeDataset',
        ann_file='data/MOT17/annotations/half-val_cocoformat.json',
        img_prefix='data/MOT17/train',
        ref_img_sampler=None,
        pipeline=test_pipeline),
    test=dict(
        type='MOTChallengeDataset',
        ann_file='data/MOT17/annotations/half-val_cocoformat.json',
        img_prefix='data/MOT17/train',
        ref_img_sampler=None,
        pipeline=test_pipeline))

optimizer = dict(type='SGD', lr=0.02, momentum=0.9, weight_decay=0.0001)
optimizer_config = dict(grad_clip=None)
checkpoint_config = dict(interval=1)
log_config = dict(interval=50, hooks=[dict(type='TextLoggerHook')])
dist_params = dict(backend='nccl')
log_level = 'INFO'
load_from = None
resume_from = None
workflow = [('train', 1)]
lr_config = dict(
    policy='step',
    warmup='linear',
    warmup_iters=100,
    warmup_ratio=0.01,
    step=[3])
total_epochs = 4
evaluation = dict(metric=['bbox', 'track'], interval=1)
search_metrics = ['MOTA', 'IDF1', 'FN', 'FP', 'IDs', 'MT', 'ML']
```

Of course, YOLO can also be used here for object detection.

Yolo_cfg

For a detailed introduction to YOLO, see my earlier blog post: https://blog.csdn.net/m0_74194018/article/details/140747393

```python
train_cfg = dict(type='EpochBasedTrainLoop', max_epochs=300, val_interval=10)
val_cfg = dict(type='ValLoop')
test_cfg = dict(type='TestLoop')

param_scheduler = [
    dict(
        type='mmdet.QuadraticWarmupLR',
        by_epoch=True,
        begin=0,
        end=5,
        convert_to_iter_based=True),
    dict(
        type='CosineAnnealingLR',
        eta_min=0.0005,
        begin=5,
        T_max=285,
        end=285,
        by_epoch=True,
        convert_to_iter_based=True),
    dict(type='ConstantLR', by_epoch=True, factor=1, begin=285, end=300)
]
optim_wrapper = dict(
    type='OptimWrapper',
    optimizer=dict(
        type='SGD', lr=0.01, momentum=0.9, weight_decay=0.0005,
        nesterov=True),
    paramwise_cfg=dict(norm_decay_mult=0.0, bias_decay_mult=0.0))
auto_scale_lr = dict(enable=False, base_batch_size=64)

default_scope = 'mmdet'
default_hooks = dict(
    timer=dict(type='IterTimerHook'),
    logger=dict(type='LoggerHook', interval=50),
    param_scheduler=dict(type='ParamSchedulerHook'),
    checkpoint=dict(type='CheckpointHook', interval=10, max_keep_ckpts=3),
    sampler_seed=dict(type='DistSamplerSeedHook'),
    visualization=dict(type='DetVisualizationHook'))
env_cfg = dict(
    cudnn_benchmark=False,
    mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),
    dist_cfg=dict(backend='nccl'))
vis_backends = [dict(type='LocalVisBackend')]
visualizer = dict(
    type='DetLocalVisualizer',
    vis_backends=[dict(type='LocalVisBackend')],
    name='visualizer')
log_processor = dict(type='LogProcessor', window_size=50, by_epoch=True)
log_level = 'INFO'
load_from = 'https://download.openmmlab.com/mmdetection/' \
    'v2.0/yolox/yolox_s_8x8_300e_coco/' \
    'yolox_s_8x8_300e_coco_20211121_095711-4592a793.pth'
resume = False

img_scale = (640, 640)
model = dict(
    type='YOLOX',
    data_preprocessor=dict(
        type='DetDataPreprocessor',
        pad_size_divisor=32,
        batch_augments=[
            dict(
                type='BatchSyncRandomResize',
                random_size_range=(480, 800),
                size_divisor=32,
                interval=10)
        ]),
    backbone=dict(
        type='CSPDarknet',
        deepen_factor=0.33,
        widen_factor=0.5,
        out_indices=(2, 3, 4),
        use_depthwise=False,
        spp_kernal_sizes=(5, 9, 13),
        norm_cfg=dict(type='BN', momentum=0.03, eps=0.001),
        act_cfg=dict(type='Swish')),
    neck=dict(
        type='YOLOXPAFPN',
        in_channels=[128, 256, 512],
        out_channels=128,
        num_csp_blocks=1,
        use_depthwise=False,
        upsample_cfg=dict(scale_factor=2, mode='nearest'),
        norm_cfg=dict(type='BN', momentum=0.03, eps=0.001),
        act_cfg=dict(type='Swish')),
    bbox_head=dict(
        type='YOLOXHead',
        num_classes=1,
        in_channels=128,
        feat_channels=128,
        stacked_convs=2,
        strides=(8, 16, 32),
        use_depthwise=False,
        norm_cfg=dict(type='BN', momentum=0.03, eps=0.001),
        act_cfg=dict(type='Swish'),
        loss_cls=dict(
            type='CrossEntropyLoss',
            use_sigmoid=True,
            reduction='sum',
            loss_weight=1.0),
        loss_bbox=dict(
            type='IoULoss',
            mode='square',
            eps=1e-16,
            reduction='sum',
            loss_weight=5.0),
        loss_obj=dict(
            type='CrossEntropyLoss',
            use_sigmoid=True,
            reduction='sum',
            loss_weight=1.0),
        loss_l1=dict(type='L1Loss', reduction='sum', loss_weight=1.0)),
    train_cfg=dict(assigner=dict(type='SimOTAAssigner', center_radius=2.5)),
    test_cfg=dict(score_thr=0.01, nms=dict(type='nms', iou_threshold=0.65)))

data_root = 'data/coco/'
dataset_type = 'CocoDataset'
backend_args = dict(backend='local')

train_pipeline = [
    dict(type='Mosaic', img_scale=(640, 640), pad_val=114.0),
    dict(
        type='RandomAffine',
        scaling_ratio_range=(0.1, 2),
        border=(-320, -320)),
    dict(
        type='MixUp',
        img_scale=(640, 640),
        ratio_range=(0.8, 1.6),
        pad_val=114.0),
    dict(type='YOLOXHSVRandomAug'),
    dict(type='RandomFlip', prob=0.5),
    dict(type='Resize', scale=(640, 640), keep_ratio=True),
    dict(
        type='Pad',
        pad_to_square=True,
        pad_val=dict(img=(114.0, 114.0, 114.0))),
    dict(type='FilterAnnotations', min_gt_bbox_wh=(1, 1), keep_empty=False),
    dict(type='PackDetInputs')
]
train_dataset = dict(
    type='MultiImageMixDataset',
    dataset=dict(
        type='CocoDataset',
        data_root='data/coco/',
        ann_file='annotations/instances_train2017.json',
        data_prefix=dict(img='train2017/'),
        pipeline=[
            dict(type='LoadImageFromFile', backend_args=dict(backend='local')),
            dict(type='LoadAnnotations', with_bbox=True)
        ],
        filter_cfg=dict(filter_empty_gt=False, min_size=32)),
    pipeline=train_pipeline)
test_pipeline = [
    dict(type='LoadImageFromFile', backend_args=dict(backend='local')),
    dict(type='Resize', scale=(640, 640), keep_ratio=True),
    dict(
        type='Pad',
        pad_to_square=True,
        pad_val=dict(img=(114.0, 114.0, 114.0))),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(
        type='PackDetInputs',
        meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',
                   'scale_factor'))
]

train_dataloader = dict(
    batch_size=8,
    num_workers=4,
    persistent_workers=True,
    sampler=dict(type='DefaultSampler', shuffle=True),
    dataset=dict(
        type='MultiImageMixDataset',
        dataset=dict(
            type='CocoDataset',
            data_root='data/coco/',
            ann_file='annotations/coco_face_train.json',
            data_prefix=dict(img='train2017/'),
            pipeline=[
                dict(
                    type='LoadImageFromFile',
                    backend_args=dict(backend='local')),
                dict(type='LoadAnnotations', with_bbox=True)
            ],
            filter_cfg=dict(filter_empty_gt=False, min_size=32),
            metainfo=dict(CLASSES=('person', ), PALETTE=(220, 20, 60))),
        pipeline=train_pipeline))
val_dataloader = dict(
    batch_size=8,
    num_workers=4,
    persistent_workers=True,
    drop_last=False,
    sampler=dict(type='DefaultSampler', shuffle=False),
    dataset=dict(
        type='CocoDataset',
        data_root='data/coco/',
        ann_file='annotations/coco_face_val.json',
        data_prefix=dict(img='val2017/'),
        test_mode=True,
        pipeline=test_pipeline,
        metainfo=dict(CLASSES=('person', ), PALETTE=(220, 20, 60))))
test_dataloader = dict(
    batch_size=8,
    num_workers=4,
    persistent_workers=True,
    drop_last=False,
    sampler=dict(type='DefaultSampler', shuffle=False),
    dataset=dict(
        type='CocoDataset',
        data_root='data/coco/',
        ann_file='annotations/coco_face_val.json',
        data_prefix=dict(img='val2017/'),
        test_mode=True,
        pipeline=test_pipeline,
        metainfo=dict(CLASSES=('person', ), PALETTE=(220, 20, 60))))

val_evaluator = dict(
    type='CocoMetric',
    ann_file='data/coco/annotations/coco_face_val.json',
    metric='bbox')
test_evaluator = dict(
    type='CocoMetric',
    ann_file='data/coco/annotations/instances_val2017.json',
    metric='bbox')

max_epochs = 300
num_last_epochs = 15
interval = 10
base_lr = 0.01

custom_hooks = [
    dict(type='YOLOXModeSwitchHook', num_last_epochs=15, priority=48),
    dict(type='SyncNormHook', priority=48),
    dict(
        type='EMAHook',
        ema_type='ExpMomentumEMA',
        momentum=0.0001,
        strict_load=False,
        update_buffers=True,
        priority=49)
]
metainfo = dict(CLASSES=('person', ), PALETTE=(220, 20, 60))
launcher = 'pytorch'
```

IV. The output folder

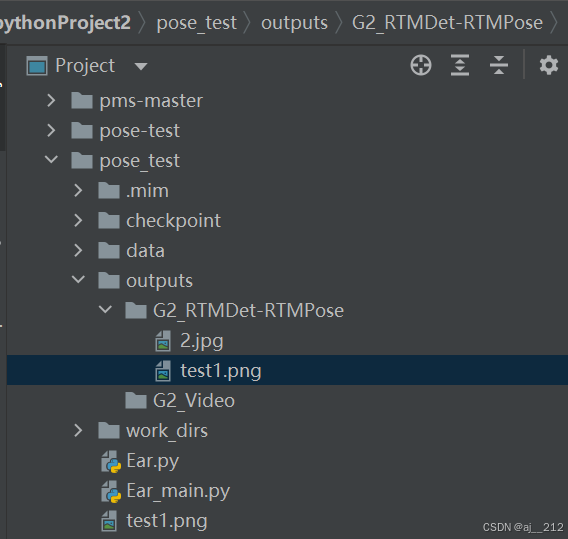

This folder mainly stores the images produced by model inference.

work_dirs folder: mainly stores my training logs.
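If you want to look at the loss curve from those logs yourself, here is a small sketch that pulls one scalar out of a JSON-lines log. It assumes the log has one JSON object per line with a `step` field, which is how MMEngine's local visualization backend writes its `scalars.json`. The exact path inside work_dirs and the key names depend on your run, so treat the ones in the usage note as placeholders.

```python
import json


def collect_scalar(lines, key):
    """Collect (step, value) pairs for `key` from JSON-lines log text.

    lines: any iterable of strings, e.g. an open file object.
    key:   the scalar to extract, e.g. 'loss' (assumed key name).
    """
    values = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if key in record:
            values.append((record.get("step"), record[key]))
    return values
```

Usage would look like `with open("work_dirs/<run>/vis_data/scalars.json") as f: curve = collect_scalar(f, "loss")`, after which `curve` can be passed to any plotting library.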

Ear.py

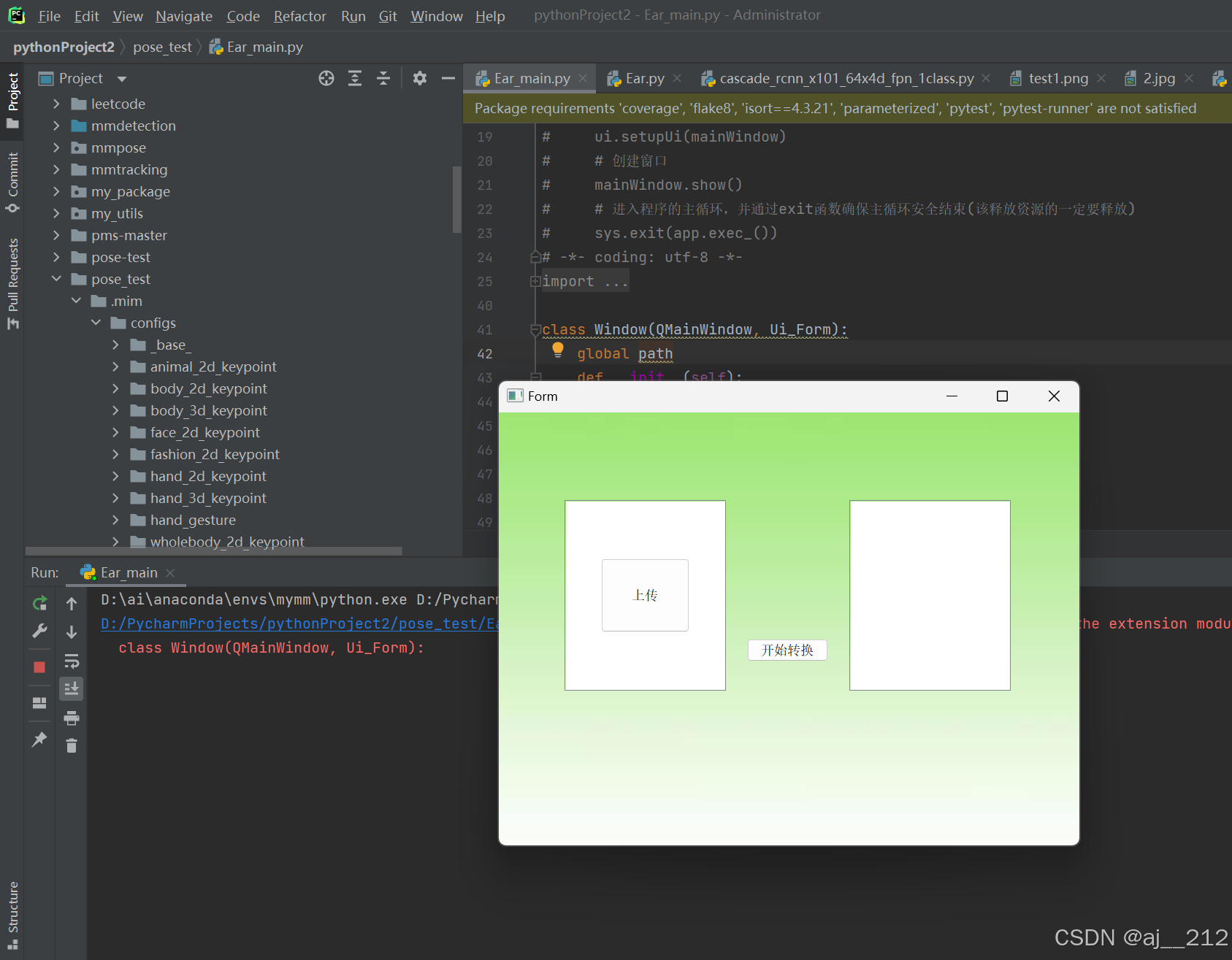

(This file uses Python's PyQt framework to build a very simple Qt interface.)

```python
# -*- coding: utf-8 -*-

# Form implementation generated from reading ui file 'Ear.ui'
#
# Created by: PyQt5 UI code generator 5.15.9
#
# WARNING: Any manual changes made to this file will be lost when pyuic5 is
# run again.  Do not edit this file unless you know what you are doing.

from PyQt5 import QtCore, QtGui, QtWidgets


class Ui_Form(object):
    def setupUi(self, Form):
        Form.setObjectName("Form")
        Form.resize(795, 593)
        self.listWidget = QtWidgets.QListWidget(Form)
        self.listWidget.setGeometry(QtCore.QRect(90, 120, 221, 261))
        self.listWidget.setObjectName("listWidget")
        self.listWidget_2 = QtWidgets.QListWidget(Form)
        self.listWidget_2.setGeometry(QtCore.QRect(480, 120, 221, 261))
        self.listWidget_2.setObjectName("listWidget_2")
        self.pushButton = QtWidgets.QPushButton(Form)
        self.pushButton.setGeometry(QtCore.QRect(340, 310, 111, 31))
        self.pushButton.setObjectName("pushButton")
        self.label = QtWidgets.QLabel(Form)
        self.label.setGeometry(QtCore.QRect(-30, -70, 1256, 707))
        self.label.setText("")
        self.label.setPixmap(QtGui.QPixmap("C:/Users/lenovo/Pictures/0d9289b1001061e53ca12b2063016c0.png"))
        self.label.setScaledContents(True)
        self.label.setWordWrap(False)
        self.label.setOpenExternalLinks(True)
        self.label.setObjectName("label")
        self.pushButton_2 = QtWidgets.QPushButton(Form)
        self.pushButton_2.setGeometry(QtCore.QRect(140, 200, 121, 101))
        self.pushButton_2.setBaseSize(QtCore.QSize(0, 0))
        self.pushButton_2.setIconSize(QtCore.QSize(16, 16))
        self.pushButton_2.setShortcut("")
        self.pushButton_2.setCheckable(False)
        self.pushButton_2.setAutoRepeatInterval(100)
        self.pushButton_2.setObjectName("pushButton_2")
        self.label.raise_()
        self.listWidget.raise_()
        self.listWidget_2.raise_()
        self.pushButton.raise_()
        self.pushButton_2.raise_()

        self.retranslateUi(Form)
        QtCore.QMetaObject.connectSlotsByName(Form)

    def retranslateUi(self, Form):
        _translate = QtCore.QCoreApplication.translate
        Form.setWindowTitle(_translate("Form", "Form"))
        self.pushButton.setText(_translate("Form", "开始转换"))  # "Start conversion"
        self.pushButton_2.setText(_translate("Form", "上传"))  # "Upload"
```

Next, open Ear_main.py and run it to see the result:

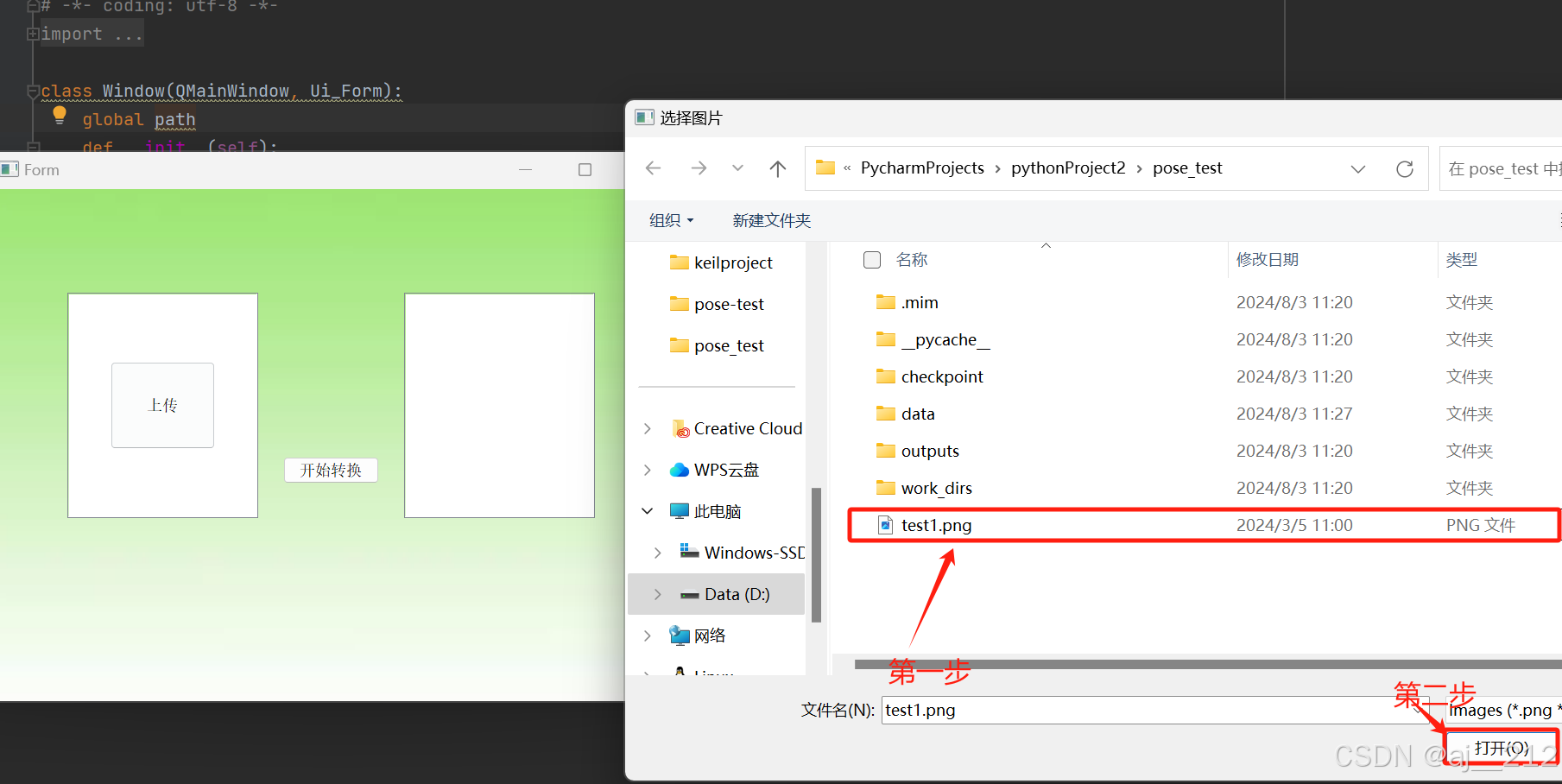

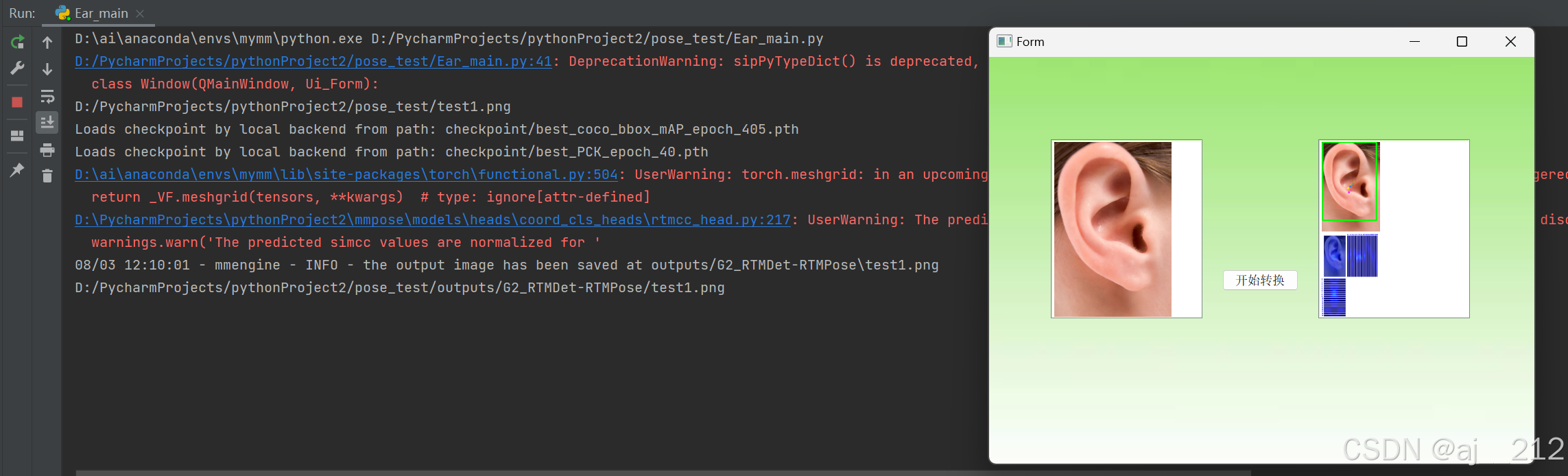

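V. Upload the side-view image test1.png that I prepared for you in advance, then click the "开始转换" (Start conversion) button

The processed image is saved to the outputs folder.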

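There are two warnings, probably caused by version conflicts. They are not errors and can be ignored.

Result image

That completes the project! Don't forget to like and bookmark!!! Keep following me and I'll teach you more CV projects!!!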