
Part 1. Building a Highly Available OpenStack (Queens) Cluster: Architecture and Environment Preparation

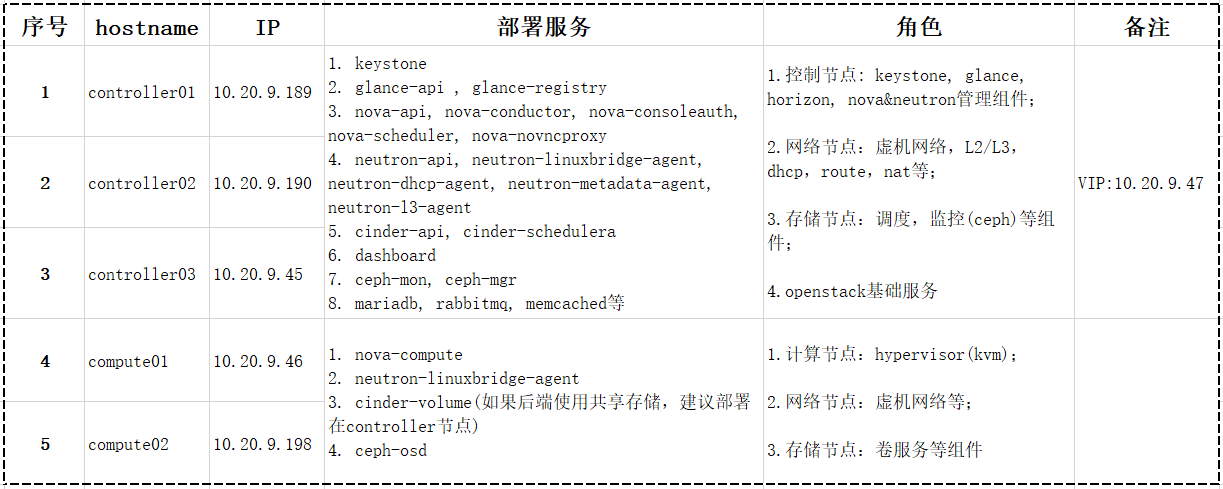

I. Architecture Design

II. Initializing the Base Environment

1. Create an SSH key pair on the management node (to simplify data transfer)

Run this on all controller nodes.

    # ssh-keygen    # press Enter at every prompt
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    f7:4f:9a:63:83:ec:00:de:5f:4e:dd:46:39:0e:95:6d root@controller01
    The key's randomart image is:
    +--[ RSA 2048]----+
    | |
    | o|
    | oE|
    | ...|
    | . S . . o.|
    | . o . . .oo.|
    | . o. .+ o.o|
    | oo++= . |
    | .o.+o. |
    +-----------------+
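For unattended setups the interactive prompts can be skipped. The following is a minimal sketch, assuming an RSA key with an empty passphrase is acceptable in your environment; the function name `ensure_keypair` is illustrative and does not come from the original setup.

```shell
#!/bin/bash
# ensure_keypair is a hypothetical helper, not part of the original article.
# It creates an RSA key pair without prompting and leaves an existing key untouched.
ensure_keypair() {
    local key_file="${1:-$HOME/.ssh/id_rsa}"
    if [ -f "${key_file}" ]; then
        echo "key already exists: ${key_file}"
        return 0
    fi
    mkdir -p "$(dirname "${key_file}")"
    # -N "" sets an empty passphrase; -q suppresses the banner and randomart output
    ssh-keygen -q -t rsa -b 2048 -N "" -f "${key_file}"
}
```

Calling `ensure_keypair /root/.ssh/id_rsa` on each controller node should be safe to repeat, since an existing key is never overwritten.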

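Distribute the public key. To avoid typing passwords, first install sshpass with yum install sshpass -y. Once the keys have been distributed, promptly delete the sshpass line from the script, since it contains the password in plain text.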

/opt/scripts/shell/ssh-copy.sh

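    #!/bin/bash
    # All cluster nodes
    IP="
    10.20.9.189
    10.20.9.190
    10.20.9.45
    10.20.9.46
    10.20.9.198
    "
    # The other two controller nodes
    MASTER="
    10.20.9.190
    10.20.9.45
    "
    for node in ${MASTER};do
    #for node in ${IP};do
        # Key distribution: uses ${node} rather than a fixed address, with options placed before the host
        #sshpass -p 123456 ssh-copy-id -p 22 -o StrictHostKeyChecking=no ${node}
        #scp /etc/hosts ${node}:/etc/
        scp /etc/my.cnf.d/openstack.cnf ${node}:/etc/my.cnf.d/openstack.cnf
    done

2. Change hostnames and hosts resolution (an internal DNS server can be used in production)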

Change the hostname (run each command on the corresponding host).

    hostnamectl --static set-hostname controller01
    hostnamectl --static set-hostname controller02
    hostnamectl --static set-hostname controller03
    hostnamectl --static set-hostname compute01
    hostnamectl --static set-hostname compute02
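To avoid running the wrong command on the wrong host, the name can be derived from the node's address. This is only a sketch: `hostname_for_ip` is a hypothetical helper, and the mapping simply mirrors the addresses this article assigns to each node.

```shell
#!/bin/bash
# hostname_for_ip is a hypothetical helper, not part of the original article.
# The IP-to-name mapping follows the /etc/hosts entries used in this article.
hostname_for_ip() {
    case "$1" in
        10.20.9.189) echo controller01 ;;
        10.20.9.190) echo controller02 ;;
        10.20.9.45)  echo controller03 ;;
        10.20.9.46)  echo compute01 ;;
        10.20.9.198) echo compute02 ;;
        *) echo "unknown address: $1" >&2; return 1 ;;
    esac
}

# Example (commented out because it changes the system hostname):
# name="$(hostname_for_ip 10.20.9.189)" && hostnamectl --static set-hostname "${name}"
```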

Update the hosts file and send it to every node (using the script above).

    cat <<EOF>>/etc/hosts
    10.20.9.189 controller01 controller01.local.com
    10.20.9.190 controller02 controller02.local.com
    10.20.9.45 controller03 controller03.local.com
    10.20.9.46 compute01 compute01.local.com
    10.20.9.198 compute02 compute02.local.com
    10.20.9.47 controller
    EOF
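Because the heredoc appends with `>>`, running it twice duplicates every entry. A minimal sketch of an idempotent alternative follows; `add_host_entry` is a hypothetical helper introduced here for illustration.

```shell
#!/bin/bash
# add_host_entry is a hypothetical helper, not part of the original article.
# It appends a line to a hosts file only if that exact line is not already present.
add_host_entry() {
    local hosts_file="$1"
    local entry="$2"
    # -q quiet, -x match the whole line, -F treat the entry as a fixed string
    if ! grep -qxF -- "${entry}" "${hosts_file}" 2>/dev/null; then
        echo "${entry}" >> "${hosts_file}"
    fi
}
```

For example, `add_host_entry /etc/hosts "10.20.9.189 controller01 controller01.local.com"` can be repeated without growing the file.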

Change the command inside the for loop to: scp /etc/hosts ${node}:/etc/

sh /opt/scripts/shell/ssh-copy.sh
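Editing the loop body for every file is easy to get wrong. One possible alternative, sketched here under the assumption that key-based SSH access is already in place, takes the file and the node list as arguments. The name `distribute_file` and the `DRY_RUN` variable are illustrative, not part of the original script.

```shell
#!/bin/bash
# distribute_file is a hypothetical helper, not part of the original article.
# Usage: distribute_file SRC DEST NODE [NODE...]
# With DRY_RUN=1 it only prints the scp commands it would run.
distribute_file() {
    local src="$1"
    local dest="$2"
    shift 2
    local node
    for node in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "scp ${src} ${node}:${dest}"
        else
            # Report a failed copy but continue with the remaining nodes
            scp "${src}" "${node}:${dest}" || echo "copy to ${node} failed" >&2
        fi
    done
}
```

Running `DRY_RUN=1 distribute_file /etc/hosts /etc/ 10.20.9.190 10.20.9.45` first shows what would be copied before anything is changed on the remote nodes.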

3. Configure time synchronization (clocks across the cluster must stay consistent)

    # On CentOS 7, chrony can be installed
    yum install chrony -y

In production, synchronize directly against a local time server.
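The article does not show the chrony configuration itself. As a hedged sketch, a minimal `/etc/chrony.conf` pointing at a single local server could be rendered like this; `render_chrony_conf` is a hypothetical helper and `ntp.example.internal` is a placeholder for your own time server.

```shell
#!/bin/bash
# render_chrony_conf is a hypothetical helper, not part of the original article.
# The server name passed in is a placeholder for your own local time server.
render_chrony_conf() {
    local ntp_server="$1"
    cat <<EOF
server ${ntp_server} iburst
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
EOF
}

# Example (commented out because it overwrites system configuration):
# render_chrony_conf ntp.example.internal > /etc/chrony.conf
# systemctl enable chronyd && systemctl restart chronyd
# chronyc sources -v
```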

4. Install the OpenStack Queens base environment on the cluster

    # Install the Queens yum repository
    yum install centos-release-openstack-queens -y
    yum upgrade -y
    # Install the OpenStack client
    yum install python-openstackclient -y
    # openstack-selinux is required when SELinux is enabled; in this setup SELinux is already disabled by default
    yum install openstack-selinux -y

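5. If the firewall is enabled, open the required ports (adjust the ports to your environment); skip this step if the firewall is disabled

Configure iptables consistently on all nodes in advance; controller01 is used as the example here.

In the initial environment, iptables already replaces the firewalld that ships with CentOS 7.x, and SELinux is disabled.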

    [root@controller01 ~]# vim /etc/sysconfig/iptables
    # mariadb
    # tcp3306: service listening port;
    # tcp&udp4567: tcp carries data replication; multicast replication uses both tcp and udp;
    # tcp4568: incremental state transfer;
    # tcp4444: other state snapshot transfer;
    # tcp9200: heartbeat check
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 3306 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 4444 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 4567:4568 -j ACCEPT
    -A INPUT -p udp -m state --state NEW -m udp --dport 4567 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 9200 -j ACCEPT
    # rabbitmq
    # tcp4369: cluster peer discovery;
    # tcp5671,5672: used by AMQP 0.9.1 and 1.0 clients;
    # tcp5673: not a default rabbitmq port; used here as the haproxy frontend listening port, to avoid the startup failure that occurs when the backend service and haproxy run on the same node; not needed if rabbitmq's own clustering mechanism is used;
    # tcp15672: used for HTTP API and rabbitmqadmin access, available only when the management plugin is enabled;
    # tcp25672: used for erlang distributed node and tool communication
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 4369 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 5671:5673 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 15672:15673 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 25672 -j ACCEPT
    # memcached
    # tcp11211: service listening port;
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 11211 -j ACCEPT
    # pcs
    # tcp2224: pcs web management service listening port; resources can be created, viewed, and deleted through the web interface; the port is set in /usr/lib/pcsd/ssl.rb;
    # udp5404-5405: multicast communication ports of the corosync cluster middleware
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 2224 -j ACCEPT
    -A INPUT -p udp -m state --state NEW -m udp --dport 5404:5405 -j ACCEPT
    # haproxy
    # tcp1080: haproxy listening port
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 1080 -j ACCEPT
    # dashboard
    # tcp80: dashboard listening port
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT
    # keystone
    # tcp35357: admin-api port;
    # tcp5000: public/internal-api port
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 35357 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 5000 -j ACCEPT
    # glance
    # tcp9191: glance-registry port;
    # tcp9292: glance-api port
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 9191 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 9292 -j ACCEPT
    # nova
    # tcp8773: nova-ec2-api port;
    # tcp8774: nova-compute-api port;
    # tcp8775: nova-metadata-api port;
    # tcp8778: placement-api port;
    # tcp6080: vncproxy port
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 8773:8775 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 8778 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 6080 -j ACCEPT
    # cinder
    # tcp8776: cinder-api port
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 8776 -j ACCEPT
    # neutron
    # tcp9696: neutron-api port;
    # udp4789: vxlan destination port
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 9696 -j ACCEPT
    -A INPUT -p udp -m state --state NEW -m udp --dport 4789 -j ACCEPT
    # ceph
    # tcp6789: ceph-mon port;
    # tcp6800~7300: ceph-osd ports
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 6789 -j ACCEPT
    -A INPUT -p tcp -m state --state NEW -m tcp --dport 6800:7300 -j ACCEPT
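Every rule in the listing follows the same pattern, so the lines can be generated rather than typed by hand. The sketch below assumes that pattern holds for any ports you add; `accept_rule` and `print_example_rules` are hypothetical helpers, not something the original setup provides.

```shell
#!/bin/bash
# accept_rule is a hypothetical helper, not part of the original article.
# Usage: accept_rule PROTO PORT_OR_RANGE   (for example: accept_rule tcp 5671:5673)
accept_rule() {
    local proto="$1"
    local port="$2"
    echo "-A INPUT -p ${proto} -m state --state NEW -m ${proto} --dport ${port} -j ACCEPT"
}

# Print the keystone and glance rules in the same form as the listing.
print_example_rules() {
    local port
    for port in 35357 5000 9191 9292; do
        accept_rule tcp "${port}"
    done
}
```

The output is meant to be reviewed and pasted into `/etc/sysconfig/iptables`, not applied automatically.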

Restart the firewall.

[root@controller01 ~]# service iptables restart
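After the restart it can be worth confirming that a given rule is actually loaded. A minimal sketch, assuming the rules are read from standard input in the same `-A INPUT ...` form used above (for instance from `iptables -S INPUT`); `has_dport_rule` is a hypothetical helper and matches only the exact port or range as written in the rule.

```shell
#!/bin/bash
# has_dport_rule is a hypothetical helper, not part of the original article.
# It reads rules from stdin and succeeds if an ACCEPT rule for the given
# port (or port range, exactly as written in the rule) is present.
has_dport_rule() {
    local port="$1"
    grep -q -- "--dport ${port} -j ACCEPT"
}

# Example (commented out because it needs root and a running iptables):
# iptables -S INPUT | has_dport_rule 3306 && echo "3306 is open"
```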