1. Introduction

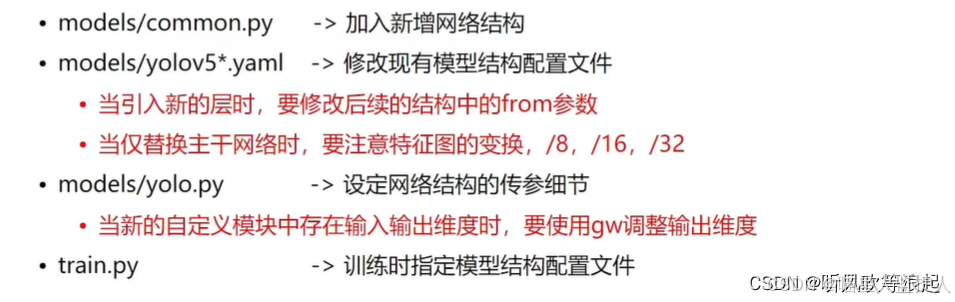

The steps for replacing the YOLOv5 backbone network are as follows; the procedure is the same as before.

2. Modify the common file

Append the following code to the very end of the common file (`models/common.py` in YOLOv5):

    from einops import rearrange
    import torch
    import torch.nn as nn


    # Transformer attention module definition
    class TAttention(nn.Module):
        def __init__(self, dim, heads=8, dim_head=64, dropout=0.):
            super().__init__()
            # Total width of the concatenated heads
            inner_dim = dim_head * heads
            # An output projection is only needed when the heads change the width
            project_out = not (heads == 1 and dim_head == dim)

            self.heads = heads
            # Scaling factor for the dot-product attention scores
            self.scale = dim_head ** -0.5

            self.attend = nn.Softmax(dim=-1)
            # One linear layer produces queries, keys, and values together
            self.to_qkv = nn.Linear(dim, inner_dim * 3, bias=False)

            self.to_out = nn.Sequential(
                nn.Linear(inner_dim, dim),
                nn.Dropout(dropout)
            ) if project_out else nn.Identity()

        def forward(