
Web scraper: scraping Honor of Kings (王者荣耀) hero skill information (code and code flow)


Code


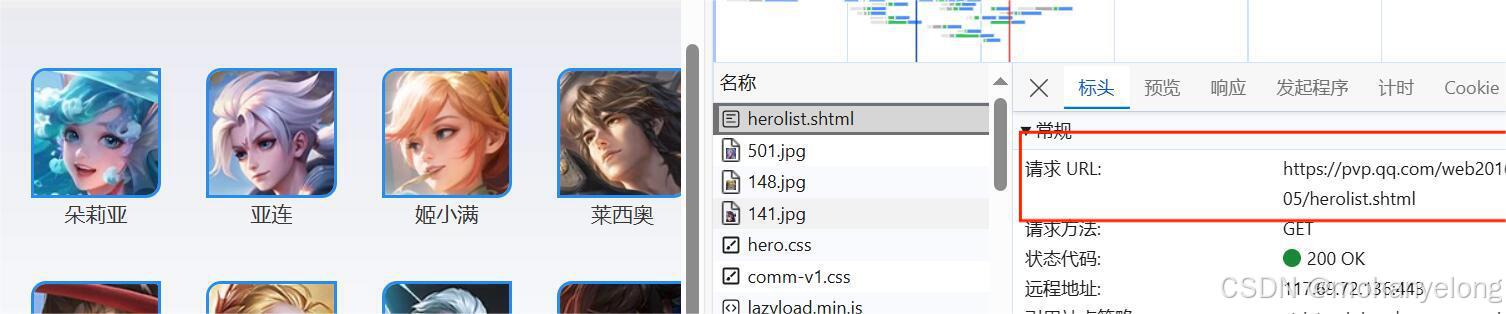

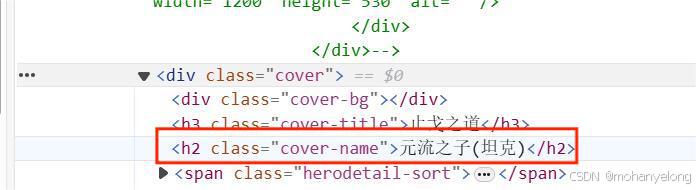

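```python
# Scrape Honor of Kings (王者荣耀) hero skill information
import time

from selenium import webdriver
from selenium.webdriver.common.by import By

if __name__ == '__main__':
    # 1. URL of the hero list page
    url = "https://pvp.qq.com/web201605/herolist.shtml"

    # 2. Send the request by driving an Edge browser
    driver = webdriver.Edge()
    driver.get(url)
    time.sleep(3)  # pause so the scraper is less likely to be detected

    # 3. The raw page source is available as driver.page_source if needed

    # 4. Parse the data: each <li> in the hero list links to a hero's detail page
    li_list = driver.find_elements(By.XPATH, "//ul[@class='herolist clearfix']/li")
    # Collect every detail-page URL first: once the driver navigates away,
    # the <li> elements from the list page can no longer be used
    hero_url_list = [li.find_element(By.XPATH, "a").get_attribute("href") for li in li_list]

    # The with-block guarantees the output file is flushed and closed
    with open("./honorKing.txt", "w", encoding='utf8') as fp:
        for url in hero_url_list:
            time.sleep(3)  # pause between pages so the scraper is less likely to be detected
            driver.get(url)
            hero_name = driver.find_element(By.XPATH, "//h2[@class='cover-name']").text
            div_list = driver.find_elements(By.XPATH, "//div[@class='skill-show']/div")  # all skill blocks
            fp.write(hero_name + "\n")  # write the hero name
            for index, div in enumerate(div_list):
                # The skill text is hidden and Selenium's .text returns only visible text,
                # so make the matching "show-list" element visible first
                js = f'document.getElementsByClassName("show-list")[{index}].style.display="block"'
                driver.execute_script(js)
                skill_name = div.find_element(By.XPATH, "p[1]/b").text
                skill_desc = div.find_element(By.XPATH, "p[2]").text
                fp.write(skill_name + "---->" + skill_desc + "\n")
                print(skill_name, skill_desc)
            # Uncomment to scrape only two heroes as a quick sample
            # if hero_url_list.index(url) == 1:
            #     break

    driver.quit()  # quit() ends the whole browser session; close() only closes the current window
```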
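The scraper writes `honorKing.txt` as one hero-name line followed by one `skill name---->skill description` line per skill. Below is a minimal sketch for reading that file back into a dictionary. It assumes exactly that layout and that every description fits on a single line. Selenium's `.text` can contain line breaks, which would split a description across lines and break this assumption. The function name `parse_skills` is hypothetical and not part of the original code.

```python
from typing import Dict, Iterable, List, Optional, Tuple

# Separator the scraper writes between skill name and skill description
SEPARATOR = "---->"


def parse_skills(lines: Iterable[str]) -> Dict[str, List[Tuple[str, str]]]:
    """Hypothetical helper: group `name---->description` lines under the
    hero-name line that precedes them (assumes one skill per line)."""
    heroes: Dict[str, List[Tuple[str, str]]] = {}
    current: Optional[str] = None
    for raw in lines:
        line = raw.rstrip("\n")
        if not line:
            continue  # ignore blank lines
        if SEPARATOR in line and current is not None:
            # Split on the first separator only, in case a description contains it
            name, desc = line.split(SEPARATOR, 1)
            heroes[current].append((name, desc))
        else:
            # A line without the separator starts a new hero
            current = line
            heroes.setdefault(current, [])
    return heroes
```

For example, `with open("./honorKing.txt", encoding="utf8") as f: skills = parse_skills(f)` maps each hero name to a list of `(skill name, description)` pairs.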

Code flow: