[UE5/UE4] A Detailed Tutorial: Integrating the iFlytek Voice Wake-Up SDK with Continuous Listening from Startup (Fixing Error Code 10102)

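Preface

Environment: Windows

UE version: UE4.27 or earlier

You need some C++ background, or you can write your code by following mine line by line

The Offline Voice Recognition plugin is used as the recording plugin (for recording only)

This is built on top of https://github.com/zhangmei126/XunFei

For the speech recognition part, see the CSDN article "UE4如何接入科大讯飞的语音识别" (How to integrate iFlytek speech recognition into UE4)

On top of that I added voice wake-up. Voice wake-up and the speech recognition covered in that article are actually two separate modules

Using the iFlytek plugin requires calling MSPLogin, which means you have to register (log in) first

The plugin's SpeechInit() method already does that registration for us. If you can write the registration yourself, the voice wake-up part of this article does not depend on the engine version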
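If you do want to write the registration yourself, here is a minimal sketch of the parameter string involved. It assumes the usual "appid = ..., work_dir = ." layout from the SDK samples; the helper name BuildLoginParams and the placeholder appid are mine, not part of the plugin. The MSPLogin and MSPLogout calls are only shown as comments so the snippet compiles without the SDK headers.

```cpp
#include <string>

// Hypothetical helper (not part of the plugin): builds the parameter string
// that would be handed to MSPLogin. The layout is an assumption based on the
// SDK samples; check it against your SDK version.
std::string BuildLoginParams(const std::string& AppId)
{
    return "appid = " + AppId + ", work_dir = .";
}

// With the SDK headers available, the calls would look roughly like this:
//   const std::string Params = BuildLoginParams("your_appid");
//   int Ret = MSPLogin(nullptr, nullptr, Params.c_str());
//   if (Ret != MSP_SUCCESS) { /* log Ret and stop here */ }
//   ...
//   MSPLogout(); // release the registration when the application shuts down
```

The point is only that registration has to succeed before any QIVW call is made, whichever engine version you are on.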

Voice wake-up environment setup

After following "UE4如何接入科大讯飞的语音识别" to integrate the iFlytek SDK and installing the Offline Voice Recognition plugin, make sure both are enabled under Plugins

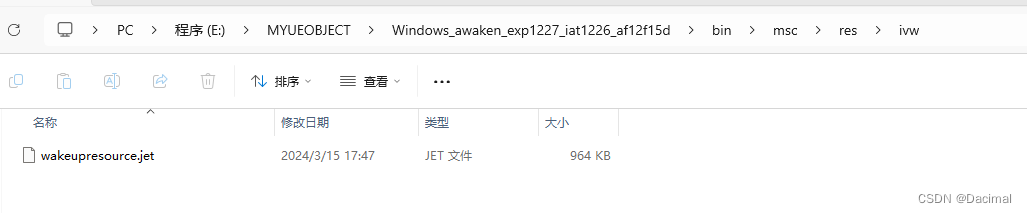

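Make sure the SDK you downloaded is the Windows version and includes the following SDK packages

Make sure the downloaded SDK has been imported correctly and that the appid is configured correctly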

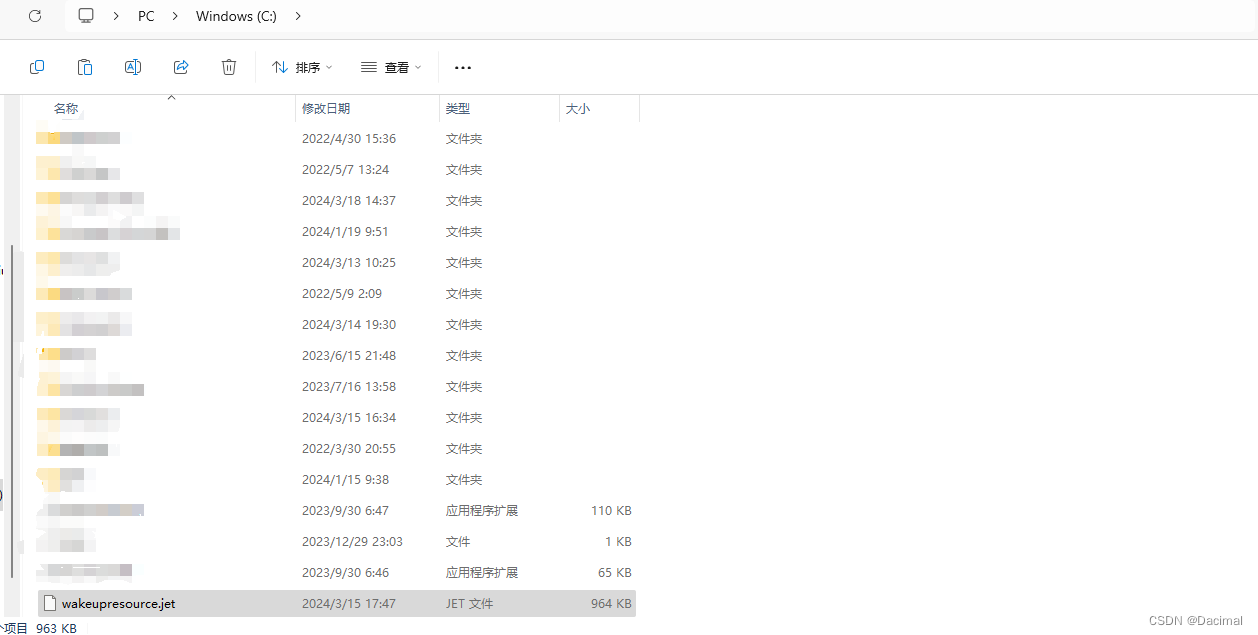

Make sure that, after downloading the SDK, you have copied Windows_awaken_exp1227_iat1226_af12f15d\bin\msc\res\ivw\wakeupresource.jet to the root of your C: drive


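If you came to this article because of error code 10102:

This is an iFlytek pitfall: the path to wakeupresource.jet must be an absolute path

In C++ that also means the backslash has to be escaped as "\\", i.e. const char* params = "ivw_threshold=0:5, ivw_res_path =fo|c:\\wakeupresource.jet";

That resolves 10102. It really is that simple, but it is a nasty trap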

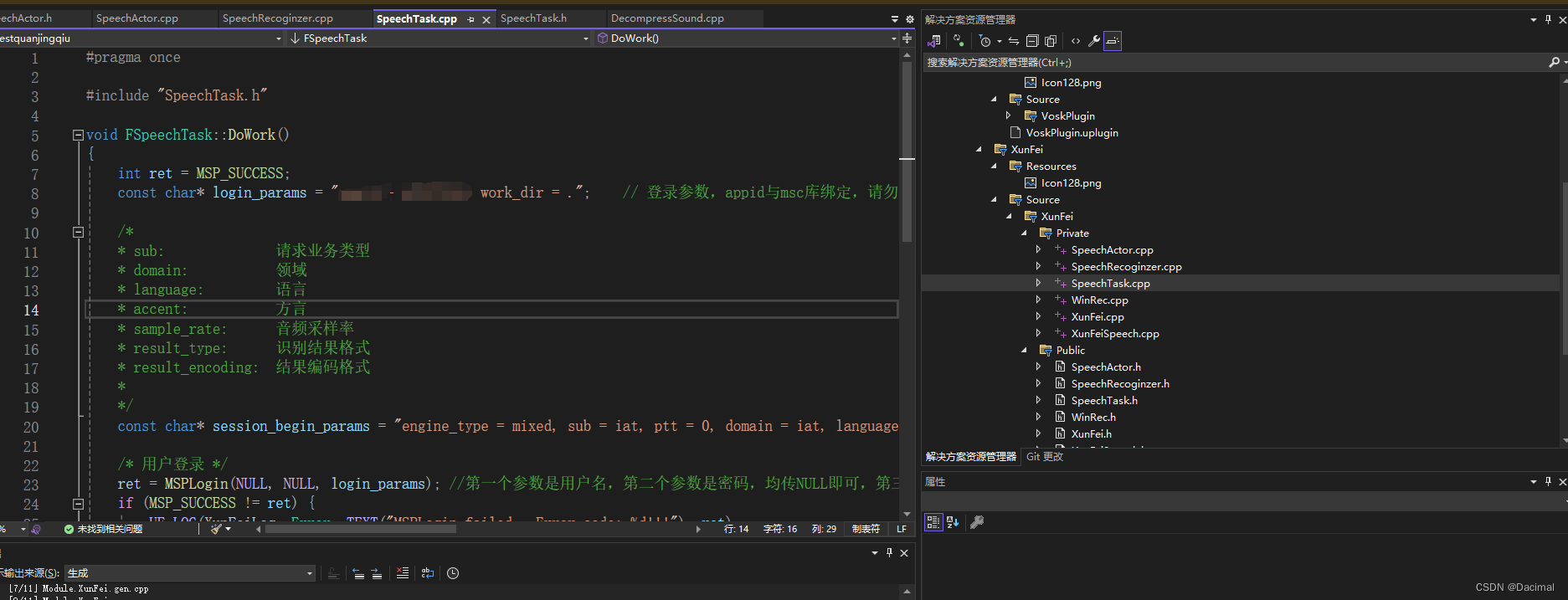

Open Visual Studio

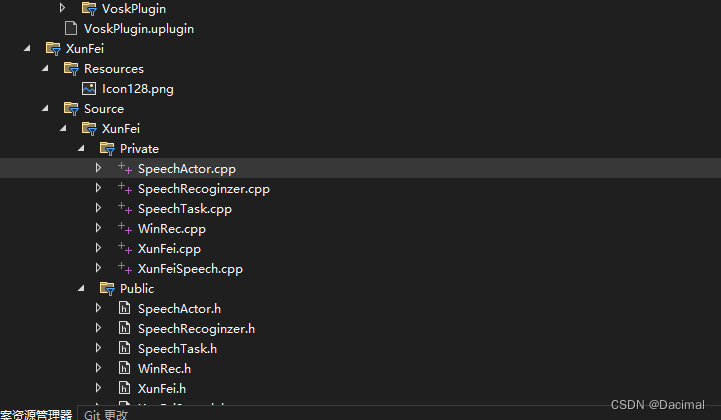

- Open the iFlytek plugin folder

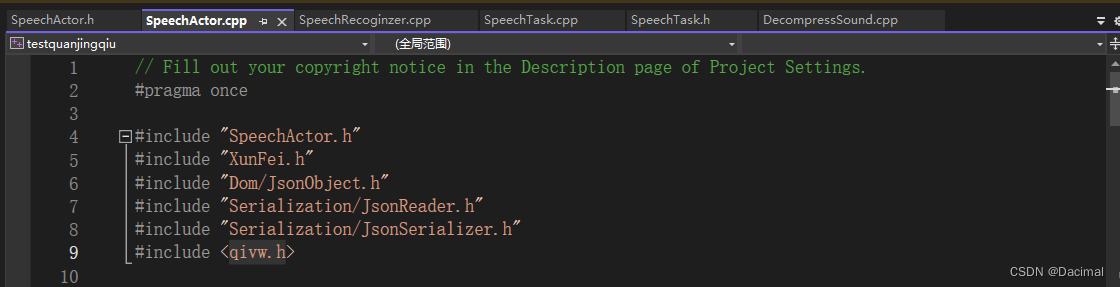

- Include qivw.h in SpeechActor.cpp

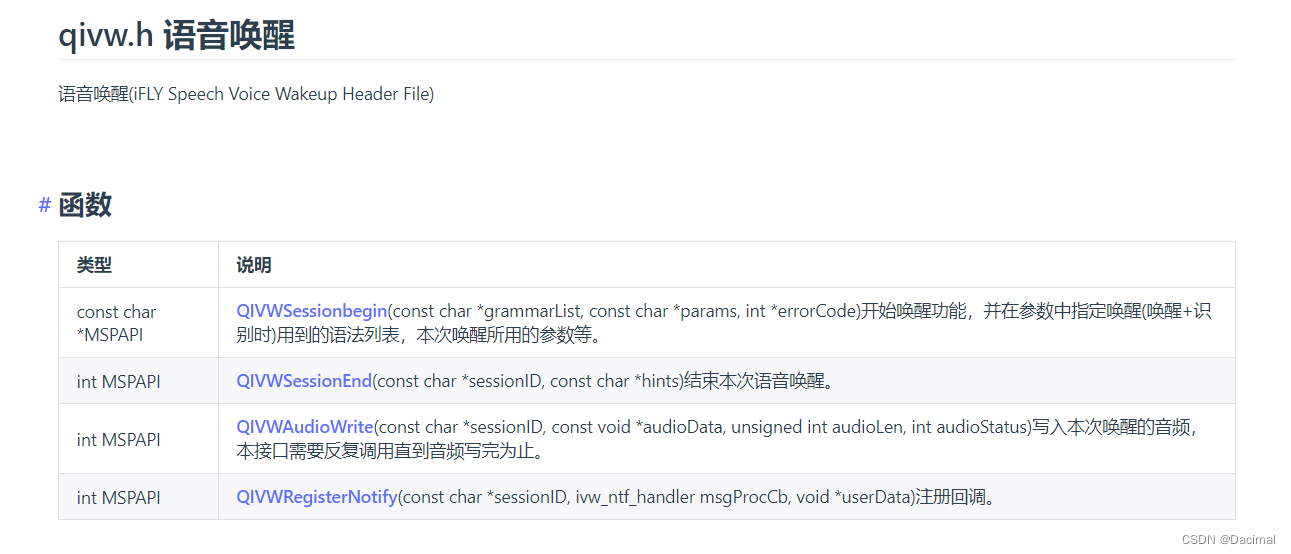

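qivw.h provides the voice wake-up functions used in the code below: QIVWSessionBegin, QIVWRegisterNotify, QIVWAudioWrite, and QIVWSessionEnd

- In SpeechActor.h: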

```cpp
#pragma once

#include "SpeechTask.h"
#include "CoreMinimal.h"
#include "GameFramework/Actor.h"
#include "SpeechActor.generated.h"

UCLASS()
class XUNFEI_API ASpeechActor : public AActor
{
	GENERATED_BODY()

private:
	FString Result;

	DECLARE_DYNAMIC_MULTICAST_DELEGATE(FWakeUpBufferDelegate);

public:
	// Sets default values for this actor's properties
	ASpeechActor();

protected:
	// Called when the game starts or when spawned
	virtual void BeginPlay() override;

public:
	// Called every frame
	virtual void Tick(float DeltaTime) override;

	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "SpeechInit", Keywords = "Speech Recognition Initialization"))
	void SpeechInit();

	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "SpeechOpen", Keywords = "Speech Recognition Open"))
	void SpeechOpen();

	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "SpeechStop", Keywords = "Speech Recognition Stop"))
	void SpeechStop();

	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "SpeechQuit", Keywords = "Speech Recognition Quit"))
	void SpeechQuit();

	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "SpeechResult", Keywords = "Speech Recognition GetResult"))
	FString SpeechResult();

	// Starts a wake-up session and returns its session ID (empty on failure).
	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "WakeUpStart", Keywords = "Speech Recognition GetResult"))
	FString WakeUpStart();

	// Ends the wake-up session identified by SessionID.
	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "WakeUpEnd", Keywords = "Speech Recognition GetResult"))
	bool WakeUpEnd(FString SessionID);

	// Feeds recorded audio bytes into the wake-up session.
	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "WakeUpBuffer", Keywords = "Speech Recognition GetResult"))
	bool WakeUpBuffer(TArray<uint8> MyArray, FString SessionID);

	// Callback delegate, broadcast when the wake-up word is detected
	UPROPERTY(BlueprintAssignable)
	FWakeUpBufferDelegate OnWakeUpBuffer;
};
```

- In SpeechActor.cpp:

```cpp
// Fill out your copyright notice in the Description page of Project Settings.

#include "SpeechActor.h"
#include "XunFei.h"
#include "Dom/JsonObject.h"
#include "Serialization/JsonReader.h"
#include "Serialization/JsonSerializer.h"
#include <qivw.h>

// Sets default values
ASpeechActor::ASpeechActor()
	: Result{}
{
	// Set this actor to call Tick() every frame. You can turn this off to improve performance if you don't need it.
	PrimaryActorTick.bCanEverTick = false;
}

// Called when the game starts or when spawned
void ASpeechActor::BeginPlay()
{
	Super::BeginPlay();
}

// Called every frame
void ASpeechActor::Tick(float DeltaTime)
{
	Super::Tick(DeltaTime);
}

void ASpeechActor::SpeechInit()
{
	FAutoDeleteAsyncTask<FSpeechTask>* SpeechTask = new FAutoDeleteAsyncTask<FSpeechTask>();
	if (SpeechTask)
	{
		SpeechTask->StartBackgroundTask();
	}
	else
	{
		UE_LOG(XunFeiLog, Error, TEXT("XunFei task object could not be created !"));
		return;
	}
	UE_LOG(XunFeiLog, Log, TEXT("XunFei Task Stopped !"));
}

void ASpeechActor::SpeechOpen()
{
	xunfeispeech->SetStart();
}

void ASpeechActor::SpeechStop()
{
	xunfeispeech->SetStop();
}

void ASpeechActor::SpeechQuit()
{
	xunfeispeech->SetQuit();
	Sleep(300);
}

FString ASpeechActor::SpeechResult()
{
	Result = FString(UTF8_TO_TCHAR(xunfeispeech->get_result()));

	// Strip the empty trailing segment the SDK appends to the result.
	FString LajiString("{\"sn\":2,\"ls\":true,\"bg\":0,\"ed\":0,\"ws\":[{\"bg\":0,\"cw\":[{\"sc\":0.00,\"w\":\"\"}]}]}");
	int32 LajiIndex = Result.Find(*LajiString);
	if (LajiIndex != -1)
	{
		Result.RemoveFromEnd(LajiString);
	}

	// Concatenate the first candidate word ("cw"[0]."w") of every "ws" entry.
	TSharedPtr<FJsonObject> JsonObject;
	TSharedRef< TJsonReader<TCHAR> > Reader = TJsonReaderFactory<TCHAR>::Create(Result);
	if (FJsonSerializer::Deserialize(Reader, JsonObject))
	{
		Result.Reset();
		TArray< TSharedPtr<FJsonValue> > TempArray = JsonObject->GetArrayField(TEXT("ws"));
		for (auto rs : TempArray)
		{
			Result.Append((rs->AsObject()->GetArrayField(TEXT("cw")))[0]->AsObject()->GetStringField(TEXT("w")));
		}
	}
	UE_LOG(XunFeiLog, Log, TEXT("%s"), *Result);
	return Result;
}

// Static callback registered with the SDK; the wake-up event is fired from here.
// userData is the ASpeechActor pointer passed to QIVWRegisterNotify.
int cb_ivw_msg_proc(const char* sessionID1, int msg, int param1, int param2, const void* info, void* userData)
{
	if (MSP_IVW_MSG_ERROR == msg) // wake-up error message
	{
		UE_LOG(LogTemp, Warning, TEXT("Wake-up error message received"));
		return 0;
	}
	else if (MSP_IVW_MSG_WAKEUP == msg) // wake-up success message
	{
		UE_LOG(LogTemp, Warning, TEXT("imhere"));
		if (userData)
		{
			ASpeechActor* MYThis = reinterpret_cast<ASpeechActor*>(userData);
			if (MYThis)
			{
				UE_LOG(LogTemp, Warning, TEXT("diaoyongle"));
				MYThis->OnWakeUpBuffer.Broadcast();
				return 1;
			}
		}
	}
	return 0;
}

FString ASpeechActor::WakeUpStart()
{
	// The resource path must be absolute, with the backslash escaped (see the 10102 note above).
	const char* params = "ivw_threshold=0:5, ivw_res_path =fo|c:\\wakeupresource.jet";
	int ret = MSP_SUCCESS;

	const char* sessionID = QIVWSessionBegin(NULL, params, &ret);
	if (MSP_SUCCESS != ret)
	{
		UE_LOG(LogTemp, Warning, TEXT("QIVWSessionBegin failed, error code is: %d"), ret);
		return FString();
	}
	UE_LOG(LogTemp, Warning, TEXT("QIVWSessionBegin is working"));

	// Pass this actor as userData so the static callback can reach it.
	int err_code = QIVWRegisterNotify(sessionID, cb_ivw_msg_proc, this);
	if (err_code != MSP_SUCCESS)
	{
		UE_LOG(LogTemp, Warning, TEXT("QIVWRegisterNotify failed, error code is: %d"), err_code);
	}
	else
	{
		UE_LOG(LogTemp, Warning, TEXT("QIVWRegisterNotify success"));
	}
	return FString(sessionID);
}

bool ASpeechActor::WakeUpEnd(FString SessionID)
{
	int ret = QIVWSessionEnd(TCHAR_TO_ANSI(*SessionID), "normal end");
	if (MSP_SUCCESS != ret)
	{
		UE_LOG(LogTemp, Warning, TEXT("QIVWSessionEnd failed, error code is: %d"), ret);
		return false;
	}
	UE_LOG(LogTemp, Warning, TEXT("QIVWSessioniSEnd"));
	return true;
}

bool ASpeechActor::WakeUpBuffer(TArray<uint8> BitArray, FString SessionID)
{
	if (BitArray.Num() == 0)
	{
		return false;
	}

	int audio_len = BitArray.Num();
	// Audio status: 2 is MSP_AUDIO_SAMPLE_CONTINUE (MSP_AUDIO_SAMPLE_LAST is 4).
	int audio_status = 2;
	int ret = QIVWAudioWrite(TCHAR_TO_ANSI(*SessionID), BitArray.GetData(), audio_len, audio_status);
	if (MSP_SUCCESS != ret)
	{
		UE_LOG(LogTemp, Warning, TEXT("QIVWAudioWrite failed, error code is: %d"), ret);
		return false;
	}
	return true;
}
```
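WakeUpBuffer passes 2 as the audio status on every write, which works for continuous listening. If you ever need to mark where a stream starts and ends, a small helper along these lines could choose the status per chunk. This is only a sketch: the numeric values mirror the MSP_AUDIO_SAMPLE_* constants as I understand them from the SDK's msp_types.h, and the enum and the function StatusForChunk are hypothetical names of my own.

```cpp
#include <cstddef>

// Assumed to match MSP_AUDIO_SAMPLE_FIRST / CONTINUE / LAST in msp_types.h;
// in real code prefer the SDK's own constants over these copies.
enum EAudioStatus : int
{
    AudioFirst = 1,
    AudioContinue = 2,
    AudioLast = 4
};

// Hypothetical helper: picks the status for the chunk at ChunkIndex (0-based).
// The first chunk is always marked FIRST; bIsFinal marks the closing chunk.
int StatusForChunk(std::size_t ChunkIndex, bool bIsFinal)
{
    if (ChunkIndex == 0)
    {
        return AudioFirst;
    }
    return bIsFinal ? AudioLast : AudioContinue;
}
```

The returned value would replace the hard-coded 2 in the QIVWAudioWrite call.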
That completes the preparation

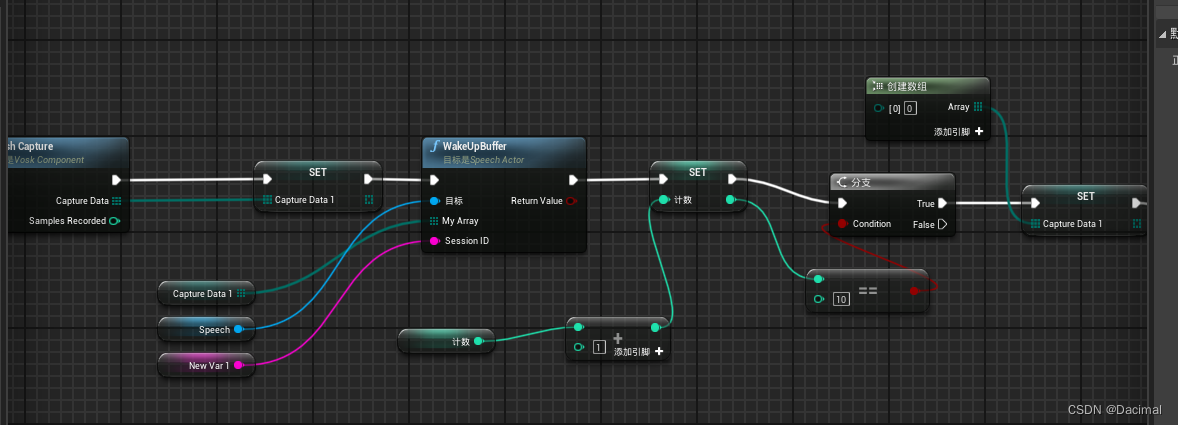

Open our Blueprint

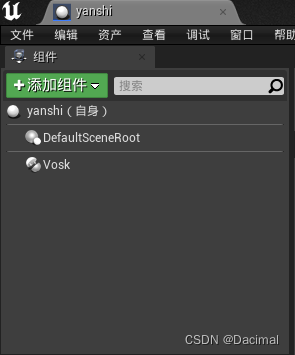

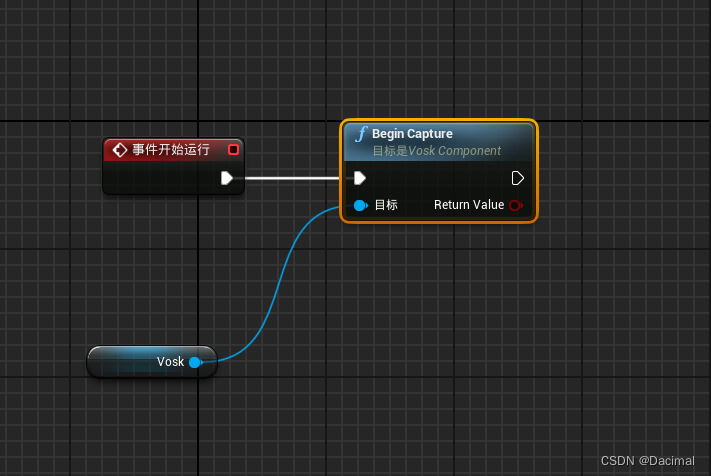

With the Offline Voice Recognition plugin enabled, add the vosk plugin

The use case in this article requires it to listen continuously from the moment it initializes

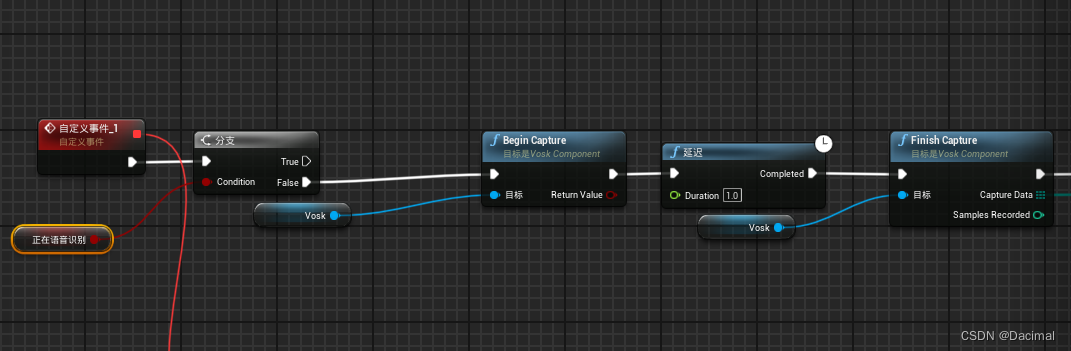

So recording is started as soon as play begins (voice wake-up needs recorded audio in order to listen)

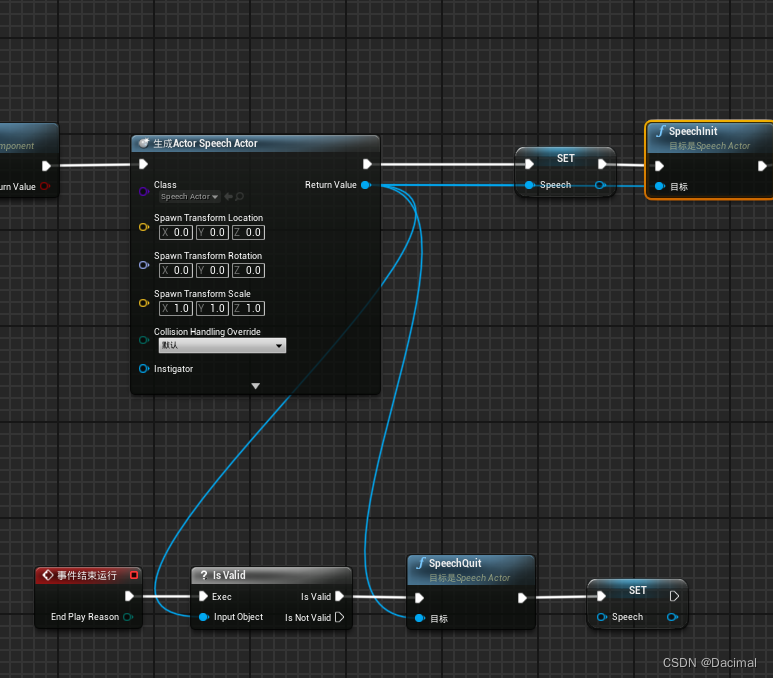

- 接下里我们需要注册讯飞,并且在运行结束释放讯飞注册

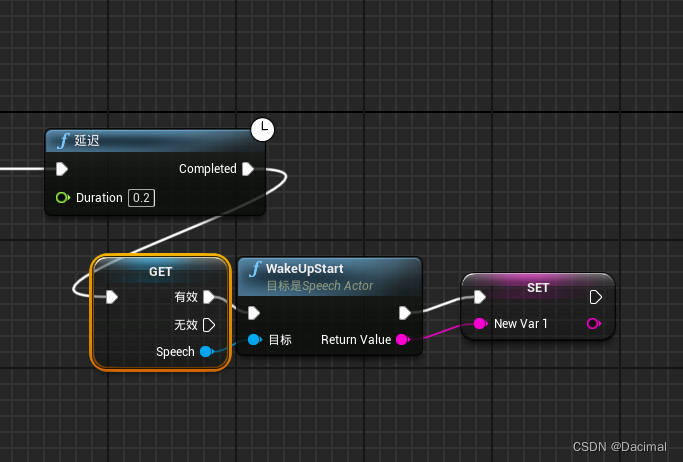

4.讯飞注册后等待两秒注册我们的wakeup语音唤醒

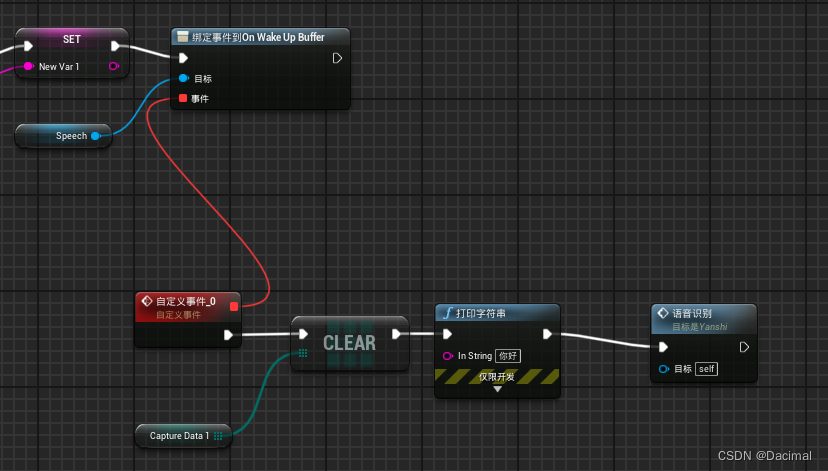

- 绑定一个唤醒事件

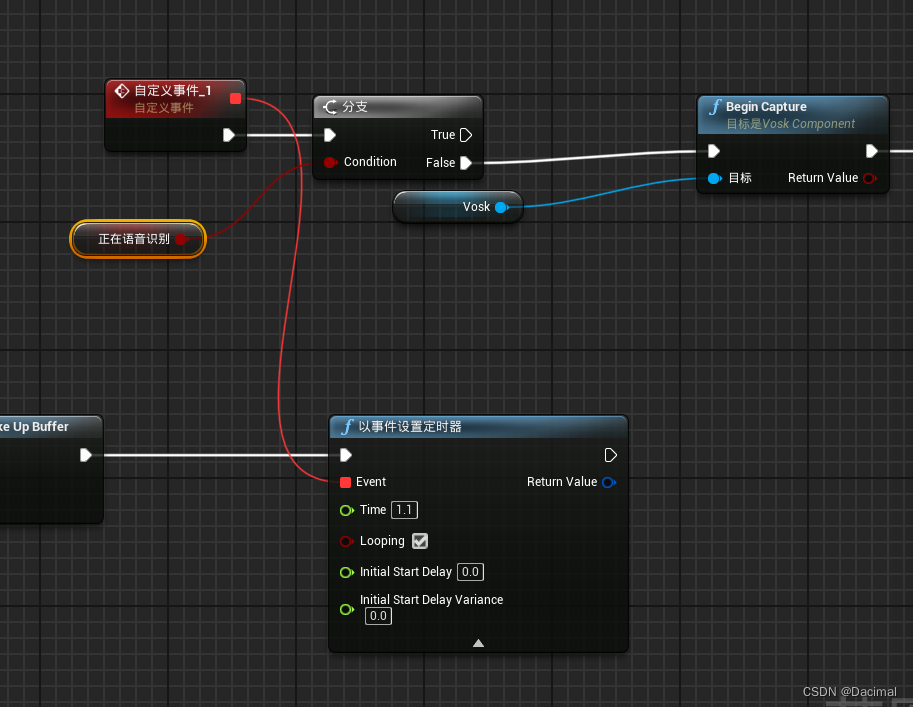

3. 在设置一个1.1秒的定时器

4. 定时器内部(正在语音识别默认值为false)

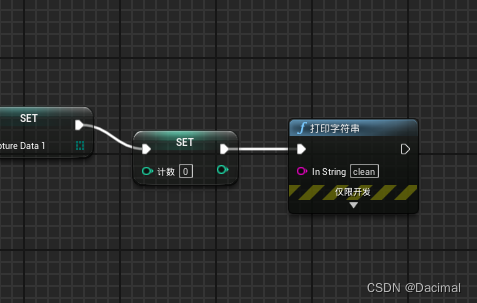

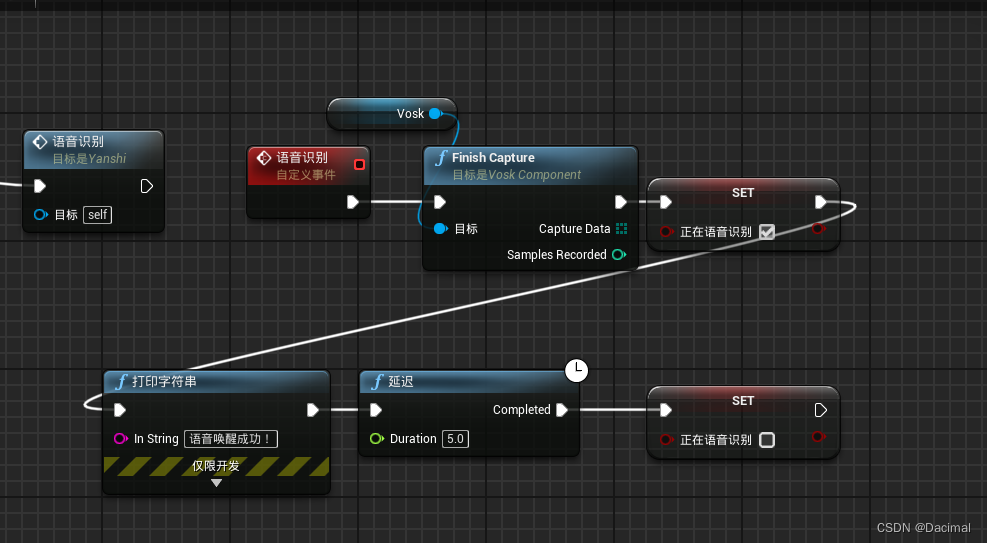

5. 语音唤醒的自定义事件(唤醒五秒钟后恢复继续监听)

The Blueprint file is attached
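For readers who prefer code to screenshots, the timer logic above can be sketched in plain C++ roughly as follows. This is not the Blueprint itself: the class FWakeUpLoop and its members are hypothetical, FeedAudio stands in for "fetch the recorded bytes and call WakeUpBuffer", and I am assuming the timer simply skips feeding while the recognizing flag is set.

```cpp
#include <functional>
#include <utility>

// Hypothetical stand-in for the Blueprint graph; illustration only.
class FWakeUpLoop
{
public:
    explicit FWakeUpLoop(std::function<void()> InFeedAudio)
        : FeedAudio(std::move(InFeedAudio))
    {
    }

    // Advances time; drives both the 1.1 s timer and the 5 s pause.
    void Tick(double DeltaSeconds)
    {
        if (bIsRecognizing)
        {
            PauseRemaining -= DeltaSeconds;
            if (PauseRemaining <= 0.0)
            {
                bIsRecognizing = false; // resume listening
                TimerElapsed = 0.0;
            }
            return;
        }

        TimerElapsed += DeltaSeconds;
        while (TimerElapsed >= TimerInterval)
        {
            TimerElapsed -= TimerInterval;
            FeedAudio(); // one timer firing
        }
    }

    // Would be bound to the wake-up event (OnWakeUpBuffer on the actor).
    void OnWakeUp()
    {
        bIsRecognizing = true;
        PauseRemaining = PauseAfterWakeUp;
    }

    bool IsRecognizing() const { return bIsRecognizing; }

private:
    static constexpr double TimerInterval = 1.1;
    static constexpr double PauseAfterWakeUp = 5.0;

    std::function<void()> FeedAudio;
    bool bIsRecognizing = false; // defaults to false, as in the Blueprint
    double TimerElapsed = 0.0;
    double PauseRemaining = 0.0;
};
```

Keeping the flag and the two durations in one place makes it easy to see that no audio is fed during the five seconds after a wake-up, which is the behavior the custom event is meant to give.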

Author: Dacimal

For custom development, contact: 19815779273 (no idle chat)