Deploying ncnn on a Raspberry Pi to run the yolov5_lite algorithm

1. Download the yolov5_lite source code:

Source on GitHub: yolov5_lite https://github.com/ppogg/YOLOv5-Lite

This experiment uses this system image: https://blog.csdn.net/qq_45756736/article/details/137525959?spm=1001.2014.3001.5501

After installing the image, increase the swap size first. Otherwise the later build steps will stall badly. This post is a good guide: https://blog.csdn.net/qq_45756736/article/details/137543073?spm=1001.2014.3001.5501
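To confirm that the swap change actually took effect, one option is to read the `SwapTotal` line that Linux exposes in `/proc/meminfo`. The sketch below is only an illustration: the helper name `swap_total_mb` and the sample values are assumptions, not something from the linked post.

```python
import re

def swap_total_mb(meminfo_text):
    """Return SwapTotal from /proc/meminfo-style text, converted from kB to MB."""
    match = re.search(r"^SwapTotal:\s+(\d+)\s+kB", meminfo_text, re.MULTILINE)
    if match is None:
        raise ValueError("no SwapTotal line found")
    return int(match.group(1)) / 1024

# Sample text in /proc/meminfo format (values are illustrative).
# On the Raspberry Pi, pass open("/proc/meminfo").read() instead.
sample = (
    "MemTotal:        3884360 kB\n"
    "SwapCached:            0 kB\n"
    "SwapTotal:        102396 kB\n"
    "SwapFree:         102396 kB\n"
)
print(f"swap: {swap_total_mb(sample):.0f} MB")
```

If the reported value is still close to the small default after editing the swap configuration, the change has probably not been applied yet.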

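Before starting, switch the package sources to a domestic (China) mirror. See this post for how: https://blog.csdn.net/qq_45756736/article/details/138291635?spm=1001.2014.3001.5501

Installing the runtime environment, training the model, and so on are not covered here.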

2. Convert the .pt weight file to an ONNX file:

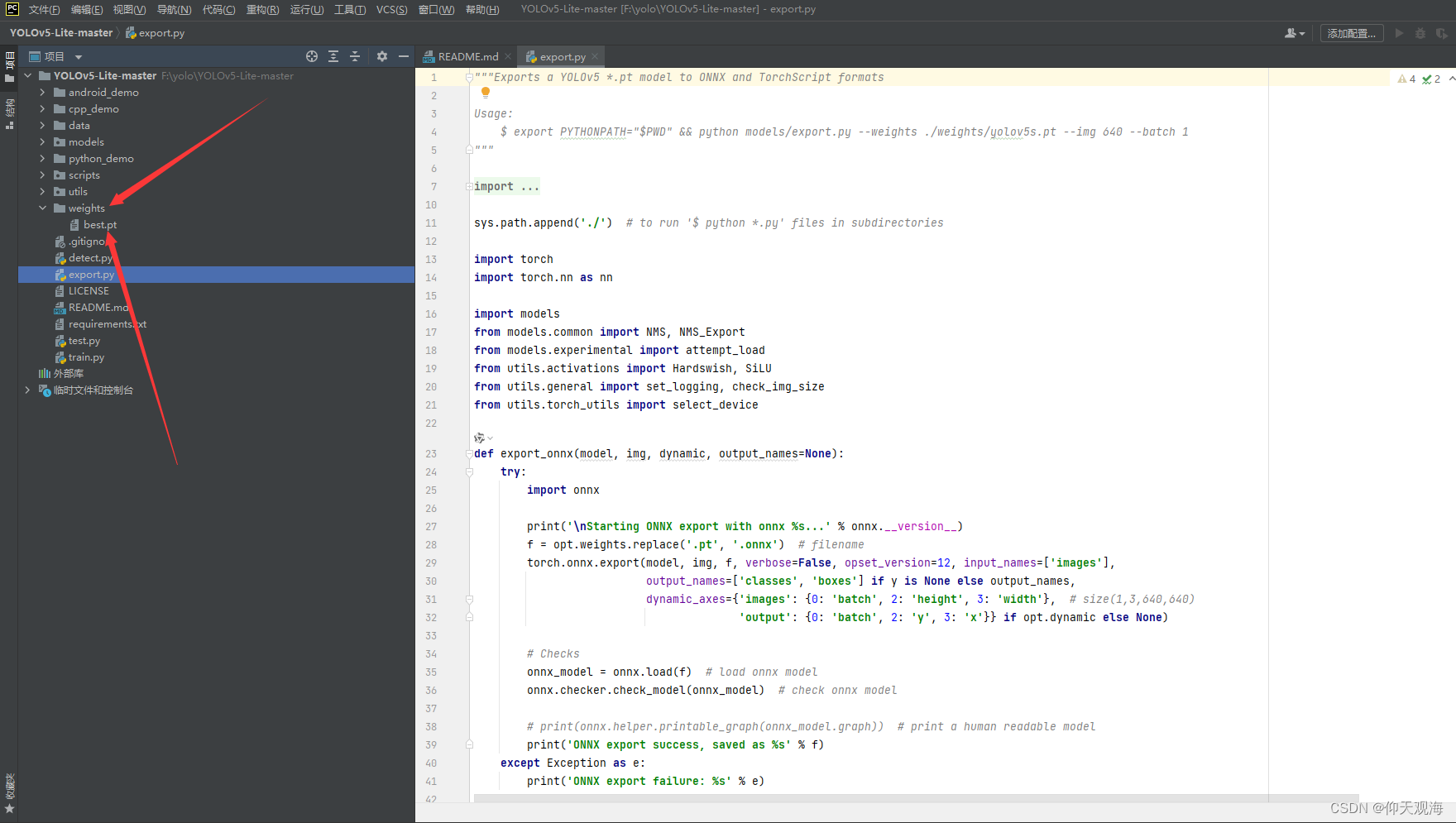

(1) Create a weights folder and put your own weight file in it.

(2) Run export.py, replacing the weight file in it with your own.

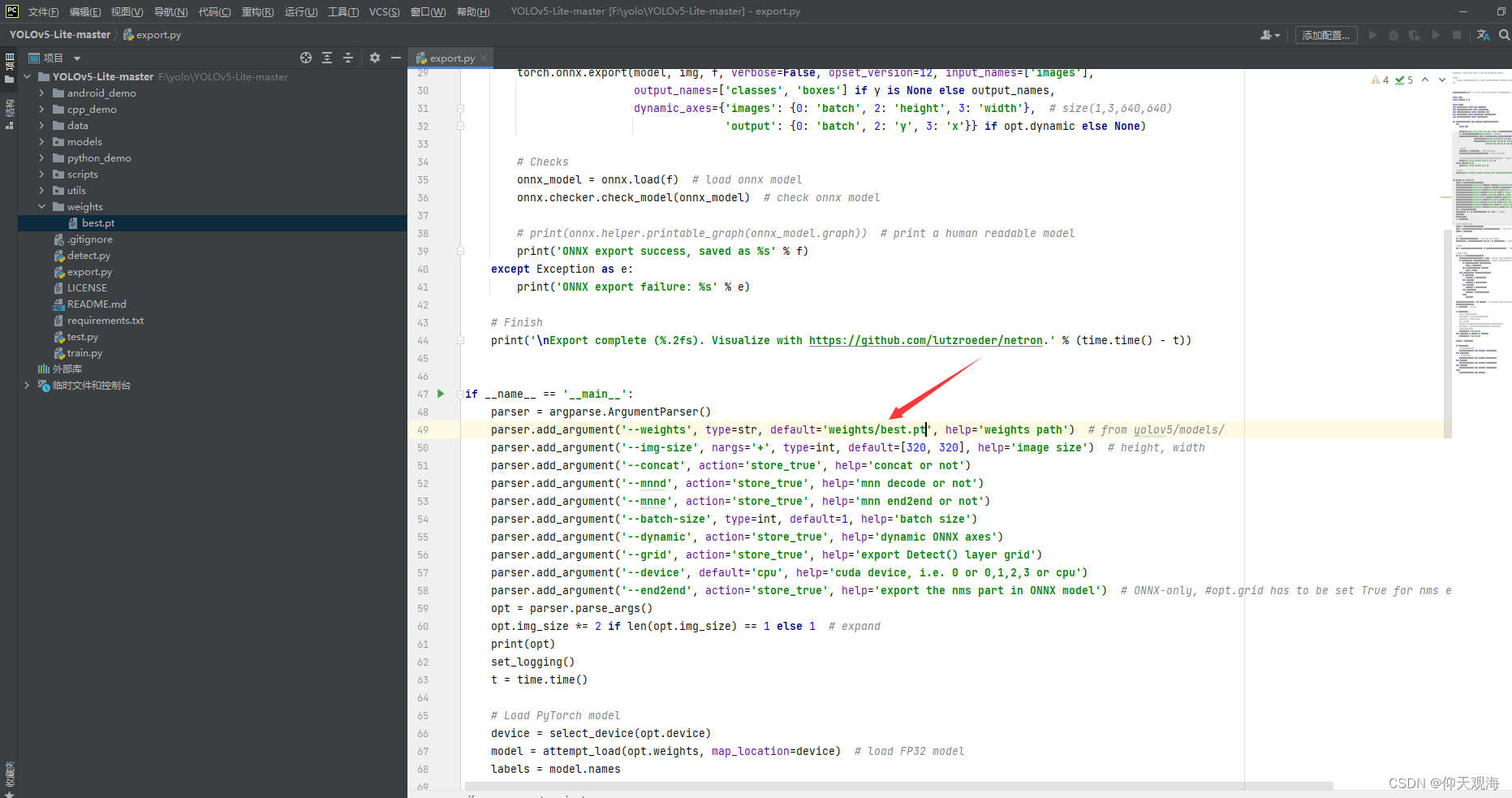

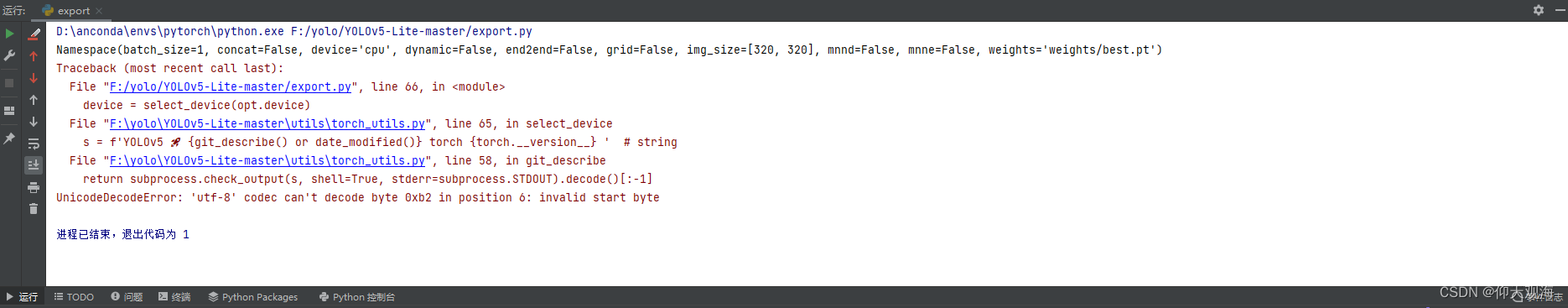

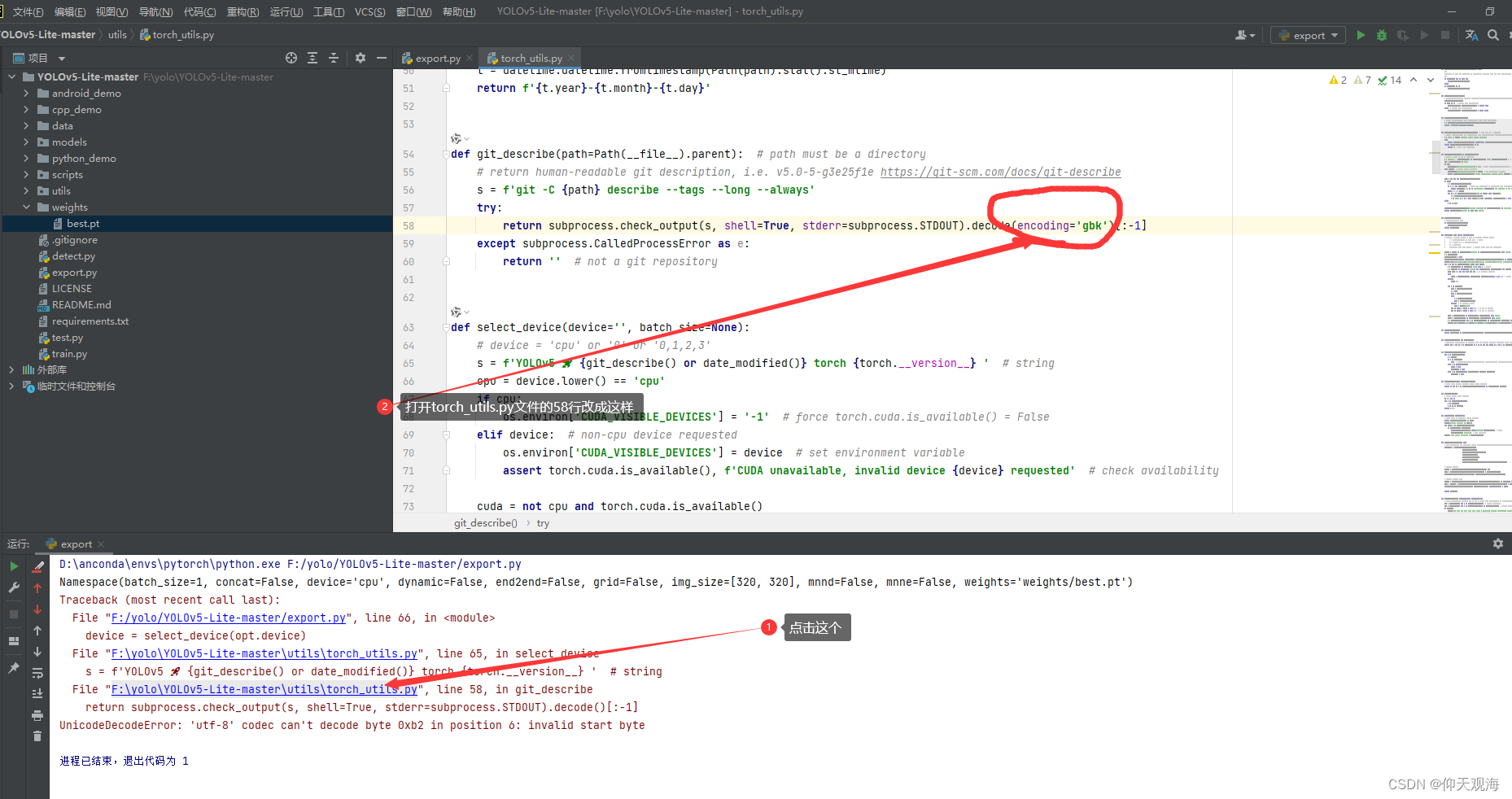

If you run into this bug, refer to the following.

Bug:

Solution:

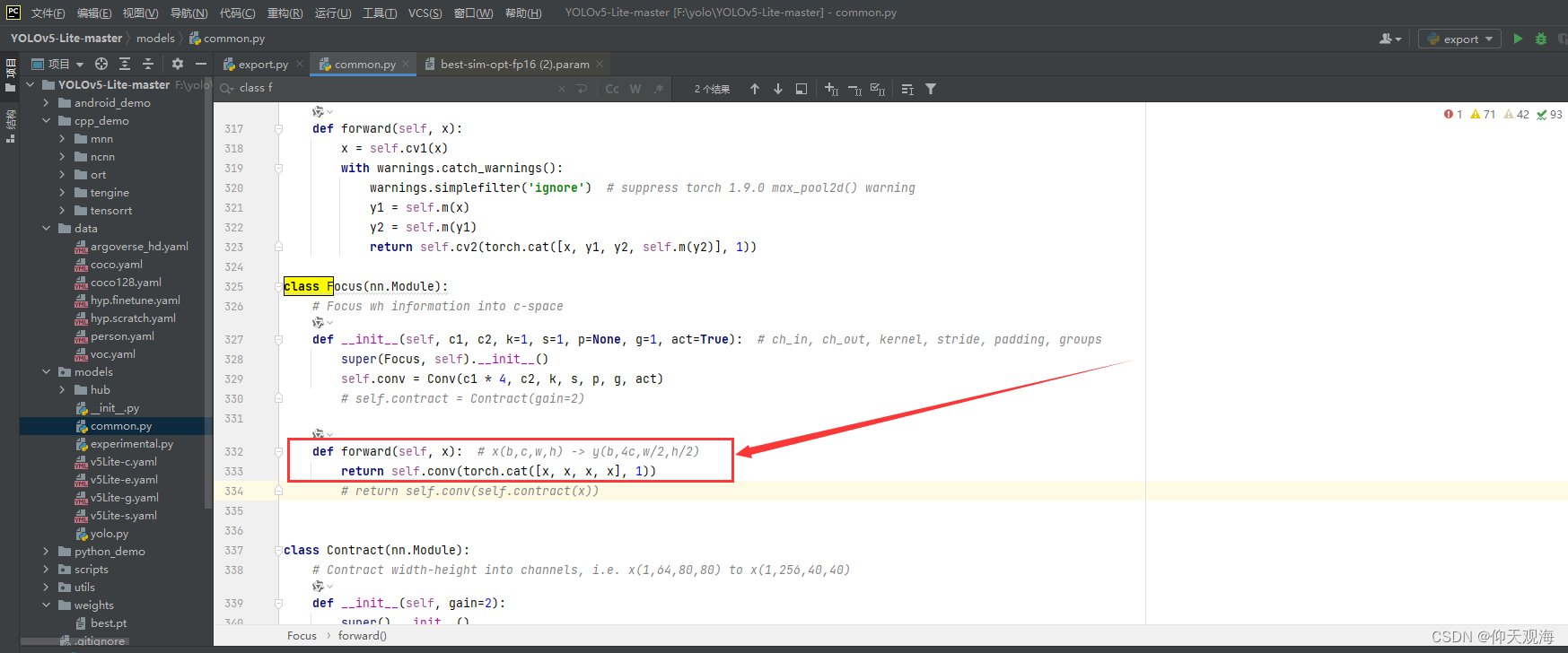

Pay close attention to the next step. Open common.py, go to line 333, and change it as shown below. This is what avoids the `Unsupported slice step!` error.

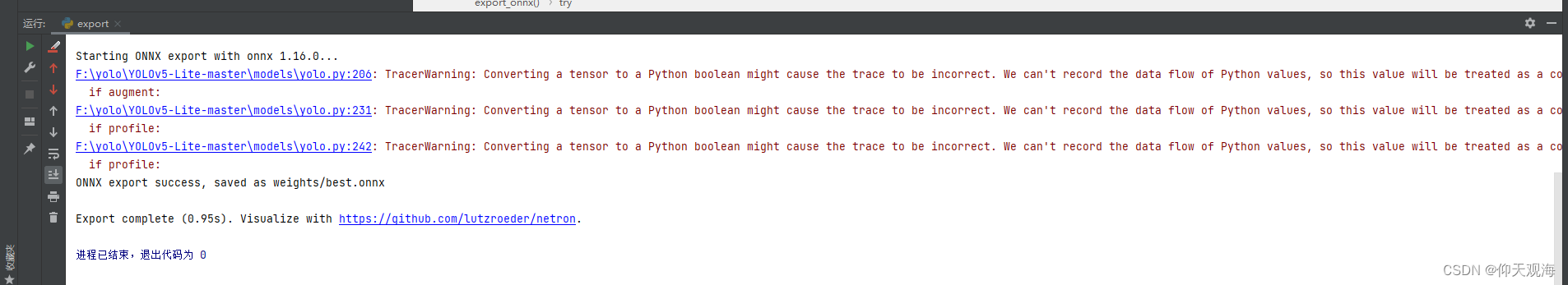

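A successful run looks like this.

The output contains warnings; they can be ignored.

There are two ways to generate the ncnn files.

Generating the ncnn files [Method 1]: we start with the simpler method.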

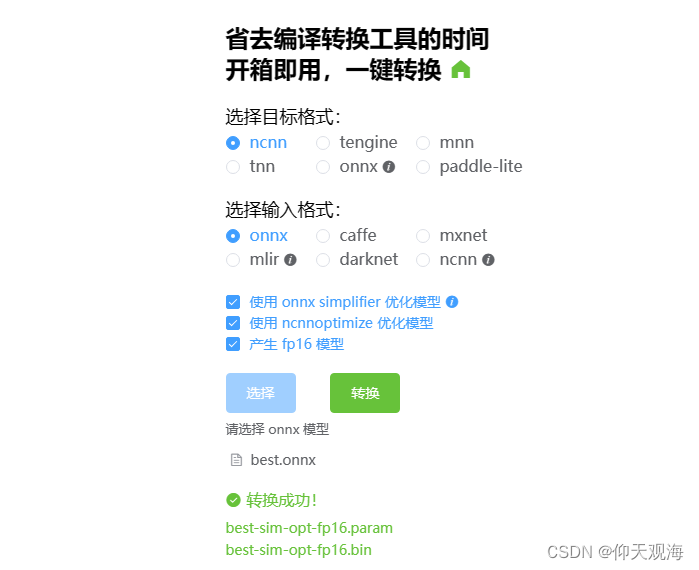

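Open https://convertmodel.com/. Reaching this site may require a VPN. The page looks like this.

Then load your converted ONNX file. At this point, decide whether to convert to fp16. The Raspberry Pi 4 has limited compute, so some precision has to be traded for speed. A successful conversion looks like this.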
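To get a feel for the precision that fp16 gives up, the sketch below round-trips a few values through IEEE 754 half precision using only the Python standard library. The sample values are arbitrary stand-ins for model weights, and `to_fp16` is a helper named here for illustration. It shows the number format only, not how ncnn stores or computes with fp16.

```python
import struct

def to_fp16(value):
    """Round-trip a Python float through IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

# Arbitrary example values standing in for weights.
weights = [0.1, 0.333333, 1234.5678]
for w in weights:
    h = to_fp16(w)
    print(f"{w!r} -> {h!r} (abs error {abs(w - h):.6g})")

# Storage cost per value: 2 bytes as fp16 versus 4 bytes as fp32.
print(struct.calcsize("<e"), struct.calcsize("<f"))
```

Small values survive with a small absolute error, while larger magnitudes are rounded more coarsely. That is the precision cost paid for halving the storage per weight.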

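If the conversion fails, an `Unsupported slice step!` error is reported at the bottom of the page. The changes below follow nihui's approach, although I could not get it to work myself 😭

- nihui's method for fixing the `Unsupported slice step!` error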

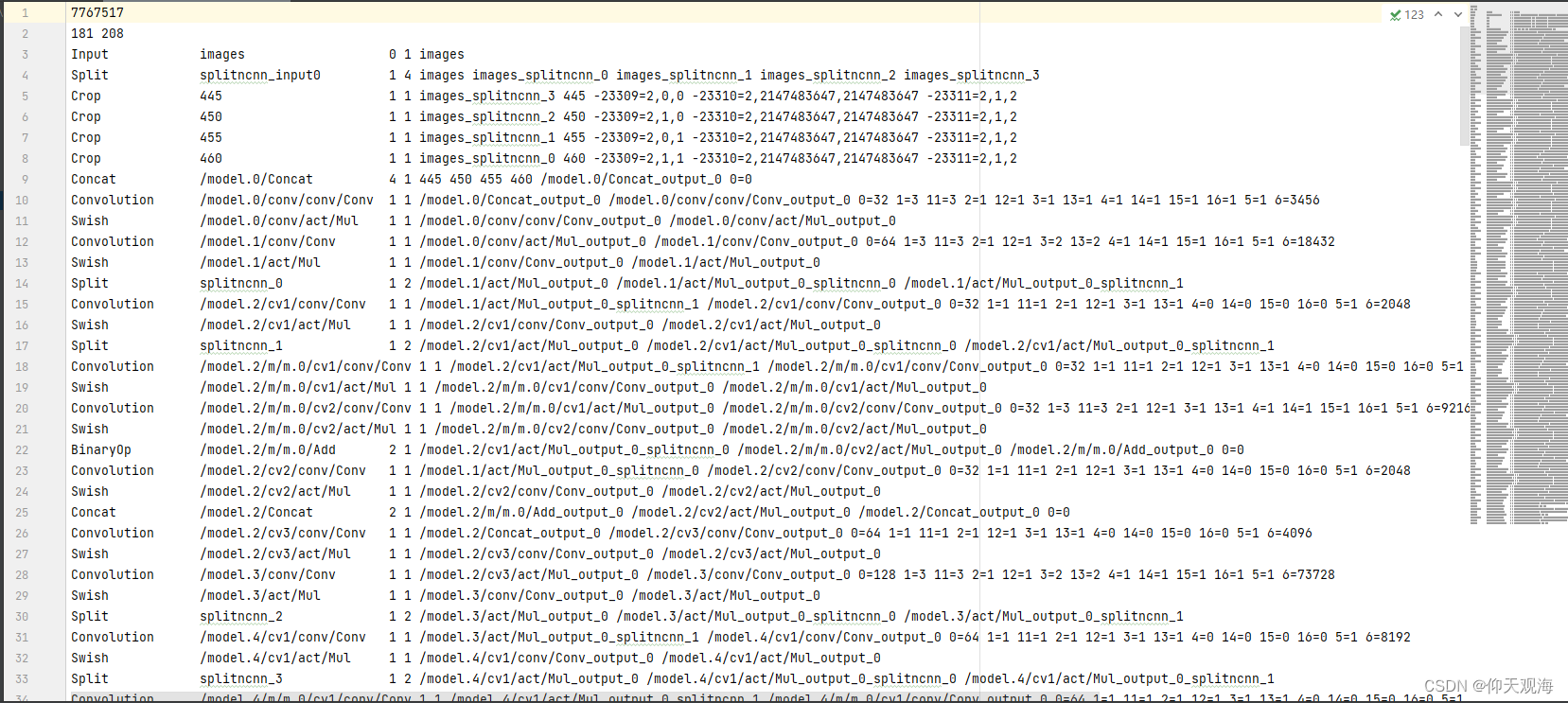

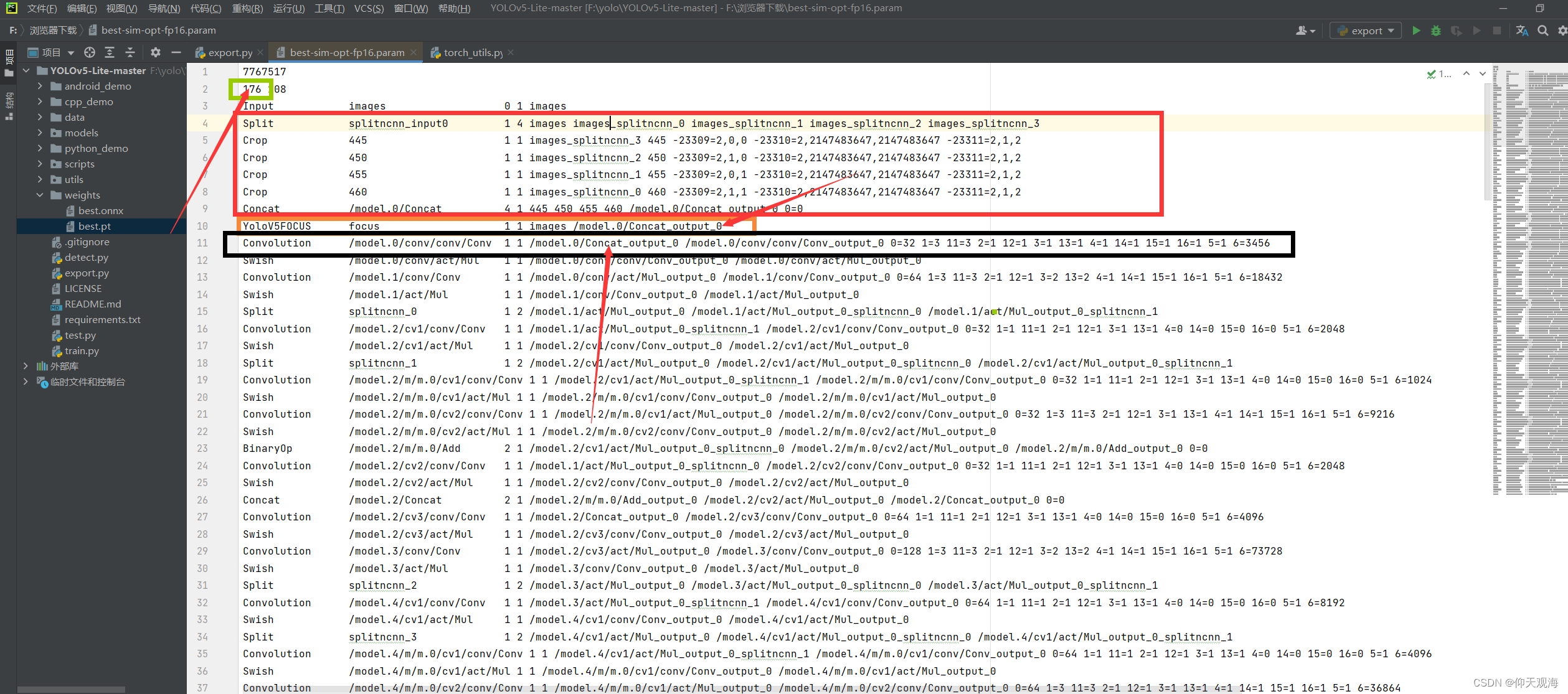

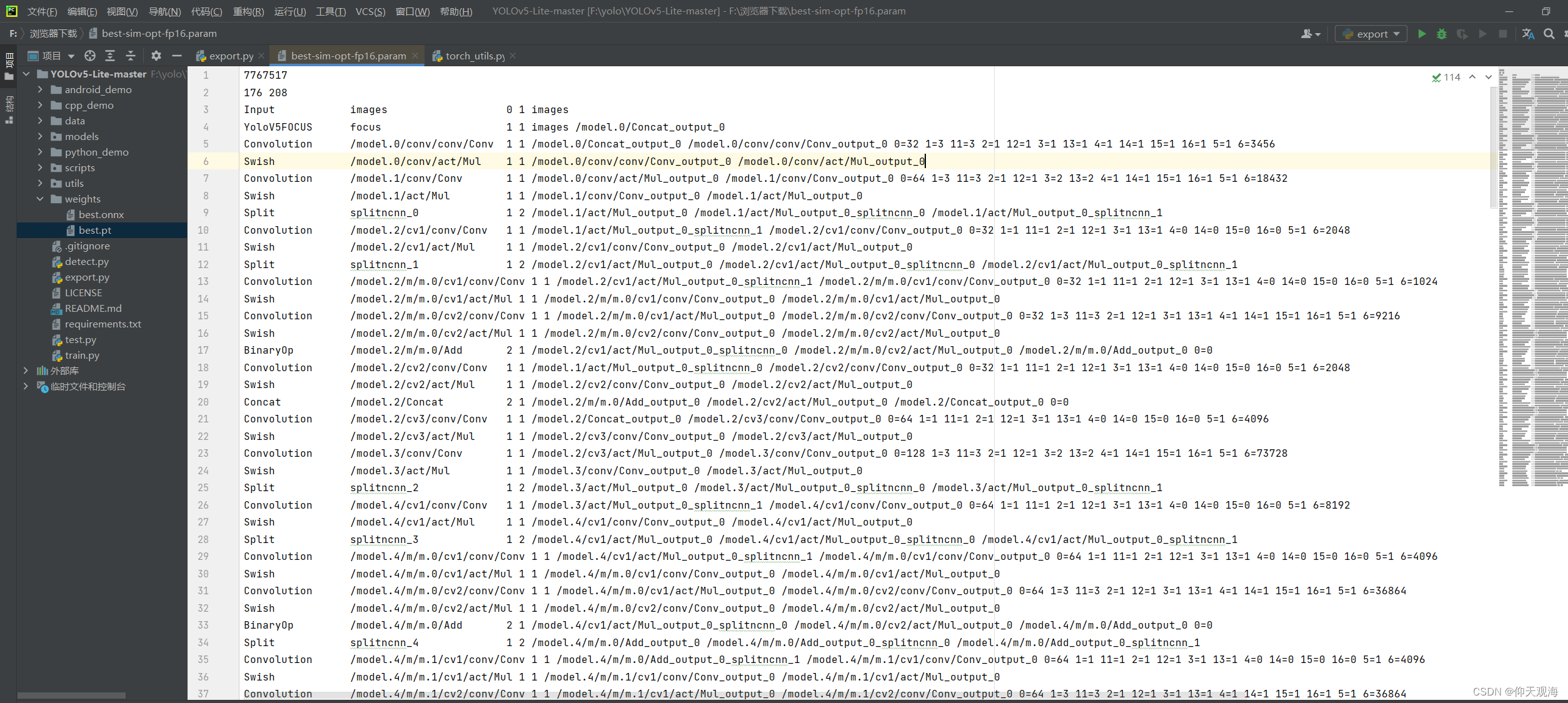

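Download both generated files, the param and the bin. Open the param file in PyCharm.

Delete the content in the red box and add one Yolov5… line. The `images /model.0/Concat_output_0` at the end of that line is determined by the Convolution line below it. The count is changed to 176 because the original 181 lines lose 6 and gain 1, so 181 - 6 + 1 = 176. For the full explanation, see nihui's post https://zhuanlan.zhihu.com/p/275989233. For a shorter summary, see this post: https://blog.csdn.net/roujian0985/article/details/122145235

The modified param file looks like this.

Then save it.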
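The count arithmetic above (181 - 6 + 1 = 176) is easy to get wrong by hand, so here is a minimal sketch that does the same kind of edit on a tiny made-up param file. It assumes the usual ncnn text layout: a magic number line, a `layer_count blob_count` line, then one layer per line as `type name input_count output_count inputs... outputs... params`. The layer type `CustomFocus` and all names in the demo are hypothetical placeholders. Recomputing the blob count is an addition of this sketch; the original steps only change the layer count.

```python
def replace_layers(param_text, drop_names, new_layer_line):
    """Drop the named layers, put one replacement line in their place, recount the header."""
    lines = [ln for ln in param_text.splitlines() if ln.strip()]
    magic, layers = lines[0], lines[2:]  # lines[1] is the old "layer_count blob_count" header
    kept = []
    inserted = False
    for line in layers:
        layer_name = line.split()[1]
        if layer_name in drop_names:
            if not inserted:
                kept.append(new_layer_line)  # goes where the first dropped layer was
                inserted = True
            continue
        kept.append(line)
    # Assumption: every blob is the output of exactly one layer,
    # so summing the output counts gives the blob count.
    blob_count = sum(int(line.split()[3]) for line in kept)
    return "\n".join([magic, f"{len(kept)} {blob_count}"] + kept) + "\n"

# Made-up six-layer file; real files are much longer.
demo = """7767517
6 7
Input        images   0 1 images
Split        split0   1 2 images a b
Crop         crop0    1 1 a c
Crop         crop1    1 1 b d
Concat       concat0  2 1 c d e
Convolution  conv0    1 1 e out
"""
patched = replace_layers(
    demo,
    drop_names={"split0", "crop0", "crop1", "concat0"},
    new_layer_line="CustomFocus  focus    1 1 images e",  # hypothetical layer type
)
print(patched)
```

In the demo, 6 layers lose 4 and gain 1, so the header's layer count becomes 3. This is the same bookkeeping as going from 181 to 176.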

Generating the ncnn files [Method 2]: I don't really recommend this second method because it is more tedious.

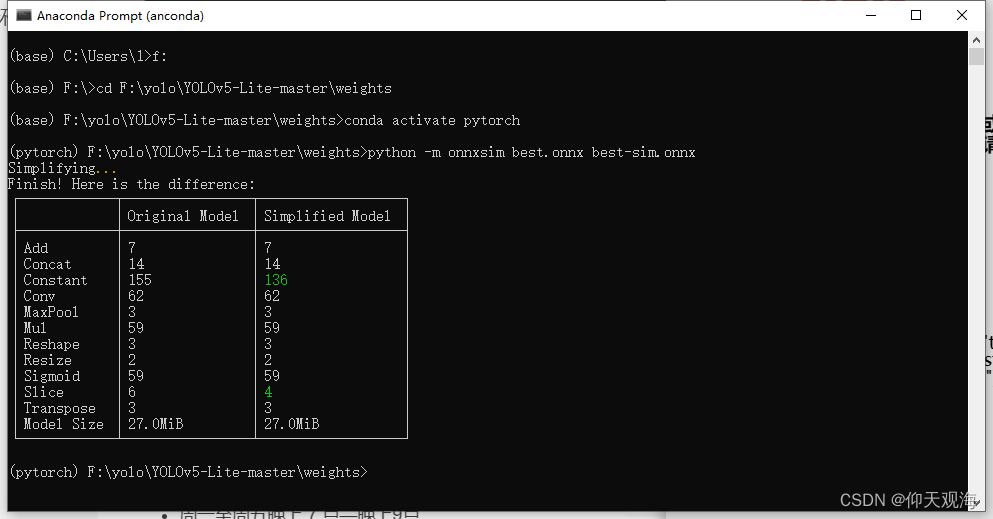

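Install onnx-simplifier and use it to simplify the ONNX file. Other libraries may need to be installed along the way. Make sure they all go into the same environment that runs YOLO.

    pip3 install onnx-simplifier
    python -m onnxsim best.onnx best-sim.onnx

Run these from an Anaconda Prompt after changing to the folder that holds the weights.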

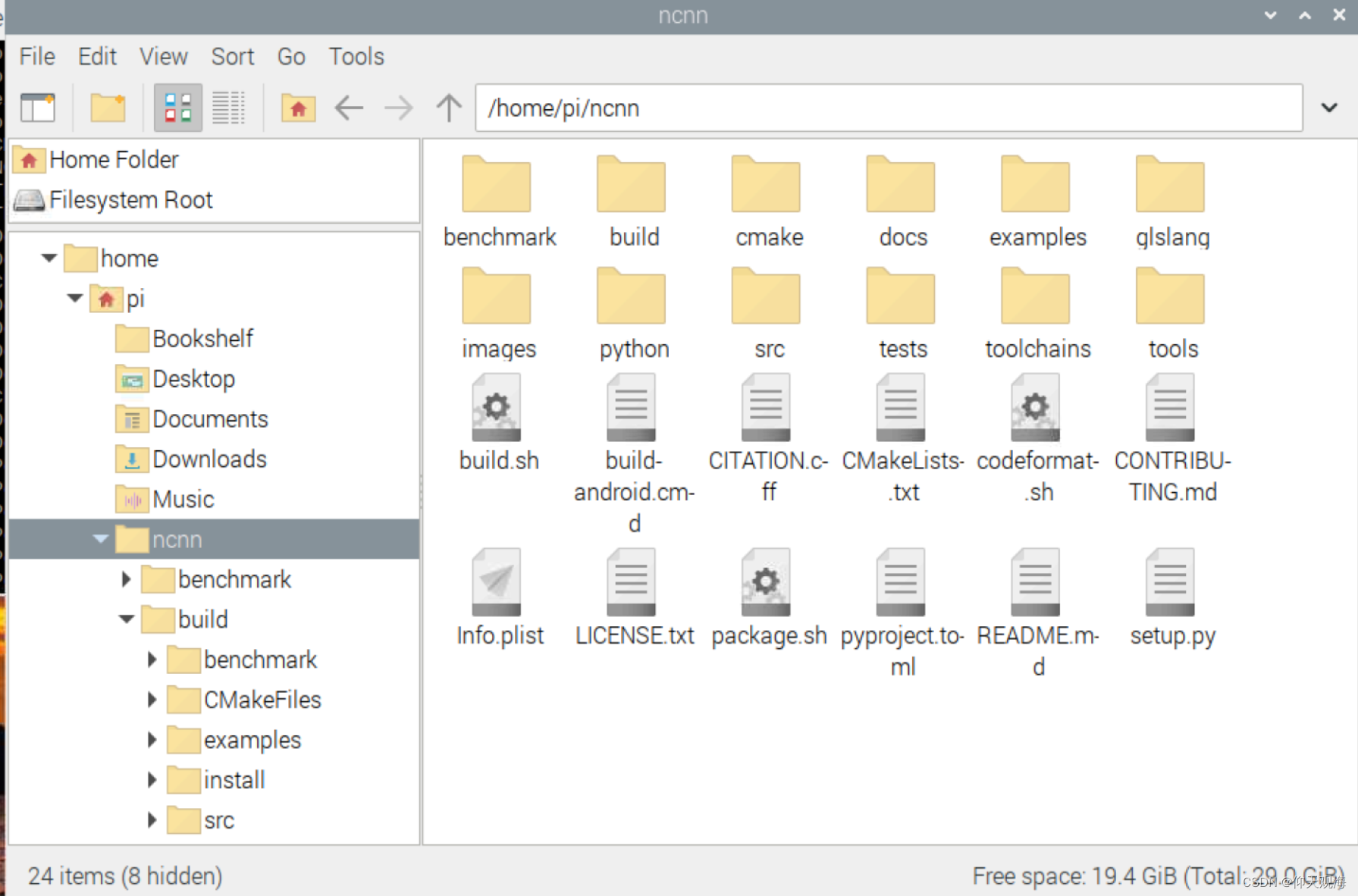

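Installing the ncnn framework on the Raspberry Pi

Download the ncnn framework on the Raspberry Pi. Important: be sure to use the 20210525 version of ncnn. A plain `git clone` fetches the latest code, so check that the copy you build is that release.

The steps are as follows.

Enter the following commands in the Raspberry Pi terminal to download ncnn.

Install the dependencies

    sudo apt-get install -y gfortran
    sudo apt-get install -y libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libhdf5-serial-dev protobuf-compiler
    sudo apt-get install --no-install-recommends libboost-all-dev
    sudo apt-get install -y libgflags-dev libgoogle-glog-dev liblmdb-dev libatlas-base-dev

Build ncnn

    git clone https://gitee.com/Tencent/ncnn.git
    cd ncnn
    mkdir build
    cd build
    cmake ..
    make -j4
    make install

Installing and compiling are both slow, so be patient. Afterwards the ncnn folder looks like this.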

Running yolov5_lite:

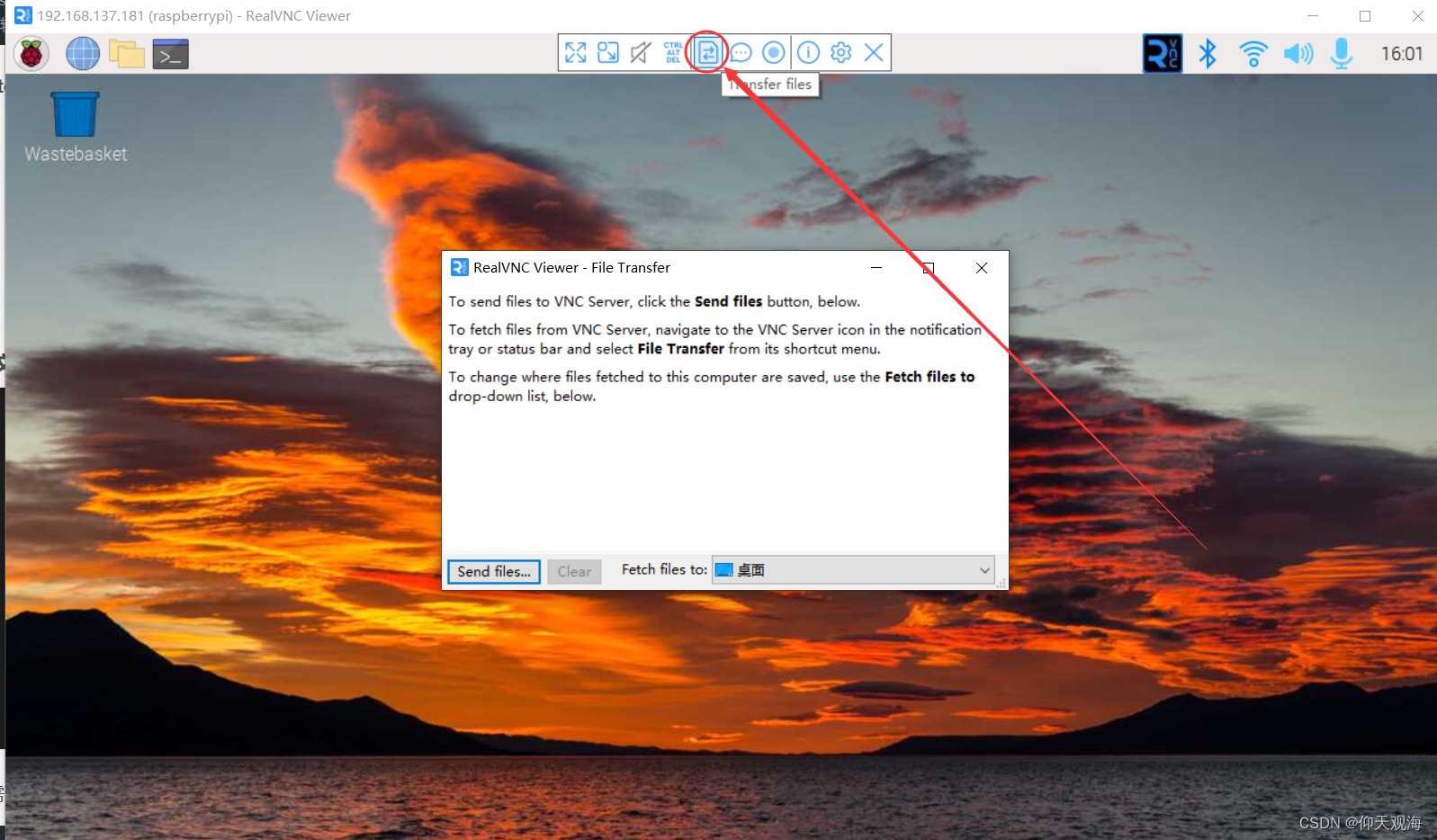

Take the param and bin files converted with [Method 1], copy them to the Raspberry Pi, and put them in the ncnn/build folder.

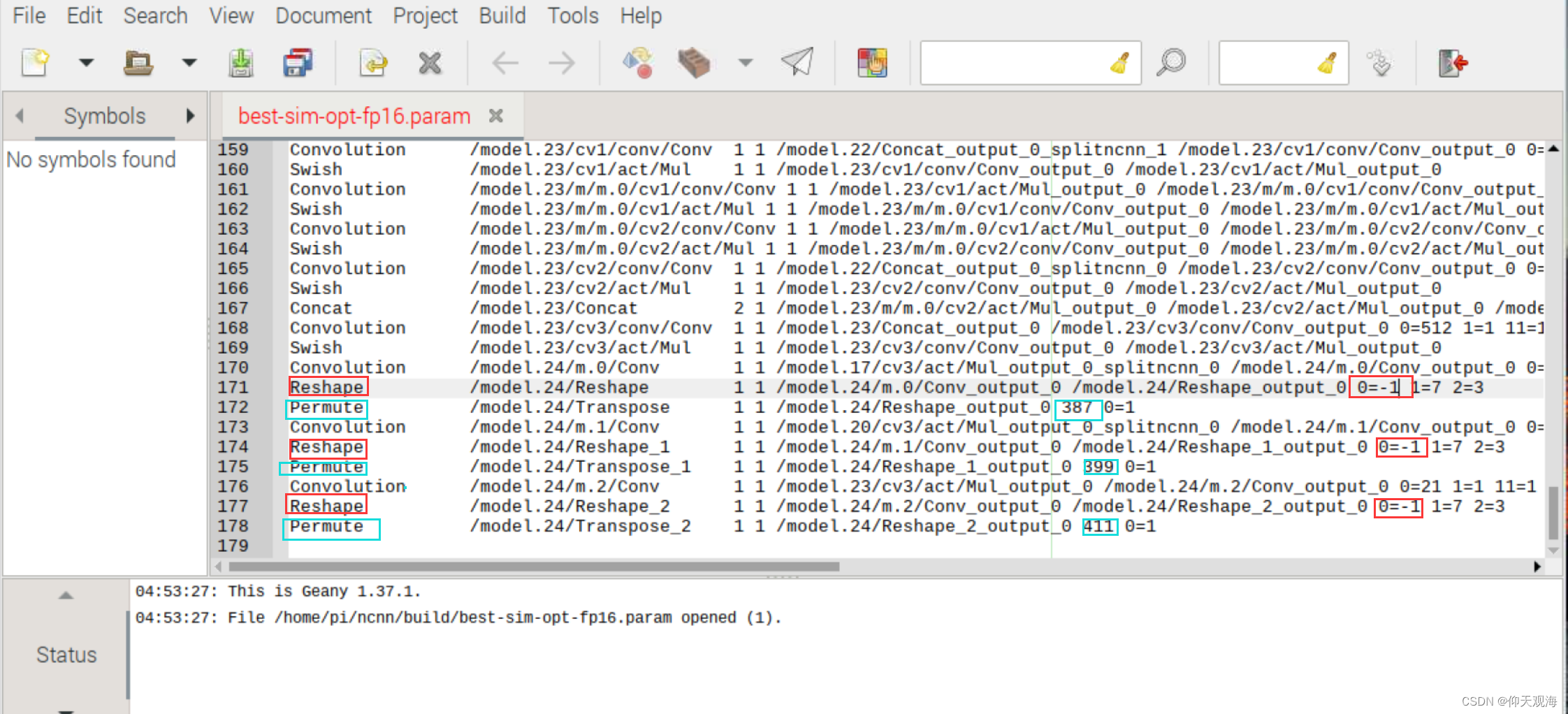

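Edit the best-sim.param file: in the Reshape layers, change `0=x` to `0=-1`. According to the official tutorial, the Reshape layers to change are the ones directly above each Permute. Note down the three sets of numbers that follow the Permute layers, because they are needed later in the code.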
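The Reshape edit can also be scripted. The sketch below is one possible way to do it, assuming the usual ncnn param line layout (`type name input_count output_count inputs... outputs... key=value...`). It rewrites parameter `0` only on Reshape layers whose output feeds a Permute. The function name `patch_reshapes` and the three-line fragment are made up for illustration.

```python
import re

def patch_reshapes(param_text):
    """Set 0=-1 on every Reshape layer whose output is the input of a Permute layer."""
    lines = param_text.splitlines()

    # First pass: collect the input blob names of all Permute layers.
    permute_inputs = set()
    for line in lines:
        f = line.split()
        if len(f) >= 4 and f[0] == "Permute":
            n_in = int(f[2])
            permute_inputs.update(f[4:4 + n_in])

    # Second pass: patch the matching Reshape layers.
    patched = []
    for line in lines:
        f = line.split()
        if len(f) >= 4 and f[0] == "Reshape":
            n_in, n_out = int(f[2]), int(f[3])
            outputs = f[4 + n_in:4 + n_in + n_out]
            if permute_inputs.intersection(outputs):
                # Only the standalone key "0=" is touched, not "10=" or blob names.
                line = re.sub(r"(?<=\s)0=-?\d+", "0=-1", line, count=1)
        patched.append(line)
    return "\n".join(patched)

# Made-up fragment of layer lines (not a complete param file).
demo = """Reshape  reshape_a  1 1 conv_a 650 0=1600 1=85 2=3
Permute  permute_a  1 1 650 output 0=1
Reshape  reshape_b  1 1 feat flat 0=10 1=2"""
patched = patch_reshapes(demo)
print(patched)
```

Only `reshape_a` is changed in the fragment, since `reshape_b` does not feed a Permute. Work on a copy of the param file so the result can be compared against a manual edit.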

Create a file named yolov5_lite.cpp in the ncnn/examples folder and paste the following code into it.

    #include "layer.h"
    #include "net.h"

    #if defined(USE_NCNN_SIMPLEOCV)
    #include "simpleocv.h"
    #else
    #include <opencv2/core/core.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/imgproc/imgproc.hpp>
    #endif
    #include <float.h>
    #include <math.h>   // exp, log, pow
    #include <stdio.h>
    #include <time.h>   // clock_gettime
    #include <algorithm>
    #include <vector>
    #include <sys/time.h>
    #include <iostream>
    #include <chrono>
    #include <opencv2/opencv.hpp>

    using namespace std;
    using namespace cv;
    using namespace std::chrono;

    // 0 : FP16
    // 1 : INT8
    #define USE_INT8 0

    // 0 : Image
    // 1 : Camera
    #define USE_CAMERA 0

    struct Object {
        cv::Rect_<float> rect;
        int label;
        float prob;
    };

    static inline float intersection_area(const Object& a, const Object& b) {
        cv::Rect_<float> inter = a.rect & b.rect;
        return inter.area();
    }

    // sort by prob, highest first
    static void qsort_descent_inplace(std::vector<Object>& faceobjects, int left, int right) {
        int i = left;
        int j = right;
        float p = faceobjects[(left + right) / 2].prob;

        while (i <= j) {
            while (faceobjects[i].prob > p)
                i++;

            while (faceobjects[j].prob < p)
                j--;

            if (i <= j) {
                // swap
                std::swap(faceobjects[i], faceobjects[j]);
                i++;
                j--;
            }
        }

        #pragma omp parallel sections
        {
            #pragma omp section
            {
                if (left < j) qsort_descent_inplace(faceobjects, left, j);
            }
            #pragma omp section
            {
                if (i < right) qsort_descent_inplace(faceobjects, i, right);
            }
        }
    }

    static void qsort_descent_inplace(std::vector<Object>& faceobjects) {
        if (faceobjects.empty())
            return;

        qsort_descent_inplace(faceobjects, 0, faceobjects.size() - 1);
    }

    static void nms_sorted_bboxes(const std::vector<Object>& faceobjects, std::vector<int>& picked, float nms_threshold) {
        picked.clear();

        const int n = faceobjects.size();

        std::vector<float> areas(n);
        for (int i = 0; i < n; i++) {
            areas[i] = faceobjects[i].rect.area();
        }

        for (int i = 0; i < n; i++) {
            const Object& a = faceobjects[i];

            int keep = 1;
            for (int j = 0; j < (int)picked.size(); j++) {
                const Object& b = faceobjects[picked[j]];

                // intersection over union
                float inter_area = intersection_area(a, b);
                float union_area = areas[i] + areas[picked[j]] - inter_area;
                // float IoU = inter_area / union_area
                if (inter_area / union_area > nms_threshold)
                    keep = 0;
            }

            if (keep)
                picked.push_back(i);
        }
    }

    static inline float sigmoid(float x) {
        return static_cast<float>(1.f / (1.f + exp(-x)));
    }

    // unsigmoid (inverse of sigmoid)
    static inline float unsigmoid(float y) {
        return static_cast<float>(-1.0 * (log((1.0 / y) - 1.0)));
    }

    static void generate_proposals(const ncnn::Mat& anchors, int stride, const ncnn::Mat& in_pad,
                                   const ncnn::Mat& feat_blob, float prob_threshold,
                                   std::vector<Object>& objects) {
        const int num_grid = feat_blob.h;
        float unsig_pro = 0;
        if (prob_threshold > 0.6)
            unsig_pro = unsigmoid(prob_threshold);

        int num_grid_x;
        int num_grid_y;
        if (in_pad.w > in_pad.h) {
            num_grid_x = in_pad.w / stride;
            num_grid_y = num_grid / num_grid_x;
        } else {
            num_grid_y = in_pad.h / stride;
            num_grid_x = num_grid / num_grid_y;
        }

        const int num_class = feat_blob.w - 5;
        const int num_anchors = anchors.w / 2;

        for (int q = 0; q < num_anchors; q++) {
            const float anchor_w = anchors[q * 2];
            const float anchor_h = anchors[q * 2 + 1];

            const ncnn::Mat feat = feat_blob.channel(q);

            for (int i = 0; i < num_grid_y; i++) {
                for (int j = 0; j < num_grid_x; j++) {
                    const float* featptr = feat.row(i * num_grid_x + j);
                    float box_score = featptr[4];

                    // while prob_threshold > 0.6, comparing the raw score with the
                    // unsigmoid-ed threshold skips low-objectness cells without a sigmoid
                    if (prob_threshold > 0.6 && !(box_score > unsig_pro))
                        continue;

                    // find class index with max class score
                    int class_index = 0;
                    float class_score = -FLT_MAX;
                    for (int k = 0; k < num_class; k++) {
                        float score = featptr[5 + k];
                        if (score > class_score) {
                            class_index = k;
                            class_score = score;
                        }
                    }

                    float confidence = sigmoid(box_score) * sigmoid(class_score);
                    if (confidence >= prob_threshold) {
                        float dx = sigmoid(featptr[0]);
                        float dy = sigmoid(featptr[1]);
                        float dw = sigmoid(featptr[2]);
                        float dh = sigmoid(featptr[3]);

                        float pb_cx = (dx * 2.f - 0.5f + j) * stride;
                        float pb_cy = (dy * 2.f - 0.5f + i) * stride;

                        float pb_w = pow(dw * 2.f, 2) * anchor_w;
                        float pb_h = pow(dh * 2.f, 2) * anchor_h;

                        float x0 = pb_cx - pb_w * 0.5f;
                        float y0 = pb_cy - pb_h * 0.5f;
                        float x1 = pb_cx + pb_w * 0.5f;
                        float y1 = pb_cy + pb_h * 0.5f;

                        Object obj;
                        obj.rect.x = x0;
                        obj.rect.y = y0;
                        obj.rect.width = x1 - x0;
                        obj.rect.height = y1 - y0;
                        obj.label = class_index;
                        obj.prob = confidence;

                        objects.push_back(obj);
                    }
                }
            }
        }
    }

    static int detect_yolov5(const cv::Mat& bgr, std::vector<Object>& objects) {
        // NOTE: the net is created and the model loaded on every call,
        // so in camera mode this cost is paid for each frame
        ncnn::Net yolov5;

    #if USE_INT8
        yolov5.opt.use_int8_inference = true;
    #else
        yolov5.opt.use_vulkan_compute = true;
        yolov5.opt.use_bf16_storage = true;
    #endif

        // original pretrained model from https://github.com/ultralytics/yolov5
        // the ncnn model https://github.com/nihui/ncnn-assets/tree/master/models

        // The original used "~/ncnn/build/...", but "~" is only expanded by a shell,
        // not when a program opens a file, so absolute paths are used here.
        // Replace them with the paths to your own param and bin files.
    #if USE_INT8
        yolov5.load_param("/home/pi/ncnn/build/best-sim-int8.param");
        yolov5.load_model("/home/pi/ncnn/build/best-sim-int8.bin");
    #else
        yolov5.load_param("/home/pi/ncnn/build/best-sim-fp16.param");
        yolov5.load_model("/home/pi/ncnn/build/best-sim-fp16.bin");
    #endif

        const int target_size = 320;
        const float prob_threshold = 0.60f;
        const float nms_threshold = 0.60f;

        int img_w = bgr.cols;
        int img_h = bgr.rows;

        // letterbox pad to multiple of 32
        int w = img_w;
        int h = img_h;
        float scale = 1.f;
        if (w > h) {
            scale = (float)target_size / w;
            w = target_size;
            h = h * scale;
        } else {
            scale = (float)target_size / h;
            h = target_size;
            w = w * scale;
        }

        ncnn::Mat in = ncnn::Mat::from_pixels_resize(bgr.data, ncnn::Mat::PIXEL_BGR2RGB, img_w, img_h, w, h);

        // pad to target_size rectangle
        // yolov5/utils/datasets.py letterbox
        int wpad = (w + 31) / 32 * 32 - w;
        int hpad = (h + 31) / 32 * 32 - h;
        ncnn::Mat in_pad;
        ncnn::copy_make_border(in, in_pad, hpad / 2, hpad - hpad / 2, wpad / 2, wpad - wpad / 2, ncnn::BORDER_CONSTANT, 114.f);

        const float norm_vals[3] = {1 / 255.f, 1 / 255.f, 1 / 255.f};
        in_pad.substract_mean_normalize(0, norm_vals);

        ncnn::Extractor ex = yolov5.create_extractor();

        ex.input("images", in_pad);

        std::vector<Object> proposals;

        // stride 8
        {
            ncnn::Mat out;
            ex.extract("output", out);

            ncnn::Mat anchors(6);
            anchors[0] = 10.f;
            anchors[1] = 13.f;
            anchors[2] = 16.f;
            anchors[3] = 30.f;
            anchors[4] = 33.f;
            anchors[5] = 23.f;

            std::vector<Object> objects8;
            generate_proposals(anchors, 8, in_pad, out, prob_threshold, objects8);

            proposals.insert(proposals.end(), objects8.begin(), objects8.end());
        }

        // stride 16
        {
            ncnn::Mat out;
            ex.extract("671", out);

            ncnn::Mat anchors(6);
            anchors[0] = 30.f;
            anchors[1] = 61.f;
            anchors[2] = 62.f;
            anchors[3] = 45.f;
            anchors[4] = 59.f;
            anchors[5] = 119.f;

            std::vector<Object> objects16;
            generate_proposals(anchors, 16, in_pad, out, prob_threshold, objects16);

            proposals.insert(proposals.end(), objects16.begin(), objects16.end());
        }

        // stride 32
        {
            ncnn::Mat out;
            ex.extract("691", out);

            ncnn::Mat anchors(6);
            anchors[0] = 116.f;
            anchors[1] = 90.f;
            anchors[2] = 156.f;
            anchors[3] = 198.f;
            anchors[4] = 373.f;
            anchors[5] = 326.f;

            std::vector<Object> objects32;
            generate_proposals(anchors, 32, in_pad, out, prob_threshold, objects32);

            proposals.insert(proposals.end(), objects32.begin(), objects32.end());
        }

        // sort all proposals by score from highest to lowest
        qsort_descent_inplace(proposals);

        // apply nms with nms_threshold
        std::vector<int> picked;
        nms_sorted_bboxes(proposals, picked, nms_threshold);

        int count = picked.size();

        objects.resize(count);
        for (int i = 0; i < count; i++) {
            objects[i] = proposals[picked[i]];

            // adjust offset to original unpadded
            float x0 = (objects[i].rect.x - (wpad / 2)) / scale;
            float y0 = (objects[i].rect.y - (hpad / 2)) / scale;
            float x1 = (objects[i].rect.x + objects[i].rect.width - (wpad / 2)) / scale;
            float y1 = (objects[i].rect.y + objects[i].rect.height - (hpad / 2)) / scale;

            // clip
            x0 = std::max(std::min(x0, (float)(img_w - 1)), 0.f);
            y0 = std::max(std::min(y0, (float)(img_h - 1)), 0.f);
            x1 = std::max(std::min(x1, (float)(img_w - 1)), 0.f);
            y1 = std::max(std::min(y1, (float)(img_h - 1)), 0.f);

            objects[i].rect.x = x0;
            objects[i].rect.y = y0;
            objects[i].rect.width = x1 - x0;
            objects[i].rect.height = y1 - y0;
        }

        return 0;
    }

    static void draw_objects(const cv::Mat& bgr, const std::vector<Object>& objects) {
        static const char* class_names[] = {
            "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
            "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
            "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
            "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
            "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
            "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
            "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",
            "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
            "hair drier", "toothbrush"
        };

        cv::Mat image = bgr.clone();

        for (size_t i = 0; i < objects.size(); i++) {
            const Object& obj = objects[i];

            fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,
                    obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

            cv::rectangle(image, obj.rect, cv::Scalar(0, 255, 0));

            char text[256];
            sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);

            int baseLine = 0;
            cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

            int x = obj.rect.x;
            int y = obj.rect.y - label_size.height - baseLine;
            if (y < 0)
                y = 0;
            if (x + label_size.width > image.cols)
                x = image.cols - label_size.width;

            cv::rectangle(image, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),
                          cv::Scalar(255, 255, 255), -1);

            cv::putText(image, text, cv::Point(x, y + label_size.height),
                        cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));

            // cv::putText(image, to_string(fps), cv::Point(100, 100), // FPS
            //             cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));
        }
    #if USE_CAMERA
        imshow("camera", image);
        cv::waitKey(1);
    #else
        cv::imwrite("result.jpg", image);
    #endif
    }

    #if USE_CAMERA
    int main(int argc, char** argv) {
        cv::VideoCapture capture;
        capture.open(0); // change this index to select which camera to open

        cv::Mat frame;

        while (true) {
            capture >> frame;
            if (frame.empty()) // camera could not be opened or the stream ended
                break;

            std::vector<Object> objects;

            auto start_time = std::chrono::high_resolution_clock::now(); // record start time
            detect_yolov5(frame, objects);
            auto end_time = std::chrono::high_resolution_clock::now(); // record end time
            auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(end_time - start_time); // elapsed time
            float fps = (float)(1000) / duration.count();

            draw_objects(frame, objects);
            cout << "FPS: " << fps << endl;

            // int x = frame.cols - 50;
            // int y = frame.rows - 50;
            // cv::putText(frame, to_string(fps), cv::Point(100, 100), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));
            // if (cv::waitKey(30) >= 0)
            //     break;
        }
        return 0;
    }
    #else
    int main(int argc, char** argv) {
        if (argc != 2) {
            fprintf(stderr, "Usage: %s [imagepath]\n", argv[0]);
            return -1;
        }

        const char* imagepath = argv[1];

        struct timespec begin, end;
        clock_gettime(CLOCK_MONOTONIC, &begin);

        cv::Mat m = cv::imread(imagepath, 1);
        if (m.empty()) {
            fprintf(stderr, "cv::imread %s failed\n", imagepath);
            return -1;
        }

        std::vector<Object> objects;
        detect_yolov5(m, objects);

        clock_gettime(CLOCK_MONOTONIC, &end);
        // elapsed nanoseconds: the seconds part must be scaled before adding tv_nsec
        long long time = (long long)(end.tv_sec - begin.tv_sec) * 1000000000LL + (end.tv_nsec - begin.tv_nsec);
        printf(">> Time : %lf ms\n", (double)time / 1000000);

        draw_objects(m, objects);

        return 0;
    }
    #endif

Modify this code as follows.

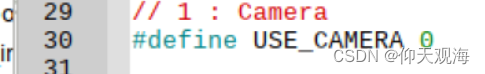

To detect from a camera, edit line 30 of the author's file (the `#define USE_CAMERA 0` line) and change the 0 to 1.

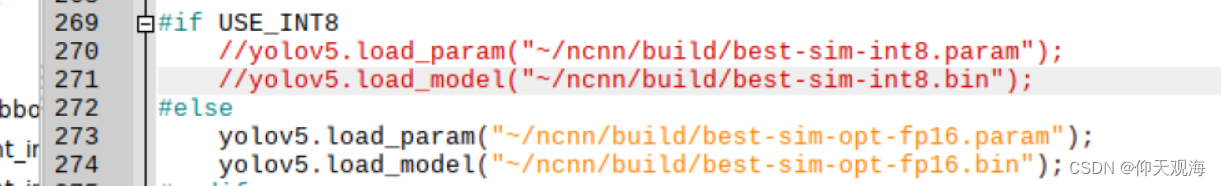

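Comment out lines 270 and 271, and on lines 273 and 274 (the `load_param` and `load_model` calls) fill in the paths to your own param and bin files. The line numbers are those of the author's file, as shown in the screenshots.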

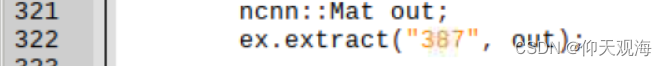

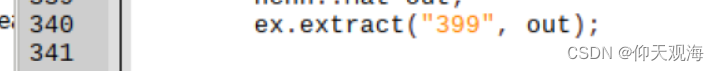

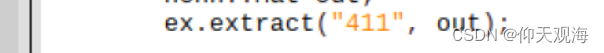

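Modify lines 322, 340 and 359 (the three `ex.extract(...)` calls). Change the output names in them so that they match, one for one, the numbers on the Permute lines of the param file.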

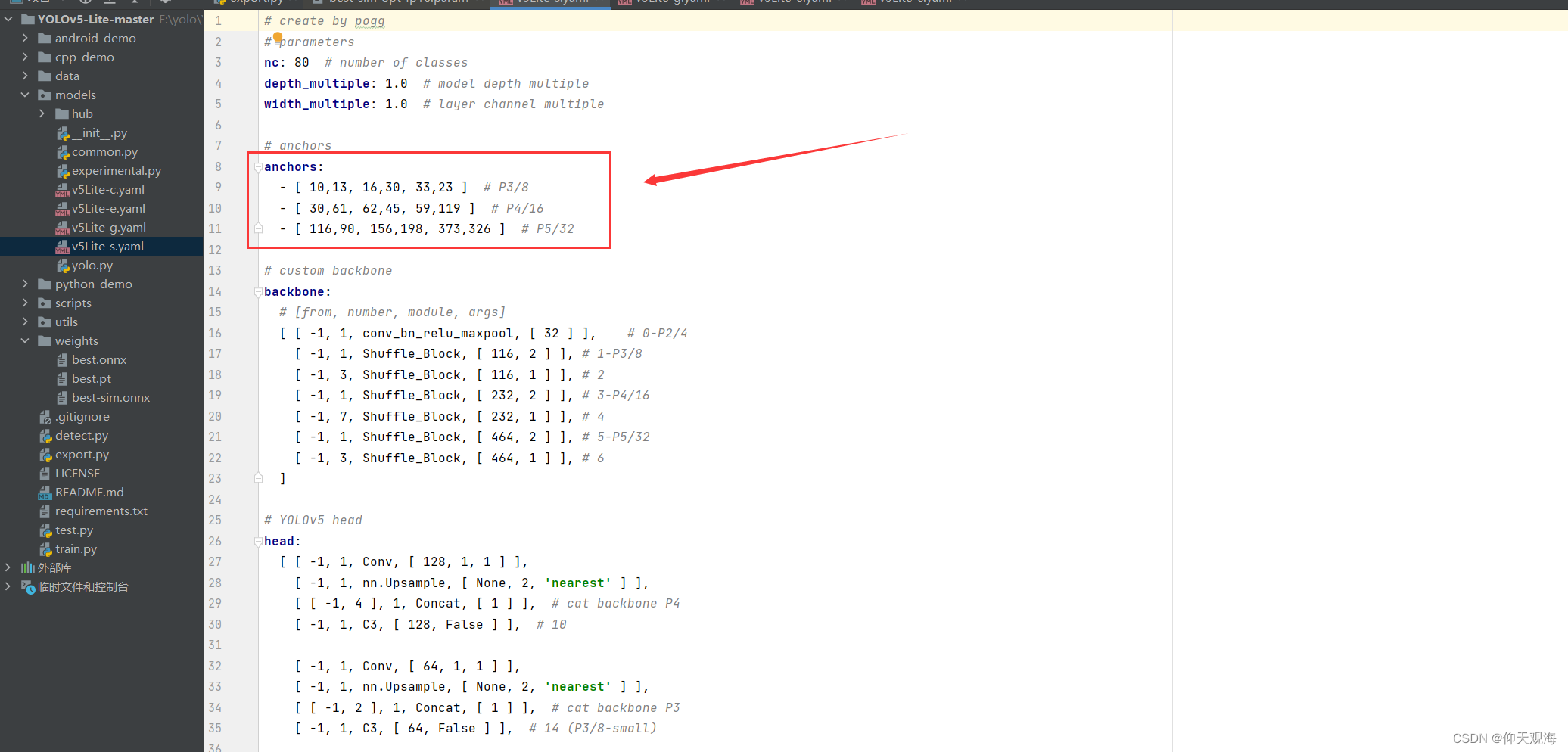

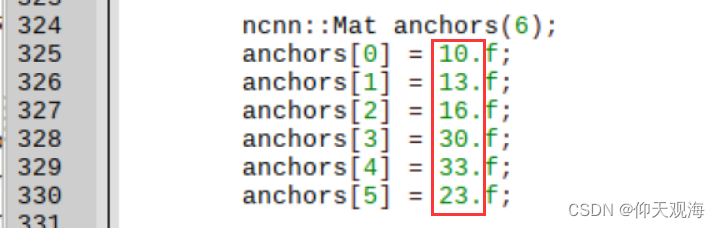

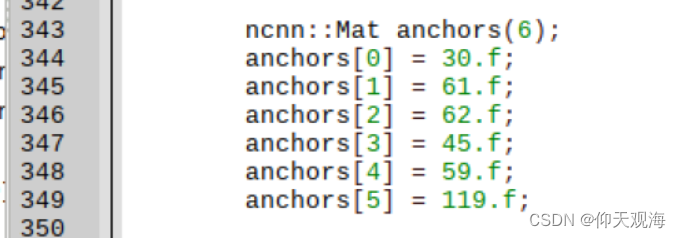

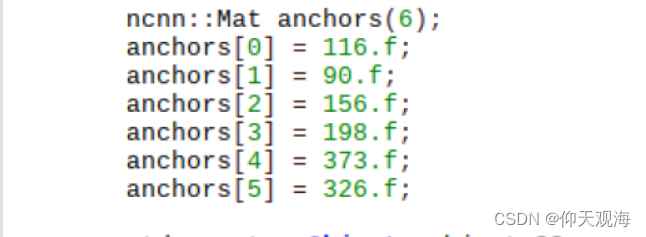
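Reading the Permute outputs off the param file by eye is error-prone, so a small helper can list them in file order. This is a minimal sketch that assumes the usual ncnn param line layout (`type name input_count output_count inputs... outputs...`). The function name `permute_outputs` and the fragment are made up; the blob names in it reuse the ones from the example code above.

```python
def permute_outputs(param_text):
    """Return the output blob names of all Permute layers, in file order."""
    names = []
    for line in param_text.splitlines():
        f = line.split()
        if len(f) >= 4 and f[0] == "Permute":
            n_in, n_out = int(f[2]), int(f[3])
            names.extend(f[4 + n_in:4 + n_in + n_out])
    return names

# Made-up fragment; the names reuse those in the example code.
demo = """Permute  permute_0  1 1 650 output 0=1
Convolution  conv_x  1 1 a b 0=64
Permute  permute_1  1 1 670 671 0=1
Permute  permute_2  1 1 690 691 0=1"""
print(permute_outputs(demo))
```

The three names returned are the strings to pass to the three `ex.extract(...)` calls. Which name belongs to which stride still has to be checked against your own model.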

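Then open PyCharm. The anchors in the code are the ones from the official training, so they can be left alone for the official model. If you trained on your own dataset, you must change the anchors so that they match your model one for one.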

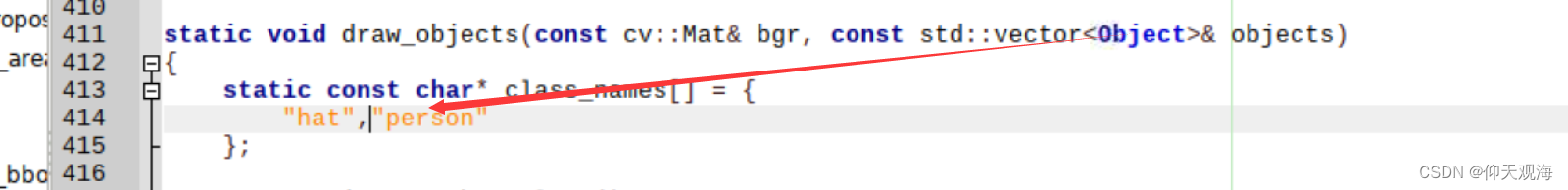
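When the anchors do need changing, typing 18 assignments by hand invites typos. The sketch below generates the C++ assignment lines from a per-stride list of anchor values. The function name `anchors_to_cpp` is hypothetical. The values shown are simply the ones already in the example code, so substitute the anchors from your own training configuration.

```python
def anchors_to_cpp(anchors_by_stride):
    """Map each stride to C++ statements that fill an ncnn::Mat named `anchors`."""
    blocks = {}
    for stride, values in anchors_by_stride.items():
        if len(values) % 2 != 0:
            raise ValueError(f"stride {stride}: anchors must come in (w, h) pairs")
        lines = [f"ncnn::Mat anchors({len(values)});"]
        lines += [f"anchors[{i}] = {float(v)!r}f;" for i, v in enumerate(values)]
        blocks[stride] = "\n".join(lines)
    return blocks

# Values copied from the example code; replace with your own anchors.
blocks = anchors_to_cpp({
    8: [10, 13, 16, 30, 33, 23],
    16: [30, 61, 62, 45, 59, 119],
    32: [116, 90, 156, 198, 373, 326],
})
for stride, text in blocks.items():
    print(f"// stride {stride}")
    print(text)
```

Each generated block can be pasted over the matching `// stride N` section of the C++ file.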

Next, change the class names to the classes you trained on.

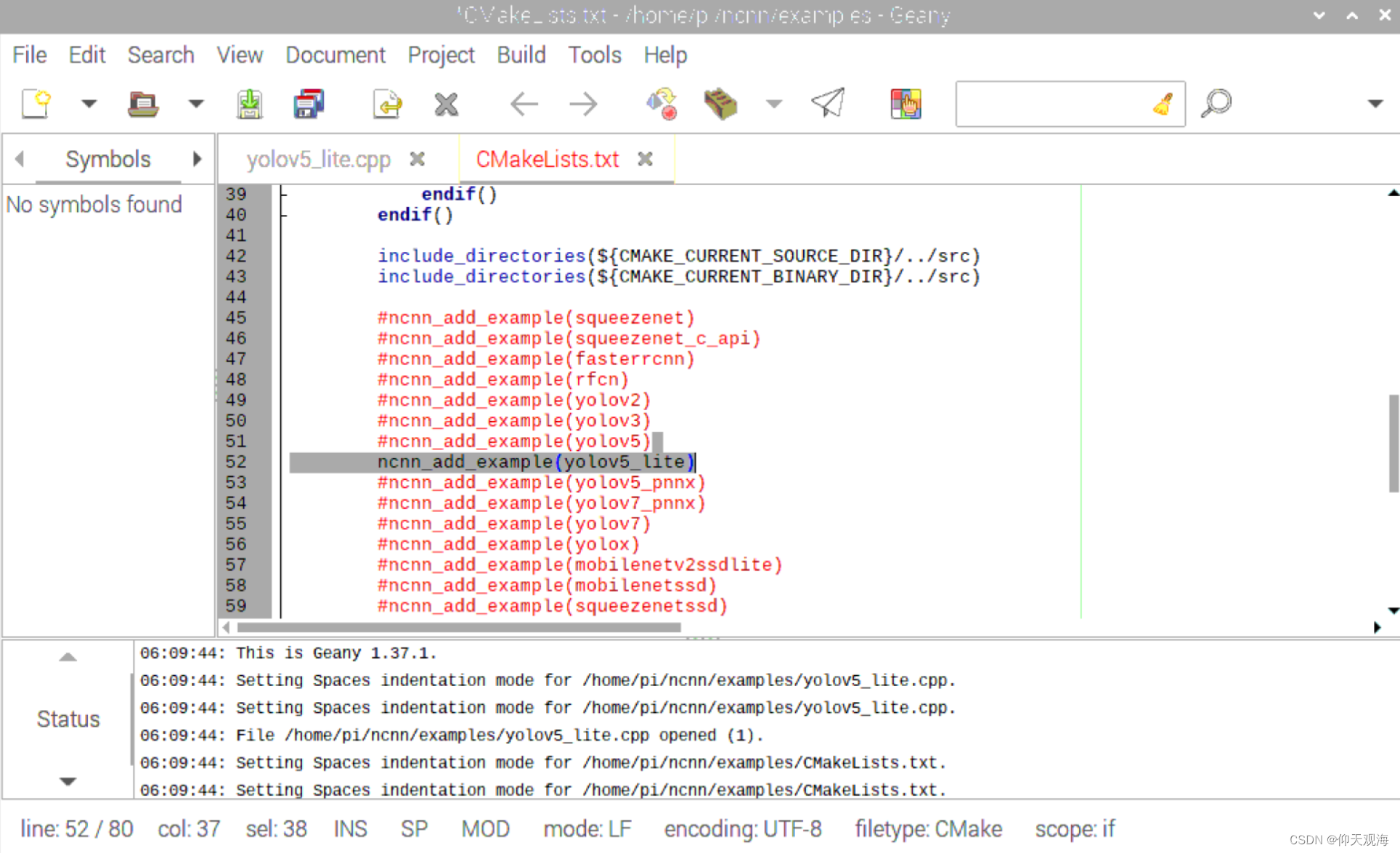
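The `class_names` array can likewise be generated from a plain list. This is a minimal sketch with hypothetical class names, and `class_names_to_cpp` is a helper named here for illustration. The list must be in the same order as the labels used in training, and it needs as many entries as the model has classes, because the C++ code indexes the array with `obj.label`.

```python
import json

def class_names_to_cpp(names):
    """Build the C++ class_names array declaration from a list of names."""
    # json.dumps gives a double-quoted, escaped literal, which is also valid C++ for plain ASCII names.
    quoted = ", ".join(json.dumps(name) for name in names)
    return f"static const char* class_names[] = {{ {quoted} }};"

my_classes = ["cat", "dog"]  # hypothetical classes, in training label order
print(class_names_to_cpp(my_classes))
```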

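Modify CMakeLists.txt

Open ncnn/examples/CMakeLists.txt and add the yolov5_lite file you just created. Models you don't need can be commented out.

Make sure the name matches the file you created exactly.

When done, build with cmake.

    cd ncnn/build
    cmake ..
    make

With [Method 2], you first have to simplify the param and bin files and convert them to fp16, then put them in the ncnn/build folder. This is the approach most bloggers use, and the remaining steps are the same as above. Do this step on the Raspberry Pi if possible, otherwise all sorts of odd errors show up. In my tests, both methods gave the same final result.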

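[Method 2] ncnn model conversion: generating the param and bin files

    cd ~/ncnn/build/tools
    ./onnx2ncnn ./best-sim.onnx ./best-sim.param ./best-sim.bin

In a default build, `onnx2ncnn` is usually under `tools/onnx`, so adjust the path if the command is not found.

Convert to fp16

    ./ncnnoptimize ./best-sim.param ./best-sim.bin ./best-sim-fp16.param ./best-sim-fp16.bin 65536

Here 65536 is the flag that switches the model to fp16.

Running yolov5_lite:

This post uses a camera for real-time detection. To run detection on images or video, refer to other posts.

    cd /home/pi/ncnn/build/examples
    ./yolov5_lite

Summary: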

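Most bloggers seem to get about 3 to 5 FPS after finishing, but mine runs at about 0.6 FPS. One difference I noticed is that the param files most bloggers export contain only numbers, while mine is full of letters. I spent a long time looking for the reason and did not find it, so my export method is probably at fault. Overall, ncnn feels a bit faster than running without an inference framework (this may just be my impression), but real-time detection on a Raspberry Pi is still hard. This post is for my own study. The project took a long time and I hit many pitfalls along the way, with little chance to discuss them with anyone. Feel free to message me privately if you would like to discuss it.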
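To put those frame rates in terms of time per frame, the arithmetic is sketched below. The helper names are made up for illustration. One thing that may be worth checking when comparing numbers: in the code as posted, `detect_yolov5` builds the net and loads the model on every call, so the timed interval in camera mode includes model loading. Whether that explains the gap is not something this post establishes.

```python
def fps_from_ms(frame_ms):
    """Frames per second for a single frame that took frame_ms milliseconds."""
    return 1000.0 / frame_ms

def mean_fps(frame_times_ms):
    """Average FPS over several frames: frame count divided by total seconds."""
    return len(frame_times_ms) * 1000.0 / sum(frame_times_ms)

# 0.6 FPS is roughly 1667 ms per frame; 3 to 5 FPS is roughly 333 to 200 ms.
for fps in (0.6, 3.0, 5.0):
    print(f"{fps} FPS = {1000.0 / fps:.0f} ms per frame")

# Averaging over a few hypothetical frame times smooths out single-frame noise.
print(mean_fps([200, 250, 300]))
```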