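Ollama: A Tool for Running Large Language Models

Official site: https://ollama.com/

Ollama is a tool for deploying and running a wide range of open-source large language models.

It gets a model running locally with just a few commands, which greatly simplifies local setup. Llama 2 is one example of a model it can run.

In short, Ollama is a deployment and runtime tool: you use it to host and run models, which makes it easy for developers to set up a local LLM environment.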

Download: https://ollama.com/download

Downloading Ollama

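Note: Ollama's resource requirements depend on the size of the model you run.

1. Memory: running a 7B (7 billion parameter) model requires at least 8 GB of available RAM, a 13B (13 billion parameter) model requires 16 GB, and a 33B (33 billion parameter) model requires 32 GB.

2. Disk: model files can be large, so make sure there is enough free space. Reserving at least 50 GB for Ollama and its models is recommended.

3. CPU: a faster CPU improves inference speed and efficiency, and a multi-core processor handles parallel work better, so choose a CPU with enough cores.

4. GPU: Ollama can run on the CPU alone, but if the machine has an NVIDIA GPU, Ollama can use it to accelerate inference and improve performance.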
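The sizing figures above can be turned into a quick pre-flight check. The following is a minimal sketch, not part of Ollama: the class name `OllamaResourceGuide` and its methods are hypothetical, and treating any model of 7B or smaller as needing 8 GB is an assumption, since the text only gives three data points.

```java
import java.io.File;
import java.util.OptionalInt;

/** Rule-of-thumb resource checks based on the figures quoted in the text (hypothetical helper). */
class OllamaResourceGuide {

    /** Recommended free disk space from the text: 50 GB. */
    static final long RECOMMENDED_DISK_BYTES = 50L * 1024 * 1024 * 1024;

    /**
     * Maps a model size (in billions of parameters) to the minimum RAM in GB
     * quoted in the text. Sizes above 33B are not covered by the text, so the
     * result is empty rather than a guess. Sizes below 7B are assumed to fit
     * in the 7B tier.
     */
    static OptionalInt minRamGb(double billionParams) {
        if (billionParams <= 0) {
            throw new IllegalArgumentException("model size must be positive");
        }
        if (billionParams <= 7) return OptionalInt.of(8);
        if (billionParams <= 13) return OptionalInt.of(16);
        if (billionParams <= 33) return OptionalInt.of(32);
        return OptionalInt.empty();
    }

    /** True if the given number of usable bytes meets the 50 GB recommendation. */
    static boolean hasEnoughDisk(long usableBytes) {
        return usableBytes >= RECOMMENDED_DISK_BYTES;
    }

    public static void main(String[] args) {
        double modelSize = 7; // a 7B model
        System.out.println("Minimum RAM (GB): " + minRamGb(modelSize));

        // Usable space on the partition that holds the user's home directory.
        long usable = new File(System.getProperty("user.home")).getUsableSpace();
        System.out.println("Enough disk space: " + hasEnoughDisk(usable));
    }
}
```

Returning an empty `OptionalInt` for sizes the text does not cover keeps the helper from inventing numbers for larger models.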

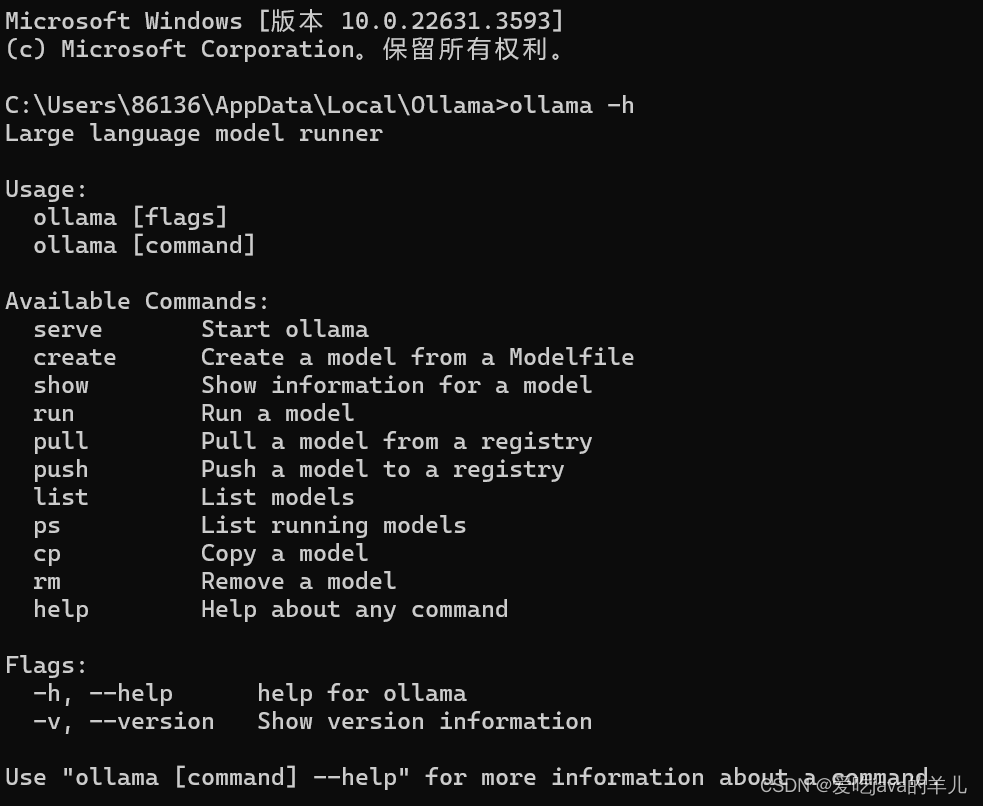

Using ollama from the command line

Open a terminal and run ollama -h to list all available commands.

Run service ollama start to start Ollama.

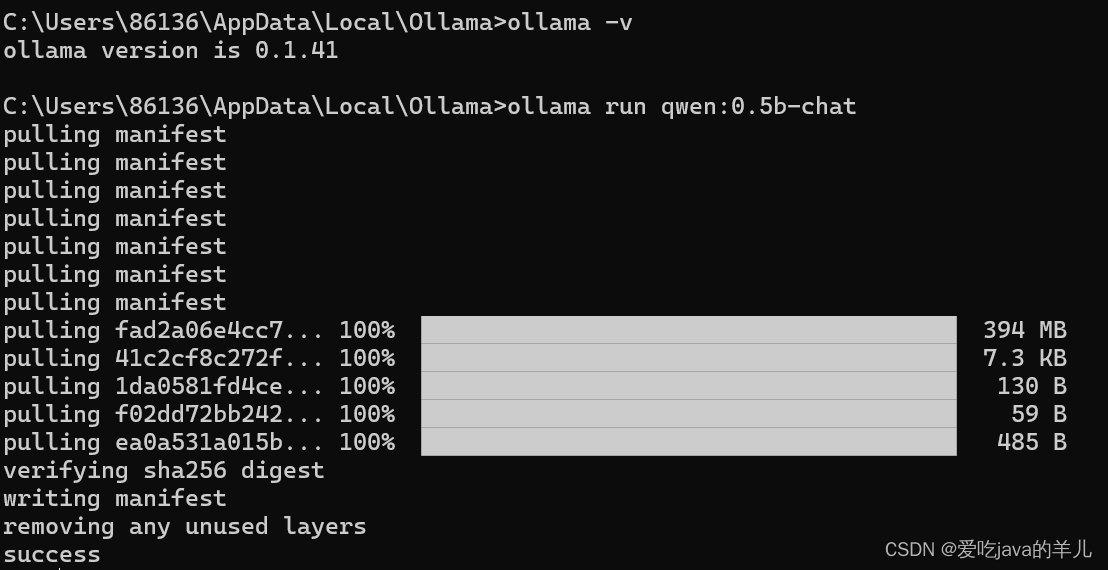

Run ollama -v to check the installed version. If a version is printed, the installation succeeded.
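The same check can be scripted from Java. This is a minimal sketch that assumes the `ollama` executable is on the PATH; the class `OllamaInstallCheck` and its methods are hypothetical names chosen for illustration.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;

/** Runs "ollama -v" and applies the rule from the text: printed output means installed. */
class OllamaInstallCheck {

    /** A clean exit with non-blank output is treated as a successful install. */
    static boolean looksInstalled(int exitCode, String output) {
        return exitCode == 0 && output != null && !output.isBlank();
    }

    /** Launches "ollama -v" and reports whether it produced a version line. */
    static boolean isOllamaInstalled() throws InterruptedException {
        try {
            Process process = new ProcessBuilder("ollama", "-v")
                    .redirectErrorStream(true) // merge stderr into stdout
                    .start();
            String output = new String(
                    process.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
            int exitCode = process.waitFor();
            return looksInstalled(exitCode, output);
        } catch (IOException e) {
            // Typically thrown when the executable cannot be found or started.
            return false;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Ollama installed: " + isOllamaInstalled());
    }
}
```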

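Running a model: single-turn chat

Pull and run the llama2 model:

    ollama run llama2

This command first checks whether the model is already present locally. If it is not, the model is downloaded automatically and then started once the download finishes.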

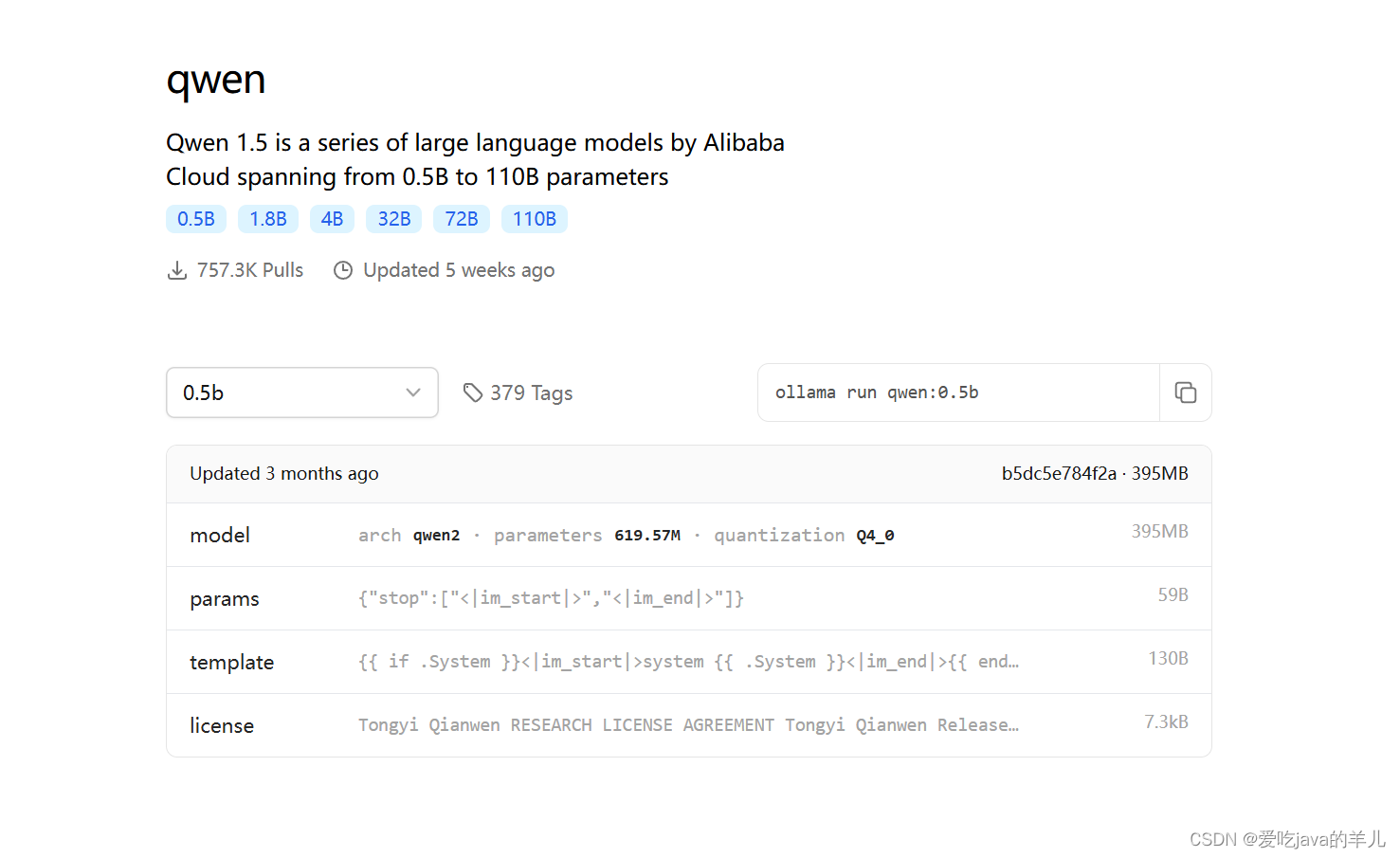

For other models, see the model library: https://ollama.com/library

    # Show the Ollama version
    ollama -v
    # List installed models
    ollama list
    # Remove a specific model
    ollama rm [modelname]
    # Model storage path
    # C:\Users\<username>\.ollama\models

    # Run the qwen:0.5b model
    ollama run qwen:0.5b

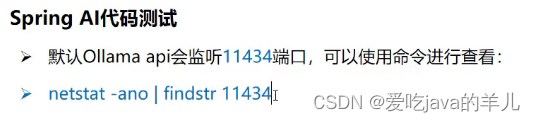

By default, the Ollama API listens on port 11434. You can confirm this with:

    netstat -ano | findstr 11434

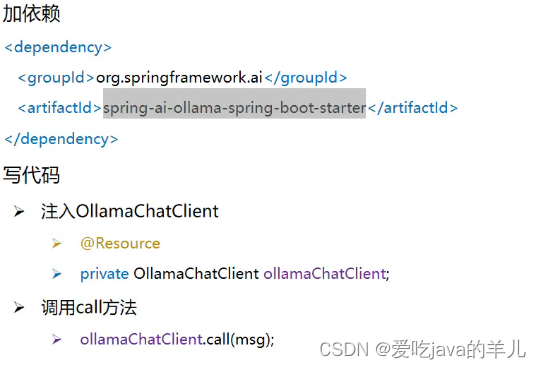
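Another way to confirm that the API is up is to send it a request. The sketch below posts a prompt to the `/api/generate` endpoint using the JDK's built-in `HttpClient`. It assumes Ollama is running locally on the default port and that the `qwen:0.5b` model has already been pulled; the class `OllamaHttpProbe` and the hand-rolled JSON helpers are illustrative, and a real project would more likely use a JSON library.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

/** Sends one non-streaming generate request to a local Ollama server (illustrative). */
class OllamaHttpProbe {

    /** Escapes the characters that would break a JSON string literal. */
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        // Remaining control characters use the 4-digit hex form.
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** Builds the request body; "stream": false asks for a single JSON reply. */
    static String buildGenerateBody(String model, String prompt) {
        return "{\"model\":\"" + jsonEscape(model) + "\",\"prompt\":\""
                + jsonEscape(prompt) + "\",\"stream\":false}";
    }

    public static void main(String[] args) throws InterruptedException {
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("http://localhost:11434/api/generate"))
                .timeout(Duration.ofMinutes(2))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(
                        buildGenerateBody("qwen:0.5b", "Hello")))
                .build();
        try {
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println("HTTP " + response.statusCode());
            System.out.println(response.body());
        } catch (IOException e) {
            // A connection failure here usually means nothing is listening on 11434.
            System.out.println("Could not reach Ollama on port 11434: " + e.getMessage());
        }
    }
}
```

If the request fails with a connection error, the server is most likely not running or is bound to a different port.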

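Add the dependency:

    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-ollama-spring-boot-starter</artifactId>
    </dependency>

Then write the code. Inject OllamaChatModel, the class used by the controller in this document (older Spring AI releases named it OllamaChatClient), and invoke its call method:

    @Resource
    private OllamaChatModel ollamaChatModel;

    // Invoke the call method
    ollamaChatModel.call(msg);

Full pom file: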

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>3.3.0</version>
            <relativePath/> <!-- lookup parent from repository -->
        </parent>
        <groupId>com.zzq</groupId>
        <artifactId>spring-ai-ollama</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <name>spring-ai-ollama</name>
        <description>spring-ai-ollama</description>
        <properties>
            <java.version>17</java.version>
            <!-- Snapshot version -->
            <spring-ai.version>1.0.0-SNAPSHOT</spring-ai.version>
        </properties>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-ollama-spring-boot-starter</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-devtools</artifactId>
                <scope>runtime</scope>
                <optional>true</optional>
            </dependency>
            <dependency>
                <groupId>org.projectlombok</groupId>
                <artifactId>lombok</artifactId>
                <optional>true</optional>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
            </dependency>
        </dependencies>
        <dependencyManagement>
            <dependencies>
                <dependency>
                    <groupId>org.springframework.ai</groupId>
                    <artifactId>spring-ai-bom</artifactId>
                    <version>${spring-ai.version}</version>
                    <type>pom</type>
                    <scope>import</scope>
                </dependency>
            </dependencies>
        </dependencyManagement>
        <build>
            <plugins>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                    <configuration>
                        <excludes>
                            <exclude>
                                <groupId>org.projectlombok</groupId>
                                <artifactId>lombok</artifactId>
                            </exclude>
                        </excludes>
                    </configuration>
                </plugin>
            </plugins>
        </build>
        <!-- Snapshot repository -->
        <repositories>
            <repository>
                <id>spring-snapshot</id>
                <name>Spring Snapshots</name>
                <url>https://repo.spring.io/snapshot</url>
                <releases>
                    <enabled>false</enabled>
                </releases>
            </repository>
        </repositories>
    </project>

Contents of the application file:

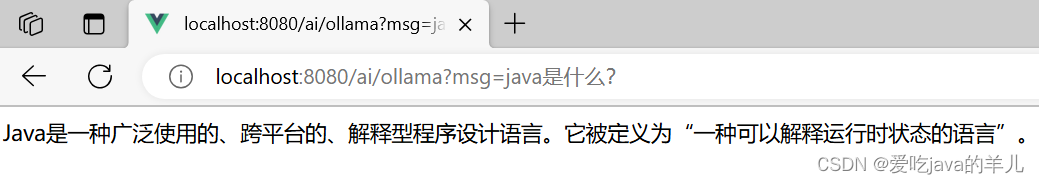

    spring:
      application:
        name: spring-ai-05-ollama
      ai:
        ollama:
          base-url: http://localhost:11434
          chat:
            options:
              model: qwen:0.5b

Controller:

    package com.zzq.controller;

    import jakarta.annotation.Resource;
    import org.springframework.ai.ollama.OllamaChatModel;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RequestParam;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class OllamaController {

        @Resource
        private OllamaChatModel ollamaChatModel;

        // Sends msg to the model configured in the application file and returns the reply text.
        @RequestMapping(value = "/ai/ollama")
        public Object ollama(@RequestParam(value = "msg") String msg) {
            String called = ollamaChatModel.call(msg);
            System.out.println(called);
            return called;
        }
    }

An extended version of the controller adds a second endpoint that sets the model and temperature per request:

    package com.zzq.controller;

    import jakarta.annotation.Resource;
    import org.springframework.ai.chat.model.ChatResponse;
    import org.springframework.ai.chat.prompt.Prompt;
    import org.springframework.ai.ollama.OllamaChatModel;
    import org.springframework.ai.ollama.api.OllamaOptions;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RequestParam;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class OllamaController {

        @Resource
        private OllamaChatModel ollamaChatModel;

        // Sends msg to the model configured in the application file and returns the reply text.
        @RequestMapping(value = "/ai/ollama")
        public Object ollama(@RequestParam(value = "msg") String msg) {
            String called = ollamaChatModel.call(msg);
            System.out.println(called);
            return called;
        }

        // Same as above, but overrides the model and temperature for this request.
        @RequestMapping(value = "/ai/ollama2")
        public Object ollama2(@RequestParam(value = "msg") String msg) {
            ChatResponse chatResponse = ollamaChatModel.call(new Prompt(msg, OllamaOptions.create()
                    .withModel("qwen:0.5b")    // which model to use
                    .withTemperature(0.4F)));  // higher values give more random, less reliable output; lower values give more focused output
            String content = chatResponse.getResult().getOutput().getContent();
            System.out.println(content);
            return content;
        }
    }
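To see why a lower temperature makes output more predictable, it helps to look at how temperature is commonly applied when sampling tokens: the model's raw scores (logits) are divided by the temperature before being turned into probabilities. The sketch below illustrates that general mechanism only. It is not taken from Ollama or Spring AI, the class name `TemperatureDemo` is hypothetical, and the logit values are made up for the example.

```java
import java.util.Arrays;

/** Illustrates temperature-scaled softmax with made-up logits (not Ollama's internal code). */
class TemperatureDemo {

    /** Converts logits to probabilities after dividing each logit by the temperature. */
    static double[] softmax(double[] logits, double temperature) {
        if (temperature <= 0) {
            throw new IllegalArgumentException("temperature must be positive");
        }
        // Subtract the largest scaled logit before exponentiating for numerical stability.
        double max = Double.NEGATIVE_INFINITY;
        for (double logit : logits) {
            max = Math.max(max, logit / temperature);
        }
        double[] probs = new double[logits.length];
        double sum = 0;
        for (int i = 0; i < logits.length; i++) {
            probs[i] = Math.exp(logits[i] / temperature - max);
            sum += probs[i];
        }
        for (int i = 0; i < probs.length; i++) {
            probs[i] /= sum;
        }
        return probs;
    }

    public static void main(String[] args) {
        double[] logits = {2.0, 1.0, 0.5}; // hypothetical scores for three candidate tokens
        System.out.println("T=0.4: " + Arrays.toString(softmax(logits, 0.4)));
        System.out.println("T=1.5: " + Arrays.toString(softmax(logits, 1.5)));
    }
}
```

At the lower temperature the top-scoring token takes a larger share of the probability mass, so sampling picks it more consistently. At the higher temperature the distribution flattens and less likely tokens are chosen more often.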