✅作者简介:热爱科研的Matlab仿真开发者,修心和技术同步精进,代码获取、论文复现及科研仿真合作可私信。

🍎个人主页:Matlab科研工作室

🍊个人信条:格物致知。

更多Matlab完整代码及仿真定制内容点击👇

🔥 内容介绍

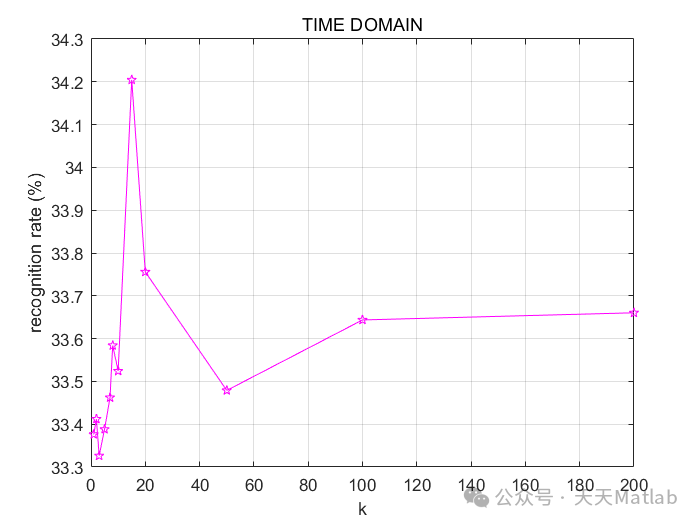

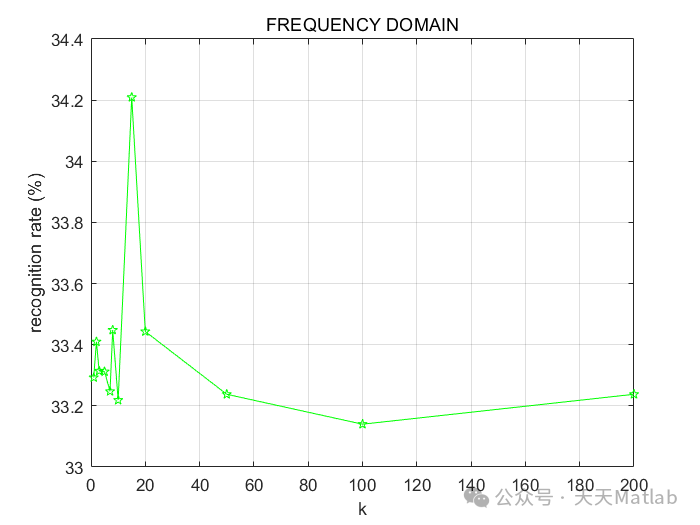

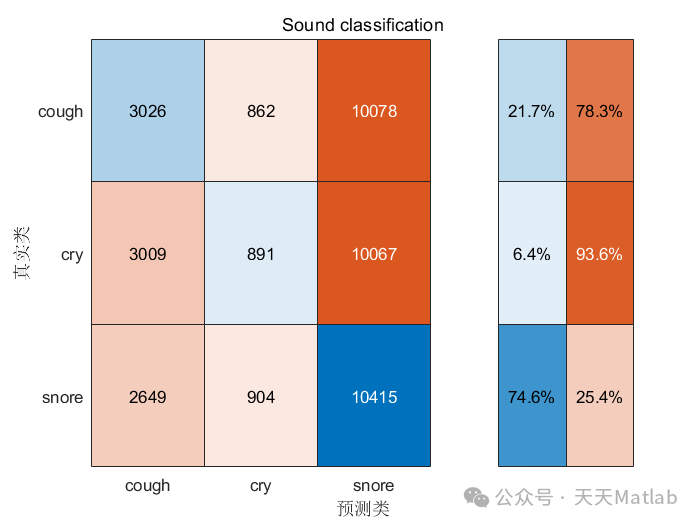

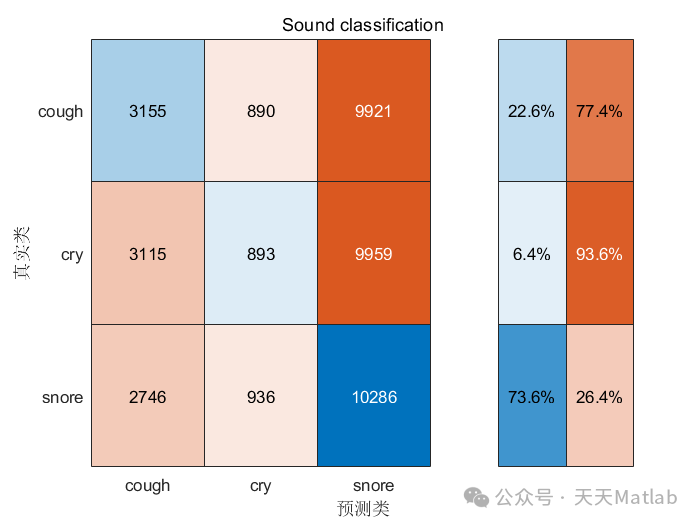

声音自动分类是语音识别领域的一项重要任务,广泛应用于语音交互、语音控制和医疗诊断等领域。本文提出了一种基于主成分分析(PCA)和最近邻(KNN)相结合的声音自动分类方法。PCA用于提取声音特征的降维表示,KNN用于基于降维特征进行分类。实验结果表明,该方法在多个声音数据集上取得了较高的分类精度,证明了其有效性和实用性。

引言

声音自动分类旨在根据声音特征将声音样本分类到预定义的类别中。传统的声音自动分类方法通常依赖于手工提取的特征,这需要大量的专业知识和经验。近年来,随着机器学习和深度学习的发展,基于数据驱动的特征提取和分类方法得到了广泛的关注。

方法

本文提出的方法包括以下步骤:

**特征提取:**使用梅尔频谱系数(MFCC)从声音样本中提取特征。MFCC是一种广泛用于语音识别领域的特征提取算法,它可以有效地捕捉声音的频谱信息。

**主成分分析(PCA):**对MFCC特征进行PCA降维。PCA是一种线性变换,它可以将高维特征投影到低维子空间中,同时保留最大方差的信息。PCA降维可以减少特征的冗余和噪声,提高分类的效率和准确性。

**最近邻(KNN):**使用KNN算法对PCA降维后的特征进行分类。KNN是一种非参数分类算法,它将一个新的样本分类为与它最相似的K个样本所属的类别。

结论

本文提出了一种基于PCA和KNN相结合的声音自动分类方法。该方法利用PCA降维提取声音特征的有效表示,并使用KNN进行分类。实验结果表明,该方法在多个声音数据集上取得了较高的分类精度,证明了其有效性和实用性。该方法可以为语音识别、语音控制和医疗诊断等领域提供一种有价值的工具。

📣 部分代码

clear; clc% ––––––––––––––––––––––––––––– IASPROJECT –––––––––––––––––––––––––––––––%----AUTHOR: ALESSANDRO-SCALAMBRINO-923216-------% ---MANAGE PATH, DIRECTORIES, FILES--addpath(genpath(pwd))fprintf('Extracting features from the audio files...\n\n')coughingFile = dir([pwd,'/Coughing/*.ogg']);cryingFile = dir([pwd,'/Crying/*.ogg']);snoringFile = dir([pwd,'/Snoring/*.ogg']);F = [coughingFile; cryingFile; snoringFile];% ---WINDOWS/STEP LENGHT---windowLength = 0.025;stepLength = 0.01;% ---FEATURES EXTRACTION---% ---INITIALIZING FEATURES VECTORS---allFeatures = [];coughingFeatures = [];cryingFeatures = [];snoringFeatures = [];% ---EXTRACTION---for i=1:3for j=1:40Features = stFeatureExtraction(F(i+j-1).name, windowLength, stepLength);allFeatures = [allFeatures Features];if i == 1; coughingFeatures = [coughingFeatures Features]; endif i == 2; cryingFeatures = [cryingFeatures Features]; endif i == 3; snoringFeatures = [snoringFeatures Features]; endendend% ---FEATURES NORMALIZATION----mn = mean(allFeatures);st = std(allFeatures);allFeaturesNorm = (allFeatures - repmat(mn,size(allFeatures,1),1))./repmat(st,size(allFeatures,1),1);% ---PCA---warning('off', 'stats:pca:ColRankDefX')[coeff,score,latent,tsquared,explained] = pca(allFeaturesNorm');disp('The following results are the values of the variance of each coefficient:')explainedcounter = 0;for p=1:length(explained)if explained(p) > 80counter = counter + 1;endenddisp(['The number of coefficients offering at least 80% of variance is ', mat2str(counter)])fprintf('\n\n')% ---PCA PLOTTING---S=[]; % size of each point, empty for all equalC=[repmat([1 0 0],length(coughingFeatures),1); repmat([0 1 0],length(cryingFeatures),1); repmat([0 0 1],length(snoringFeatures),1)];scatter3(score(:,1),score(:,2),score(:,3),S,C,'filled')axis equaltitle('PCA')% –––––––––––––––––––––––TRAIN/TEST DATASET –––––––––––––––––––––––trainPerc = 0.70;testPerc = 1 - trainPerc;coughingTrain = coughingFile(1:length(coughingFile)*trainPerc);cryingTrain = cryingFile(1:length(cryingFile)*trainPerc);snoringTrain = snoringFile(1:length(snoringFile)*trainPerc);FTR = [coughingTrain cryingTrain snoringTrain];TEST DATASET NORMALISATION –––––––––––––––––––––% normalisation in time domain of TEST dataallTestTimeFeatures = allTestTimeFeatures';allTestTimeFeatures = (allTestTimeFeatures - repmat(mnTime,size(allTestTimeFeatures,1),1))./repmat(stTime,size(allTestTimeFeatures,1),1);% normalisation in frequency domain of TEST dataallTestFreqFeatures = allTestFreqFeatures';allTestFreqFeatures = (allTestFreqFeatures - repmat(mnFreq,size(allTestFreqFeatures,1),1))./repmat(stFreq,size(allTestFreqFeatures,1),1);% normalisation of both time domain and frequency domain of TEST dataallTestFeatures = allTestFeatures';allTestFeatures = (allTestFeatures - repmat(mnAll,size(allTestFeatures,1),1))./repmat(stAll,size(allTestFeatures,1),1);% –––––––––––––––––––––––––– TRAIN/TEST LABELS ––––––––––––––––––––––––––% TRAINlabelcoughingTime = repmat(1,length(coughingTrainTimeFeatures),1);labelcryingTime = repmat(2,length(cryingTrainTimeFeatures),1);labelsnoringTime = repmat(3, length(snoringTrainTimeFeatures),1);allTimeLabels = [labelcoughingTime; labelcryingTime; labelsnoringTime];labelcoughingFreq = repmat(1,length(coughingTrainFreqFeatures),1);labelcryingFreq = repmat(2,length(cryingTrainFreqFeatures),1);labelsnoringFreq = repmat(3, length(snoringTrainFreqFeatures),1);allFreqLabels = [labelcoughingFreq; labelcryingFreq; labelsnoringFreq];labelcoughingAll = repmat(1,length(coughingTrainFeatures),1);labelcryingAll = repmat(2,length(cryingTrainFeatures),1);labelsnoringAll = repmat(3, length(snoringTrainFeatures),1);allLabels = [labelcoughingAll; labelcryingAll; labelsnoringAll];% ––––––––––––––––––––––––––– APPLY TEST LABELS ––––––––––––––––––––––––––testLabelcoughingTime = repmat(1,length(coughingTestTimeFeatures),1);testLabelcryingTime = repmat(2,length(cryingTestTimeFeatures),1);testLabelsnoringTime = repmat(3, length(snoringTestTimeFeatures),1);groundTruthTime = [testLabelcoughingTime; testLabelcryingTime; testLabelsnoringTime];testLabelcoughingFreq = repmat(1,length(coughingTestFreqFeatures),1);testLabelcryingFreq = repmat(2,length(cryingTestFreqFeatures),1);testLabelsnoringFreq = repmat(3, length(snoringTestFreqFeatures),1);groundTruthFreq = [testLabelcoughingFreq; testLabelcryingFreq; testLabelsnoringFreq];testLabelcoughingAll = repmat(1,length(coughingTestFeatures),1);testLabelcryingAll = repmat(2,length(cryingTestFeatures),1);testLabelsnoringAll = repmat(3, length(snoringTestFeatures),1);allGroundTruth = [testLabelcoughingAll; testLabelcryingAll; testLabelsnoringAll];% –––––––––––––––––––––––––––– KNN –––––––––––––––––––––––––––fprintf('––––––––––––––––––––––––––– COMPUTING THE KNN ––––––––––––––––––––––––––––\n\n')fprintf('Computing the recognition rate using the following values for k: 1, 2, 3, 5, 7, 8, 10, 15, 20, 50, 100, 200...\n\n')%TIMEKNN_calculation(allTrainTimeFeatures, allTestTimeFeatures, allTimeLabels, groundTruthTime, testLabelcoughingTime, testLabelcryingTime, testLabelsnoringTime, 'TIME DOMAIN', '-pm')%FREQKNN_calculation(allTrainFreqFeatures, allTestFreqFeatures, allFreqLabels, groundTruthFreq, testLabelcoughingFreq, testLabelcryingFreq, testLabelsnoringFreq, 'FREQUENCY DOMAIN', '-pg')%ALL-TOGETHERKNN_calculation(allTrainFeatures, allTestFeatures, allLabels, allGroundTruth, testLabelcoughingAll, testLabelcryingAll, testLabelsnoringAll, 'TIME AND FREQUENCY DOMAIN', '-pr')

⛳️ 运行结果

🔗 参考文献

[1]王心醉.人脸识别算法在ATM上的应用研究[J]. 2009.DOI:http://159.226.165.120//handle/181722/1057.

🎈 部分理论引用网络文献,若有侵权联系博主删除

🎁 关注我领取海量matlab电子书和数学建模资料

👇 私信完整代码和数据获取及论文数模仿真定制

1 各类智能优化算法改进及应用

生产调度、经济调度、装配线调度、充电优化、车间调度、发车优化、水库调度、三维装箱、物流选址、货位优化、公交排班优化、充电桩布局优化、车间布局优化、集装箱船配载优化、水泵组合优化、解医疗资源分配优化、设施布局优化、可视域基站和无人机选址优化、背包问题、 风电场布局、时隙分配优化、 最佳分布式发电单元分配、多阶段管道维修、 工厂-中心-需求点三级选址问题、 应急生活物质配送中心选址、 基站选址、 道路灯柱布置、 枢纽节点部署、 输电线路台风监测装置、 集装箱船配载优化、 机组优化、 投资优化组合、云服务器组合优化、 天线线性阵列分布优化

2 机器学习和深度学习方面

2.1 bp时序、回归预测和分类

2.2 ENS声神经网络时序、回归预测和分类

2.3 SVM/CNN-SVM/LSSVM/RVM支持向量机系列时序、回归预测和分类

2.4 CNN/TCN卷积神经网络系列时序、回归预测和分类

2.5 ELM/KELM/RELM/DELM极限学习机系列时序、回归预测和分类

2.6 GRU/Bi-GRU/CNN-GRU/CNN-BiGRU门控神经网络时序、回归预测和分类

2.7 ELMAN递归神经网络时序、回归\预测和分类

2.8 LSTM/BiLSTM/CNN-LSTM/CNN-BiLSTM/长短记忆神经网络系列时序、回归预测和分类

2.9 RBF径向基神经网络时序、回归预测和分类