
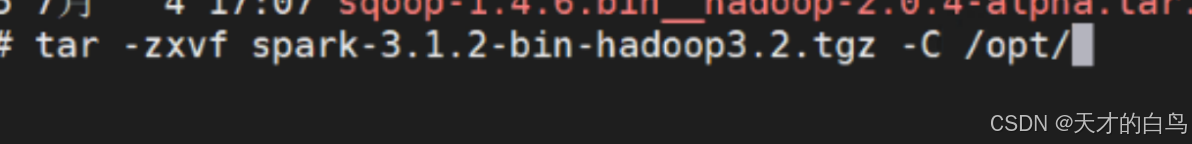

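Upload the Spark archive, extract it, and rename the extracted directory (to `spark3.1.2` under `/opt/softs`, matching `SPARK_HOME` below).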

Configure the Spark environment variables on all three machines:

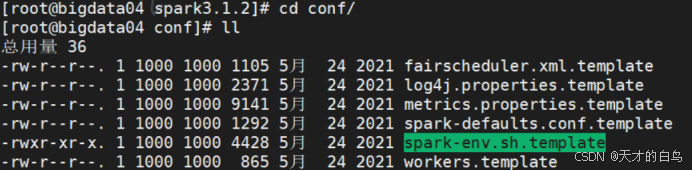

```bash
# SPARK_HOME
export SPARK_HOME=/opt/softs/spark3.1.2
export PATH=$PATH:$SPARK_HOME/bin
export PATH=$PATH:$SPARK_HOME/sbin
```

Next, modify the configuration files.
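Because the same lines go on all three machines, it can help to make the step safe to rerun. The following is a minimal sketch: `add_spark_env` is a hypothetical helper (not part of Spark), and which profile file to pass (for example `/etc/profile`) is an assumption about your setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the SPARK_HOME lines from this guide to a
# profile file, skipping the append if SPARK_HOME is already exported there.
add_spark_env() {
  local profile="$1"
  if ! grep -q '^export SPARK_HOME=' "$profile" 2>/dev/null; then
    # Quoted 'EOF' keeps $PATH and $SPARK_HOME literal in the written file.
    cat >> "$profile" <<'EOF'
# SPARK_HOME
export SPARK_HOME=/opt/softs/spark3.1.2
export PATH=$PATH:$SPARK_HOME/bin
export PATH=$PATH:$SPARK_HOME/sbin
EOF
  fi
}
```

On each node, something like `add_spark_env /etc/profile && source /etc/profile` followed by `echo "$SPARK_HOME"` should print `/opt/softs/spark3.1.2`.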

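In `conf/spark-env.sh` (created from `spark-env.sh.template`):

```bash
#!/usr/bin/env bash
# Java and Hadoop installations used by Spark
export JAVA_HOME=/opt/softs/jdk1.8.0
export HADOOP_HOME=/opt/softs/hadoop3.1.3
export HADOOP_CONF_DIR=/opt/softs/hadoop3.1.3/etc/hadoop
# Add Hadoop's jars to Spark's classpath
export SPARK_DIST_CLASSPATH=$(/opt/softs/hadoop3.1.3/bin/hadoop classpath)
# Standalone master address and port
export SPARK_MASTER_HOST=192.168.111.54
export SPARK_MASTER_PORT=7077
# History server: UI port, number of applications kept in cache, event-log location
export SPARK_HISTORY_OPTS="-Dspark.history.ui.port=18080 -Dspark.history.retainedApplications=50 -Dspark.history.fs.logDirectory=hdfs://192.168.111.54:8020/spark-eventlog"
```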
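Since `spark-env.sh` hard-codes the JDK and Hadoop paths, a quick check that they exist on every node may save a failed startup. A minimal sketch; `check_dirs` is a hypothetical helper, and the paths in the usage line are simply the ones from the file above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether each directory exists.
# Returns 1 if any of them is missing.
check_dirs() {
  local d rc=0
  for d in "$@"; do
    if [ -d "$d" ]; then
      echo "ok: $d"
    else
      echo "missing: $d"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_dirs /opt/softs/jdk1.8.0 /opt/softs/hadoop3.1.3 /opt/softs/hadoop3.1.3/etc/hadoop`. Running `/opt/softs/hadoop3.1.3/bin/hadoop classpath` once by hand is also worthwhile, since `SPARK_DIST_CLASSPATH` is filled from its output.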

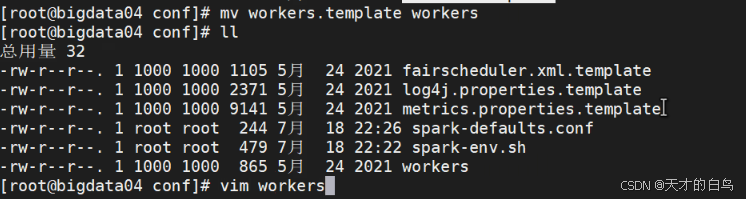

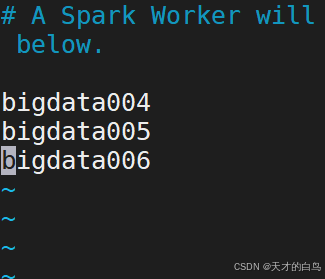

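In `conf/spark-defaults.conf`:

```
spark.master              spark://192.168.111.54:7077
spark.eventLog.enabled    true
spark.eventLog.dir        hdfs://192.168.111.54:8020/spark-eventlog
spark.serializer          org.apache.spark.serializer.KryoSerializer
spark.driver.memory       2g
spark.eventLog.compress   true
```

`spark.eventLog.dir` points at the same HDFS directory as `spark.history.fs.logDirectory` in `spark-env.sh`, so the history server reads the logs that applications write.

Then list the worker hostnames in `conf/workers`, one per line.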
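The `conf/workers` file is just one hostname per line, so it can also be written from a script. A minimal sketch: `write_workers` is hypothetical, and of the hostnames in the usage line only `bigdata005` appears in this guide; `bigdata006` is a placeholder for your other node.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write conf/workers with one hostname per line.
# Usage: write_workers <spark-conf-dir> <host>...
write_workers() {
  local conf_dir="$1"
  shift
  if [ "$#" -eq 0 ]; then
    echo "usage: write_workers <conf-dir> <host>..." >&2
    return 1
  fi
  printf '%s\n' "$@" > "$conf_dir/workers"
}
```

For example: `write_workers /opt/softs/spark3.1.2/conf bigdata005 bigdata006`.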

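Copy the configured Spark directory to the other virtual machines (run from `/opt/softs`, and repeat for each node):

```bash
scp -r spark3.1.2/ root@bigdata005:/opt/softs/
```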
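With more than one target, the same `scp` can be looped over the hostnames. A minimal sketch: `distribute_spark` and the `DRY_RUN` switch are hypothetical, and passwordless SSH as `root` is assumed, as in the command above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the configured Spark directory to each host given,
# mirroring the scp command above. Set DRY_RUN=1 to only print the commands.
distribute_spark() {
  local src=/opt/softs/spark3.1.2 dest=/opt/softs/ host
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $src root@$host:$dest"
    else
      scp -r "$src" "root@$host:$dest" || return 1
    fi
  done
}
```

For example, `DRY_RUN=1 distribute_spark bigdata005` prints the command without copying; drop `DRY_RUN` to actually copy.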

Start HDFS (Hadoop's `start-dfs.sh`).
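With event logging enabled, Spark applications and the history server expect the event-log directory to already exist in HDFS, so it is worth creating it once HDFS is up. A minimal sketch using the standard `hdfs dfs -test -d` and `hdfs dfs -mkdir -p` commands; the helper name `ensure_eventlog_dir` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make sure the Spark event-log directory exists in HDFS.
# The default matches spark.eventLog.dir / spark.history.fs.logDirectory in this guide.
ensure_eventlog_dir() {
  local dir="${1:-hdfs://192.168.111.54:8020/spark-eventlog}"
  if hdfs dfs -test -d "$dir"; then
    echo "exists: $dir"
  else
    hdfs dfs -mkdir -p "$dir" && echo "created: $dir"
  fi
}
```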

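Start Spark.

The start script is in `/opt/softs/spark3.1.2/sbin`; run it from that directory:

```bash
sh start-all.sh
```

Running it from Spark's own `sbin` directory matters because Hadoop also ships a `start-all.sh`, and with both on the `PATH` a bare `start-all.sh` may resolve to Hadoop's.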

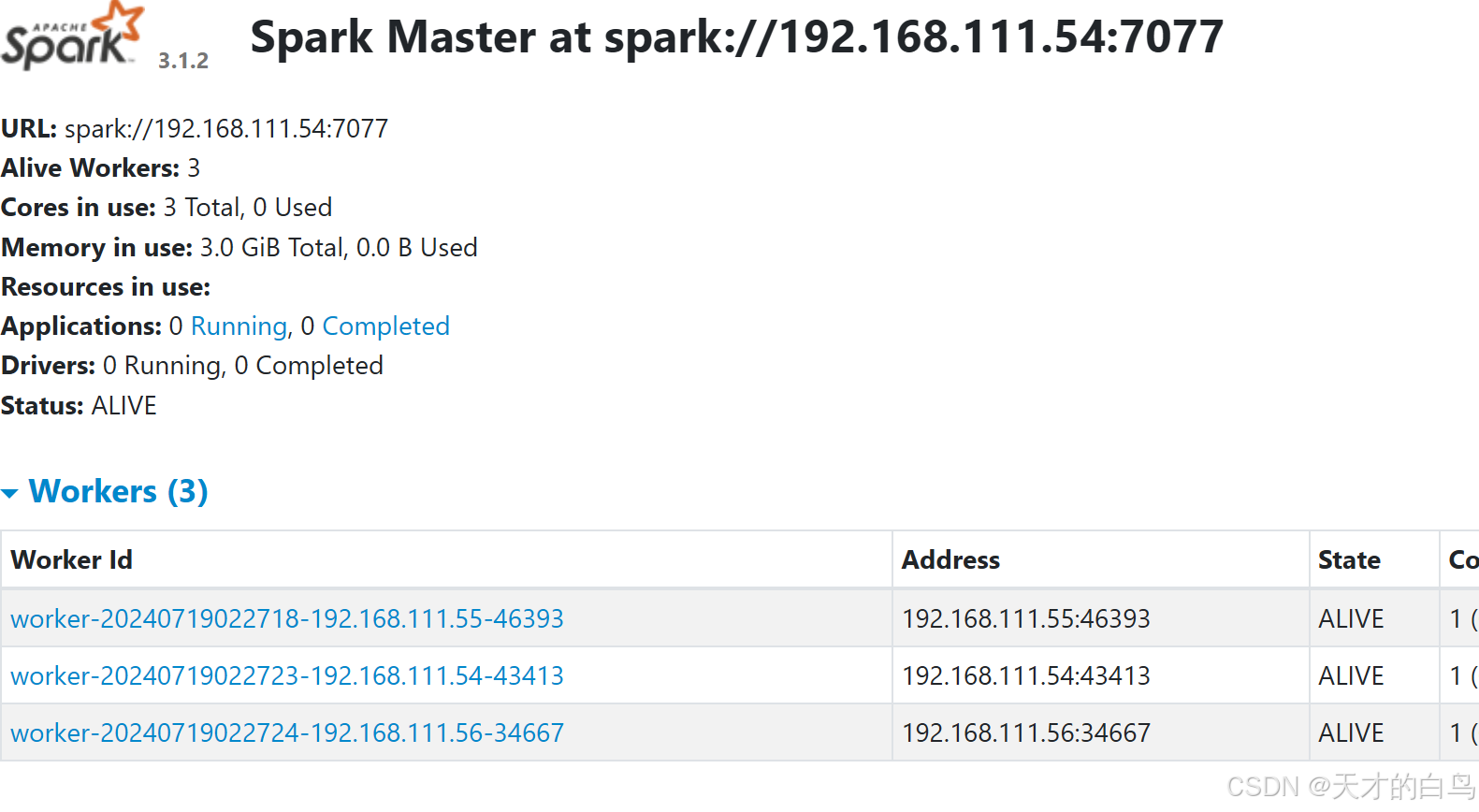

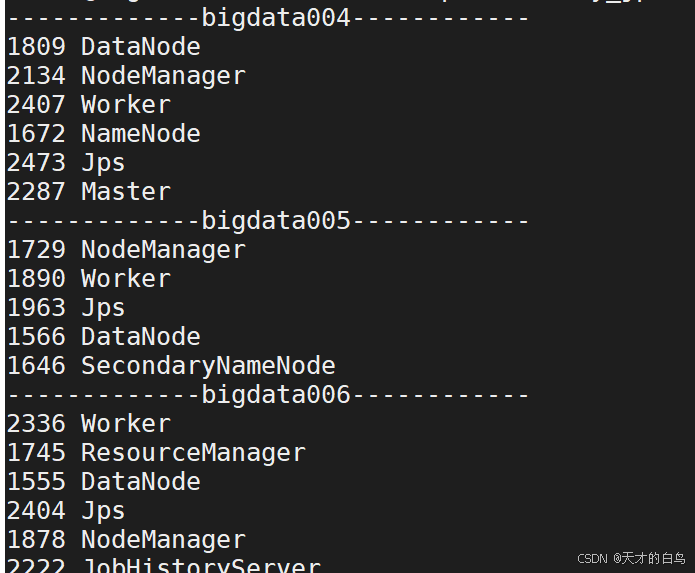

The cluster started successfully.
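Success can be checked with `jps` on each node: the master should list a `Master` process (plus `Worker` if it is also in `conf/workers`), and each worker node a `Worker` process. A minimal sketch; `check_spark_daemons` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that the named JVM daemons appear in `jps` output.
# Returns 1 if any expected daemon is not running.
check_spark_daemons() {
  local running d missing=0
  running=$(jps | awk '{print $2}')
  for d in "$@"; do
    if grep -qx "$d" <<<"$running"; then
      echo "running: $d"
    else
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_spark_daemons Master` on `192.168.111.54` and `check_spark_daemons Worker` on the worker nodes.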

To shut the cluster down, run `sh stop-all.sh` from the same `sbin` directory.

Web UI of the master:

http://192.168.111.54:8080
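Whether the UI is reachable can also be probed from the command line. A minimal sketch, assuming `curl` is installed; `check_ui` is a hypothetical helper. If the history server configured in `spark-env.sh` is started with `sbin/start-history-server.sh`, its UI should answer on port 18080 in the same way.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether a web UI answers with HTTP 200.
check_ui() {
  local url="$1" code
  # curl prints 000 when it cannot connect; the status is still captured.
  code=$(curl -s -o /dev/null -w '%{http_code}' "$url") || true
  if [ "$code" = "200" ]; then
    echo "up: $url"
  else
    echo "down ($code): $url"
    return 1
  fi
}
```

For example: `check_ui http://192.168.111.54:8080` and, once the history server is running, `check_ui http://192.168.111.54:18080`.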