
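The logic works in three steps. First it checks whether a face is present, then it checks whether the user opens their mouth, and finally it captures a photo and sends it to the backend to be matched against the stored face data. A project at my job needed attendance check-in, and the front end had to perform liveness detection, so I came up with this simple method that implements the whole flow purely in the browser. Its accuracy is limited, of course; for a better implementation you still need a good detection model running on the backend.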
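The three steps form a small state machine. Below is a minimal, framework-free sketch of that flow. It assumes the detectors produce one observation per video frame, made of two booleans (`faceFound`, `mouthOpened`). The names `createLivenessFlow`, `step` and the stage strings are hypothetical and do not come from tracking.js or face-api.js.

```javascript
// Hypothetical sketch of the flow: no face, then wait for the mouth, then capture.
const Stage = Object.freeze({
  NO_FACE: "noFace",
  WAIT_MOUTH: "waitMouth",
  CAPTURED: "captured",
});

function createLivenessFlow() {
  let stage = Stage.NO_FACE;
  return {
    get stage() {
      return stage;
    },
    // Feed one observation per frame. Returns true exactly once, on the frame
    // where the photo should be taken and uploaded.
    step({ faceFound, mouthOpened }) {
      if (stage === Stage.NO_FACE && faceFound) {
        stage = Stage.WAIT_MOUTH; // face found: start asking for the mouth action
        return false;
      }
      if (stage === Stage.WAIT_MOUTH && mouthOpened) {
        stage = Stage.CAPTURED; // action seen: capture once, then ignore later frames
        return true;
      }
      return false;
    },
  };
}

// Example: the face appears on the second frame, the mouth opens on the third.
const flow = createLivenessFlow();
const frames = [
  { faceFound: false, mouthOpened: false },
  { faceFound: true, mouthOpened: false },
  { faceFound: true, mouthOpened: true },
  { faceFound: true, mouthOpened: true },
];
const captureFrames = frames.map((f) => flow.step(f));
console.log(flow.stage, captureFrames);
```

In the component, the `faceflag` ref and the threshold check inside `isOpenMouth` fill the roles of the two transitions.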

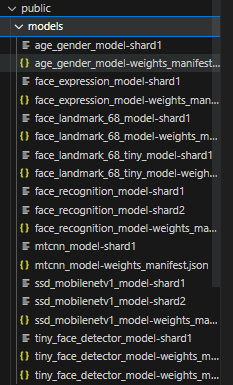

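First download tracking.js, face.js (the tracking.js face classifier) and face-api.js (this one can simply be installed with `npm install face-api.js`). Then put the static resources, namely the face-api.js model files, under `public/models`.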

The HTML (prompt text, video, canvas) is shown below. The CSS is omitted, so design the styles separately:

```html
<div class="content_video">
  <div class="face" :class="{ borderColor: borderColor }">
    <p class="tip" style="font-size: 24px; color: blue; text-align: center;">{{ tip }}</p>
    <div class="face-meiti" id="container">
      <video ref="video" preload autoplay loop muted playsinline :webkit-playsinline="true"></video>
      <canvas ref="canvas"></canvas>
    </div>
  </div>
</div>
```

Face detection: this part uses tracking.js and face.js. Once a face is detected, the page moves on to the facial action detection stage.
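The face-detection stage only needs two decisions: which rectangle counts as "the face", and making sure the switch to the mouth check happens once. Below is a minimal sketch of both as plain functions. It assumes each tracking.js `track` event carries `event.data` as an array of `{ x, y, width, height }` rectangles. `pickLargestFace` and `createFaceGate` are hypothetical helpers. Choosing the largest rectangle is a substitution for the original code, which simply takes the first one.

```javascript
// Pick the largest rectangle, which is usually the face closest to the camera.
function pickLargestFace(rects) {
  if (!Array.isArray(rects) || rects.length === 0) return null;
  return rects.reduce((best, r) =>
    r.width * r.height > best.width * best.height ? r : best
  );
}

// Wrap a callback so it runs only on the first frame that contains a face,
// the same one-shot behaviour the faceflag ref provides in the component.
function createFaceGate(onFirstFace) {
  let fired = false;
  return function handleRects(rects) {
    const face = pickLargestFace(rects);
    if (!face) return "noFace";
    if (!fired) {
      fired = true;
      onFirstFace(face);
    }
    return "face";
  };
}

// Usage with hand-written rectangles standing in for tracking.js output.
const seen = [];
const handle = createFaceGate((face) => seen.push(face));
handle([]);
handle([
  { x: 0, y: 0, width: 40, height: 40 },
  { x: 50, y: 10, width: 120, height: 120 },
]);
handle([{ x: 60, y: 20, width: 110, height: 110 }]);
console.log(seen.length, seen[0].width);
```

In the page this would be wired up as `tracker.on("track", (event) => handle(event.data))`, with the callback setting the tip text and starting the mouth check.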

```javascript
import { ref, reactive, computed } from "vue";
import { useStore } from "vuex";
// import { useRouter } from "vue-router";
// toast() is the project's own message helper and is not shown here.

const store = useStore();
const borderColor = ref(false);
const show = ref(false);
const isIndex = ref(true);
const isPhoto = ref(false);
const videoEl = ref();
const localStream = ref();
const timer = ref();
const options = ref([]);
const showPicker = ref(false);
const form = reactive({ workerName: "", programName: "", programId: "" });
const vedioOpen = ref(true);
const reJiance = ref(false);
const state = reactive({
  options: "",
  nose_x_anv: "",
  nose_y_anv: "",
  mouth_Y: "",
  action: "open mouth",
  isCheck: true,
});

// Face detection
const tip = ref("Please face the camera");
const mediaStreamTrack = ref();
const video = ref(); // <video> element
const trackerTask = ref(); // tracking.js TrackerTask
const uploadLock = true; // upload lock
const faceflag = ref(false); // set once a face is found, then the mouth check starts
const src = ref();
const getUserMedia = ref("");
const canvas = ref();
const LorR = computed(() => store.state.UserInfo.workerName != null);
const selectPeople = computed(() => store.state.SalarySelectPeople);

// Entry point of the face-detection stage
const init = async () => {
  toast("Requesting camera permission", "info");
  vedioOpen.value = false;
  initTracker();
};

const initTracker = () => {
  // Standard tracking.js setup for the built-in "face" classifier (face.js)
  const tracker = new window.tracking.ObjectTracker("face");
  tracker.setInitialScale(4);
  tracker.setStepSize(2);
  tracker.setEdgesDensity(0.1);
  // camera: true makes tracking.js open the camera and stream it into the <video>
  trackerTask.value = window.tracking.track(video.value, tracker, { camera: true });
  tracker.on("track", (event) => {
    if (event.data.length === 0) {
      if (!faceflag.value) tip.value = "No face detected";
      return;
    }
    // Only the first face is used, and only once (acts as a debounce)
    const rect = event.data[0];
    if (!faceflag.value) {
      faceflag.value = true;
      tip.value = "Please open your mouth";
      borderColor.value = true; // change the border colour as a visual cue
      initHuoti(rect); // start the facial action (liveness) check
    }
  });
};
```

Facial action detection:
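The mouth check in this stage (`isOpenMouth`) treats a change of more than 10 px in the mouth's vertical extent between two frames as an opened mouth, so its behaviour depends on how large the face appears in the frame. A widely used scale-invariant alternative is the mouth aspect ratio (MAR): the sum of three inner-lip vertical distances divided by twice the inner-lip width. Below is a minimal sketch of it. It assumes face-api.js `getMouth()` returns points 48 to 67 of the 68-point model in order, so the inner lip ring (60 to 67) sits at array positions 12 to 19. `mouthAspectRatio`, `isMouthOpen`, `fakeMouth` and the 0.3 threshold are illustrative choices, not part of face-api.js.

```javascript
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y);
}

// MAR on the 20 mouth landmarks (assumed to be 68-point indices 48..67).
function mouthAspectRatio(mouth) {
  if (mouth.length !== 20) throw new Error("expected 20 mouth landmarks");
  const p = (i) => mouth[i - 48]; // map a 68-point index to the array position
  const vertical =
    distance(p(61), p(67)) + distance(p(62), p(66)) + distance(p(63), p(65));
  const horizontal = distance(p(60), p(64));
  return vertical / (2 * horizontal);
}

// Assumed threshold; tune it on your own cameras and users.
function isMouthOpen(mouth, threshold = 0.3) {
  return mouthAspectRatio(mouth) > threshold;
}

// Synthetic landmarks: the outer lip points do not matter here, so they stay at
// the origin; the inner ring is a 40 px wide mouth opened by `gap` px.
function fakeMouth(gap) {
  const pts = Array.from({ length: 20 }, () => ({ x: 0, y: 0 }));
  const inner = [
    { x: 0, y: 0 }, // 60: left inner corner
    { x: 10, y: -gap / 2 }, // 61
    { x: 20, y: -gap / 2 }, // 62
    { x: 30, y: -gap / 2 }, // 63
    { x: 40, y: 0 }, // 64: right inner corner
    { x: 30, y: gap / 2 }, // 65
    { x: 20, y: gap / 2 }, // 66
    { x: 10, y: gap / 2 }, // 67
  ];
  inner.forEach((pt, i) => (pts[12 + i] = pt));
  return pts;
}

console.log(mouthAspectRatio(fakeMouth(2)), mouthAspectRatio(fakeMouth(20)));
```

Because MAR compares distances within the same face, `isMouthOpen(landmarks.getMouth())` gives roughly the same answer whether the user stands near or far from the camera. The threshold is a guess rather than a measured value.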

```javascript
import * as faceApi from "face-api.js";

const jiance = ref(); // id of the capture interval

const initHuoti = async (rect) => {
  await faceApi.nets.tinyFaceDetector.loadFromUri("/models");
  await faceApi.loadFaceLandmarkModel("/models");
  state.options = new faceApi.TinyFaceDetectorOptions({ inputSize: 320, scoreThreshold: 0.4 });
  // Grab a frame from the camera every 400 ms
  jiance.value = setInterval(() => {
    const context = canvas.value.getContext("2d", { willReadFrequently: true });
    // Resizing a canvas clears it, so resize before drawing anything
    canvas.value.width = video.value.videoWidth;
    canvas.value.height = video.value.videoHeight;
    context.drawImage(video.value, 0, 0, canvas.value.width, canvas.value.height);
    const base64Img = canvas.value.toDataURL("image/jpeg");
    // Outline the tracking.js face box after the snapshot so it is not uploaded
    context.strokeStyle = "#a64ceb";
    context.strokeRect(rect.x, rect.y, rect.width, rect.height);
    face_test(base64Img);
  }, 400);
};

// Run face-api.js on the current frame and read the 68 facial landmarks
const face_test = async (base64Img) => {
  const detections1 = await faceApi
    .detectSingleFace(canvas.value, state.options)
    .withFaceLandmarks();
  if (!detections1) return;
  const landmarks = detections1.landmarks;
  // Also available: getJawOutline(), getNose(), getLeftEye(), getRightEye(),
  // getLeftEyeBrow(), getRightEyeBrow()
  const mouth = landmarks.getMouth();
  // Hand the mouth points to the check that decides whether the mouth opened
  isOpenMouth(mouth, base64Img);
  const resizedDetections = faceApi.resizeResults(detections1, { width: 280, height: 280 });
  faceApi.draw.drawDetections(canvas.value, resizedDetections);
};

// Mouth-open check; its accuracy depends entirely on this rule
const isOpenMouth = (mouth, base64Img) => {
  if (!state.isCheck) return; // a photo has already been taken
  const mouth_Y_list = mouth.map((item) => item.y);
  const _y = Math.max(...mouth_Y_list) - Math.min(...mouth_Y_list);
  if (state.mouth_Y === "") {
    state.mouth_Y = _y;
  }
  // A change of more than 10 px from the previous frame counts as an opened mouth
  if (Math.abs(state.mouth_Y - _y) > 10) {
    state.isCheck = false;
    clearInterval(jiance.value);
    toast("Check passed, taking photo", "info", 1000);
    tip.value = "Taking photo, please hold still";
    borderColor.value = false;
    uploadimg(base64Img); // upload the photo (uploadimg is not shown here)
  }
  state.mouth_Y = _y;
};
```

A better way to open the camera is to modify the `tracking.initUserMedia_` method in tracking.js so that it stores the resulting `MediaStream` in `window.stream`, which makes it easy to close the camera later when leaving the page.
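Storing the stream on `window` is the simplest option. If you would rather avoid the global, a small module-level holder does the same job. Below is a minimal sketch. `rememberStream`, `stopRememberedStream` and `fakeStream` are hypothetical names. The holder relies only on the standard `MediaStream.getTracks()` and `MediaStreamTrack.stop()`, and the usage uses a plain object of the same shape so it runs outside a browser.

```javascript
// Hypothetical replacement for the window.stream global.
let activeStream = null;

function rememberStream(stream) {
  stopRememberedStream(); // release any previous camera before keeping a new one
  activeStream = stream;
  return stream;
}

// Stop every track (camera and, if requested, microphone) and forget the stream.
// Returns how many tracks were stopped.
function stopRememberedStream() {
  if (!activeStream) return 0;
  const tracks = activeStream.getTracks();
  tracks.forEach((track) => track.stop());
  activeStream = null;
  return tracks.length;
}

// Stand-in with the same shape as a MediaStream, for running outside a browser.
function fakeStream(kinds) {
  const tracks = kinds.map((kind) => ({
    kind,
    readyState: "live",
    stop() {
      this.readyState = "ended";
    },
  }));
  return { getTracks: () => tracks };
}

const s = rememberStream(fakeStream(["video"]));
const stopped = stopRememberedStream();
console.log(stopped, s.getTracks()[0].readyState, stopRememberedStream());
```

In the browser you would call `rememberStream(stream)` in the `getUserMedia` success callback and `stopRememberedStream()` when the page is unmounted.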

```javascript
// List the available media devices (useful for debugging)
function getMediaDevices() {
  if (!navigator.mediaDevices || !navigator.mediaDevices.enumerateDevices) {
    console.log("mediaDevices.enumerateDevices() is not supported; no media devices detected!");
    return;
  }
  navigator.mediaDevices
    .enumerateDevices()
    .then((devices) => {
      devices.forEach((device) => {
        switch (device?.kind) {
          case "audioinput":
            console.log("Audio input device (microphone):", device);
            break;
          case "audiooutput":
            console.log("Audio output device (speaker):", device);
            break;
          case "videoinput":
            console.log("Video input device (camera):", device);
            break;
          default:
            console.log("Other available media device:", device);
            break;
        }
      });
    })
    .catch((err) => console.error(err));
}

tracking.initUserMedia_ = function (element, opt_options) {
  getMediaDevices();
  // audio and video both default to false. Only request a device that really exists
  // (true or a constraints object), otherwise getUserMedia fails with
  // "DOMException: Requested device not found". The microphone is requested only
  // if the caller asks for it; the liveness check itself does not need one.
  const options = {
    audio: !!(opt_options && opt_options.audio),
    video: true, // { facingMode: "user" } would prefer the front camera
  };
  const onStream = (stream) => {
    element.srcObject = stream;
    window.stream = stream; // keep the stream so the page can stop it later
  };
  const onError = (err) =>
    console.error("Failed to access media devices: permission denied or no device found!", err);
  try {
    if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {
      // Current standard API
      navigator.mediaDevices.getUserMedia(options).then(onStream).catch(onError);
      return;
    }
    // Legacy, vendor-prefixed API as a fallback
    const legacyGetUserMedia =
      navigator.getUserMedia ||
      navigator.webkitGetUserMedia ||
      navigator.mozGetUserMedia ||
      navigator.msGetUserMedia;
    if (legacyGetUserMedia) {
      legacyGetUserMedia.call(navigator, options, onStream, onError);
    } else if (!location.origin.startsWith("https://")) {
      console.error("Hint: try again on localhost or on a web server served over https!");
    }
  } catch (error) {
    console.error("Failed to access media devices:", error);
  }
};

// Call this on the page that uses the camera to close it
const stopMediaStreamTrack = function () {
  if (window.stream) {
    window.stream.getTracks().forEach((track) => track.stop());
    window.stream = null;
  }
  if (trackerTask.value) trackerTask.value.stop(); // stop tracking.js's frame loop too
  clearInterval(jiance.value);
};
```
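The comment in `initUserMedia_` warns that requesting a device the machine does not have makes `getUserMedia` fail. One way to follow that advice is to build the constraints from the list that `navigator.mediaDevices.enumerateDevices()` resolves to, instead of hard-coding them. Below is a minimal sketch. `countDevicesByKind`, `buildConstraints` and the `wantAudio` option are hypothetical. `facingMode: "user"` is only a preference for the front camera, not a requirement.

```javascript
// Count devices per kind in an enumerateDevices()-style array.
function countDevicesByKind(devices) {
  const counts = { audioinput: 0, audiooutput: 0, videoinput: 0 };
  for (const device of devices) {
    if (device && Object.prototype.hasOwnProperty.call(counts, device.kind)) {
      counts[device.kind] += 1;
    }
  }
  return counts;
}

// Request audio/video only when a matching input device exists.
function buildConstraints(devices, { wantAudio = false } = {}) {
  const counts = countDevicesByKind(devices);
  return {
    audio: wantAudio && counts.audioinput > 0,
    video: counts.videoinput > 0 ? { facingMode: "user" } : false,
  };
}

// Sample data shaped like enumerateDevices() output.
const sample = [
  { kind: "audioinput", deviceId: "default", label: "" },
  { kind: "videoinput", deviceId: "cam1", label: "" },
  { kind: "audiooutput", deviceId: "spk", label: "" },
];
console.log(countDevicesByKind(sample), buildConstraints(sample));
```

In the browser, check that `constraints.video` is not `false` before calling `getUserMedia(constraints)`. If there is no camera, tell the user instead, because a request with both `audio` and `video` set to `false` is rejected.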