
https://github.com/fenglinglwb/mat


Base image

    docker run -it -p 7898:7860 --gpus device=3 kevinchina/deeplearning:pytorch2.3.0-cuda12.1-cudnn8-devel-xformers bash
    git clone https://github.com/fenglinglwb/MAT.git
    cd MAT/
    pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple
    apt-get update && apt-get install ffmpeg libsm6 libxext6 -y

After this series of steps we have an environment image, with FFHQ_512.pkl inside it:

    docker push kevinchina/deeplearning:pytorch2.3.0-cuda12.1-cudnn8-devel-mat

This image can be used to try out inpainting:

    docker run -it -p 7898:7860 --gpus device=3 kevinchina/deeplearning:pytorch2.3.0-cuda12.1-cudnn8-devel-mat bash

Run inpainting:

    cd /workspace/MAT
    python generate_image.py --network pretrained/FFHQ_512.pkl --dpath images --mpath masks --outdir samples

Original image:

Mask image:

Result with the masked content removed:

fastapi

An image with some FastAPI dependencies installed:

    kevinchina/deeplearning:pytorch2.3.0-cuda12.1-cudnn8-devel-mat-apibase

Then write a Dockerfile on top of it:

    FROM kevinchina/deeplearning:pytorch2.3.0-cuda12.1-cudnn8-devel-mat-apibase
    EXPOSE 7860
    ENTRYPOINT cd /workspace/MAT/ && python /workspace/MAT/mianfastapi.py

Build:

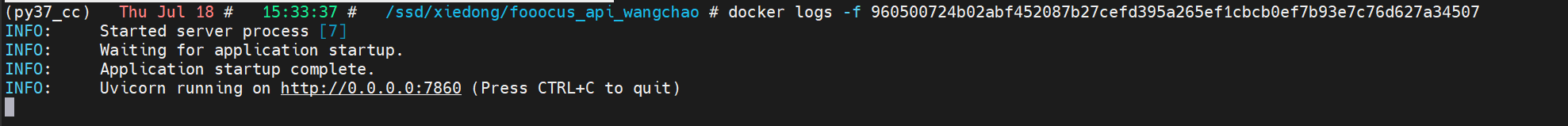

    docker build -f Dockerfile1 . -t kevinchina/deeplearning:pytorch2.3.0-cuda12.1-cudnn8-devel-mat-api

Running this image is all it takes to start the service:

    docker run -d -p 7898:7860 --gpus device=3 kevinchina/deeplearning:pytorch2.3.0-cuda12.1-cudnn8-devel-mat-api
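The `-p 7898:7860` flag publishes container port 7860 on host port 7898, so clients talk to port 7898. Since the container runs detached, it can help to wait until that port accepts connections before sending images. The following is a minimal sketch using only the standard library; the helper name `wait_for_port` is hypothetical and not part of MAT or the image. An open port only shows that something accepts TCP connections. It does not prove the model has finished loading.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError if the port refuses or times out.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For the setup in this post, a call such as `wait_for_port("10.136.19.26", 7898, timeout=120)` would be the natural check; replace the host with your own machine's address.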

Summary

Start the service:

    docker run -d -p 7898:7860 --gpus device=3 kevinchina/deeplearning:pytorch2.3.0-cuda12.1-cudnn8-devel-mat-api

Call the service:

    import requests

    url = "http://10.136.19.26:7898/inpaint"
    image_path = "image.jpg"
    mask_path = "mask.jpg"

    # Read the image and mask files; they must stay open while the request is sent
    with open(image_path, "rb") as img_file, open(mask_path, "rb") as mask_file:
        files = {
            "image": img_file,
            "mask": mask_file
        }
        # Send the POST request
        response = requests.post(url, files=files)

    # Check the response status code
    if response.status_code == 200:
        # Save the generated image
        with open("output.png", "wb") as out_file:
            out_file.write(response.content)
        print("Generated image saved as output.png")
    else:
        print(f"Request failed, status code: {response.status_code}")
        print(response.text)

Request code for batch watermark removal over a set of images

    import io
    import json
    import os
    import traceback

    import cv2
    import numpy as np
    import requests
    from PIL import Image
    from tqdm import tqdm


    def listPathAllfiles(dirname):
        """Recursively collect the paths of all files under dirname."""
        result = []
        for maindir, subdir, file_name_list in os.walk(dirname):
            for filename in file_name_list:
                result.append(os.path.join(maindir, filename))
        return result


    url = "http://10.136.19.26:7898/inpaint"
    src = r"/ssd/xiedong/xiezhenceshi/xiezhen_datasets"
    save_img_dst_output_inpaint_alpha = r"/ssd/xiedong/xiezhenceshi/inpaint_alpha"
    os.makedirs(save_img_dst_output_inpaint_alpha, exist_ok=True)

    files = listPathAllfiles(src)
    files.sort()
    files = [file for file in files if file.endswith(".jpg")]

    for src_image_file in tqdm(files):
        try:
            # The OCR result sits next to each image, with a .json extension
            ocr_ret_file = src_image_file.replace(".jpg", ".json")
            output_image_file_alpha = src_image_file.replace(src, save_img_dst_output_inpaint_alpha)
            if not os.path.exists(ocr_ret_file):
                print(f"ocr_ret_file not exists: {ocr_ret_file}")
                continue
            if os.path.exists(output_image_file_alpha):
                print(f"output_image_file_alpha exists: {output_image_file_alpha}")
                continue
            output_image_file_alpha_father = os.path.dirname(output_image_file_alpha)
            os.makedirs(output_image_file_alpha_father, exist_ok=True)

            # Build the mask locally from the OCR boxes
            with open(ocr_ret_file, "r", encoding="utf-8") as f:
                ocr_json_data = json.load(f)
            image = cv2.imread(src_image_file)
            # Skip unreadable images and images too small for a 512x512 crop
            if image is None or min(image.shape[:2]) < 512:
                print(f"image unreadable or smaller than 512x512: {src_image_file}")
                continue

            # Keep only the central 512x512 region of the image
            top = image.shape[0] // 2 - 256
            left = image.shape[1] // 2 - 256
            image_zitu = image[top:top + 512, left:left + 512]

            mask = np.zeros(image.shape, dtype=np.uint8)
            for item in ocr_json_data:
                box = item[0]  # polygon of one OCR text region
                cv2.fillPoly(mask, np.array([box], dtype=np.int32), (255, 255, 255))
            # Keep only the central 512x512 region of the mask
            mask_zitu = mask[top:top + 512, left:left + 512]
            # Invert the mask: text regions become black (0), everything else white (255)
            mask_zitu = cv2.bitwise_not(mask_zitu)

            src_image_file_rb = cv2.imencode('.jpg', image_zitu)[1].tobytes()
            mask_file_rb = cv2.imencode('.jpg', mask_zitu)[1].tobytes()
            # Named payload so it does not shadow the list of files being iterated
            payload = {
                "image": src_image_file_rb,
                "mask": mask_file_rb
            }
            # Send the POST request
            response = requests.post(url, files=payload)

            # Check the response status code
            if response.status_code == 200:
                image_inpaint = Image.open(io.BytesIO(response.content)).convert('RGB')
                image_inpaint_cv2 = cv2.cvtColor(np.array(image_inpaint), cv2.COLOR_RGB2BGR)
                # Paste the inpainted crop back into the original image
                image[top:top + 512, left:left + 512] = image_inpaint_cv2
                cv2.imwrite(output_image_file_alpha, image)
            else:
                print(f"Request failed, status code: {response.status_code}")
                print(response.text)
        except Exception:
            traceback.print_exc()

Impressions

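Not good. Traditional inpainting like this is basically poor: the results are not good enough to put into production. For generation, StableDiffusion is still the way to go, but StableDiffusion is slow. If LCM and a small SD model could be put to use here, that would be very nice.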
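A side note on the batch script above: it sends only the central 512×512 region of each image and pastes the result back at the same position. That index arithmetic can be isolated in a small helper, which also makes the failure case for undersized images explicit. This is a sketch under the assumption that a fixed centered crop is what you want; the name `center_crop_box` is hypothetical.

```python
def center_crop_box(height: int, width: int, size: int = 512):
    """Return (top, bottom, left, right) of a centered size x size crop.

    Raises ValueError if the image is smaller than the crop in either dimension.
    """
    if height < size or width < size:
        raise ValueError(f"image {width}x{height} is smaller than the {size}x{size} crop")
    top = height // 2 - size // 2
    left = width // 2 - size // 2
    return top, top + size, left, left + size


# Usage with an OpenCV/NumPy image (shape is height, width, channels):
#   top, bottom, left, right = center_crop_box(*image.shape[:2])
#   crop = image[top:bottom, left:right]
#   image[top:bottom, left:right] = inpainted_crop   # paste back
```

Using the same box for the crop, the mask, and the paste-back keeps the three slices from drifting apart.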