阅读量:3

背景

机器翻译具有悠长的发展历史,目前主流的机器翻译方法为神经网络翻译,如LSTM和transformer。在特定领域或行业中,由于机器翻译难以保证术语的一致性,导致翻译效果还不够理想。对于术语名词、人名地名等机器翻译不准确的结果,可以通过术语词典进行纠正,避免了混淆或歧义,最大限度提高翻译质量。

任务

基于术语词典干预的机器翻译挑战赛选择以英文为源语言,中文为目标语言的机器翻译。本次大赛除英文到中文的双语数据,还提供英中对照的术语词典。参赛队伍需要基于提供的训练数据样本从多语言机器翻译模型的构建与训练,并基于测试集以及术语词典,提供最终的翻译结果。

Baseline代码解读

首先导入相应的包

import torch import torch.nn as nn import torch.optim as optim from torch.utils.data import Dataset, DataLoader from torchtext.data.utils import get_tokenizer from collections import Counter import random from torch.utils.data import Subset, DataLoader import time 随后定义数据集、Decoder类、Encoder类、Seq2seq类

# 定义数据集类 # 修改TranslationDataset类以处理术语 class TranslationDataset(Dataset): def __init__(self, filename, terminology): self.data = [] with open(filename, 'r', encoding='utf-8') as f: for line in f: en, zh = line.strip().split('\t') self.data.append((en, zh)) self.terminology = terminology # 创建词汇表,注意这里需要确保术语词典中的词也被包含在词汇表中 self.en_tokenizer = get_tokenizer('basic_english') self.zh_tokenizer = list # 使用字符级分词 en_vocab = Counter(self.terminology.keys()) # 确保术语在词汇表中 zh_vocab = Counter() for en, zh in self.data: en_vocab.update(self.en_tokenizer(en)) zh_vocab.update(self.zh_tokenizer(zh)) # 添加术语到词汇表 self.en_vocab = ['<pad>', '<sos>', '<eos>'] + list(self.terminology.keys()) + [word for word, _ in en_vocab.most_common(10000)] self.zh_vocab = ['<pad>', '<sos>', '<eos>'] + [word for word, _ in zh_vocab.most_common(10000)] self.en_word2idx = {word: idx for idx, word in enumerate(self.en_vocab)} self.zh_word2idx = {word: idx for idx, word in enumerate(self.zh_vocab)} def __len__(self): return len(self.data) def __getitem__(self, idx): en, zh = self.data[idx] en_tensor = torch.tensor([self.en_word2idx.get(word, self.en_word2idx['<sos>']) for word in self.en_tokenizer(en)] + [self.en_word2idx['<eos>']]) zh_tensor = torch.tensor([self.zh_word2idx.get(word, self.zh_word2idx['<sos>']) for word in self.zh_tokenizer(zh)] + [self.zh_word2idx['<eos>']]) return en_tensor, zh_tensor def collate_fn(batch): en_batch, zh_batch = [], [] for en_item, zh_item in batch: en_batch.append(en_item) zh_batch.append(zh_item) # 对英文和中文序列分别进行填充 en_batch = nn.utils.rnn.pad_sequence(en_batch, padding_value=0, batch_first=True) zh_batch = nn.utils.rnn.pad_sequence(zh_batch, padding_value=0, batch_first=True) return en_batch, zh_batch class Encoder(nn.Module): def __init__(self, input_dim, emb_dim, hid_dim, n_layers, dropout): super().__init__() self.embedding = nn.Embedding(input_dim, emb_dim) self.rnn = nn.GRU(emb_dim, hid_dim, n_layers, dropout=dropout, batch_first=True) self.dropout = nn.Dropout(dropout) def forward(self, src): # src shape: [batch_size, src_len] embedded = self.dropout(self.embedding(src)) # embedded shape: [batch_size, src_len, emb_dim] outputs, hidden = self.rnn(embedded) # outputs shape: [batch_size, src_len, hid_dim] # hidden shape: [n_layers, batch_size, hid_dim] return outputs, hidden class Decoder(nn.Module): def __init__(self, output_dim, emb_dim, hid_dim, n_layers, dropout): super().__init__() self.output_dim = output_dim self.embedding = nn.Embedding(output_dim, emb_dim) self.rnn = nn.GRU(emb_dim, hid_dim, n_layers, dropout=dropout, batch_first=True) self.fc_out = nn.Linear(hid_dim, output_dim) self.dropout = nn.Dropout(dropout) def forward(self, input, hidden): # input shape: [batch_size, 1] # hidden shape: [n_layers, batch_size, hid_dim] embedded = self.dropout(self.embedding(input)) # embedded shape: [batch_size, 1, emb_dim] output, hidden = self.rnn(embedded, hidden) # output shape: [batch_size, 1, hid_dim] # hidden shape: [n_layers, batch_size, hid_dim] prediction = self.fc_out(output.squeeze(1)) # prediction shape: [batch_size, output_dim] return prediction, hidden class Seq2Seq(nn.Module): def __init__(self, encoder, decoder, device): super().__init__() self.encoder = encoder self.decoder = decoder self.device = device def forward(self, src, trg, teacher_forcing_ratio=0.5): # src shape: [batch_size, src_len] # trg shape: [batch_size, trg_len] batch_size = src.shape[0] trg_len = trg.shape[1] trg_vocab_size = self.decoder.output_dim outputs = torch.zeros(batch_size, trg_len, trg_vocab_size).to(self.device) _, hidden = self.encoder(src) input = trg[:, 0].unsqueeze(1) # Start token for t in range(1, trg_len): output, hidden = self.decoder(input, hidden) outputs[:, t, :] = output teacher_force = random.random() < teacher_forcing_ratio top1 = output.argmax(1) input = trg[:, t].unsqueeze(1) if teacher_force else top1.unsqueeze(1) return outputs增加术语词典

# 新增术语词典加载部分 def load_terminology_dictionary(dict_file): terminology = {} with open(dict_file, 'r', encoding='utf-8') as f: for line in f: en_term, ch_term = line.strip().split('\t') terminology[en_term] = ch_term return terminology训练模型

def train(model, iterator, optimizer, criterion, clip): model.train() epoch_loss = 0 for i, (src, trg) in enumerate(iterator): src, trg = src.to(device), trg.to(device) optimizer.zero_grad() output = model(src, trg) output_dim = output.shape[-1] output = output[:, 1:].contiguous().view(-1, output_dim) trg = trg[:, 1:].contiguous().view(-1) loss = criterion(output, trg) loss.backward() torch.nn.utils.clip_grad_norm_(model.parameters(), clip) optimizer.step() epoch_loss += loss.item() return epoch_loss / len(iterator)主函数,设置批次大小和数据量

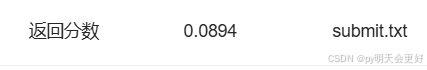

# 主函数 if __name__ == '__main__': start_time = time.time() # 开始计时 device = torch.device('cuda' if torch.cuda.is_available() else 'cpu') #terminology = load_terminology_dictionary('../dataset/en-zh.dic') terminology = load_terminology_dictionary('../dataset/en-zh.dic') # 加载数据 dataset = TranslationDataset('../dataset/train.txt',terminology = terminology) # 选择数据集的前N个样本进行训练 N = 1000 #int(len(dataset) * 1) # 或者你可以设置为数据集大小的一定比例,如 int(len(dataset) * 0.1) subset_indices = list(range(N)) subset_dataset = Subset(dataset, subset_indices) train_loader = DataLoader(subset_dataset, batch_size=32, shuffle=True, collate_fn=collate_fn) # 定义模型参数 INPUT_DIM = len(dataset.en_vocab) OUTPUT_DIM = len(dataset.zh_vocab) ENC_EMB_DIM = 256 DEC_EMB_DIM = 256 HID_DIM = 512 N_LAYERS = 2 ENC_DROPOUT = 0.5 DEC_DROPOUT = 0.5 # 初始化模型 enc = Encoder(INPUT_DIM, ENC_EMB_DIM, HID_DIM, N_LAYERS, ENC_DROPOUT) dec = Decoder(OUTPUT_DIM, DEC_EMB_DIM, HID_DIM, N_LAYERS, DEC_DROPOUT) model = Seq2Seq(enc, dec, device).to(device) # 定义优化器和损失函数 optimizer = optim.Adam(model.parameters()) criterion = nn.CrossEntropyLoss(ignore_index=dataset.zh_word2idx['<pad>']) # 训练模型 N_EPOCHS = 10 CLIP = 1 for epoch in range(N_EPOCHS): train_loss = train(model, train_loader, optimizer, criterion, CLIP) print(f'Epoch: {epoch+1:02} | Train Loss: {train_loss:.3f}') # 在训练循环结束后保存模型 torch.save(model.state_dict(), './translation_model_GRU.pth') end_time = time.time() # 结束计时 # 计算并打印运行时间 elapsed_time_minute = (end_time - start_time)/60 print(f"Total running time: {elapsed_time_minute:.2f} minutes")由于没有对代码进行任何修改,所以效果并不好

之后尝试修改N以及NEPOCH参数,来降低损失,从而提高分数

![]()