
Overview

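This project uses Python to build a virtual mouse that replaces a physical mouse with hand-gesture recognition. It relies on the computer-vision library OpenCV and the hand-tracking library MediaPipe, plus the helper libraries PyAutoGUI and Pynput (NumPy and Pillow are also used).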

Environment setup

Before you start, make sure the following Python libraries are installed:

```shell
pip install opencv-python mediapipe pynput pyautogui numpy pillow
```

Module overview

1. utils.py

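utils.py contains a `Utils` class whose main job is to draw Chinese text onto an image. OpenCV's built-in `cv2.putText` cannot render Chinese characters, so the method converts the frame to a Pillow image, draws the text with a TrueType font, and converts the result back to an OpenCV array. This is how status labels are overlaid on the live video stream.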

Code walkthrough

```python
import cv2
import numpy as np
from PIL import Image, ImageDraw, ImageFont


class Utils:
    def __init__(self):
        pass

    def cv2AddChineseText(self, img, text, position, textColor=(0, 255, 0), textSize=30):
        # cv2.putText cannot draw Chinese characters, so draw the text with Pillow instead
        if isinstance(img, np.ndarray):  # OpenCV image (BGR ndarray) -> Pillow image (RGB)
            img = Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
        draw = ImageDraw.Draw(img)
        # SimSun font; the path must point to a font file that contains CJK glyphs
        fontStyle = ImageFont.truetype("./fonts/simsun.ttc", textSize, encoding="utf-8")
        draw.text(position, text, textColor, font=fontStyle)
        # Pillow image (RGB) -> OpenCV image (BGR)
        return cv2.cvtColor(np.asarray(img), cv2.COLOR_RGB2BGR)
```

2. handProcess.py

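handProcess.py handles gesture recognition. It uses MediaPipe to detect and track the 21 hand landmarks, decides which fingers are raised, and maps each finger pattern to an action that the main program turns into a mouse operation.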
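The finger-state test at the core of this module can be isolated as a pure function, which makes it easy to check without a camera. The sketch below is a minimal illustration, not the project's code: it assumes landmarks are given as `(x, y)` pixel tuples indexed by MediaPipe's 21-point hand model (4, 8, 12, 16, 20 are the fingertips) and reproduces the same heuristic as `checkFingersUp` (thumb judged by x, other fingers by y). The name `fingers_up` is hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# MediaPipe hand landmark indices of the five fingertips: thumb, index, middle, ring, pinky
FINGER_TIPS = (4, 8, 12, 16, 20)


def fingers_up(landmarks: Sequence[Point]) -> List[int]:
    """Hypothetical helper: return [thumb, index, middle, ring, pinky], 1 = raised.

    Mirrors the heuristic in HandProcess.checkFingersUp:
    - thumb: tip (4) has a smaller x than the IP joint (3); this assumes a
      right hand seen in a horizontally mirrored frame
    - other fingers: tip has a smaller y (is higher) than the PIP joint two
      indices lower, because image y grows downward
    """
    if len(landmarks) < 21:
        return []  # no complete hand detected
    result = [1 if landmarks[4][0] < landmarks[3][0] else 0]
    for tip in FINGER_TIPS[1:]:
        result.append(1 if landmarks[tip][1] < landmarks[tip - 2][1] else 0)
    return result


if __name__ == "__main__":
    hand = [(100.0, 300.0)] * 21  # every landmark at the same spot: nothing raised
    hand[8] = (100.0, 100.0)      # lift the index fingertip above its PIP joint (6)
    print(fingers_up(hand))       # [0, 1, 0, 0, 0], the "move" pattern
```

The strict `<` comparisons mean a fingertip level with its joint counts as folded, matching the original code.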

Code walkthrough

```python
import math

import cv2
import mediapipe as mp

from utils import Utils


class HandProcess:
    def __init__(self, static_image_mode=False, max_num_hands=2):
        self.mp_drawing = mp.solutions.drawing_utils
        # Fix: processOneHand uses drawing styles, which were never initialized
        self.mp_drawing_styles = mp.solutions.drawing_styles
        self.mp_hands = mp.solutions.hands
        self.hands = self.mp_hands.Hands(static_image_mode=static_image_mode,
                                         min_detection_confidence=0.7,
                                         min_tracking_confidence=0.5,
                                         max_num_hands=max_num_hands)
        # One entry per landmark: [landmark_id, pixel_x, pixel_y, z]
        self.landmark_list = []
        # Action key -> on-screen label (Chinese text, drawn with Utils)
        self.action_labels = {
            'none': '无',                       # none
            'move': '鼠标移动',                 # move cursor
            'click_single_active': '触发单击',  # left click triggered
            'click_single_ready': '单击准备',   # left click ready
            'click_right_active': '触发右击',   # right click triggered
            'click_right_ready': '右击准备',    # right click ready
            'scroll_up': '向上滑页',            # scroll up
            'scroll_down': '向下滑页',          # scroll down
            'drag': '鼠标拖拽'                  # drag
        }
        self.action_deteted = ''

    def checkHandsIndex(self, handedness):
        # 'Left' / 'Right' label of each detected hand (at most two)
        return [hand.classification[0].label for hand in handedness[:2]]

    def getDistance(self, pointA, pointB):
        return math.hypot(pointA[0] - pointB[0], pointA[1] - pointB[1])

    def getFingerXY(self, index):
        # Pixel (x, y) of the given landmark
        return (self.landmark_list[index][1], self.landmark_list[index][2])

    def drawInfo(self, img, action):
        thumbXY, indexXY, middleXY = map(self.getFingerXY, [4, 8, 12])
        if action == 'move':
            img = cv2.circle(img, indexXY, 20, (255, 0, 255), -1)
        elif action == 'click_single_active':
            middle_point = int((indexXY[0] + thumbXY[0]) / 2), int((indexXY[1] + thumbXY[1]) / 2)
            img = cv2.circle(img, middle_point, 30, (0, 255, 0), -1)
        elif action == 'click_single_ready':
            img = cv2.circle(img, indexXY, 20, (255, 0, 255), -1)
            img = cv2.circle(img, thumbXY, 20, (255, 0, 255), -1)
            img = cv2.line(img, indexXY, thumbXY, (255, 0, 255), 2)
        elif action == 'click_right_active':
            middle_point = int((indexXY[0] + middleXY[0]) / 2), int((indexXY[1] + middleXY[1]) / 2)
            img = cv2.circle(img, middle_point, 30, (0, 255, 0), -1)
        elif action == 'click_right_ready':
            img = cv2.circle(img, indexXY, 20, (255, 0, 255), -1)
            img = cv2.circle(img, middleXY, 20, (255, 0, 255), -1)
            img = cv2.line(img, indexXY, middleXY, (255, 0, 255), 2)
        return img

    def checkHandAction(self, img, drawKeyFinger=True):
        upList = self.checkFingersUp()
        action = 'none'
        if len(upList) == 0:
            return img, action, None
        dete_dist = 100                  # fingertips closer than this (px) count as touching
        key_point = self.getFingerXY(8)  # index fingertip drives the cursor by default
        if upList == [0, 1, 0, 0, 0]:
            action = 'move'
        elif upList == [1, 1, 0, 0, 0]:
            l1 = self.getDistance(self.getFingerXY(4), self.getFingerXY(8))
            action = 'click_single_active' if l1 < dete_dist else 'click_single_ready'
        elif upList == [0, 1, 1, 0, 0]:
            l1 = self.getDistance(self.getFingerXY(8), self.getFingerXY(12))
            action = 'click_right_active' if l1 < dete_dist else 'click_right_ready'
        elif upList == [1, 1, 1, 1, 1]:
            action = 'scroll_up'
        elif upList == [0, 1, 1, 1, 1]:
            action = 'scroll_down'
        elif upList == [0, 0, 1, 1, 1]:
            key_point = self.getFingerXY(12)  # drag follows the middle fingertip
            action = 'drag'
        img = self.drawInfo(img, action) if drawKeyFinger else img
        self.action_deteted = self.action_labels[action]
        return img, action, key_point

    def checkFingersUp(self):
        # Returns [thumb, index, middle, ring, pinky], 1 = raised, 0 = folded
        fingerTipIndexs = [4, 8, 12, 16, 20]
        upList = []
        if len(self.landmark_list) == 0:
            return upList
        # Thumb: tip left of its IP joint (assumes a right hand in the mirrored frame)
        if self.landmark_list[fingerTipIndexs[0]][1] < self.landmark_list[fingerTipIndexs[0] - 1][1]:
            upList.append(1)
        else:
            upList.append(0)
        # Other fingers: tip above the PIP joint (image y grows downward)
        for i in range(1, 5):
            if self.landmark_list[fingerTipIndexs[i]][2] < self.landmark_list[fingerTipIndexs[i] - 2][2]:
                upList.append(1)
            else:
                upList.append(0)
        return upList

    def processOneHand(self, img, drawBox=True, drawLandmarks=True):
        utils = Utils()
        # img must be RGB; marking it read-only lets MediaPipe avoid a copy
        img.flags.writeable = False
        results = self.hands.process(img)
        img.flags.writeable = True
        self.landmark_list = []
        if results.multi_hand_landmarks:
            # Use only the first hand so landmark indices 0-20 always refer to one hand
            hand_landmarks = results.multi_hand_landmarks[0]
            if drawLandmarks:
                self.mp_drawing.draw_landmarks(img, hand_landmarks, self.mp_hands.HAND_CONNECTIONS,
                                               self.mp_drawing_styles.get_default_hand_landmarks_style(),
                                               self.mp_drawing_styles.get_default_hand_connections_style())
            h, w, _ = img.shape
            for landmark_id, finger_axis in enumerate(hand_landmarks.landmark):
                # Convert normalized [0, 1] coordinates to pixels
                p_x, p_y = math.ceil(finger_axis.x * w), math.ceil(finger_axis.y * h)
                self.landmark_list.append([landmark_id, p_x, p_y, finger_axis.z])
            if drawBox:
                xs = [p[1] for p in self.landmark_list]
                ys = [p[2] for p in self.landmark_list]
                x_min, x_max, y_min, y_max = min(xs), max(xs), min(ys), max(ys)
                img = cv2.rectangle(img, (x_min - 30, y_min - 30), (x_max + 30, y_max + 30), (0, 255, 0), 2)
                img = utils.cv2AddChineseText(img, self.action_deteted, (x_min - 20, y_min - 120),
                                              textColor=(255, 0, 255), textSize=60)
        return img
```

3. virtual_mouse.py

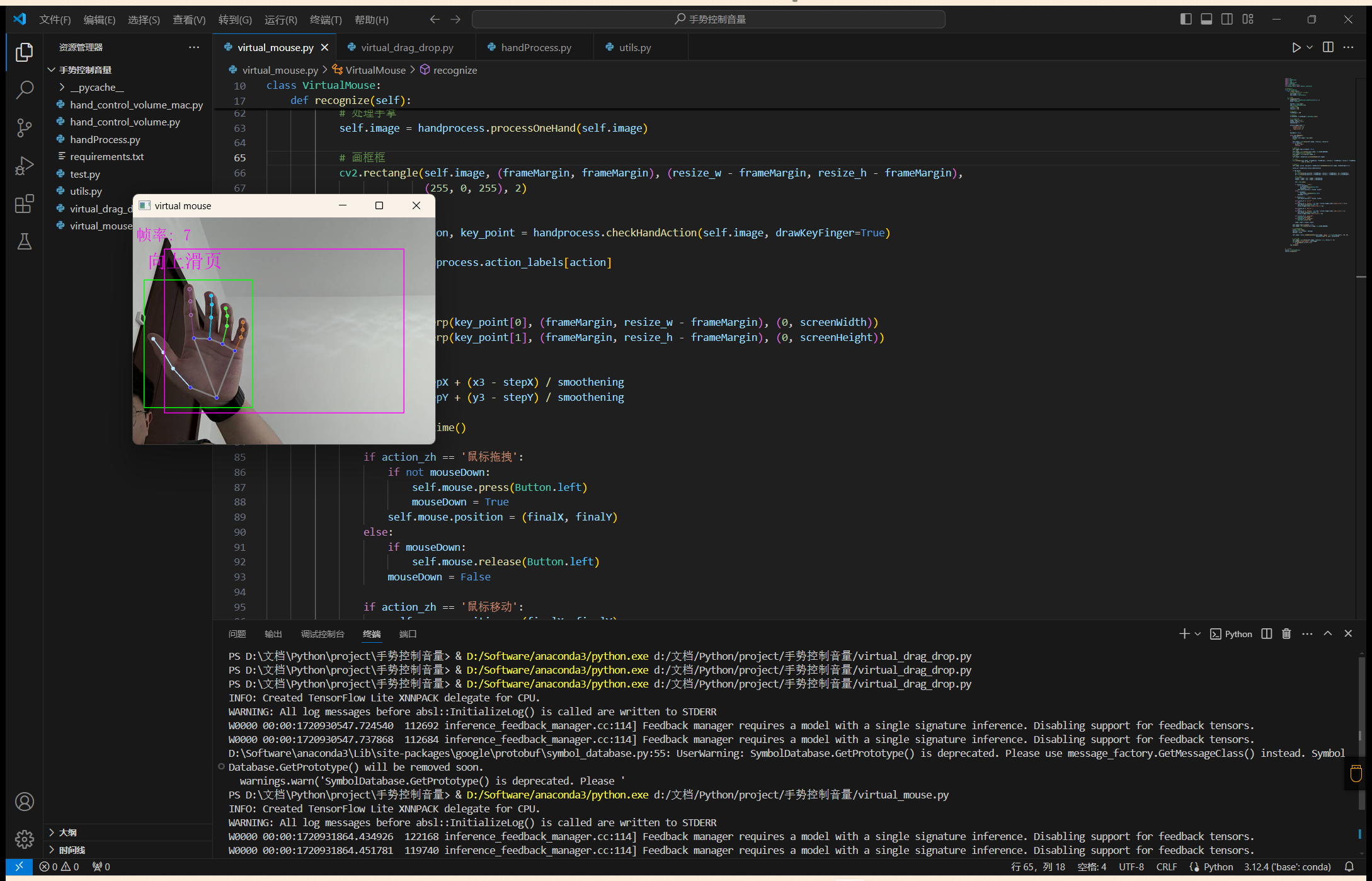

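virtual_mouse.py is the main program. It combines gesture recognition with mouse control: on every frame, the key fingertip position inside a margin rectangle is mapped to screen coordinates and smoothed, and the recognized action drives cursor movement, clicks, dragging, and scrolling.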
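The coordinate mapping, smoothing, and click debouncing in the main loop can be expressed without any camera or mouse library. This is a sketch under stated assumptions, not the project's code: `interp`, `CursorSmoother`, and `Cooldown` are hypothetical names, `interp` is a pure-Python stand-in for the two-point `np.interp` call, and the 1920×1080 screen size is an assumed default (the original reads it from `pyautogui.size()`).

```python
from typing import Tuple


def interp(value: float, src_lo: float, src_hi: float, dst_lo: float, dst_hi: float) -> float:
    """Map value linearly from [src_lo, src_hi] to [dst_lo, dst_hi], clamping at the ends.

    For a two-point range this behaves like np.interp(value, (src_lo, src_hi), (dst_lo, dst_hi)).
    """
    value = min(max(value, src_lo), src_hi)
    t = (value - src_lo) / (src_hi - src_lo)
    return dst_lo + t * (dst_hi - dst_lo)


class CursorSmoother:
    """Hypothetical helper: frame pixel coordinates -> smoothed screen coordinates."""

    def __init__(self, frame_w=960, frame_h=720, margin=100,
                 screen_w=1920, screen_h=1080, smoothening=7):
        self.frame_w, self.frame_h, self.margin = frame_w, frame_h, margin
        self.screen_w, self.screen_h = screen_w, screen_h  # assumed screen size
        self.smoothening = smoothening
        self.x, self.y = 0.0, 0.0  # previous cursor position (stepX, stepY in the original)

    def update(self, px: float, py: float) -> Tuple[float, float]:
        # 1. Map the inner rectangle [margin, size - margin] onto the full screen
        tx = interp(px, self.margin, self.frame_w - self.margin, 0, self.screen_w)
        ty = interp(py, self.margin, self.frame_h - self.margin, 0, self.screen_h)
        # 2. Exponential smoothing: move 1/smoothening of the way toward the target
        self.x += (tx - self.x) / self.smoothening
        self.y += (ty - self.y) / self.smoothening
        return self.x, self.y


class Cooldown:
    """Allow an action at most once per `interval` seconds, like action_trigger_time."""

    def __init__(self, interval: float):
        self.interval = interval
        self.last = float('-inf')  # never triggered yet

    def ready(self, now: float) -> bool:
        if now - self.last > self.interval:
            self.last = now
            return True
        return False
```

In a loop like the original, one would call `x, y = smoother.update(*key_point)` each frame and guard a left click with `Cooldown(0.3).ready(time.time())`. A larger `smoothening` trades responsiveness for less jitter, and the margin lets the fingertip reach the screen edges without leaving the camera view.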

Code walkthrough

```python
import time

import cv2
import numpy as np
import pyautogui
from pynput.mouse import Button, Controller

import handProcess
from utils import Utils


class VirtualMouse:
    def __init__(self):
        self.image = None
        self.mouse = Controller()

    def recognize(self):
        handprocess = handProcess.HandProcess(False, 1)  # track a single hand
        utils = Utils()
        fpsTime = time.time()
        cap = cv2.VideoCapture(0)
        resize_w, resize_h = 960, 720
        frameMargin = 100  # this inner rectangle of the frame maps to the whole screen
        screenWidth, screenHeight = pyautogui.size()
        stepX, stepY = 0, 0    # previous smoothed cursor position
        finalX, finalY = 0, 0
        smoothening = 7        # larger = smoother but laggier cursor
        # Timestamp of the last trigger, used to debounce clicks
        action_trigger_time = {'single_click': 0, 'double_click': 0, 'right_click': 0}
        mouseDown = False
        while cap.isOpened():
            success, self.image = cap.read()
            # Fix: check the frame before resizing (resizing None raises an error)
            if not success:
                print("Empty frame")
                continue
            self.image = cv2.resize(self.image, (resize_w, resize_h))
            # MediaPipe expects RGB; flip horizontally so the view acts like a mirror
            self.image = cv2.cvtColor(self.image, cv2.COLOR_BGR2RGB)
            self.image = cv2.flip(self.image, 1)
            self.image = handprocess.processOneHand(self.image)
            cv2.rectangle(self.image, (frameMargin, frameMargin),
                          (resize_w - frameMargin, resize_h - frameMargin), (255, 0, 255), 2)
            self.image, action, key_point = handprocess.checkHandAction(self.image, drawKeyFinger=True)
            if key_point:
                # Map the key point from the inner rectangle to screen coordinates
                x3 = np.interp(key_point[0], (frameMargin, resize_w - frameMargin), (0, screenWidth))
                y3 = np.interp(key_point[1], (frameMargin, resize_h - frameMargin), (0, screenHeight))
                # Move only 1/smoothening of the way toward the target to reduce jitter
                finalX = stepX + (x3 - stepX) / smoothening
                finalY = stepY + (y3 - stepY) / smoothening
                now = time.time()
                # Compare action keys rather than the Chinese display labels
                if action == 'drag':
                    if not mouseDown:
                        self.mouse.press(Button.left)
                        mouseDown = True
                    self.mouse.position = (int(finalX), int(finalY))
                else:
                    if mouseDown:
                        self.mouse.release(Button.left)
                        mouseDown = False
                    if action == 'move':
                        self.mouse.position = (int(finalX), int(finalY))
                    elif action == 'click_single_active' and now - action_trigger_time['single_click'] > 0.3:
                        self.mouse.click(Button.left, 1)
                        action_trigger_time['single_click'] = now
                    elif action == 'click_right_active' and now - action_trigger_time['right_click'] > 2:
                        self.mouse.click(Button.right, 1)
                        action_trigger_time['right_click'] = now
                    elif action == 'scroll_up':
                        pyautogui.scroll(30)
                    elif action == 'scroll_down':
                        pyautogui.scroll(-30)
                    # 'ready' states need no mouse action
                stepX, stepY = finalX, finalY
            elif mouseDown:
                # Hand lost during a drag: release the button instead of leaving it held
                self.mouse.release(Button.left)
                mouseDown = False
            self.image = cv2.cvtColor(self.image, cv2.COLOR_RGB2BGR)
            cTime = time.time()
            fps_text = 1 / max(cTime - fpsTime, 1e-6)
            fpsTime = cTime
            self.image = utils.cv2AddChineseText(self.image, "FPS: " + str(int(fps_text)), (10, 30),
                                                 textColor=(255, 0, 255), textSize=50)
            self.image = cv2.resize(self.image, (resize_w // 2, resize_h // 2))
            cv2.imshow('virtual mouse', self.image)
            if cv2.waitKey(5) & 0xFF == 27:  # Esc quits
                break
        cap.release()
        cv2.destroyAllWindows()


if __name__ == '__main__':
    control = VirtualMouse()
    control.recognize()
```

4. Feature list

The project recognizes the following gestures and triggers the corresponding mouse operation for each:

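Mouse move

- Gesture: only the index finger raised (all other fingers folded).

- Description: the movement of the index fingertip is mapped to cursor movement on the screen.

Left-click ready

- Gesture: thumb and index finger raised but not touching (middle, ring, and pinky folded).

- Description: arms a left click.

Left click

- Gesture: with the thumb and index finger raised, pinch their tips together (closer than 100 px in the 960×720 frame).

- Description: performs one left click; consecutive clicks are at least 0.3 s apart.

Right-click ready

- Gesture: index and middle fingers raised but not touching (thumb, ring, and pinky folded).

- Description: arms a right click.

Right click

- Gesture: bring the raised index and middle fingertips together (closer than 100 px).

- Description: performs one right click; consecutive right clicks are at least 2 s apart.

Drag

- Gesture: middle, ring, and pinky fingers raised (thumb and index finger folded).

- Description: presses and holds the left button while the cursor follows the middle fingertip; the button is released when a different gesture is recognized.

Scroll up

- Gesture: all five fingers raised.

- Description: scrolls the page up (`pyautogui.scroll(30)` on every frame while the gesture is held).

Scroll down

- Gesture: the four fingers other than the thumb raised.

- Description: scrolls the page down (`pyautogui.scroll(-30)` on every frame while the gesture is held).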
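The gesture list above amounts to a lookup table from finger pattern to action, with two patterns split further by a pinch-distance check. A minimal sketch, assuming the `[thumb, index, middle, ring, pinky]` format produced by `checkFingersUp` and fingertip pixel coordinates keyed by landmark index; `classify`, `SIMPLE_GESTURES`, `PINCH_GESTURES`, and `TOUCH_DIST` are hypothetical names, not part of the original project. It returns the same action keys as `checkHandAction`.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]

TOUCH_DIST = 100  # pixels; same threshold as dete_dist in checkHandAction

# Finger pattern [thumb, index, middle, ring, pinky] -> action key
SIMPLE_GESTURES: Dict[Tuple[int, ...], str] = {
    (0, 1, 0, 0, 0): 'move',
    (1, 1, 1, 1, 1): 'scroll_up',
    (0, 1, 1, 1, 1): 'scroll_down',
    (0, 0, 1, 1, 1): 'drag',
}

# Patterns whose action depends on whether two fingertips touch:
# pattern -> (first tip index, second tip index, action prefix)
PINCH_GESTURES: Dict[Tuple[int, ...], Tuple[int, int, str]] = {
    (1, 1, 0, 0, 0): (4, 8, 'click_single'),   # thumb + index
    (0, 1, 1, 0, 0): (8, 12, 'click_right'),   # index + middle
}


def classify(up: Sequence[int], tips: Dict[int, Point]) -> str:
    """Return the action key for a finger pattern (hypothetical helper)."""
    key = tuple(up)
    if key in SIMPLE_GESTURES:
        return SIMPLE_GESTURES[key]
    if key in PINCH_GESTURES:
        a, b, prefix = PINCH_GESTURES[key]
        dist = math.hypot(tips[a][0] - tips[b][0], tips[a][1] - tips[b][1])
        return prefix + ('_active' if dist < TOUCH_DIST else '_ready')
    return 'none'  # any other pattern triggers nothing


if __name__ == "__main__":
    print(classify([1, 1, 0, 0, 0], {4: (0, 0), 8: (30, 40)}))  # click_single_active
```

Keeping the table as data rather than a chain of `if` statements makes it straightforward to add or remap gestures without touching the control flow.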

5. Testing