阅读量:4

深度学习就像炼丹。炉子就是模型,火候就是那些参数,材料就是数据集。

1.1 参数有哪些

调参调参,参数到底是哪些参数?

1.网络相关的参数:(1)神经网络网络层

(2)隐藏层的神经元个数等

(3)卷积核的数量

(4)损失层函数的选择

2.数据预处理的相关参数:(1)batch normalization(2)等等

3.超参数:(1)激活函数(2)初始化(凯明初始化等)

(3)梯度下降(SGD、Adam)

(4)epoch (5)batch size

(6)学习率lr (7)衰减函数、正则化等

1.2 常见的情况及原因

1.通常是在网络训练的结果:(1)过拟合----样本数量太少了

(2)欠拟合----样本多但模型简单

(3)拟合,但是在上下浮动(震荡)

(4)恰好拟合

(5)模型不收敛

1.3 解决方法

(1)过拟合----数据增强、早停法、drop out、降低学习率、调整epoch

(2)欠拟合----加深层数、尽量用非线性的激活函数如relu

(3)拟合但震荡----降低数据增强的程度、学习率

(4)

(5)模型不收敛----数据集有问题、网络模型有问题

1.4 调参的过程

1.搭建网络模型

2.先用小样本进行尝试模型效果

3.根据小样本的效果,进行调参,包括分析损失。

1.5 代码(CPU训练)

# Author:SiZhen # Create: 2024/7/14 # Description: 调参练习-以手写数字集为例 import torch import torch.nn as nn import torchvision import torch.utils import torchvision.transforms as transforms import matplotlib.pyplot as plt #设置超参数 from torch import optim from torch.nn import init batch_size = 64 hidden_size = 128 learning_rate = 0.001 num_epoch = 10 #将图片进行预处理转换成pytorch张量 transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,),(0.5,),)]) #mnist是单通道,所以括号内只有一个0.5 #下载训练集 train_set = torchvision.datasets.MNIST(root="./data",train=True,download=True,transform=transform) #下载测试集 test_set = torchvision.datasets.MNIST(root="./data",train=False,download=True,transform=transform) #加载数据集 train_loader= torch.utils.data.DataLoader(train_set,batch_size=batch_size,shuffle=True) test_loader = torch.utils.data.DataLoader(test_set,batch_size=batch_size,shuffle=False)#测试不shuffle input_size = 784 #mnist,28x28像素 num_classes = 10 class SEAttention(nn.Module): # 初始化SE模块,channel为通道数,reduction为降维比率 def __init__(self, channel=1, reduction=8): super().__init__() self.avg_pool = nn.AdaptiveAvgPool2d(1) # 自适应平均池化层,将特征图的空间维度压缩为1x1 self.fc = nn.Sequential( # 定义两个全连接层作为激励操作,通过降维和升维调整通道重要性 nn.Linear(channel, channel // reduction, bias=False), # 降维,减少参数数量和计算量 nn.ReLU(inplace=True), # ReLU激活函数,引入非线性 nn.Linear(channel // reduction, channel, bias=False), # 升维,恢复到原始通道数 nn.Sigmoid(), # Sigmoid激活函数,输出每个通道的重要性系数 ) # 权重初始化方法 def init_weights(self): for m in self.modules(): # 遍历模块中的所有子模块 if isinstance(m, nn.Conv2d): # 对于卷积层 init.kaiming_normal_(m.weight, mode='fan_out') # 使用Kaiming初始化方法初始化权重 if m.bias is not None: init.constant_(m.bias, 0) # 如果有偏置项,则初始化为0 elif isinstance(m, nn.BatchNorm2d): # 对于批归一化层 init.constant_(m.weight, 1) # 权重初始化为1 init.constant_(m.bias, 0) # 偏置初始化为0 elif isinstance(m, nn.Linear): # 对于全连接层 init.normal_(m.weight, std=0.001) # 权重使用正态分布初始化 if m.bias is not None: init.constant_(m.bias, 0) # 偏置初始化为0 # 前向传播方法 def forward(self, x): b, c, _, _ = x.size() # 获取输入x的批量大小b和通道数c y = self.avg_pool(x).view(b, c) # 通过自适应平均池化层后,调整形状以匹配全连接层的输入 y = self.fc(y).view(b, c, 1, 1) # 通过全连接层计算通道重要性,调整形状以匹配原始特征图的形状 return x * y.expand_as(x) # 将通道重要性系数应用到原始特征图上,进行特征重新校准 import torch import torch.nn as nn from torch.nn import Softmax # 定义一个无限小的矩阵,用于在注意力矩阵中屏蔽特定位置 def INF(B, H, W): return -torch.diag(torch.tensor(float("inf")).repeat(H), 0).unsqueeze(0).repeat(B * W, 1, 1) class CrissCrossAttention(nn.Module): """ Criss-Cross Attention Module""" def __init__(self, in_dim): super(CrissCrossAttention, self).__init__() # Q, K, V转换层 self.query_conv = nn.Conv2d(in_channels=in_dim, out_channels=in_dim // 8, kernel_size=1) self.key_conv = nn.Conv2d(in_channels=in_dim, out_channels=in_dim // 8, kernel_size=1) self.value_conv = nn.Conv2d(in_channels=in_dim, out_channels=in_dim, kernel_size=1) # 使用softmax对注意力分数进行归一化 self.softmax = Softmax(dim=3) self.INF = INF # 学习一个缩放参数,用于调节注意力的影响 self.gamma = nn.Parameter(torch.zeros(1)) def forward(self, x): m_batchsize, _, height, width = x.size() # 计算查询(Q)、键(K)、值(V)矩阵 proj_query = self.query_conv(x) proj_query_H = proj_query.permute(0, 3, 1, 2).contiguous().view(m_batchsize * width, -1, height).permute(0, 2, 1) proj_query_W = proj_query.permute(0, 2, 1, 3).contiguous().view(m_batchsize * height, -1, width).permute(0, 2, 1) proj_key = self.key_conv(x) proj_key_H = proj_key.permute(0, 3, 1, 2).contiguous().view(m_batchsize * width, -1, height) proj_key_W = proj_key.permute(0, 2, 1, 3).contiguous().view(m_batchsize * height, -1, width) proj_value = self.value_conv(x) proj_value_H = proj_value.permute(0, 3, 1, 2).contiguous().view(m_batchsize * width, -1, height) proj_value_W = proj_value.permute(0, 2, 1, 3).contiguous().view(m_batchsize * height, -1, width) # 计算垂直和水平方向上的注意力分数,并应用无穷小掩码屏蔽自注意 energy_H = (torch.bmm(proj_query_H, proj_key_H) + self.INF(m_batchsize, height, width)).view(m_batchsize, width, height, height).permute(0, 2, 1, 3) energy_W = torch.bmm(proj_query_W, proj_key_W).view(m_batchsize, height, width, width) # 在垂直和水平方向上应用softmax归一化 concate = self.softmax(torch.cat([energy_H, energy_W], 3)) # 分离垂直和水平方向上的注意力,应用到值(V)矩阵上 att_H = concate[:, :, :, 0:height].permute(0, 2, 1, 3).contiguous().view(m_batchsize * width, height, height) att_W = concate[:, :, :, height:height + width].contiguous().view(m_batchsize * height, width, width) # 计算最终的输出,加上输入x以应用残差连接 out_H = torch.bmm(proj_value_H, att_H.permute(0, 2, 1)).view(m_batchsize, width, -1, height).permute(0, 2, 3, 1) out_W = torch.bmm(proj_value_W, att_W.permute(0, 2, 1)).view(m_batchsize, height, -1, width).permute(0, 2, 1, 3) return self.gamma * (out_H + out_W) + x class Net(nn.Module): def __init__(self, input_size, hidden_size, num_classes): super(Net, self).__init__() self.fc1 = nn.Linear(input_size, hidden_size) self.relu = nn.ReLU() self.fc2 = nn.Linear(hidden_size, num_classes) self.conv1 =nn.Conv2d(1,64,kernel_size=1) self.se = SEAttention(channel=1) self.cca = CrissCrossAttention(64) self.conv2 = nn.Conv2d(64,1,kernel_size=1) def forward(self, x): x = self.se(x) x = self.conv1(x) x = self.cca(x) x = self.conv2(x) out = self.fc1(x.view(-1, input_size)) out = self.relu(out) out = self.fc2(out) return out model = Net(input_size,hidden_size,num_classes) criterion = nn.CrossEntropyLoss() optimizer = optim.Adam(model.parameters(),lr=learning_rate) train_loss_list = [] test_loss_list = [] #训练 total_step = len(train_loader) for epoch in range(num_epoch): for i,(images,labels) in enumerate(train_loader): outputs = model(images) #获取模型分类后的结果 loss = criterion(outputs,labels) #计算损失 optimizer.zero_grad() #反向传播前,梯度清零 loss.backward() #反向传播 optimizer.step() #更新参数 train_loss_list.append(loss.item()) if (i+1)%100 ==0: print('Epoch[{}/{}],Step[{}/{}],Train Loss:{:.4f}' .format(epoch+1,num_epoch,i+1,total_step,loss.item())) model.eval() with torch.no_grad(): #禁止梯度计算 test_loss = 0.0 for images,labels in test_loader: outputs = model(images) loss = criterion(outputs,labels) test_loss +=loss.item()*images.size(0) #累加每个批次总损失得到总损失 test_loss /=len(test_loader.dataset) #整个测试集的平均损失 # 将计算得到的单个平均测试损失值扩展到一个列表中,长度为total_step # 这样做可能是为了在绘图时每个step都有一个对应的测试损失值,尽管实际测试损失在整个epoch内是恒定的 test_loss_list.extend([test_loss]*total_step) #方便可视化 model.train() print("Epoch[{}/{}],Test Loss:{:.4f}".format(epoch+1,num_epoch,test_loss)) plt.plot(train_loss_list,label='Train Loss') plt.plot(test_loss_list,label='Test Loss') plt.title('model loss') plt.xlabel('iterations') plt.ylabel('Loss') plt.legend() plt.show() 1.6 代码(GPU训练)

要想模型在GPU上训练,需要两点:

(1)模型在GPU上

(2)所有参与运算的张量在GPU上

# Author:SiZhen # Create: 2024/7/14 # Description: 调参练习-以手写数字集为例 import torch import torch.nn as nn import torchvision import torch.utils import torchvision.transforms as transforms import matplotlib.pyplot as plt #设置超参数 from torch import optim from torch.nn import init device = torch.device("cuda" if torch.cuda.is_available() else "cpu") batch_size = 64 hidden_size = 128 learning_rate = 0.001 num_epoch = 10 #将图片进行预处理转换成pytorch张量 transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,),(0.5,),)]) #mnist是单通道,所以括号内只有一个0.5 #下载训练集 train_set = torchvision.datasets.MNIST(root="./data",train=True,download=True,transform=transform) #下载测试集 test_set = torchvision.datasets.MNIST(root="./data",train=False,download=True,transform=transform) #加载数据集 train_loader= torch.utils.data.DataLoader(train_set,batch_size=batch_size,shuffle=True,pin_memory=True) test_loader = torch.utils.data.DataLoader(test_set,batch_size=batch_size,shuffle=False,pin_memory=True)#测试不shuffle input_size = 784 #mnist,28x28像素 num_classes = 10 class SEAttention(nn.Module): # 初始化SE模块,channel为通道数,reduction为降维比率 def __init__(self, channel=1, reduction=8): super().__init__() self.avg_pool = nn.AdaptiveAvgPool2d(1) # 自适应平均池化层,将特征图的空间维度压缩为1x1 self.fc = nn.Sequential( # 定义两个全连接层作为激励操作,通过降维和升维调整通道重要性 nn.Linear(channel, channel // reduction, bias=False), # 降维,减少参数数量和计算量 nn.ReLU(inplace=True), # ReLU激活函数,引入非线性 nn.Linear(channel // reduction, channel, bias=False), # 升维,恢复到原始通道数 nn.Sigmoid(), # Sigmoid激活函数,输出每个通道的重要性系数 ) # 权重初始化方法 def init_weights(self): for m in self.modules(): # 遍历模块中的所有子模块 if isinstance(m, nn.Conv2d): # 对于卷积层 init.kaiming_normal_(m.weight, mode='fan_out') # 使用Kaiming初始化方法初始化权重 if m.bias is not None: init.constant_(m.bias, 0) # 如果有偏置项,则初始化为0 elif isinstance(m, nn.BatchNorm2d): # 对于批归一化层 init.constant_(m.weight, 1) # 权重初始化为1 init.constant_(m.bias, 0) # 偏置初始化为0 elif isinstance(m, nn.Linear): # 对于全连接层 init.normal_(m.weight, std=0.001) # 权重使用正态分布初始化 if m.bias is not None: init.constant_(m.bias, 0) # 偏置初始化为0 # 前向传播方法 def forward(self, x): b, c, _, _ = x.size() # 获取输入x的批量大小b和通道数c y = self.avg_pool(x).view(b, c) # 通过自适应平均池化层后,调整形状以匹配全连接层的输入 y = self.fc(y).view(b, c, 1, 1) # 通过全连接层计算通道重要性,调整形状以匹配原始特征图的形状 return x * y.expand_as(x) # 将通道重要性系数应用到原始特征图上,进行特征重新校准 import torch import torch.nn as nn from torch.nn import Softmax # 定义一个无限小的矩阵,用于在注意力矩阵中屏蔽特定位置 def INF(B, H, W): return -torch.diag(torch.tensor(float("inf")).repeat(H), 0).unsqueeze(0).repeat(B * W, 1, 1) class CrissCrossAttention(nn.Module): """ Criss-Cross Attention Module""" def __init__(self, in_dim): super(CrissCrossAttention, self).__init__() # Q, K, V转换层 self.query_conv = nn.Conv2d(in_channels=in_dim, out_channels=in_dim // 8, kernel_size=1) self.key_conv = nn.Conv2d(in_channels=in_dim, out_channels=in_dim // 8, kernel_size=1) self.value_conv = nn.Conv2d(in_channels=in_dim, out_channels=in_dim, kernel_size=1) # 使用softmax对注意力分数进行归一化 self.softmax = Softmax(dim=3) self.INF = INF # 学习一个缩放参数,用于调节注意力的影响 self.gamma = nn.Parameter(torch.zeros(1)) def forward(self, x): m_batchsize, _, height, width = x.size() # 计算查询(Q)、键(K)、值(V)矩阵 proj_query = self.query_conv(x) proj_query_H = proj_query.permute(0, 3, 1, 2).contiguous().view(m_batchsize * width, -1, height).permute(0, 2, 1) proj_query_W = proj_query.permute(0, 2, 1, 3).contiguous().view(m_batchsize * height, -1, width).permute(0, 2, 1) proj_key = self.key_conv(x) proj_key_H = proj_key.permute(0, 3, 1, 2).contiguous().view(m_batchsize * width, -1, height) proj_key_W = proj_key.permute(0, 2, 1, 3).contiguous().view(m_batchsize * height, -1, width) proj_value = self.value_conv(x) proj_value_H = proj_value.permute(0, 3, 1, 2).contiguous().view(m_batchsize * width, -1, height) proj_value_W = proj_value.permute(0, 2, 1, 3).contiguous().view(m_batchsize * height, -1, width) # 计算垂直和水平方向上的注意力分数,并应用无穷小掩码屏蔽自注意 energy_H = (torch.bmm(proj_query_H, proj_key_H)+ self.INF(m_batchsize, height, width).to(device)).view(m_batchsize, width, height, height).permute(0, 2, 1, 3).to(device) energy_W = torch.bmm(proj_query_W, proj_key_W).view(m_batchsize, height, width, width) # 在垂直和水平方向上应用softmax归一化 concate = self.softmax(torch.cat([energy_H, energy_W], 3)) # 分离垂直和水平方向上的注意力,应用到值(V)矩阵上 att_H = concate[:, :, :, 0:height].permute(0, 2, 1, 3).contiguous().view(m_batchsize * width, height, height) att_W = concate[:, :, :, height:height + width].contiguous().view(m_batchsize * height, width, width) # 计算最终的输出,加上输入x以应用残差连接 out_H = torch.bmm(proj_value_H, att_H.permute(0, 2, 1)).view(m_batchsize, width, -1, height).permute(0, 2, 3, 1) out_W = torch.bmm(proj_value_W, att_W.permute(0, 2, 1)).view(m_batchsize, height, -1, width).permute(0, 2, 1, 3) return self.gamma * (out_H + out_W) + x class Net(nn.Module): def __init__(self, input_size, hidden_size, num_classes): super(Net, self).__init__() self.fc1 = nn.Linear(input_size, hidden_size) self.relu = nn.ReLU() self.fc2 = nn.Linear(hidden_size, num_classes) self.conv1 =nn.Conv2d(1,64,kernel_size=1) self.se = SEAttention(channel=1) self.cca = CrissCrossAttention(64) self.conv2 = nn.Conv2d(64,1,kernel_size=1) def forward(self, x): x = self.se(x) x = self.conv1(x) x = self.cca(x) x = self.conv2(x) out = self.fc1(x.view(-1, input_size)) out = self.relu(out) out = self.fc2(out) return out model = Net(input_size,hidden_size,num_classes) model.to(device) criterion = nn.CrossEntropyLoss().to(device) optimizer = optim.Adam(model.parameters(),lr=learning_rate) train_loss_list = [] test_loss_list = [] #训练 total_step = len(train_loader) for epoch in range(num_epoch): for i,(images,labels) in enumerate(train_loader): images,labels = images.to(device),labels.to(device) outputs = model(images).to(device) #获取模型分类后的结果 loss = criterion(outputs,labels).to(device) #计算损失 optimizer.zero_grad() #反向传播前,梯度清零 loss.backward() #反向传播 optimizer.step() #更新参数 train_loss_list.append(loss.item()) if (i+1)%100 ==0: print('Epoch[{}/{}],Step[{}/{}],Train Loss:{:.4f}' .format(epoch+1,num_epoch,i+1,total_step,loss.item())) model.eval() with torch.no_grad(): #禁止梯度计算 test_loss = 0.0 for images,labels in test_loader: images, labels = images.to(device), labels.to(device) outputs = model(images).to(device) loss = criterion(outputs,labels).to(device) test_loss +=loss.item()*images.size(0) #累加每个批次总损失得到总损失 test_loss /=len(test_loader.dataset) #整个测试集的平均损失 # 将计算得到的单个平均测试损失值扩展到一个列表中,长度为total_step # 这样做可能是为了在绘图时每个step都有一个对应的测试损失值,尽管实际测试损失在整个epoch内是恒定的 test_loss_list.extend([test_loss]*total_step) #方便可视化 model.train() print("Epoch[{}/{}],Test Loss:{:.4f}".format(epoch+1,num_epoch,test_loss)) plt.plot(train_loss_list,label='Train Loss') plt.plot(test_loss_list,label='Test Loss') plt.title('model loss') plt.xlabel('iterations') plt.ylabel('Loss') plt.legend() plt.show() 1.7 调参对比

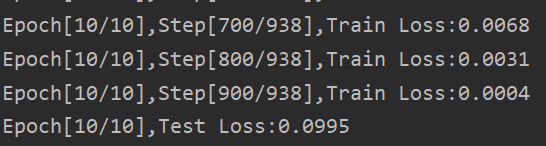

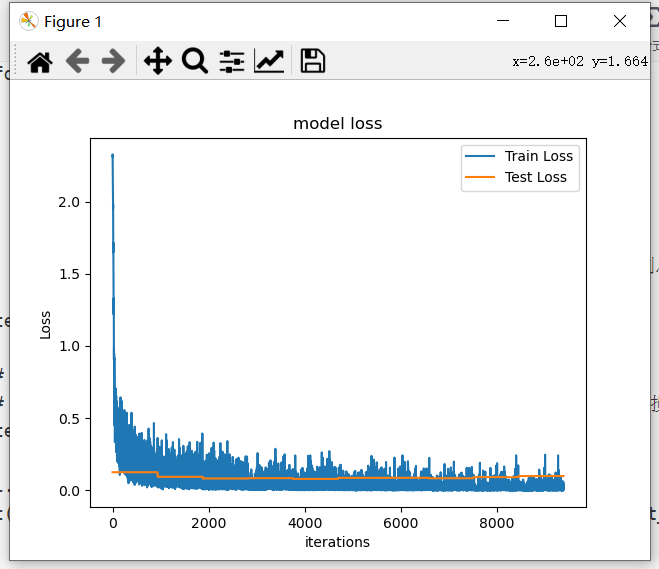

可以看到,该模型原始状态下损失值已经非常小了。

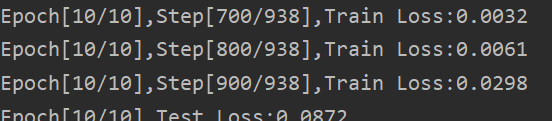

现在我把隐藏层神经元数量从原来的128改为256,学习率进一步减小为0.0005,我们看一下效果:

效果略微提升,但是我们可以看到在后面测试集的表现产生了一些波动,没有之前的模型稳定。