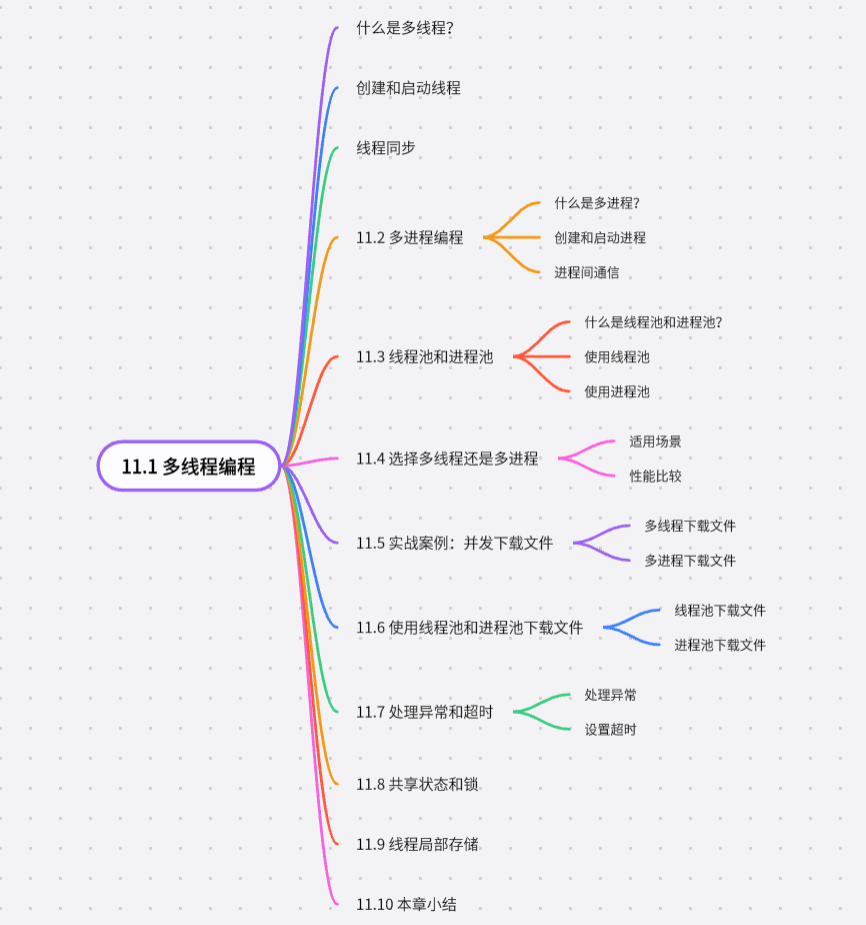

目录

在现代计算中,高效的并发和并行处理是提升程序性能的关键。Python提供了多种机制来实现并发和并行处理,包括多线程、多进程和线程池编程。本章将深入探讨这些概念及其在Python中的实现,帮助您更好地掌握这些技术,编写高效、可扩展的代码。

11.1 多线程编程

什么是多线程?

多线程是一种并发编程技术,它允许程序同时执行多个线程。线程是轻量级的进程,它们共享相同的内存空间,可以在多个处理器核心上并行执行。多线程适用于I/O密集型任务,例如文件操作和网络通信。

创建和启动线程

在Python中,可以使用threading模块创建和管理线程。

示例代码:

import threading def print_numbers(): for i in range(10): print(i) # 创建线程 thread = threading.Thread(target=print_numbers) # 启动线程 thread.start() # 等待线程结束 thread.join() 线程同步

多线程编程中,多个线程可能会访问共享资源,因此需要同步机制来避免竞态条件。Python提供了多种同步原语,例如锁(Lock)、条件变量(Condition)和信号量(Semaphore)。

示例代码:

import threading lock = threading.Lock() counter = 0 def increment_counter(): global counter for _ in range(1000): with lock: counter += 1 threads = [threading.Thread(target=increment_counter) for _ in range(10)] for thread in threads: thread.start() for thread in threads: thread.join() print(f"Final counter value: {counter}") 11.2 多进程编程

什么是多进程?

多进程是一种并行编程技术,它允许程序同时执行多个进程。进程是独立的执行单元,每个进程都有自己的内存空间。多进程适用于CPU密集型任务,例如科学计算和数据处理。

创建和启动进程

在Python中,可以使用multiprocessing模块创建和管理进程。

示例代码:

import multiprocessing def print_numbers(): for i in range(10): print(i) # 创建进程 process = multiprocessing.Process(target=print_numbers) # 启动进程 process.start() # 等待进程结束 process.join() 进程间通信

由于进程之间不共享内存,需要通过进程间通信(IPC)机制来交换数据。常见的IPC机制包括管道(Pipe)和队列(Queue)。

示例代码:

import multiprocessing def producer(queue): for i in range(10): queue.put(i) print(f"Produced {i}") def consumer(queue): while not queue.empty(): item = queue.get() print(f"Consumed {item}") queue = multiprocessing.Queue() producer_process = multiprocessing.Process(target=producer, args=(queue,)) consumer_process = multiprocessing.Process(target=consumer, args=(queue,)) producer_process.start() consumer_process.start() producer_process.join() consumer_process.join() 11.3 线程池和进程池

什么是线程池和进程池?

线程池和进程池是管理和复用多个线程或进程的技术。通过使用池,可以减少创建和销毁线程或进程的开销,提高资源利用率。Python提供了concurrent.futures模块来方便地创建和管理线程池和进程池。

使用线程池

线程池通过管理多个线程来执行任务,适用于I/O密集型任务。

示例代码:

from concurrent.futures import ThreadPoolExecutor def print_numbers(n): for i in range(n): print(i) with ThreadPoolExecutor(max_workers=3) as executor: futures = [executor.submit(print_numbers, 10) for _ in range(3)] for future in futures: future.result() 使用进程池

进程池通过管理多个进程来执行任务,适用于CPU密集型任务。

示例代码:

from concurrent.futures import ProcessPoolExecutor def compute_square(n): return n * n with ProcessPoolExecutor(max_workers=3) as executor: futures = [executor.submit(compute_square, i) for i in range(10)] results = [future.result() for future in futures] print(results) 11.4 选择多线程还是多进程

适用场景

- 多线程:适用于I/O密集型任务,例如文件读写、网络通信等。由于Python的GIL(全局解释器锁)限制,多线程在CPU密集型任务中效率不高。

- 多进程:适用于CPU密集型任务,例如科学计算、数据处理等。多进程可以利用多核CPU的优势,绕过GIL限制。

性能比较

在选择多线程和多进程时,需要权衡任务的性质、系统资源和实现复杂性。

示例代码:性能比较

import time from concurrent.futures import ThreadPoolExecutor, ProcessPoolExecutor def compute_square(n): return n * n numbers = range(10**6) start = time.time() with ThreadPoolExecutor(max_workers=4) as executor: executor.map(compute_square, numbers) print(f"Thread pool time: {time.time() - start:.2f} seconds") start = time.time() with ProcessPoolExecutor(max_workers=4) as executor: executor.map(compute_square, numbers) print(f"Process pool time: {time.time() - start:.2f} seconds") 11.5 实战案例:并发下载文件

通过一个实际案例,展示如何使用多线程和多进程实现并发下载文件。

多线程下载文件

示例代码:

import threading import requests def download_file(url): response = requests.get(url) filename = url.split("/")[-1] with open(filename, 'wb') as file: file.write(response.content) print(f"Downloaded {filename}") urls = [ "http://example.com/file1", "http://example.com/file2", "http://example.com/file3", ] threads = [threading.Thread(target=download_file, args=(url,)) for url in urls] for thread in threads: thread.start() for thread in threads: thread.join() 多进程下载文件

示例代码:

import multiprocessing import requests def download_file(url): response = requests.get(url) filename = url.split("/")[-1] with open(filename, 'wb') as file: file.write(response.content) print(f"Downloaded {filename}") urls = [ "http://example.com/file1", "http://example.com/file2", "http://example.com/file3", ] processes = [multiprocessing.Process(target=download_file, args=(url,)) for url in urls] for process in processes: process.start() for process in processes: process.join() 11.6 使用线程池和进程池下载文件

线程池下载文件

示例代码:

from concurrent.futures import ThreadPoolExecutor import requests def download_file(url): response = requests.get(url) filename = url.split("/")[-1] with open(filename, 'wb') as file: file.write(response.content) print(f"Downloaded {filename}") urls = [ "http://example.com/file1", "http://example.com/file2", "http://example.com/file3", ] with ThreadPoolExecutor(max_workers=3) as executor: executor.map(download_file, urls) 进程池下载文件

示例代码:

from concurrent.futures import ProcessPoolExecutor import requests def download_file(url): response = requests.get(url) filename = url.split("/")[-1] with open(filename, 'wb') as file: file.write(response.content) print(f"Downloaded {filename}") urls = [ "http://example.com/file1", "http://example.com/file2", "http://example.com/file3", ] with ProcessPoolExecutor(max_workers=3) as executor: executor.map(download_file, urls) 11.7 处理异常和超时

在并发和并行编程中,处理异常和设置超时是确保程序健壮性的重要手段。

处理异常

示例代码:

from concurrent.futures import ThreadPoolExecutor, as_completed def risky_task(n): if n == 5: raise ValueError("Something went wrong!") return n * n with ThreadPoolExecutor(max_workers=3) as executor: futures = [executor.submit(risky_task, i) for i in range(10)] for future in as_completed(futures): try: result = future.result() print(f"Result: {result}") except Exception as e: print(f"Exception: {e}") 设置超时

示例代码:

from concurrent.futures import ThreadPoolExecutor, TimeoutError def slow_task(n): import time time.sleep(n) return n with ThreadPoolExecutor(max_workers=3) as executor: future = executor.submit(slow_task, 5) try: result = future.result(timeout=2) print(f"Result: {result}") except TimeoutError: print("Task timed out") 11.8 共享状态和锁

在多线程编程中,共享状态需要同步,以避免竞态条件。可以使用锁(Lock)来保护共享资源。

示例代码:

import threading lock = threading.Lock() shared_counter = 0 def increment_counter(): global shared_counter for _ in range(1000): with lock: shared_counter += 1 threads = [threading.Thread(target=increment_counter) for _ in range(10)] for thread in threads: thread.start() for thread in threads: thread.join() print(f"Final counter value: {shared_counter}") 11.9 线程局部存储

线程局部存储(Thread-Local Storage, TLS)用于在多线程环境中为每个线程提供独立的存储空间。

示例代码:

import threading thread_local = threading.local() def process_data(): thread_local.data = threading.current_thread().name print(f"Processing data for {thread_local.data}") threads = [threading.Thread(target=process_data) for _ in range(5)] for thread in threads: thread.start() for thread in threads: thread.join() 11.10 本章小结

在本章中,我们深入探讨了Python中的多线程、多进程和线程池编程。我们介绍了多线程和多进程的基本概念、创建和管理方法,以及它们的适用场景和性能比较。我们还讨论了线程池和进程池的使用,展示了如何通过实际案例实现并发下载文件。最后,我们探讨了处理异常和超时、共享状态和锁以及线程局部存储等高级主题。

多线程和多进程编程是编写高效并发和并行程序的基础,掌握这些技术可以显著提高程序的性能和可扩展性。希望这篇博客能够帮助您深入理解和应用Python的多线程、多进程和线程池编程。