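I. NFS Storage

Note: the examples in this section need a separate machine to run the NFS server. The NFS deployment steps themselves are omitted here.

NFS is a widely used network file system and a common choice for sharing files among multiple machines, because it is easy to configure, stable and reliable.

NFS also has drawbacks: (1) the server is a single point of failure; (2) it is hard to scale out; (3) its performance is only average.

NFS is therefore best suited to simple scenarios with modest storage requirements, such as test and development environments.

Complete example:

First deploy the NFS service and make sure every Kubernetes node can mount it. You can check this from each node with showmount -e 192.168.100.160. The nodes need the NFS client utilities (e.g. nfs-utils) installed, because the kubelet mounts NFS volumes with the host's mount command.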

[root@aminglinux01 ~]# showmount -e 192.168.100.160

Export list for 192.168.100.160:

/root/nfs *

[root@aminglinux01 ~]#

Define an NFS-based PV

vi nfs-pv.yaml

[root@aminglinux01 ~]# cat nfs-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-storage
  nfs:
    path: /data/nfs2
    server: 192.168.100.160
[root@aminglinux01 ~]#

Create the PV with kubectl apply -f nfs-pv.yaml.

Define the PVC

vi nfs-pvc.yaml

[root@aminglinux01 ~]# cat nfs-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  storageClassName: nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
[root@aminglinux01 ~]# kubectl apply -f nfs-pvc.yaml
persistentvolumeclaim/nfs-pvc created
[root@aminglinux01 ~]#

The PVC binds to nfs-pv because its storageClassName, access mode and requested size match that PV.

Define the Pod

vi nfs-pod.yaml

[root@aminglinux01 ~]# cat nfs-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nfs-pod
spec:
  containers:
    - name: nfs-container
      image: nginx:latest
      volumeMounts:
        - name: nfs-storage
          mountPath: /data
  volumes:
    - name: nfs-storage
      persistentVolumeClaim:
        claimName: nfs-pvc
[root@aminglinux01 ~]# kubectl apply -f nfs-pod.yaml
pod/nfs-pod created
[root@aminglinux01 ~]# kubectl describe pod nfs-pod

Name: nfs-pod

Namespace: default

Priority: 0

Service Account: default

Node: aminglinux03/192.168.100.153

Start Time: Tue, 16 Jul 2024 17:53:58 +0800

Labels: <none>

Annotations: cni.projectcalico.org/containerID: cae85b956d4a3570429db9b11f96d51b258af363c313885d26a9d12ab0715357

cni.projectcalico.org/podIP: 10.18.68.176/32

cni.projectcalico.org/podIPs: 10.18.68.176/32

Status: Running

IP: 10.18.68.176

IPs:

IP: 10.18.68.176

Containers:

nfs-container:

Container ID: containerd://377477b565ff23b752d278289011af378936831a4c9af9f0e3f5aaf6187fed87

Image: nginx:latest

Image ID: docker.io/library/nginx@sha256:67682bda769fae1ccf5183192b8daf37b64cae99c6c3302650f6f8bf5f0f95df

Port: <none>

Host Port: <none>

State: Running

Started: Tue, 16 Jul 2024 18:18:43 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/data from nfs-storage (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-mtjkr (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

nfs-storage:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: nfs-pvc

ReadOnly: false

kube-api-access-mtjkr:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 32m default-scheduler Successfully assigned default/nfs-pod to aminglinux03

Warning FailedMount 10m (x10 over 30m) kubelet Unable to attach or mount volumes: unmounted volumes=[nfs-storage], unattached volumes=[nfs-storage kube-api-access-mtjkr]: timed out waiting for the condition

Warning FailedMount 9m47s (x19 over 32m) kubelet MountVolume.SetUp failed for volume "nfs-pv" : mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t nfs 192.168.100.160:/data/nfs2 /var/lib/kubelet/pods/d89d3ab9-836c-47a0-8b60-c6c953184756/volumes/kubernetes.io~nfs/nfs-pv

Output: mount.nfs: access denied by server while mounting 192.168.100.160:/data/nfs2

[root@aminglinux01 ~]#
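The FailedMount events above end with "mount.nfs: access denied by server while mounting 192.168.100.160:/data/nfs2". This means the NFS server refused that path. A common cause is that the PV's nfs.path is not covered by the server's exports (the showmount output earlier lists only /root/nfs), or that the node is not an allowed client. The pod still reached Running once the mount went through.

Below is a hypothetical sketch; the function name is made up and is not part of any tool. It reads the server and path from a PV and compares them with showmount -e. It treats a path as covered when it equals an exported directory or lies below one. That is only an approximation of how Linux NFS servers behave.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of any tool): check that the NFS path of a PV is exported.
# Needs kubectl access to the cluster and showmount (from nfs-utils) on this machine.
check_pv_export() {
  local pv=$1 server path
  server=$(kubectl get pv "$pv" -o jsonpath='{.spec.nfs.server}')
  path=$(kubectl get pv "$pv" -o jsonpath='{.spec.nfs.path}')
  # showmount -e prints a header line, then "<exported dir> <allowed clients>" per export.
  # Accept the path if it equals an exported dir or lies below one.
  if showmount -e "$server" |
    awk -v p="$path" 'NR > 1 && ($1 == p || index(p, $1 "/") == 1) { found = 1 } END { exit !found }'; then
    echo "OK: $server:$path is covered by an export"
  else
    echo "MISSING: $server:$path is not in the export list of $server" >&2
    return 1
  fi
}

# Usage: check_pv_export nfs-pv
```

If it reports MISSING, fix /etc/exports on the NFS server (or the PV's path) and re-export before recreating the pod.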

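II. The StorageClass API Resource Object

The main purpose of a StorageClass (SC) is to create PVs automatically, so that a PVC can be bound to a PV on demand. Below we create an NFS-based SC to demonstrate this. Using an NFS SC also requires an NFS provisioner, which holds the NFS details (server IP, shared directory, and so on).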
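The StorageClass and the provisioner are linked by a plain name match. The provisioner field of the StorageClass (class.yaml below) has to equal the PROVISIONER_NAME environment variable of the nfs-client-provisioner Deployment. If the names differ, PVCs of that class are never provisioned and stay Pending.

Below is a hypothetical check to run once both objects are applied. It assumes the names used in this section (nfs-client, and nfs-client-provisioner in kube-system). It also assumes the env var is set on the first container of the pod template.

```shell
#!/usr/bin/env bash
# Hypothetical check: does the StorageClass point at the name the provisioner registers?
# Defaults are the names used in this section; pass others as arguments.
check_provisioner_name() {
  local sc=${1:-nfs-client} ns=${2:-kube-system} deploy=${3:-nfs-client-provisioner}
  local want have
  # Name the StorageClass asks for
  want=$(kubectl get storageclass "$sc" -o jsonpath='{.provisioner}')
  # Name the provisioner announces, taken from its PROVISIONER_NAME env var
  # (assumes it is set on the first container of the pod template)
  have=$(kubectl -n "$ns" get deployment "$deploy" \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="PROVISIONER_NAME")].value}')
  if [ -n "$want" ] && [ "$want" = "$have" ]; then
    echo "match: $want"
  else
    echo "mismatch: StorageClass wants '$want', Deployment provides '$have'" >&2
    return 1
  fi
}
```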

GitHub: https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

Download the source:

git clone https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

cd nfs-subdir-external-provisioner/deploy

sed -i 's/namespace: default/namespace: kube-system/' rbac.yaml ## change the namespace to kube-system

kubectl apply -f rbac.yaml ## create the RBAC objects

[root@aminglinux01 ~]# cd nfs-subdir-external-provisioner/deploy
[root@aminglinux01 deploy]# sed -i 's/namespace: default/namespace: kube-system/' rbac.yaml
[root@aminglinux01 deploy]# kubectl apply -f rbac.yaml
serviceaccount/nfs-client-provisioner unchanged
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner unchanged
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner unchanged
role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner unchanged
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner unchanged
[root@aminglinux01 deploy]#

Edit deployment.yaml

[root@aminglinux01 deploy]# cat deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/*/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.100.160   ### NFS server IP
            - name: NFS_PATH
              value: /data/nfs   ### NFS server export path
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.100.160   ### NFS server IP
            path: /data/nfs   ### NFS server export path
[root@aminglinux01 deploy]# kubectl apply -f deployment.yaml
deployment.apps/nfs-client-provisioner configured
[root@aminglinux01 deploy]# kubectl apply -f class.yaml
storageclass.storage.k8s.io/nfs-client unchanged

Example SC YAML

cat class.yaml

[root@aminglinux01 deploy]# cat class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME'
parameters:
  archiveOnDelete: "false"   ### false: delete the volume's directory when the PVC is deleted (true keeps an archived copy)
[root@aminglinux01 deploy]# kubectl get StorageClass nfs-client
NAME         PROVISIONER                                   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
nfs-client   k8s-sigs.io/nfs-subdir-external-provisioner   Delete          Immediate           false                  7d20h
[root@aminglinux01 deploy]# kubectl describe StorageClass nfs-client
Name:            nfs-client
IsDefaultClass:  No
Annotations:     kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"nfs-client"},"parameters":{"archiveOnDelete":"false"},"provisioner":"k8s-sigs.io/nfs-subdir-external-provisioner"}
Provisioner:           k8s-sigs.io/nfs-subdir-external-provisioner
Parameters:            archiveOnDelete=false
AllowVolumeExpansion:  <unset>
MountOptions:          <none>
ReclaimPolicy:         Delete
VolumeBindingMode:     Immediate
Events:                <none>
[root@aminglinux01 deploy]#

With the SC in place, we still need a PVC

vi nfsPvc.yaml

[root@aminglinux01 deploy]# cat nfsPvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfspvc
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 500Mi
[root@aminglinux01 deploy]# kubectl apply -f nfsPvc.yaml
persistentvolumeclaim/nfspvc created
[root@aminglinux01 deploy]# kubectl get PersistentVolumeClaim nfspvc
NAME     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
nfspvc   Bound    pvc-edef8fd1-ab6c-4566-97f7-57627c26101c   500Mi      RWX            nfs-client     6m5s
[root@aminglinux01 deploy]# kubectl describe PersistentVolumeClaim nfspvc
Name:          nfspvc
Namespace:     default
StorageClass:  nfs-client
Status:        Bound
Volume:        pvc-edef8fd1-ab6c-4566-97f7-57627c26101c
Labels:        <none>
Annotations:   pv.kubernetes.io/bind-completed: yes
               pv.kubernetes.io/bound-by-controller: yes
               volume.beta.kubernetes.io/storage-provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
               volume.kubernetes.io/storage-provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
Finalizers:    [kubernetes.io/pvc-protection]
Capacity:      500Mi
Access Modes:  RWX
VolumeMode:    Filesystem
Used By:       nfspod
Events:
  Type    Reason                 Age                    From                         Message
  ----    ------                 ----                   ----                         -------
  Normal  ExternalProvisioning   6m15s (x2 over 6m15s)  persistentvolume-controller  waiting for a volume to be created, either by external provisioner "k8s-sigs.io/nfs-subdir-external-provisioner" or manually created by system administrator
  Normal  Provisioning           6m14s  k8s-sigs.io/nfs-subdir-external-provisioner_nfs-client-provisioner-74fcdfd588-5898r_67df3ef1-fefc-4f5e-9032-0c4263a17061  External provisioner is provisioning volume for claim "default/nfspvc"
  Normal  ProvisioningSucceeded  6m14s  k8s-sigs.io/nfs-subdir-external-provisioner_nfs-client-provisioner-74fcdfd588-5898r_67df3ef1-fefc-4f5e-9032-0c4263a17061  Successfully provisioned volume pvc-edef8fd1-ab6c-4566-97f7-57627c26101c
[root@aminglinux01 deploy]#

Next, create a Pod that uses the PVC

vi nfsPod.yaml

[root@aminglinux01 deploy]# cat nfsPod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nfspod
spec:
  containers:
    - name: nfspod
      image: nginx:latest
      volumeMounts:
        - name: nfspv
          mountPath: "/usr/share/nginx/html"
  volumes:
    - name: nfspv
      persistentVolumeClaim:
        claimName: nfspvc
[root@aminglinux01 deploy]# kubectl apply -f nfsPod.yaml
pod/nfspod created
[root@aminglinux01 deploy]# kubectl describe pod nfspod

Name: nfspod

Namespace: default

Priority: 0

Service Account: default

Node: aminglinux03/192.168.100.153

Start Time: Tue, 16 Jul 2024 23:48:25 +0800

Labels: <none>

Annotations: cni.projectcalico.org/containerID: b81a7c48a39cbcb4acfe42b0c4677b5dd320b63f1735de9ec6a3f11a3ea93a1a

cni.projectcalico.org/podIP: 10.18.68.179/32

cni.projectcalico.org/podIPs: 10.18.68.179/32

Status: Running

IP: 10.18.68.179

IPs:

IP: 10.18.68.179

Containers:

nfspod:

Container ID: containerd://abe29d820c121bac46af4d1341aabeb0d8a30759917389e33ba7ca0619c97e76

Image: nginx:latest

Image ID: docker.io/library/nginx@sha256:67682bda769fae1ccf5183192b8daf37b64cae99c6c3302650f6f8bf5f0f95df

Port: <none>

Host Port: <none>

State: Running

Started: Tue, 16 Jul 2024 23:48:28 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/usr/share/nginx/html from nfspv (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-9xt4f (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

nfspv:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: nfspvc

ReadOnly: false

kube-api-access-9xt4f:
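On the NFS server, nfs-subdir-external-provisioner keeps each dynamically provisioned volume in its own subdirectory of NFS_PATH. Per the project's README, the default name is ${namespace}-${pvcName}-${pvName}. With archiveOnDelete: "false", that directory is deleted together with the PVC.

The sketch below uses hypothetical helper names and assumes that default naming plus NFS_PATH=/data/nfs from deployment.yaml. It computes where the data of nfspvc should live, so you can ls that directory on 192.168.100.160.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: locate a volume created by nfs-subdir-external-provisioner,
# assuming its default directory naming ${namespace}-${pvcName}-${pvName} under NFS_PATH.
nfs_volume_dir() {
  local nfs_path=$1 namespace=$2 pvc=$3 pv=$4
  printf '%s/%s-%s-%s\n' "${nfs_path%/}" "$namespace" "$pvc" "$pv"
}

# Look up the PV bound to a PVC and print the expected directory on the NFS server.
nfs_volume_dir_for_pvc() {
  local pvc=$1 ns=${2:-default} nfs_path=${3:-/data/nfs} pv
  pv=$(kubectl -n "$ns" get pvc "$pvc" -o jsonpath='{.spec.volumeName}')
  [ -n "$pv" ] || { echo "PVC $ns/$pvc is not bound yet" >&2; return 1; }
  nfs_volume_dir "$nfs_path" "$ns" "$pvc" "$pv"
}

# Usage (on a machine with kubectl): nfs_volume_dir_for_pvc nfspvc
```

A file written to /usr/share/nginx/html inside nfspod should then appear in that directory on the NFS server.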

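To summarize:

Pod needs shared storage --> PVC (declares the required size and access mode) --> SC (names the provisioner that creates the PV) --> Provisioner (knows how to access the actual storage) --> NFS server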
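You can read the same chain back from the API for any PVC. Below is a hypothetical helper, not part of kubectl, that uses kubectl's jsonpath output to print PVC -> StorageClass -> provisioner -> PV. If no StorageClass object with the PVC's class name exists, the provisioner part stays empty.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print PVC -> StorageClass -> provisioner -> PV for one PVC.
show_storage_chain() {
  local pvc=$1 ns=${2:-default} sc provisioner pv
  sc=$(kubectl -n "$ns" get pvc "$pvc" -o jsonpath='{.spec.storageClassName}')
  pv=$(kubectl -n "$ns" get pvc "$pvc" -o jsonpath='{.spec.volumeName}')
  provisioner=$(kubectl get storageclass "$sc" -o jsonpath='{.provisioner}')
  echo "PVC $ns/$pvc -> StorageClass $sc -> provisioner $provisioner -> PV ${pv:-<not bound yet>}"
}

# Usage: show_storage_chain nfspvc
```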

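III. Ceph Storage

Ceph is an open, self-healing and self-managing open-source distributed storage system written in C++. It offers high scalability, high performance and high reliability.

Advantages of Ceph

- High scalability: decentralized design, runs on ordinary x86 servers, supports clusters of thousands of storage nodes and scales from terabytes to exabytes.

- High reliability: no single point of failure, multiple data replicas, automatic management and self-healing.

- High performance: instead of the traditional approach of looking up data locations in centralized metadata, Ceph computes placement with the CRUSH algorithm, which spreads data evenly and allows a high degree of parallelism.

- Feature-rich: Ceph is a unified storage system that offers a block interface (RBD), a file interface (CephFS) and an object interface (RadosGW), so it suits many different use cases.

Note: Kubernetes can use Ceph as storage in two ways. One is to deploy Ceph inside Kubernetes with the help of the Rook tool. The other is to use an external Ceph cluster, which means deploying Ceph separately. The rest of this section uses the second approach.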

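Building the Ceph cluster

1) Preparation

| No. | Hostname | IP |
|---|---|---|
| 1 | ceph1 | 192.168.100.161 |
| 2 | ceph2 | 192.168.100.162 |
| 3 | ceph3 | 192.168.100.163 |

Disable SELinux and firewalld, and configure the hostname and /etc/hosts on every machine. Also give each machine at least one extra disk (easy to add as a virtual disk in VMware), and leave it unformatted.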
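The session below shows only the firewalld, hostname and timezone commands. The SELinux and /etc/hosts parts could look like the following sketch. The function names are hypothetical, and the host names follow the table (ceph1 to ceph3). Host name lookups in /etc/hosts are case-insensitive, so these entries also resolve the Ceph1 to Ceph3 spellings used in the transcript.

```shell
#!/usr/bin/env bash
# Sketch of the remaining preparation steps (run as root on each of the three nodes).

# Put SELinux into permissive mode now and disable it permanently (full effect after reboot).
disable_selinux() {
  setenforce 0 || true   # fails harmlessly if SELinux is already disabled
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
}

# Append the Ceph nodes to a hosts file (default /etc/hosts), skipping names already listed.
add_ceph_hosts() {
  local hosts_file=${1:-/etc/hosts} entry name
  for entry in "192.168.100.161 ceph1" "192.168.100.162 ceph2" "192.168.100.163 ceph3"; do
    name=${entry#* }
    grep -qiw -- "$name" "$hosts_file" || echo "$entry" >> "$hosts_file"
  done
}

# Usage: disable_selinux; add_ceph_hosts
```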

[root@bogon ~]# systemctl disable --now firewalld
Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.
Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
[root@bogon ~]# hostnamectl set-hostname Ceph1
[root@Ceph1 ~]# timedatectl set-timezone Asia/Shanghai

Install the chrony time synchronization service on all machines

yum install -y chrony

systemctl start chronyd

systemctl enable chronyd
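Time synchronization matters here: Ceph monitors raise a warning when their clocks drift apart. The ceph -s output later in this section does report "clock skew detected on mon.Ceph2, mon.Ceph3". Running chronyc tracking shows whether chronyd is synchronised.

The helper below has a hypothetical name. It reads that output and succeeds only when chronyd reports "Leap status : Normal". The loop assumes root SSH access to ceph1 to ceph3.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `chronyc tracking` output on stdin; exit 0 only if chronyd
# reports the clock as synchronised ("Leap status : Normal").
chrony_synced() {
  awk -F': *' '/^Leap status/ { status = $2 } END { exit !(status == "Normal") }'
}

# Check every node (assumes root SSH access to ceph1..ceph3).
check_all_clocks() {
  local h
  for h in ceph1 ceph2 ceph3; do
    if ssh "root@$h" chronyc tracking | chrony_synced; then
      echo "$h: synchronised"
    else
      echo "$h: NOT synchronised (try: chronyc sources -v, chronyc makestep)"
    fi
  done
}
```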

Configure the yum repository (on ceph1)

vi /etc/yum.repos.d/ceph.repo # with the following content

cat /etc/yum.repos.d/ceph.repo

[ceph]
name=ceph
baseurl=http://mirrors.aliyun.com/ceph/rpm-pacific/el8/x86_64/
gpgcheck=0
priority=1

[ceph-noarch]
name=cephnoarch
baseurl=http://mirrors.aliyun.com/ceph/rpm-pacific/el8/noarch/
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-pacific/el8/SRPMS
gpgcheck=0
priority=1

Install docker-ce on all machines (cephadm runs the Ceph services as containers)

First install the yum-utils tool

yum install -y yum-utils

Add Docker's official yum repository (skip this if you have already done it)

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Install docker-ce

yum install -y docker-ce

Start the service

systemctl start docker

systemctl enable docker

Install python3 and lvm2 on all machines (all three)

yum install -y python3 lvm2

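2) Install cephadm (on ceph1)

yum install -y cephadm

3) Deploy Ceph with cephadm (on ceph1)

cephadm bootstrap --mon-ip 192.168.100.161

Note the user name and password printed at the end of the output:

Ceph Dashboard is now available at:
URL: https://Ceph1:8443/
User: admin
Password: cpbyyxt86a

4) Access the dashboard

https://192.168.100.161:8443

After changing the password, log in to the dashboard with the new one.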

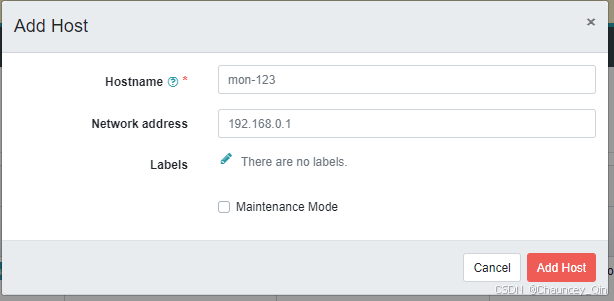

5) Add hosts

First enter the ceph shell (on ceph1)

cephadm shell ## opens a shell inside a container with the Ceph CLI tools

Export the cluster's SSH public key (cephadm bootstrap already generated the key pair)

[ceph: root@ceph1 /]# ceph cephadm get-pub-key > ~/ceph.pub

[root@Ceph1 ~]# cephadm shell
Inferring fsid f501f922-43a9-11ef-b210-000c2990e43b
Using recent ceph image quay.io/ceph/ceph@sha256:f15b41add2c01a65229b0db515d2dd57925636ea39678ccc682a49e2e9713d98
[ceph: root@Ceph1 /]# ceph cephadm get-pub-key > ~/ceph.pub

Install the key on the other two machines for password-less login

[ceph: root@ceph1 /]# ssh-copy-id -f -i ~/ceph.pub root@ceph2

[ceph: root@ceph1 /]# ssh-copy-id -f -i ~/ceph.pub root@ceph3

[ceph: root@Ceph1 /]# ssh-copy-id -f -i ~/ceph.pub root@Ceph2
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/ceph.pub"
The authenticity of host 'ceph2 (192.168.100.162)' can't be established.
ECDSA key fingerprint is SHA256:QL7GAuP7XtniiwJbCT7NbC1sBsUWR+giTILzhYD8+/E.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
root@ceph2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@Ceph2'"
and check to make sure that only the key(s) you wanted were added.

[ceph: root@Ceph1 /]# ssh-copy-id -f -i ~/ceph.pub root@Ceph3
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/ceph.pub"
The authenticity of host 'ceph3 (192.168.100.163)' can't be established.
ECDSA key fingerprint is SHA256:QTA1LDrVstoSNuCgZavfi8tWh7X9zMowsSm4QqA9wIk.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
root@ceph3's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@Ceph3'"
and check to make sure that only the key(s) you wanted were added.

[ceph: root@Ceph1 /]#

Then add the hosts in the dashboard in your browser.
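Hosts can also be added from the ceph shell with ceph orch host add <hostname> <address>. The sketch below assumes that hostname on the other two nodes reports Ceph2 and Ceph3, as in this transcript, because cephadm expects the names to match. It also assumes the key above has already been copied. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: register the other two nodes with the orchestrator (run inside `cephadm shell`).
# Assumes their hostnames are Ceph2/Ceph3 as in this transcript and that the cluster's
# public key has already been copied to them with ssh-copy-id.
add_ceph_hosts_cli() {
  local entry host ip
  for entry in "Ceph2 192.168.100.162" "Ceph3 192.168.100.163"; do
    read -r host ip <<< "$entry"   # split "<hostname> <ip>"
    ceph orch host add "$host" "$ip" || return 1
  done
  ceph orch host ls   # all three hosts should now be listed
}
```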

6) Create the OSDs (in the ceph shell on ceph1)

Assume the new disk added to each of the three machines is /dev/sda

ceph orch daemon add osd Ceph1:/dev/sda

ceph orch daemon add osd Ceph2:/dev/sda

ceph orch daemon add osd Ceph3:/dev/sda

[ceph: root@Ceph1 /]# ceph orch daemon add osd Ceph1:/dev/sda
Created no osd(s) on host Ceph1; already created?
[ceph: root@Ceph1 /]# ceph orch daemon add osd Ceph2:/dev/sda
Created osd(s) 1 on host 'Ceph2'
[ceph: root@Ceph1 /]# ceph orch daemon add osd Ceph3:/dev/sda
Created osd(s) 2 on host 'Ceph3'

On Ceph1 nothing new was created because an OSD already existed on /dev/sda. The other two hosts got OSDs 1 and 2.

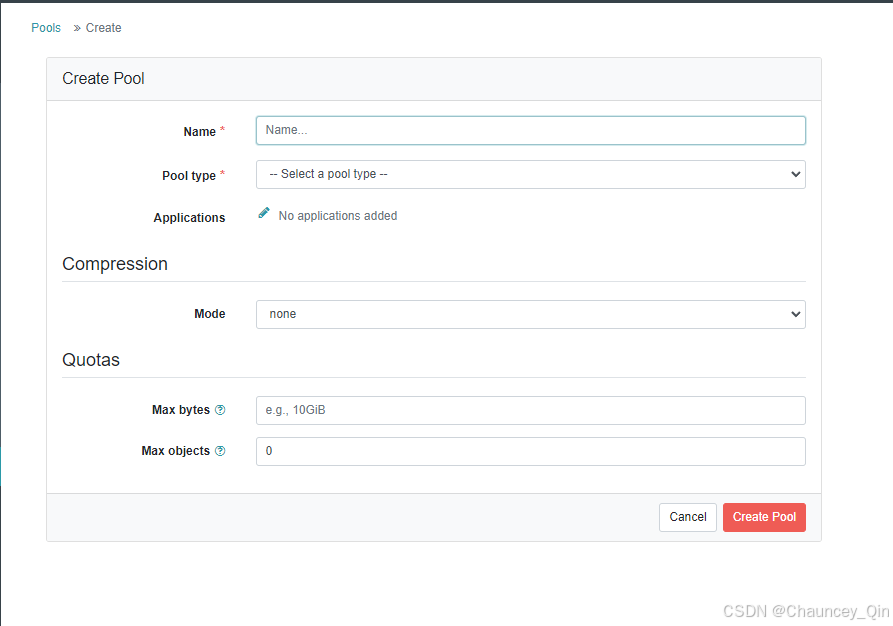

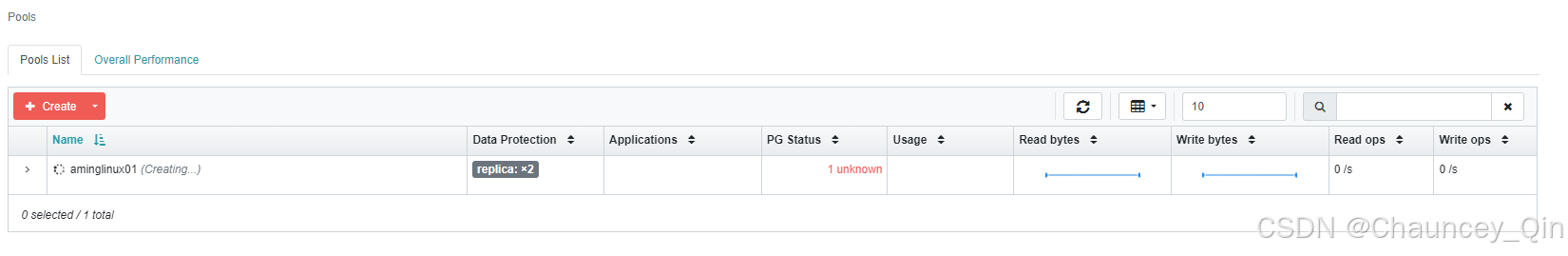

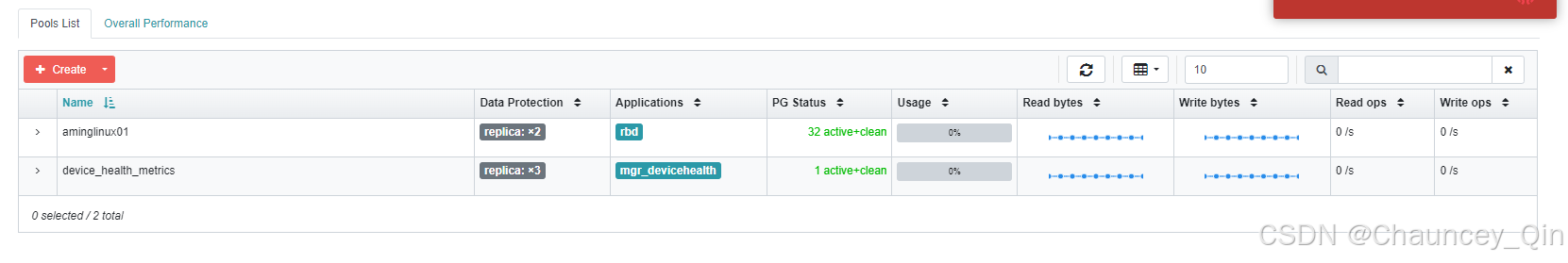

7) Create a pool
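No command is given for this step in the original. The later steps use a pool named aminglinux01, and ceph -s afterwards reports 2 pools. From the ceph shell, such a pool could be created as in this sketch. It omits pg_num so the cluster defaults and the PG autoscaler decide it. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: create the pool used later in this section (run inside `cephadm shell`).
# pg_num is omitted, so the cluster defaults / PG autoscaler decide it.
create_rbd_pool() {
  local pool=${1:-aminglinux01}
  if ceph osd pool ls | grep -qx -- "$pool"; then
    echo "pool $pool already exists"
    return 0
  fi
  ceph osd pool create "$pool"
}
```

Steps 9 and 10 below then mark the pool for RBD use and initialize it.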

8) Check the cluster status

ceph -s

[ceph: root@Ceph1 /]# ceph -s
  cluster:
    id:     f501f922-43a9-11ef-b210-000c2990e43b
    health: HEALTH_WARN
            clock skew detected on mon.Ceph2, mon.Ceph3

  services:
    mon: 3 daemons, quorum Ceph1,Ceph2,Ceph3 (age 10m)
    mgr: Ceph2.nhhvbe(active, since 10m), standbys: Ceph1.nqobph
    osd: 3 osds: 3 up (since 2m), 3 in (since 2m)

  data:
    pools:   2 pools, 33 pgs
    objects: 0 objects, 0 B
    usage:   871 MiB used, 14 GiB / 15 GiB avail
    pgs:     33 active+clean

[ceph: root@Ceph1 /]#

List the disks

ceph orch device ls

[ceph: root@Ceph1 /]# ceph orch device ls
HOST   PATH      TYPE  DEVICE ID                              SIZE   AVAILABLE  REFRESHED  REJECT REASONS
Ceph1  /dev/sda  hdd                                          5120M             6m ago     Has a FileSystem, Insufficient space (<10 extents) on vgs, LVM detected
Ceph1  /dev/sr0  hdd   VMware_IDE_CDR10_10000000000000000001  2569M             6m ago     Has a FileSystem, Insufficient space (<5GB)
Ceph2  /dev/sda  hdd                                          5120M             3m ago     Has a FileSystem, Insufficient space (<10 extents) on vgs, LVM detected
Ceph2  /dev/sr0  hdd   VMware_IDE_CDR10_10000000000000000001  2569M             3m ago     Has a FileSystem, Insufficient space (<5GB)
Ceph3  /dev/sda  hdd                                          5120M             2m ago     Has a FileSystem, Insufficient space (<10 extents) on vgs, LVM detected
[ceph: root@Ceph1 /]#

None of the devices is available any more. Each /dev/sda now holds an OSD (hence "LVM detected"), and /dev/sr0 is the VMware virtual CD-ROM drive.

9) Enable the rbd application on the aminglinux01 pool

ceph osd pool application enable aminglinux01 rbd

[ceph: root@Ceph1 /]# ceph osd pool application enable aminglinux01 rbd
enabled application 'rbd' on pool 'aminglinux01'
[ceph: root@Ceph1 /]#

10) Initialize the pool

[ceph: root@Ceph1 /]# rbd pool init aminglinux01

[ceph: root@Ceph1 /]#

IV. Using Ceph from Kubernetes

1) Get the Ceph cluster information and the admin user's key (on the Ceph side)

# get the cluster information

[ceph: root@Ceph1 /]# ceph mon dump
epoch 3
fsid f501f922-43a9-11ef-b210-000c2990e43b   ## this value is used later
last_changed 2024-07-16T19:48:11.564819+0000
created 2024-07-16T19:32:30.484938+0000
min_mon_release 16 (pacific)
election_strategy: 1
0: [v2:192.168.100.161:3300/0,v1:192.168.100.161:6789/0] mon.Ceph1
1: [v2:192.168.100.162:3300/0,v1:192.168.100.162:6789/0] mon.Ceph2
2: [v2:192.168.100.163:3300/0,v1:192.168.100.163:6789/0] mon.Ceph3
dumped monmap epoch 3
[ceph: root@Ceph1 /]#

# get the admin user's key

[ceph: root@Ceph1 /]# ceph auth get-key client.admin ; echo
AQDNypZmFQmGNhAAbkbd5T9c55nWzJBmpDk9DA==   # this value is used later
[ceph: root@Ceph1 /]#

2) Download and import the images

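Download the required images in advance, so that containers are not slowed down or blocked by slow or failing image pulls when they start. You can download them on one node and copy them to the others.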

# download the image (on one node only)

wget -P /tmp/ https://d.frps.cn/file/tools/ceph-csi/k8s_1.24_ceph-csi.tar

# copy it to the other nodes

scp /tmp/k8s_1.24_ceph-csi.tar aminglinux02:/tmp/

scp /tmp/k8s_1.24_ceph-csi.tar aminglinux03:/tmp/

# import the image (on every k8s node)

ctr -n k8s.io images import /tmp/k8s_1.24_ceph-csi.tar
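The copy and import steps can also be combined into one loop, which helps when there are more nodes. This sketch runs on the node that downloaded the archive. It assumes password-less SSH to the other k8s nodes (aminglinux02 and aminglinux03, as in the scp commands) and that the archive is in /tmp. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: import the ceph-csi image archive locally, then copy and import it on the other
# nodes. Assumes password-less SSH as root to aminglinux02/aminglinux03.
distribute_csi_images() {
  local tarball=${1:-/tmp/k8s_1.24_ceph-csi.tar} node
  ctr -n k8s.io images import "$tarball" || return 1   # this node
  for node in aminglinux02 aminglinux03; do
    scp "$tarball" "$node:/tmp/" || return 1
    ssh "$node" ctr -n k8s.io images import "/tmp/$(basename "$tarball")" || return 1
  done
}
```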

3) Create the Ceph provisioner

Create a ceph directory; all the YAML files that follow go into it

mkdir ceph

cd ceph
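The clusterID and monitors in csi-config-map.yaml are the fsid and the v1 (port 6789) monitor addresses from the ceph mon dump output in step 1. Below is a hypothetical helper that derives the config.json value directly from that output, so the two cannot drift apart. The usage line assumes root SSH to ceph1 and uses cephadm shell -- <command> to run a single command in the Ceph container.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn `ceph mon dump` output (on stdin) into the config.json value
# of csi-config-map.yaml: the fsid becomes clusterID, and each monitor's v1 address
# (port 6789) goes into "monitors".
mon_dump_to_csi_config() {
  awk '
    $1 == "fsid" { fsid = $2 }
    match($0, /v1:[0-9.]+:[0-9]+/) {
      addr = substr($0, RSTART + 3, RLENGTH - 3)   # drop the "v1:" prefix
      mons = mons (mons == "" ? "" : ", ") "\"" addr "\""
    }
    END { printf "[{\"clusterID\": \"%s\", \"monitors\": [%s]}]\n", fsid, mons }
  '
}

# Usage: ssh root@192.168.100.161 cephadm shell -- ceph mon dump | mon_dump_to_csi_config
```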

Create secret.yaml

[root@aminglinux01 ceph]# cat secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: default
stringData:
  userID: admin
  userKey: AQDNypZmFQmGNhAAbkbd5T9c55nWzJBmpDk9DA==   # the key obtained above
[root@aminglinux01 ceph]#

Create csi-config-map.yaml

[root@aminglinux01 ceph]# cat csi-config-map.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: "ceph-csi-config"
data:
  config.json: |-
    [
      {
        "clusterID": "f501f922-43a9-11ef-b210-000c2990e43b",
        "monitors": [
          "192.168.100.161:6789",
          "192.168.100.162:6789",
          "192.168.100.163:6789"
        ]
      }
    ]
[root@aminglinux01 ceph]#

Create ceph-conf.yaml

[root@aminglinux01 ceph]# cat ceph-conf.yaml
apiVersion: v1
kind: ConfigMap
data:
  ceph.conf: |
    [global]
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
  # keyring is a required key and its value should be empty
  keyring: |
metadata:
  name: ceph-config
[root@aminglinux01 ceph]#

Create csi-kms-config-map.yaml (its config is empty)

[root@aminglinux01 ceph]# cat csi-kms-config-map.yaml
---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    {}
metadata:
  name: ceph-csi-encryption-kms-config
[root@aminglinux01 ceph]#

Download the remaining RBAC and provisioner YAML files

wget https://d.frps.cn/file/tools/ceph-csi/csi-provisioner-rbac.yaml

wget https://d.frps.cn/file/tools/ceph-csi/csi-nodeplugin-rbac.yaml

wget https://d.frps.cn/file/tools/ceph-csi/csi-rbdplugin.yaml

wget https://d.frps.cn/file/tools/ceph-csi/csi-rbdplugin-provisioner.yaml

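Apply all the YAML files (note: run this from inside the ceph directory)

for f in *.yaml; do echo "$f"; kubectl apply -f "$f"; done

To remove everything again later, run the same loop with delete:

for f in *.yaml; do echo "$f"; kubectl delete -f "$f"; done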

[root@aminglinux01 ceph]# for f in `ls *.yaml`; do echo $f; kubectl apply -f $f; done
ceph-conf.yaml
configmap/ceph-config created
csi-config-map.yaml
configmap/ceph-csi-config created
csi-kms-config-map.yaml
configmap/ceph-csi-encryption-kms-config created
csi-nodeplugin-rbac.yaml
serviceaccount/rbd-csi-nodeplugin created
clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created
clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created
csi-provisioner-rbac.yaml
serviceaccount/rbd-csi-provisioner created
clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created
role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created
rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created
csi-rbdplugin-provisioner.yaml
service/csi-rbdplugin-provisioner created
deployment.apps/csi-rbdplugin-provisioner created
csi-rbdplugin.yaml
daemonset.apps/csi-rbdplugin created
service/csi-metrics-rbdplugin created
secret.yaml
secret/csi-rbd-secret created
[root@aminglinux01 ceph]#

Check the provisioner pods; their status should be Running
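Instead of re-running kubectl get pods, you can block until the pods are Ready with kubectl wait. The label values in this sketch are an assumption based on the upstream ceph-csi manifests: app=csi-rbdplugin-provisioner for the Deployment and app=csi-rbdplugin for the DaemonSet. Confirm them with kubectl get pods --show-labels. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: block until the ceph-csi RBD pods in the current namespace are Ready.
# The app=... labels are an assumption based on the upstream ceph-csi manifests;
# confirm them with: kubectl get pods --show-labels
wait_for_rbd_csi() {
  local timeout=${1:-180s} app
  for app in csi-rbdplugin-provisioner csi-rbdplugin; do
    kubectl wait --for=condition=Ready pod -l "app=$app" --timeout="$timeout" || return 1
  done
  kubectl get pods -o wide -l 'app in (csi-rbdplugin-provisioner, csi-rbdplugin)'
}
```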

4) Create the StorageClass

Create ceph-sc.yaml on the k8s side

[root@aminglinux01 ceph]# cat ceph-sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc   # StorageClass name
provisioner: rbd.csi.ceph.com   # CSI driver
parameters:
  clusterID: f501f922-43a9-11ef-b210-000c2990e43b   # Ceph cluster ID (fsid)
  pool: aminglinux01   # RBD pool
  imageFeatures: layering   # RBD image features
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
reclaimPolicy: Delete   # reclaim policy for the dynamically created PVs
allowVolumeExpansion: true   # allow PVCs of this class to be expanded
mountOptions:   # PVs created by this StorageClass are mounted with these options
  - discard
[root@aminglinux01 ceph]#

## apply the YAML

kubectl apply -f ceph-sc.yaml

[root@aminglinux01 ceph]# kubectl apply -f ceph-sc.yaml
storageclass.storage.k8s.io/csi-rbd-sc created
[root@aminglinux01 ceph]#

5) Create the PVC

Create ceph-pvc.yaml on the k8s side

[root@aminglinux01 ceph]# cat ceph-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-pvc   # PVC name
spec:
  accessModes:
    - ReadWriteOnce   # access mode
  resources:
    requests:
      storage: 1Gi   # requested size
  storageClassName: csi-rbd-sc
[root@aminglinux01 ceph]#

# apply the YAML

kubectl apply -f ceph-pvc.yaml

[root@aminglinux01 ceph]# kubectl apply -f ceph-pvc.yaml
persistentvolumeclaim/ceph-pvc created
[root@aminglinux01 ceph]#

Check the PVC status; STATUS must be Bound
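Instead of re-running kubectl get pvc until the claim binds, you can poll its phase in a script. Below is a hypothetical helper; the name and retry defaults are made up. It checks every 2 seconds and gives up after the given number of tries.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a PVC until its phase is Bound, giving up after N tries.
wait_pvc_bound() {
  local pvc=$1 tries=${2:-30} phase i
  for ((i = 0; i < tries; i++)); do
    phase=$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      echo "$pvc is Bound"
      return 0
    fi
    sleep 2
  done
  echo "$pvc still ${phase:-unknown} after $tries tries" >&2
  return 1
}

# Usage: wait_pvc_bound ceph-pvc
```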

[root@aminglinux01 ceph]# kubectl get pvc
NAME                    STATUS    VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS    AGE
ceph-pvc                Bound     pvc-67a82d8a-43c9-4609-95c5-6ae097daedb9   1Gi        RWO            csi-rbd-sc      54s
local-pvc               Bound     local-pv                                   5Gi        RWO            local-storage   39h
nfs-pvc                 Bound     nfs-pv                                     5Gi        RWX            nfs-storage     27h
nfspvc                  Bound     pvc-edef8fd1-ab6c-4566-97f7-57627c26101c   500Mi      RWX            nfs-client      21h
redis-pvc-redis-sts-0   Bound     pvc-402daec2-9527-4a53-a6cb-e1d18c98f3d4   500Mi      RWX            nfs-client      8d
redis-pvc-redis-sts-1   Bound     pvc-bb317d2c-ef72-47a0-a8e2-f7704f60096d   500Mi      RWX            nfs-client      8d
testpvc                 Pending                                                                        test-storage    41h
[root@aminglinux01 ceph]#

6) Create a Pod that uses the Ceph storage

[root@aminglinux01 ceph]# cat ceph-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: ceph-pod
spec:
  containers:
    - name: ceph-ng
      image: nginx:latest
      volumeMounts:
        - name: ceph-mnt
          mountPath: /mnt
          readOnly: false
  volumes:
    - name: ceph-mnt
      persistentVolumeClaim:
        claimName: ceph-pvc
[root@aminglinux01 ceph]# kubectl apply -f ceph-pod.yaml
pod/ceph-pod created
[root@aminglinux01 ceph]#

Check the PV

[root@aminglinux01 ceph]# kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS     CLAIM                           STORAGECLASS    REASON   AGE
local-pv                                   5Gi        RWO            Retain           Bound      default/local-pvc               local-storage            39h
nfs-pv                                     5Gi        RWX            Retain           Bound      default/nfs-pvc                 nfs-storage              27h
pvc-402daec2-9527-4a53-a6cb-e1d18c98f3d4   500Mi      RWX            Delete           Bound      default/redis-pvc-redis-sts-0   nfs-client               8d
pvc-67a82d8a-43c9-4609-95c5-6ae097daedb9   1Gi        RWO            Delete           Bound      default/ceph-pvc                csi-rbd-sc               4m7s
pvc-bb317d2c-ef72-47a0-a8e2-f7704f60096d   500Mi      RWX            Delete           Bound      default/redis-pvc-redis-sts-1   nfs-client               8d
pvc-edef8fd1-ab6c-4566-97f7-57627c26101c   500Mi      RWX            Delete           Bound      default/nfspvc                  nfs-client               21h
testpv                                     500Mi      RWO            Retain           Released   default/testpvc                 test-storage             41h
[root@aminglinux01 ceph]#

Check the RBD image on the Ceph side

[ceph: root@Ceph1 /]# rbd ls aminglinux01
csi-vol-e8aeb725-1e74-42e3-a61b-8020f76d5b1d
[ceph: root@Ceph1 /]#

Check the mount inside the Pod

[root@aminglinux01 ceph]# kubectl exec -it ceph-pod -- df
Filesystem          1K-blocks    Used Available Use% Mounted on
overlay              17811456 9963716   7847740  56% /
tmpfs                   65536       0     65536   0% /dev
tmpfs                 1860440       0   1860440   0% /sys/fs/cgroup
/dev/rbd0              996780      24    980372   1% /mnt
/dev/mapper/rl-root  17811456 9963716   7847740  56% /etc/hosts
shm                     65536       0     65536   0% /dev/shm
tmpfs                 3618480      12   3618468   1% /run/secrets/kubernetes.io/serviceaccount
tmpfs                 1860440       0   1860440   0% /proc/acpi
tmpfs                 1860440       0   1860440   0% /proc/scsi
tmpfs                 1860440       0   1860440   0% /sys/firmware
[root@aminglinux01 ceph]#
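The /dev/rbd0 line shows that /mnt in the pod is backed by a kernel-mapped RBD image, namely the csi-vol-... image listed by rbd ls. For scripted checks, a small hypothetical helper can pick the device for a given mount point out of df output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `df` output on stdin and print the device mounted at "$1".
device_for_mount() {
  awk -v m="$1" 'NR > 1 && $NF == m { print $1 }'
}

# Usage (no -it, because the output is piped):
#   kubectl exec ceph-pod -- df | device_for_mount /mnt
```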