Day07: ES cluster encryption, Kibana RBAC in practice, ZooKeeper cluster setup, basic ZooKeeper management, and a hands-on Kafka single-node deployment

- 0、昨日内容回顾:

- 1、基于nginx的反向代理控制访问kibana

- 2、配置ES集群TSL认证:

- 3、配置kibana连接ES集群

- 4、配置filebeat连接ES集群

- 5、配置logstash连接ES集群

- 6、自定义角色使用logstash组件写入数据到ES集群

- 7、部署zookeeper单点

- 8、zookeeper的命令行基本管理

- 9、zookeeper集群部署

- 10、编写zk的集群管理脚本

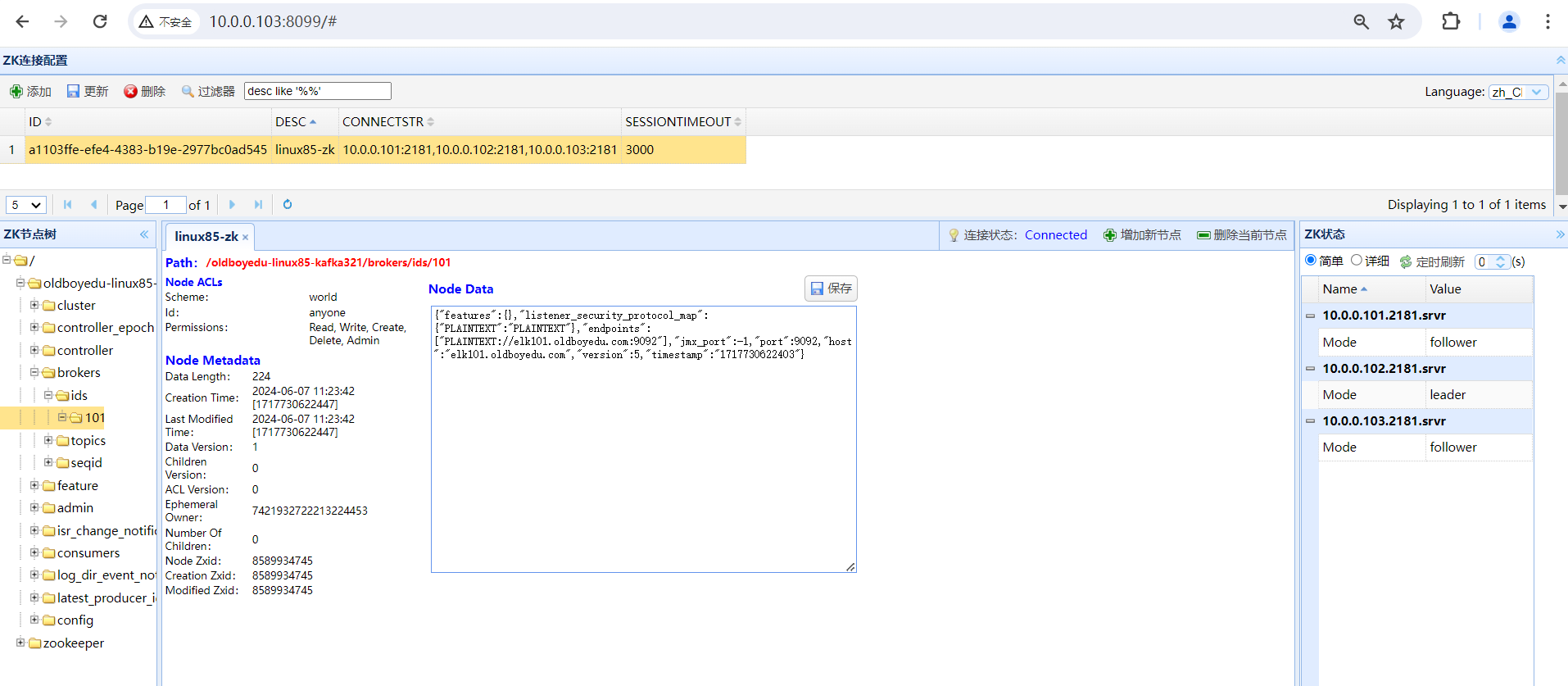

- 11、使用zkWeb管理zookeeper集群

- 12、快速搭建kafka单点环境

0、昨日内容回顾:

filebeat多实例

logstash的多实例

logstash的分支语法

logstash的pipeline

logstash的filter插件之mutate,useragent

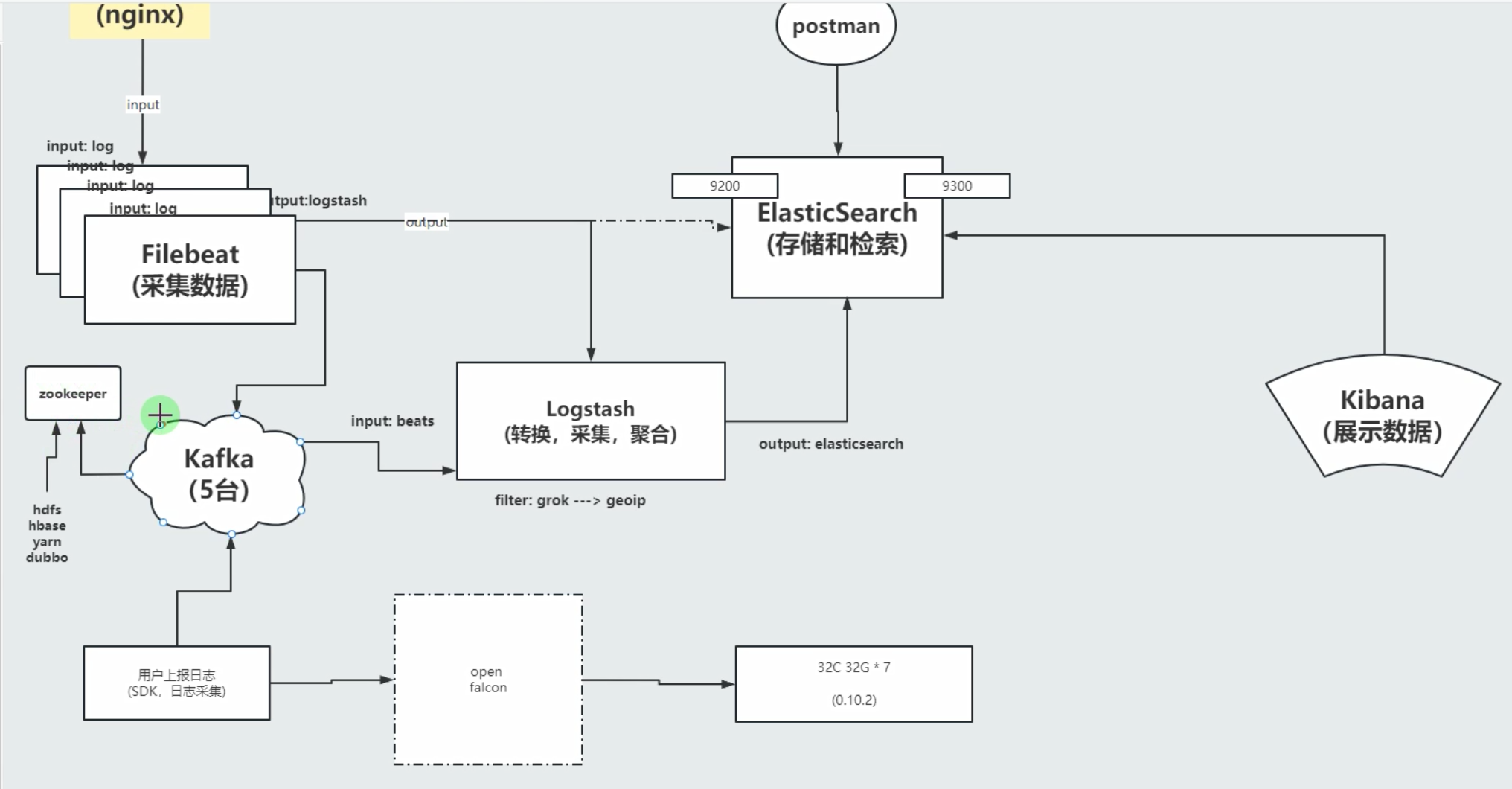

ELFK架构采集日志写入ES集群,并使用kibana出图展示

- map

- 可视化库

- dashboard

filebeat的模块使用

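1. Controlling access to Kibana with an nginx reverse proxy

(1) Deploy the nginx service

Omitted; see the earlier notes.

(2) Write the nginx configuration file

    cat > /etc/nginx/conf.d/kibana.conf <<'EOF'
    server {
        listen 80;
        server_name kibana.oldboyedu.com;

        location / {
            proxy_pass http://10.0.0.103:5601$request_uri;
            auth_basic "oldboyedu kibana web!";
            # Relative to the nginx configuration directory, i.e. /etc/nginx/conf/htpasswd
            auth_basic_user_file conf/htpasswd;
        }
    }
    EOF

(3) Create the account file

    mkdir -pv /etc/nginx/conf
    yum -y install httpd-tools
    # -c creates the file, -b takes the password from the command line
    htpasswd -c -b /etc/nginx/conf/htpasswd admin oldboyedu

(4) Check the configuration and reload nginx

    nginx -t
    systemctl reload nginx

(5) Access Kibana through nginx to verify

As shown in the figure below.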
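If `httpd-tools` is not available, an entry in the same format can be produced with `openssl passwd -apr1`, which emits the Apache MD5 (`$apr1$`) hash that nginx's `auth_basic_user_file` accepts. A minimal sketch, assuming `openssl` is installed; `make_htpasswd_line` is a hypothetical helper name, not part of the original setup:

```shell
#!/bin/bash
# Hypothetical helper: print one "user:hash" line for nginx auth_basic.
# Assumes openssl is installed; -apr1 selects the Apache MD5 ($apr1$) scheme.
make_htpasswd_line() {
    local user="$1" pass="$2"
    if [ -z "$user" ] || [ -z "$pass" ]; then
        echo "usage: make_htpasswd_line <user> <password>" >&2
        return 1
    fi
    printf '%s:%s\n' "$user" "$(openssl passwd -apr1 "$pass")"
}
```

For example, `make_htpasswd_line admin oldboyedu > /etc/nginx/conf/htpasswd` writes such an entry. Afterwards `curl -I http://kibana.oldboyedu.com/` should get `401 Unauthorized` from nginx, while `curl -I -u admin:oldboyedu http://kibana.oldboyedu.com/` is passed through to Kibana (assuming the name resolves to the nginx host, e.g. via /etc/hosts).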

2、配置ES集群TSL认证:

(1)elk101节点生成证书文件

cd /oldboyedu/softwares/es7/elasticsearch-7.17.5/ ./bin/elasticsearch-certutil cert -out config/elastic-certificates.p12 -pass "" --days 3650 (2)elk101节点为证书文件修改属主和属组

chown oldboyedu:oldboyedu config/elastic-certificates.p12 (3)elk101节点同步证书文件到其他节点

data_rsync.sh `pwd`/config/elastic-certificates.p12 (4)elk101节点修改ES集群的配置文件

vim /oldboyedu/softwares/es7/elasticsearch-7.17.5/config/elasticsearch.yml ... cluster.name: oldboyedu-linux85-binary path.data: /oldboyedu/data/es7 path.logs: /oldboyedu/logs/es7 network.host: 0.0.0.0 discovery.seed_hosts: ["elk101.oldboyedu.com","elk102.oldboyedu.com","elk103.oldboyedu.com"] cluster.initial_master_nodes: ["elk103.oldboyedu.com"] reindex.remote.whitelist: "10.0.0.*:19200" node.data: true node.master: true # 在最后一行添加以下内容 xpack.security.enabled: true xpack.security.transport.ssl.enabled: true xpack.security.transport.ssl.verification_mode: certificate xpack.security.transport.ssl.keystore.path: elastic-certificates.p12 xpack.security.transport.ssl.truststore.path: elastic-certificates.p12 (5)elk101节点同步ES配置文件到其他节点

data_rsync.sh `pwd`/config/elasticsearch.yml (6)所有节点重启ES集群

systemctl restart es7 (7)生成随机密码

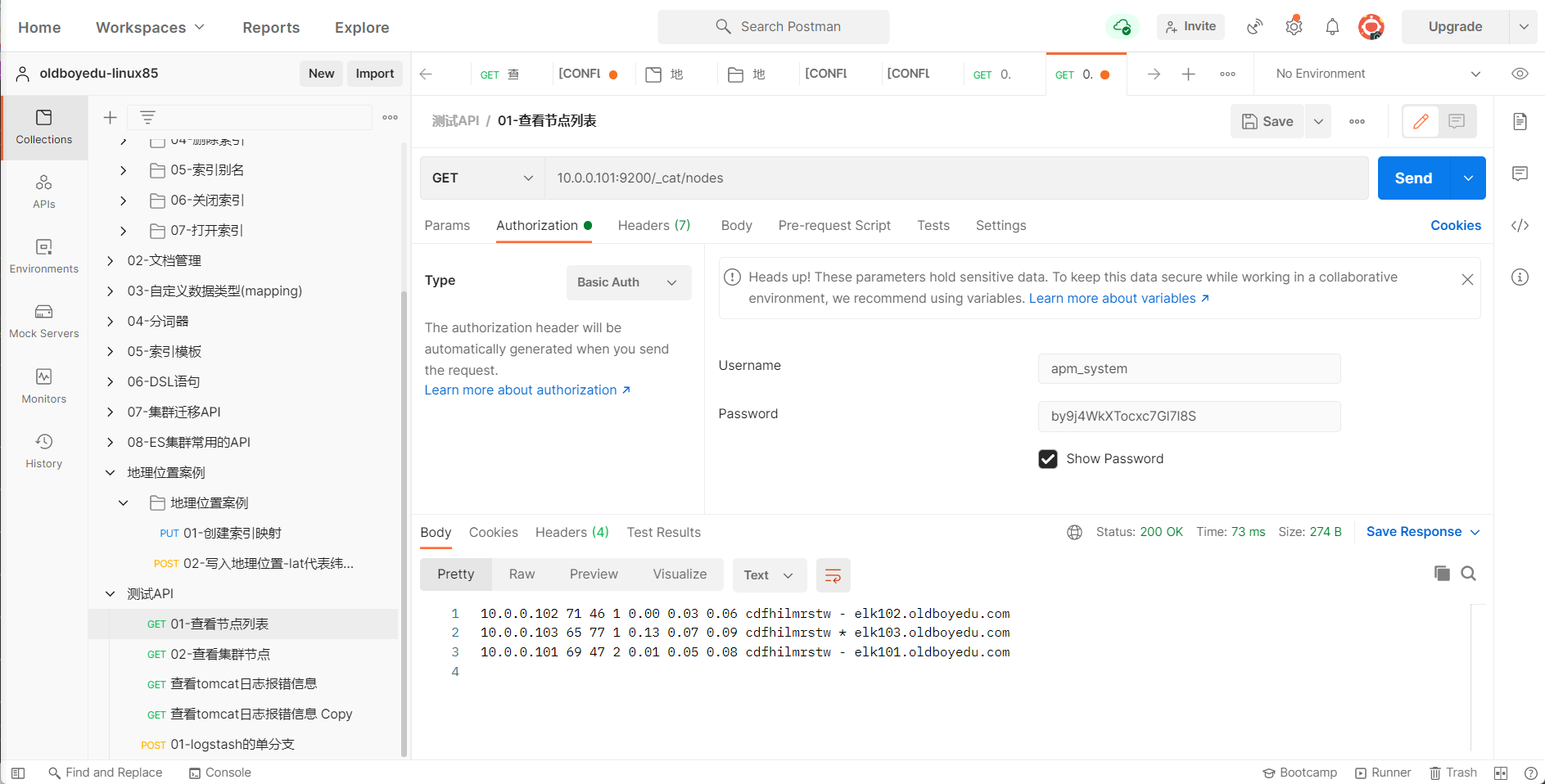

[root@elk101.oldboyedu.com elasticsearch-7.17.5]# ./bin/elasticsearch-setup-passwords auto warning: usage of JAVA_HOME is deprecated, use ES_JAVA_HOME Future versions of Elasticsearch will require Java 11; your Java version from [/oldboyedu/softwares/jdk1.8.0_291/jre] does not meet this requirement. Consider switching to a distribution of Elasticsearch with a bundled JDK. If you are already using a distribution with a bundled JDK, ensure the JAVA_HOME environment variable is not set. Initiating the setup of passwords for reserved users elastic,apm_system,kibana,kibana_system,logstash_system,beats_system,remote_monitoring_user. The passwords will be randomly generated and printed to the console. Please confirm that you would like to continue [y/N]y Changed password for user apm_system PASSWORD apm_system = by9j4WkXTocxc7Gl7l8S Changed password for user kibana_system PASSWORD kibana_system = t0HSSsrBPACFTDxor4Ix Changed password for user kibana PASSWORD kibana = t0HSSsrBPACFTDxor4Ix Changed password for user logstash_system PASSWORD logstash_system = JUXrlCfaMa74seZJnhw4 Changed password for user beats_system PASSWORD beats_system = 2V39PZkHNGIymaVaDFx0 Changed password for user remote_monitoring_user PASSWORD remote_monitoring_user = UZplScGKm6zAmMCO9Jmg Changed password for user elastic PASSWORD elastic = e31LGPoUxik7fnitQidO (8)postman访问
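Postman's Basic Auth option simply sends an `Authorization: Basic <base64(user:password)>` header. A small sketch of building that header in the shell; `basic_auth_header` is a hypothetical helper, and `<password>` in the comments stands for the `elastic` password printed in step (7):

```shell
#!/bin/bash
# Hypothetical helper: build the HTTP Basic auth header that Postman sends.
# The value is base64("user:password"); tr removes any line wrapping added by base64.
basic_auth_header() {
    local user="$1" pass="$2"
    printf 'Authorization: Basic %s\n' "$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')"
}

# Equivalent requests with curl (not executed here):
#   curl -H "$(basic_auth_header elastic '<password>')" 'http://10.0.0.101:9200/_cat/nodes?v'
#   curl -u 'elastic:<password>' 'http://10.0.0.101:9200/_cat/nodes?v'
```

Because `xpack.security.enabled` is now `true`, the same request without credentials is rejected with HTTP 401.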

3、配置kibana连接ES集群

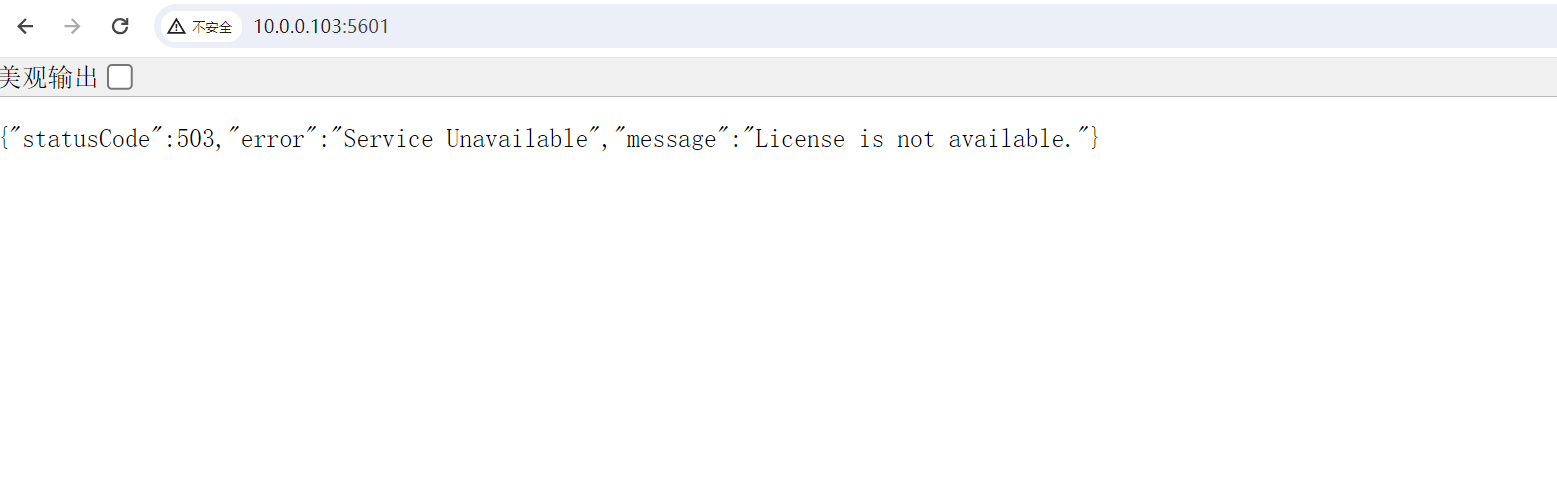

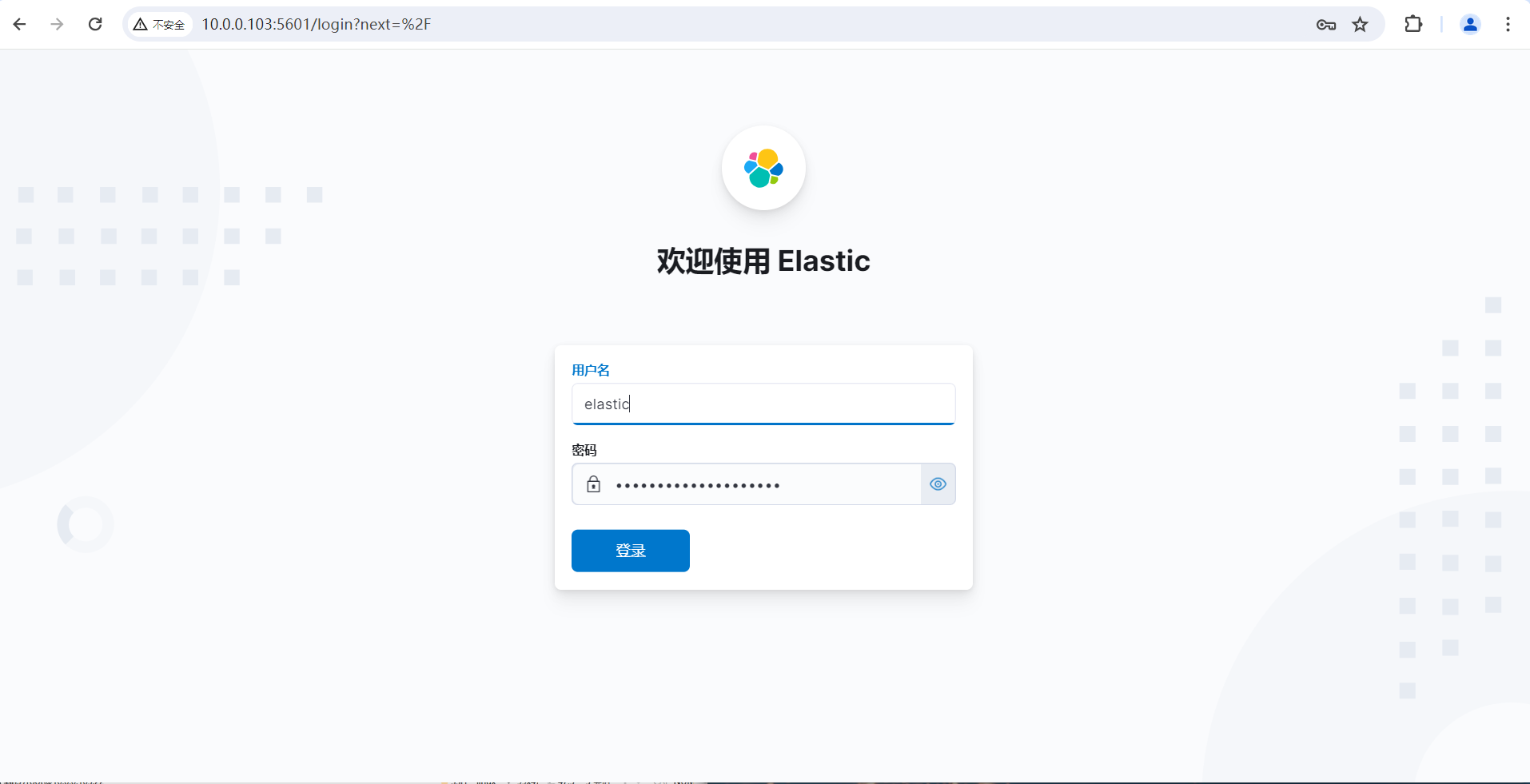

(1)修改kibana的配置文件

[root@elk103.oldboyedu.com elasticsearch-7.17.5]# yy /etc/kibana/kibana.yml server.host: "0.0.0.0" elasticsearch.hosts: ["http://10.0.0.101:9200","http://10.0.0.102:9200","http://10.0.0.103:9200"] elasticsearch.username: "kibana_system" elasticsearch.password: "VxFV4WjsHyxsA3CH2LQT" i18n.locale: "zh-CN" [root@elk103.oldboyedu.com elasticsearch-7.17.5]# (2)重启kibana

[root@elk103.oldboyedu.com elasticsearch-7.17.5]# systemctl restart kibana (3)使用elastic用户登录并修改密码
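Besides the Kibana UI, a password can also be changed through the Elasticsearch change-password API (`POST /_security/user/<username>/_password`). A sketch, assuming `curl` is available and the node at 10.0.0.101 is reachable; `change_password_json` and `change_es_password` are hypothetical helper names, and the payload builder does not escape `"` or `\` in the password:

```shell
#!/bin/bash
# Hypothetical helpers for the Elasticsearch change-password API.
ES_URL="${ES_URL:-http://10.0.0.101:9200}"   # assumption: any node of the cluster

# Build the request body (assumes the password contains no '"' or '\').
change_password_json() {
    printf '{"password":"%s"}\n' "$1"
}

# change_es_password <user> <old_password> <new_password>
# Authenticates as <user> with the old password and sets the new one.
change_es_password() {
    local user="$1" old="$2" new="$3"
    curl -s -u "${user}:${old}" -H 'Content-Type: application/json' \
        -X POST "${ES_URL}/_security/user/${user}/_password" \
        -d "$(change_password_json "$new")"
    echo
}
```

For example, `change_es_password elastic '<generated password>' '<new password>'`; on success the API returns an empty JSON object.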

4. Configure Filebeat to connect to the ES cluster

(1) Edit the configuration file

    [root@elk103.oldboyedu.com filebeat-7.17.5-linux-x86_64]# cat config/24-log-to-es_tls.yaml
    filebeat.inputs:
    - type: log
      paths:
        - /tmp/oldboyedu-linux85/test.log

    output.elasticsearch:
      hosts: ["http://10.0.0.101:9200","http://10.0.0.102:9200","http://10.0.0.103:9200"]
      # elastic user; the password is the one set in section 3, step (3)
      username: "elastic"
      password: "yinzhengjie"
      index: "oldboyedu-jiaoshi07-test"

    # A custom index name requires ILM to be disabled and a matching template name and pattern
    setup.ilm.enabled: false
    setup.template.name: "oldboyedu-jiaoshi07"
    setup.template.pattern: "oldboyedu-jiaoshi07-*"
    setup.template.overwrite: true
    setup.template.settings:
      index.number_of_shards: 3
      index.number_of_replicas: 0

(2) Start the Filebeat instance

    [root@elk103.oldboyedu.com filebeat-7.17.5-linux-x86_64]# filebeat -e -c config/24-log-to-es_tls.yaml

5. Configure Logstash to connect to the ES cluster

(1) Write the configuration file

    [root@elk101.oldboyedu.com ~]# cat config/16-file-to-es_tsl.conf
    input {
      file {
        # Path of the local file to read
        path => "/tmp/oldboyedu-linux85-file"
        # Where to start reading; this only applies if the file has not been read
        # before, i.e. it has no record in the ".sincedb" file yet
        start_position => "beginning"
      }
    }

    output {
      # stdout {}
      elasticsearch {
        hosts => ["http://localhost:9200"]
        index => "oldboyedu-linux85-logstash-file"
        user => "elastic"
        password => "yinzhengjie"
      }
    }

(2) Start the Logstash instance (`-r` reloads the pipeline automatically when the config file changes, `-f` names the config file)

    [root@elk101.oldboyedu.com ~]# logstash -rf config/16-file-to-es_tsl.conf

Check where Logstash stores the read offsets of the files it collects:

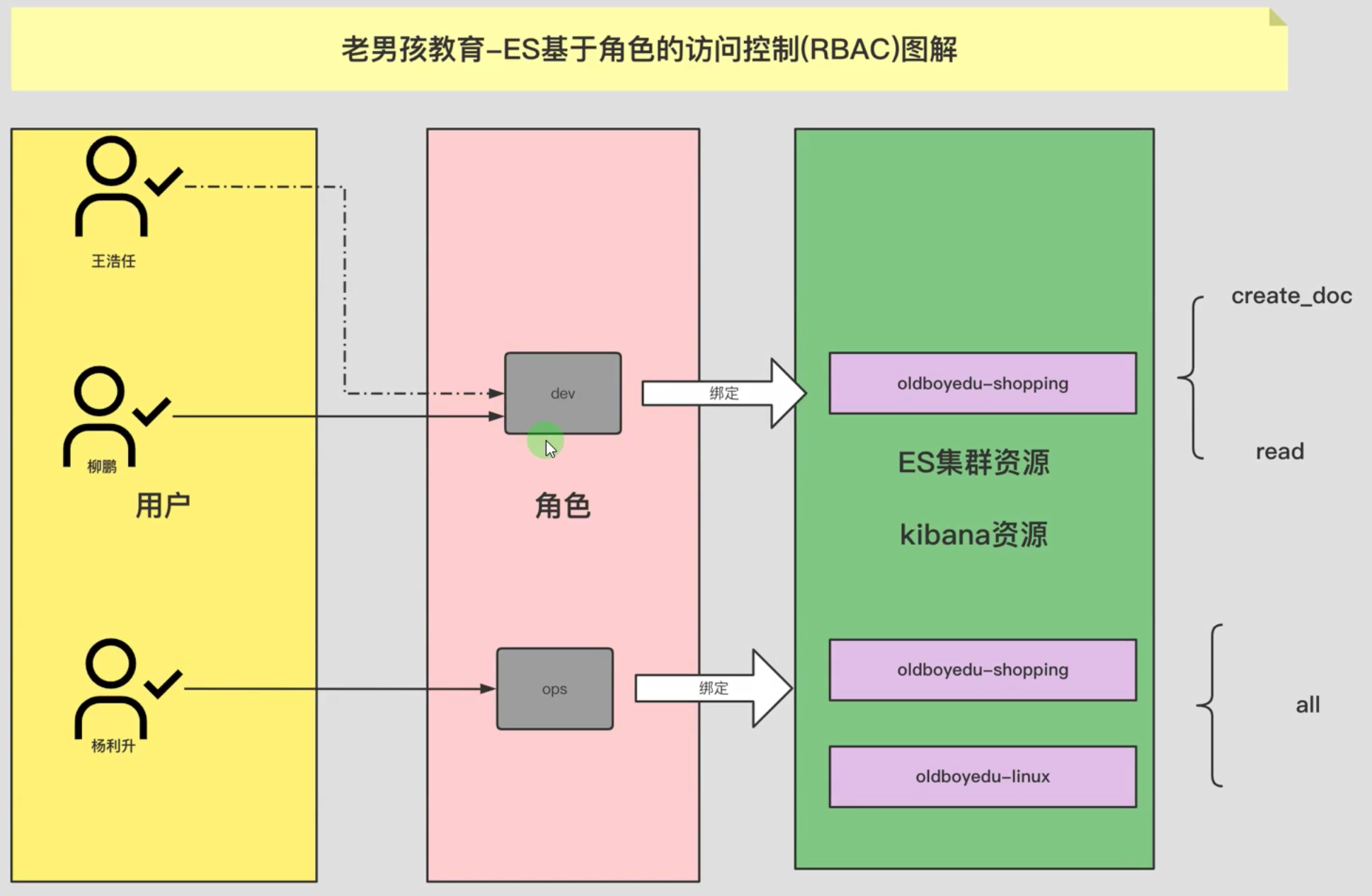
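The file input keeps its read positions in hidden `.sincedb_*` files in the directory listed below, which is why `ls -la` is needed. According to the Logstash file input documentation, each record holds the inode, the major and minor device numbers, the byte offset, the last-active timestamp and the last known path. A sketch that prints the path and offset of each tracked file; `show_sincedb` is a hypothetical helper, the default directory is the one used in this note, and paths containing spaces are not handled:

```shell
#!/bin/bash
# Hypothetical helper: summarize Logstash file-input sincedb records.
# Record columns: inode major_dev minor_dev byte_offset last_active_ts last_known_path
show_sincedb() {
    local dir="${1:-/oldboyedu/softwares/logstash-7.17.5/data/plugins/inputs/file}"
    local f
    for f in "$dir"/.sincedb_*; do
        [ -e "$f" ] || continue          # the glob matched nothing
        awk 'NF >= 4 { printf "%s offset=%s inode=%s\n", ($6 != "" ? $6 : "(unknown path)"), $4, $1 }' "$f"
    done
}
```

Once a record exists, `start_position => "beginning"` no longer has any effect for that file; to read it from the start again, stop Logstash and remove the corresponding sincedb file first.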

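    ls -la /oldboyedu/softwares/logstash-7.17.5/data/plugins/inputs/file/

6. Write data to the ES cluster through Logstash with a custom role

First create a custom role with write privileges on the target index and a user `jiaoshi07-logstash` that has this role (for example in Kibana under Stack Management > Security), then let Logstash authenticate as that user instead of `elastic`: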

    [root@elk101.oldboyedu.com ~]# cat config/16-file-to-es_tsl.conf
    input {
      file {
        # Path of the local file to read
        path => "/tmp/oldboyedu-linux85-file"
        # Where to start reading; this only applies if the file has not been read
        # before, i.e. it has no record in the ".sincedb" file yet
        start_position => "beginning"
      }
    }

    output {
      # stdout {}
      elasticsearch {
        hosts => ["http://localhost:9200"]
        #index => "oldboyedu-linux85-logstash-file"
        index => "oldboyedu-linux85-logstash-demo"
        user => "jiaoshi07-logstash"
        password => "123456"
      }
    }
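The role and user used above can also be created through the Elasticsearch security APIs (`PUT /_security/role/<name>` and `PUT /_security/user/<name>`) instead of the Kibana UI. A sketch, assuming `curl` access to 10.0.0.101:9200 as `elastic`; the role name `jiaoshi07-logstash-writer` and the index pattern `oldboyedu-linux85-logstash-*` are illustrative assumptions, and the privilege list follows the `logstash_writer` example in the Logstash security documentation:

```shell
#!/bin/bash
# Hypothetical sketch: create a Logstash writer role and user via the ES security API.
ES_URL="${ES_URL:-http://10.0.0.101:9200}"   # assumption: any cluster node
ROLE_NAME="jiaoshi07-logstash-writer"         # hypothetical role name
LS_USER="jiaoshi07-logstash"                  # user from the Logstash config above
LS_PASS="123456"

# Role body: template/ILM management on the cluster, write access to the target indices.
role_json() {
    cat <<'EOF'
{
  "cluster": ["manage_index_templates", "monitor", "manage_ilm"],
  "indices": [
    {
      "names": ["oldboyedu-linux85-logstash-*"],
      "privileges": ["write", "create", "create_index", "manage", "manage_ilm"]
    }
  ]
}
EOF
}

# User body: password plus the role defined above.
user_json() {
    printf '{"password":"%s","roles":["%s"]}\n' "$LS_PASS" "$ROLE_NAME"
}

# Usage: create_logstash_writer '<elastic password>'
create_logstash_writer() {
    local admin_pass="$1"
    curl -s -u "elastic:${admin_pass}" -H 'Content-Type: application/json' \
        -X PUT "${ES_URL}/_security/role/${ROLE_NAME}" -d "$(role_json)"
    echo
    curl -s -u "elastic:${admin_pass}" -H 'Content-Type: application/json' \
        -X PUT "${ES_URL}/_security/user/${LS_USER}" -d "$(user_json)"
    echo
}
```

Scoping `names` to one index pattern means the `jiaoshi07-logstash` user cannot write to any other index, which is the point of using a custom role instead of `elastic`.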

7. Deploy a single ZooKeeper node

(1) Download the ZooKeeper package

Official release page: https://zookeeper.apache.org/releases.html

    [root@elk101.oldboyedu.com ~]# wget http://192.168.15.253/ElasticStack/day07-/softwares/apache-zookeeper-3.8.0-bin.tar.gz

(2) Extract the package

    [root@elk101.oldboyedu.com ~]# tar xf apache-zookeeper-3.8.0-bin.tar.gz -C /oldboyedu/softwares/

(3) Create a symbolic link

    [root@elk101.oldboyedu.com ~]# cd /oldboyedu/softwares/ && ln -sv apache-zookeeper-3.8.0-bin zk

(4) Declare the ZooKeeper environment variables (the file is named kafka.sh because the Kafka variables are added to it in section 12)

    [root@elk101.oldboyedu.com softwares]# cat > /etc/profile.d/kafka.sh <<'EOF'
    #!/bin/bash
    export ZK_HOME=/oldboyedu/softwares/zk
    export PATH=$PATH:$ZK_HOME/bin
    EOF
    [root@elk101.oldboyedu.com softwares]# source /etc/profile.d/kafka.sh

(5) Create the ZooKeeper configuration file from the bundled sample

    [root@elk101.oldboyedu.com ~]# cp /oldboyedu/softwares/zk/conf/{zoo_sample.cfg,zoo.cfg}

(6) Start the ZooKeeper node

    [root@elk101.oldboyedu.com ~]# zkServer.sh start
    [root@elk101.oldboyedu.com ~]# zkServer.sh status    # show the status of the ZooKeeper service
    [root@elk101.oldboyedu.com ~]# zkServer.sh stop
    [root@elk101.oldboyedu.com ~]# zkServer.sh restart

(7) Connect to the ZooKeeper node

    [root@elk101.oldboyedu.com ~]# zkCli.sh

8. Basic ZooKeeper management from the command line

    # View
    # List the child znodes ("zookeeper nodes") under the root (/)
    ls /
    # Show the data of "/oldboyedu-linux85/jiaoshi07"
    get /oldboyedu-linux85/jiaoshi07

    # Create
    # Create a znode named "oldboyedu-linux85" under the root
    create /oldboyedu-linux85
    # Create the child znode "jiaoshi07" under "/oldboyedu-linux85" with the data "123"
    create /oldboyedu-linux85/jiaoshi07 123
    # Create a sequential znode: a numeric suffix is appended to the prefix
    # "/oldboyedu-linux85/jiaoshi07/liwenxuan"; its data is "88888"
    create -s /oldboyedu-linux85/jiaoshi07/liwenxuan 88888
    # Create an ephemeral sequential znode with the prefix "/oldboyedu-linux85/linux85/test"
    # (the parent must already exist); it is deleted automatically when the session ends
    create -s -e /oldboyedu-linux85/linux85/test

    # Modify
    # Change the data of "/oldboyedu-linux85/jiaoshi07" to 456
    set /oldboyedu-linux85/jiaoshi07 456

    # Delete
    # Delete "/oldboyedu-linux85/test02"; it must have no child znodes, i.e. it must be empty
    delete /oldboyedu-linux85/test02
    # Recursively delete "/oldboyedu-linux85/jiaoshi07" together with all of its child znodes
    deleteall /oldboyedu-linux85/jiaoshi07
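With `create -s`, ZooKeeper appends a counter maintained by the parent znode to the requested path; the ZooKeeper Programmer's Guide specifies its format as `%010d`, i.e. 10 digits with zero padding. A sketch that only models the resulting name, not the server-side counter; `seq_znode_name` is a hypothetical helper:

```shell
#!/bin/bash
# Hypothetical helper: model the name ZooKeeper gives a sequential znode.
# The counter is appended to the prefix as %010d (10 digits, zero-padded).
seq_znode_name() {
    local prefix="$1" counter="$2"
    printf '%s%010d\n' "$prefix" "$counter"
}
```

For example, `seq_znode_name /oldboyedu-linux85/jiaoshi07/liwenxuan 0` prints `/oldboyedu-linux85/jiaoshi07/liwenxuan0000000000`. The zero padding keeps the names sortable as plain strings in creation order.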

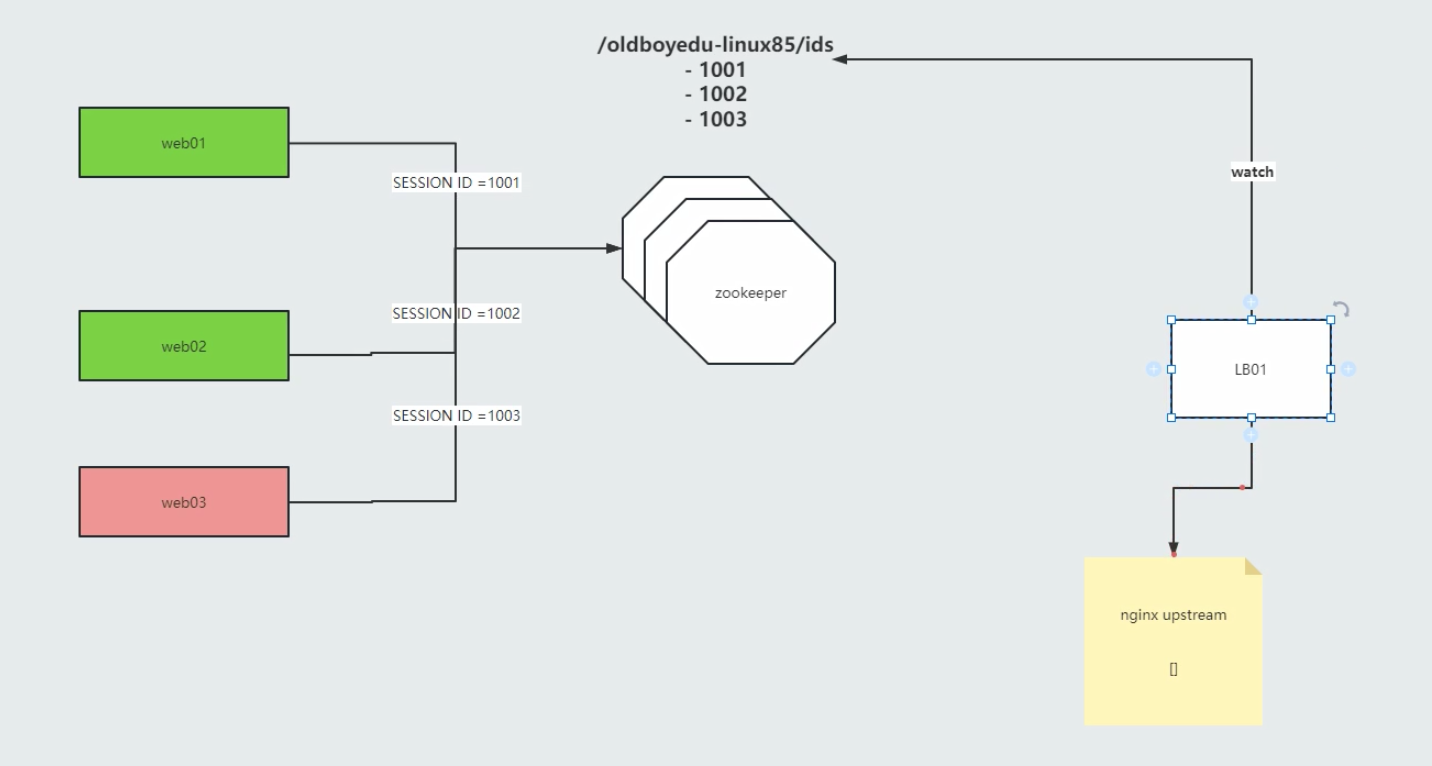

9. Deploy a ZooKeeper cluster

(1) Create the ZooKeeper data directory

    [root@elk101.oldboyedu.com ~]# install -d /oldboyedu/data/zk

(2) Edit the configuration file of the single ZooKeeper node

    [root@elk101.oldboyedu.com ~]# vim /oldboyedu/softwares/zk/conf/zoo.cfg
    ...
    # Length of one tick, the basic time unit, in milliseconds
    tickTime=2000
    # Ticks a follower may take to connect to and sync with the leader at startup (5 x 2000 ms = 10 s)
    initLimit=5
    # Ticks a follower may lag behind the leader (request to ACK) before it is dropped (2 x 2000 ms = 4 s)
    syncLimit=2
    # Data directory; the myid file is stored here
    dataDir=/oldboyedu/data/zk
    # Port that clients connect to
    clientPort=2181
    # Allow all four-letter-word commands (srvr, stat, ruok, ...)
    4lw.commands.whitelist=*
    # server.ID=A:B:C[:D]
    #   ID: unique number of the server; must match the myid file in dataDir
    #   A:  host address of the server
    #   B:  port followers use to connect to the leader; only the current leader listens on it
    #   C:  leader election port
    #   D:  optional role, e.g. observer (the default is participant)
    server.101=10.0.0.101:2888:3888
    server.102=10.0.0.102:2888:3888
    server.103=10.0.0.103:2888:3888

(3) Sync the files to the other nodes

    [root@elk101.oldboyedu.com ~]# data_rsync.sh /oldboyedu/softwares/zk/
    [root@elk101.oldboyedu.com ~]# data_rsync.sh /oldboyedu/softwares/apache-zookeeper-3.8.0-bin/
    [root@elk101.oldboyedu.com ~]# data_rsync.sh /oldboyedu/data/zk/
    [root@elk101.oldboyedu.com ~]# data_rsync.sh /etc/profile.d/kafka.sh

(4) Create the myid file on every node

    [root@elk101.oldboyedu.com ~]# for ((host_id=101;host_id<=103;host_id++)); do
        ssh 10.0.0.${host_id} "echo ${host_id} > /oldboyedu/data/zk/myid"
    done

(5) Start ZooKeeper on all nodes

    [root@elk101.oldboyedu.com ~]# zkServer.sh start
    [root@elk102.oldboyedu.com ~]# source /etc/profile.d/kafka.sh
    [root@elk102.oldboyedu.com ~]# zkServer.sh start
    [root@elk103.oldboyedu.com ~]# source /etc/profile.d/kafka.sh
    [root@elk103.oldboyedu.com ~]# zkServer.sh start

(6) Check the role of each ZooKeeper node

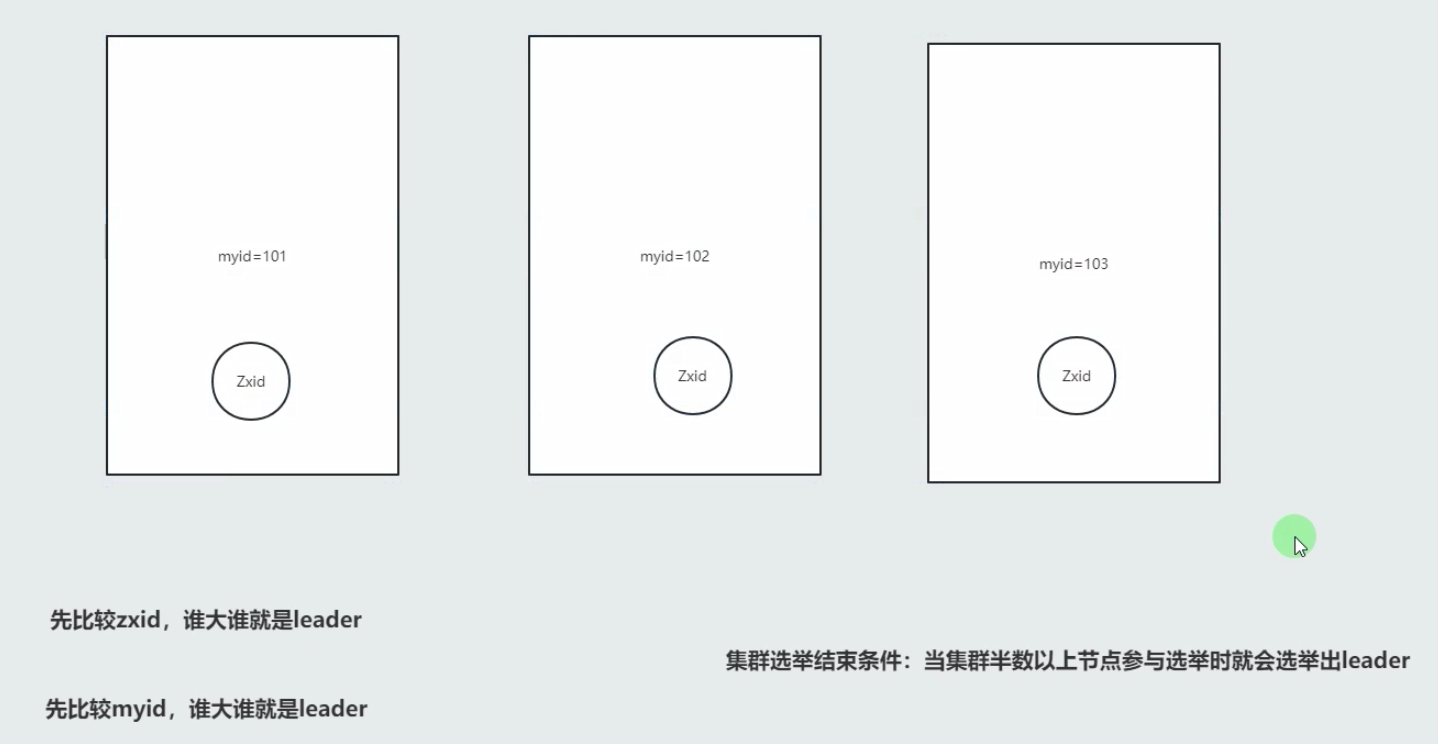

    [root@elk101.oldboyedu.com ~]# zkServer.sh status

Leader election process (diagram)
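A highly simplified model of ZooKeeper's leader election, under stated assumptions: all epochs are equal, and message rounds and timeouts of the real FastLeaderElection implementation are ignored. Each running server ends up voting for the candidate with the highest zxid (the id of the last transaction it has seen), ties are broken by the higher myid, and a leader exists only once a strict majority of the configured ensemble (2 of 3 here) is running. `elect_leader` is a hypothetical helper that takes the ensemble size and `myid:zxid` pairs for the running servers:

```shell
#!/bin/bash
# Simplified election model (hypothetical helper, not ZooKeeper's real algorithm).
# Usage: elect_leader <ensemble_size> <myid:zxid> [<myid:zxid> ...]
# zxid may be decimal or 0x-prefixed hex; bash arithmetic accepts both.
elect_leader() {
    local size="$1"; shift
    local running=$# best_id=0 best_zxid=-1 pair id zxid
    # A leader needs a strict majority of the configured ensemble.
    if (( running <= size / 2 )); then
        echo "no quorum (${running}/${size} running)"
        return 1
    fi
    for pair in "$@"; do
        id="${pair%%:*}"
        zxid="${pair##*:}"
        # Higher zxid wins; on equal zxid the higher myid wins.
        if (( zxid > best_zxid || (zxid == best_zxid && id > best_id) )); then
            best_id=$id
            best_zxid=$zxid
        fi
    done
    echo "leader: ${best_id}"
}
```

For example, `elect_leader 3 101:0 102:0` prints `leader: 102`. This is why, with the start order used in step (5), 102 typically becomes the leader as soon as 101 and 102 are both up; 103 starts later, finds an established leader and joins as a follower even though its myid is higher.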

10. Write a ZooKeeper cluster management script

    [root@elk101.oldboyedu.com ~]# cat /usr/local/sbin/zkManager.sh
    #!/bin/bash

    # Require exactly one argument
    if [ $# -ne 1 ];then
        echo "Invalid argument. Usage: $0 {start|stop|restart|status}"
        exit 1
    fi

    # Command entered by the user
    cmd=$1

    # Dispatch the command
    function zookeeperManager(){
        case $cmd in
        start)
            echo "Starting the service"
            remoteExecution start
            ;;
        stop)
            echo "Stopping the service"
            remoteExecution stop
            ;;
        restart)
            echo "Restarting the service"
            remoteExecution restart
            ;;
        status)
            echo "Checking the status"
            remoteExecution status
            ;;
        *)
            echo "Invalid argument. Usage: $0 {start|stop|restart|status}"
            ;;
        esac
    }

    # Run "zkServer.sh <subcommand>" on every node
    function remoteExecution(){
        for (( i=101 ; i<=103 ; i++ )) ; do
            tput setaf 2    # green
            echo ========== 10.0.0.${i} zkServer.sh $1 ================
            tput sgr0       # reset terminal colors
            ssh 10.0.0.${i} "source /etc/profile.d/kafka.sh; zkServer.sh $1 2>/dev/null"
        done
    }

    # Call the function
    zookeeperManager
    [root@elk101.oldboyedu.com ~]# chmod +x /usr/local/sbin/zkManager.sh
    [root@elk101.oldboyedu.com ~]# zkManager.sh start
    [root@elk101.oldboyedu.com ~]# zkManager.sh status

Verify the cluster:

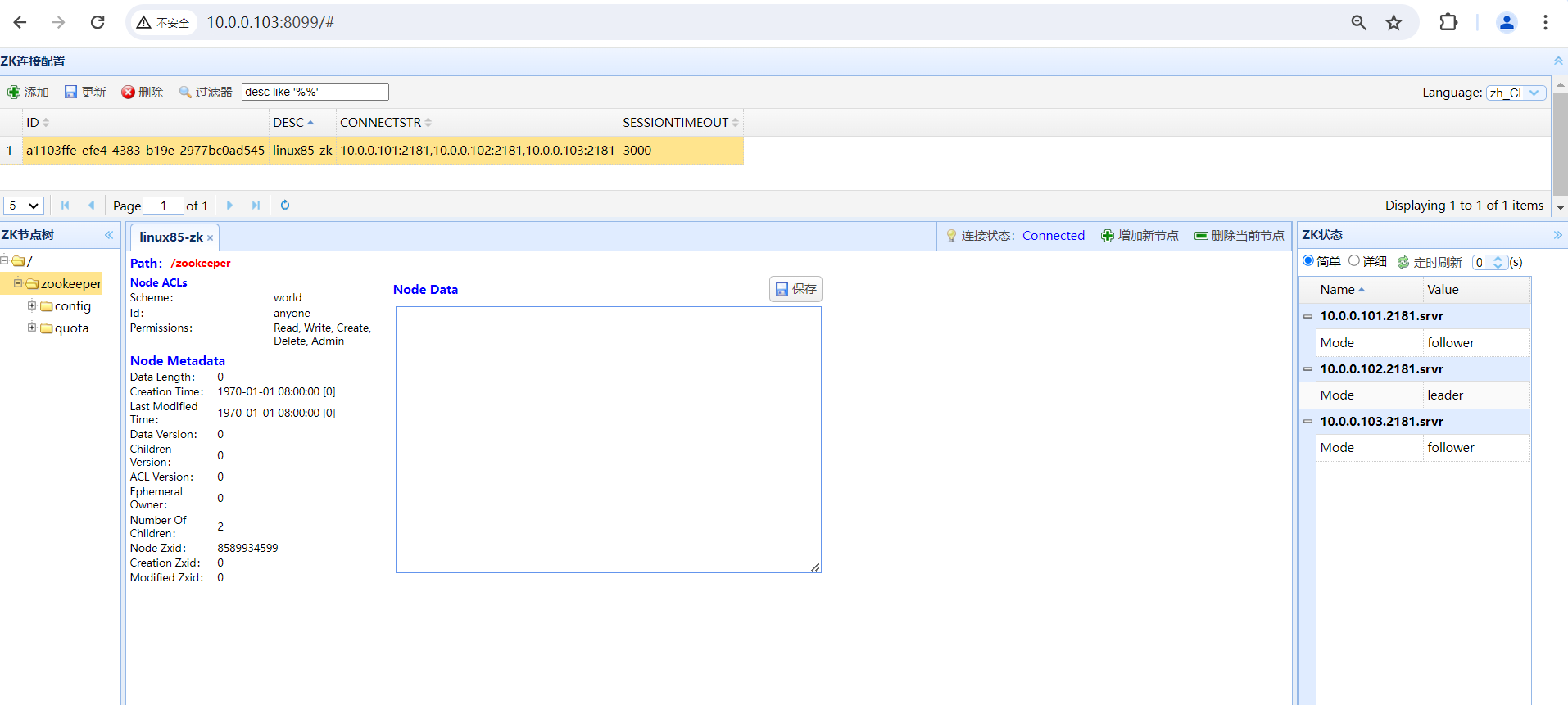
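Because `4lw.commands.whitelist=*` is set in zoo.cfg, each node's role can also be checked without logging in to it, by sending the `srvr` four-letter command to the client port; the reply contains a `Mode:` line (`leader`, `follower` or `standalone`). A sketch, assuming `nc` (netcat) is installed on the admin host; `zk_mode` and `zk_roles` are hypothetical helper names:

```shell
#!/bin/bash
# Hypothetical helpers: report ZooKeeper roles via the "srvr" four-letter command.

# Read a srvr reply on stdin and print only the value of the "Mode:" line.
zk_mode() {
    awk -F': *' '$1 == "Mode" { print $2 }'
}

# Query each host given as an argument (assumes nc is installed and port 2181 is reachable).
zk_roles() {
    local host
    for host in "$@"; do
        printf '%s %s\n' "$host" "$(echo srvr | nc -w 2 "$host" 2181 | zk_mode)"
    done
}
```

For example, `zk_roles 10.0.0.101 10.0.0.102 10.0.0.103` should report exactly one `leader` and two `follower` lines for a healthy three-node ensemble.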

    [root@elk103.oldboyedu.com ~]# zkCli.sh -server 10.0.0.101:2181,10.0.0.102:2181,10.0.0.103:2181

11. Manage the ZooKeeper cluster with zkWeb

(1) Download the package

    [root@elk103.oldboyedu.com ~]# wget http://192.168.15.253/ElasticStack/day07-/softwares/zkWeb-v1.2.1.jar

(2) Start zkWeb

    java -jar zkWeb-v1.2.1.jar

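12. Quickly set up a single-node Kafka environment

(1) Download the Kafka package

    [root@elk101.oldboyedu.com ~]# wget http://192.168.15.253/ElasticStack/day07-/softwares/kafka_2.13-3.2.1.tgz

(2) Extract the package

    [root@elk101.oldboyedu.com ~]# tar xf kafka_2.13-3.2.1.tgz -C /oldboyedu/softwares/

(3) Create a symbolic link

    [root@elk101.oldboyedu.com ~]# cd /oldboyedu/softwares/ && ln -svf kafka_2.13-3.2.1 kafka

(4) Configure the environment variables (add the Kafka lines to the existing file)

    [root@elk101.oldboyedu.com softwares]# cat /etc/profile.d/kafka.sh
    #!/bin/bash
    export ZK_HOME=/oldboyedu/softwares/zk
    export PATH=$PATH:$ZK_HOME/bin
    export KAFKA_HOME=/oldboyedu/softwares/kafka
    export PATH=$PATH:$KAFKA_HOME/bin
    [root@elk101.oldboyedu.com softwares]# source /etc/profile.d/kafka.sh

(5) Edit the configuration file

    [root@elk101.oldboyedu.com ~]# yy /oldboyedu/softwares/kafka/config/server.properties
    ...
    # Unique ID of this broker within the Kafka cluster
    broker.id=101
    # ZooKeeper ensemble; the trailing "/oldboyedu-linux85-kafka321" is a chroot path,
    # so all of Kafka's znodes are created under it
    zookeeper.connect=10.0.0.101:2181,10.0.0.102:2181,10.0.0.103:2181/oldboyedu-linux85-kafka321

(6) Start the single Kafka broker

    [root@elk101.oldboyedu.com softwares]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties

(7) Verify the metadata in ZooKeeper

In the run below the broker did not stay up: `jps` lists no `Kafka` process after the start. The data directory `/tmp/kafka-logs` (the default `log.dirs`) still held a `meta.properties` from an earlier start, whose `cluster.id` most likely did not match the cluster registered under the new ZooKeeper chroot. Since this is a lab environment, the directory was deleted and the broker started again. This discards all data stored by the broker; `$KAFKA_HOME/logs/server.log` shows the actual reason for a failed start.

    [root@elk101 softwares]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties
    [root@elk101 softwares]# jps
    4134 Jps
    1134 Elasticsearch
    1135 Elasticsearch
    2815 QuorumPeerMain
    [root@elk101 softwares]# cat /tmp/kafka-logs/meta.properties
    #
    #Fri Jun 07 11:14:46 CST 2024
    cluster.id=H2ceIpqTT1iUzb46e5jeKw
    version=0
    broker.id=101
    [root@elk101 softwares]# jps
    4156 Jps
    1134 Elasticsearch
    1135 Elasticsearch
    2815 QuorumPeerMain
    [root@elk101 softwares]# rm -rf /tmp/kafka-logs/
    [root@elk101 softwares]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties

Then check the result in zkWeb.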
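If a broker exits right after start-up, a mismatch between the `cluster.id` stored in `meta.properties` and the one Kafka registered in ZooKeeper (under `<chroot>/cluster/id`, as JSON such as `{"version":"1","id":"..."}`) is a common cause; Kafka then logs an `InconsistentClusterIdException`. A sketch for comparing the two values before deleting anything; `prop_get`, `zk_cluster_id` and `check_cluster_id` are hypothetical helpers that handle only simple `key=value` lines and compact JSON:

```shell
#!/bin/bash
# Hypothetical helpers: compare Kafka's stored cluster.id with the one in ZooKeeper.

# prop_get <file> <key>: value of the last "key=value" line (comment lines never match).
prop_get() {
    awk -v k="$2" 'index($0, k "=") == 1 { v = substr($0, length(k) + 2) } END { if (v != "") print v }' "$1"
}

# zk_cluster_id: read the output of "get <chroot>/cluster/id" on stdin, print the "id" field.
zk_cluster_id() {
    sed -n 's/.*"id":"\([^"]*\)".*/\1/p'
}

# check_cluster_id <meta.properties> <cluster id from ZooKeeper>
check_cluster_id() {
    local stored
    stored="$(prop_get "$1" cluster.id)"
    if [ "$stored" = "$2" ]; then
        echo "cluster.id matches: ${stored}"
    else
        echo "cluster.id mismatch: meta.properties=${stored} zookeeper=${2}"
        return 1
    fi
}
```

Usage on elk101 (zkCli.sh may print connection logging around the value, which `zk_cluster_id` filters out): `check_cluster_id /tmp/kafka-logs/meta.properties "$(zkCli.sh -server 10.0.0.101:2181 get /oldboyedu-linux85-kafka321/cluster/id | zk_cluster_id)"`.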

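Today's homework

(1) Complete all of the in-class exercises and organize them into a mind map.

Extended homework:

(1) Deploy the ZooKeeper cluster in one step with Ansible.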