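K8s cluster plan

The master node needs at least 2 CPU cores; otherwise kubeadm's preflight check fails and the cluster cannot be initialized.

| Hostname | IP address | Role | OS | Hardware |
|---|---|---|---|---|
| master | 10.62.158.200 | Control-plane node | CentOS 7 | 2 cores / 4 GB RAM |
| node01 | 10.62.158.201 | Worker node 01 | CentOS 7 | 2 cores / 4 GB RAM |
| node02 | 10.62.158.202 | Worker node 02 | CentOS 7 | 2 cores / 4 GB RAM |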

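Preparation: download the files the cluster needs (on a machine with Internet access)

The offline bundle can also be downloaded from the link; its contents are identical to what the preparation steps produce.

Online Docker installation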

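Install yum-utils, which provides the yum-config-manager command:

```bash
[root@localhost ~]# yum install yum-utils -y
```

Add the Aliyun docker-ce repository:

```bash
[root@localhost ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

Install the Docker package:

```bash
[root@localhost ~]# yum install docker-ce-20.10.9-3.el7 -y
```

Set Docker's cgroup driver to systemd. Cgroups (control groups) limit how much CPU, memory and other resources processes may use, and kubelet is later configured with the same driver:

```bash
[root@localhost ~]# mkdir -p /etc/docker
[root@localhost ~]# cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
```

Start Docker and enable it at boot:

```bash
[root@localhost ~]# systemctl enable docker --now
```

Check that Docker is installed:

```bash
[root@master ~]# docker ps
CONTAINER ID   IMAGE     COMMAND   CREATED   STATUS    PORTS     NAMES
```

Downloading packages and image files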

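Download the Docker packages

- Download the packages only, without installing them. `yum install --downloadonly` skips packages that are already installed, so run it on a host where Docker is not yet installed (for example before the online installation above). Otherwise the directory may end up missing packages.

```bash
[root@localhost ~]# yum install --downloadonly --downloaddir=docker-ce-20.10.9-3.el7.x86_64 docker-ce-20.10.9-3.el7.x86_64 -y
```

- Compress the packages into a gzip archive:

```bash
[root@localhost ~]# tar -zcf docker-ce-20.10.9-3.el7.x86_64.tar.gz docker-ce-20.10.9-3.el7.x86_64
```

Download the K8s packages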

- Configure the K8s package repository (Aliyun YUM mirror) used to install the cluster software:

```bash
cat > /etc/yum.repos.d/k8s.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

- Download the K8s packages into the kubernetes-1.23.0 directory:

```bash
# kubeadm
[root@localhost ~]# yum install --downloadonly --downloaddir=kubernetes-1.23.0 kubeadm-1.23.0-0 -y
# kubectl
[root@localhost ~]# yum install --downloadonly --downloaddir=kubernetes-1.23.0 kubectl-1.23.0-0 -y
# kubelet
[root@localhost ~]# yum install --downloadonly --downloaddir=kubernetes-1.23.0 kubelet-1.23.0-0 -y
```

- Enter the kubernetes-1.23.0 directory and delete the kubectl and kubelet packages whose version is not 1.23.0. Newer versions are pulled in as dependencies of kubeadm.
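The `rm` commands that follow hard-code checksum-prefixed file names. The unwanted version (1.28.2 here) also depends on what the mirror currently serves. Below is a glob-based sketch. It assumes the `<checksum>-<name>-<version>-<release>.<arch>.rpm` naming seen in the directory listing, and the function name `prune_rpms` is hypothetical:

```bash
# Hypothetical helper: delete kubectl/kubelet RPMs in DIR whose version is not VERSION.
# Assumes yum's "<checksum>-<name>-<version>-<release>.<arch>.rpm" file naming.
prune_rpms() {
    local dir=$1 version=$2 pkg f
    for pkg in kubectl kubelet; do
        for f in "$dir"/*"$pkg"-[0-9]*.rpm; do
            [ -e "$f" ] || continue               # the glob matched nothing
            case "$f" in
                *"$pkg-$version"-*) ;;            # keep the wanted version
                *) echo "removing $f"; rm -f "$f" ;;
            esac
        done
    done
}
```

Run it as `prune_rpms kubernetes-1.23.0 1.23.0`. Other packages such as kubeadm, cri-tools and socat are never touched.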

```bash
[root@localhost ~]# cd kubernetes-1.23.0/
[root@localhost kubernetes-1.23.0]# rm -rf a24e42254b5a14b67b58c4633d29c27370c28ed6796a80c455a65acc813ff374-kubectl-1.28.2-0.x86_64.rpm
[root@localhost kubernetes-1.23.0]# rm -rf e1cae938e231bffa3618f5934a096bd85372ee9b1293081f5682a22fe873add8-kubelet-1.28.2-0.x86_64.rpm
[root@localhost kubernetes-1.23.0]# ls
0f2a2afd740d476ad77c508847bad1f559afc2425816c1f2ce4432a62dfe0b9d-kubernetes-cni-1.2.0-0.x86_64.rpm  conntrack-tools-1.4.4-7.el7.x86_64.rpm
3f5ba2b53701ac9102ea7c7ab2ca6616a8cd5966591a77577585fde1c434ef74-cri-tools-1.26.0-0.x86_64.rpm  libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm
4a5ee8285fca4f1d4dcb2e8267a87c01d4cd1d70fe848d477facbadd1904923c-kubectl-1.23.0-0.x86_64.rpm  libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm
8e1a4a6eee06a24e4674ccc1056b5c122e56014ca7995d1b1945e631ccb2118f-kubelet-1.23.0-0.x86_64.rpm  libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm
c749943bded17575a758b1d945cd7ed5e891ec7ba9379f641ec7ccdadc2df963-kubeadm-1.23.0-0.x86_64.rpm  socat-1.7.3.2-2.el7.x86_64.rpm
```

- Compress the packages into a gzip archive:

```bash
[root@localhost kubernetes-1.23.0]# cd ..
[root@localhost ~]# tar -zcf kubernetes-1.23.0.tar.gz kubernetes-1.23.0
```

IPVS proxy packages

- Download the ipset package:

```bash
[root@localhost ~]# yum install --downloadonly --downloaddir=ipset ipset -y
```

- Compress the ipset package:

```bash
[root@localhost ~]# tar -zcf ipset.tar.gz ipset
```

- Download the ipvsadm package:

```bash
[root@localhost ~]# yum install --downloadonly --downloaddir=ipvsadm ipvsadm -y
```

- Compress the ipvsadm package:

```bash
[root@localhost ~]# tar -zcf ipvsadm.tar.gz ipvsadm
```

Export the Calico plugin images

- Check which images Calico needs for deployment. The output below was taken from an existing K8s cluster:

```bash
[root@master01 ~]# grep image calico.yaml
image: docker.io/calico/cni:v3.24.1
imagePullPolicy: IfNotPresent
image: docker.io/calico/cni:v3.24.1
imagePullPolicy: IfNotPresent
image: docker.io/calico/node:v3.24.1
imagePullPolicy: IfNotPresent
image: docker.io/calico/node:v3.24.1
imagePullPolicy: IfNotPresent
image: docker.io/calico/kube-controllers:v3.24.1
imagePullPolicy: IfNotPresent
```

- Pull the images:

```bash
# Pull the images
[root@localhost ~]# docker pull docker.io/calico/cni:v3.24.1
[root@localhost ~]# docker pull docker.io/calico/node:v3.24.1
[root@localhost ~]# docker pull docker.io/calico/kube-controllers:v3.24.1
# List the images
[root@localhost ~]# docker images
REPOSITORY                TAG       IMAGE ID       CREATED         SIZE
calico/kube-controllers   v3.24.1   f9c3c1813269   20 months ago   71.3MB
calico/cni                v3.24.1   67fd9ab48451   20 months ago   197MB
calico/node               v3.24.1   75392e3500e3   20 months ago   223MB
```

- Export the 3 Calico images. Create the calico directory first; the archives are written into it.
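The `docker save` commands that follow share one naming pattern: the archive name is the image name with `:` replaced by `-`. Below is a sketch of that pattern as reusable shell functions. `image_tar_name` and `save_images` are hypothetical names, and `DOCKER` is an override variable introduced only so the loop can be dry-run:

```bash
# Hypothetical helper: map an image reference to the archive name used in this
# guide, e.g. calico/node:v3.24.1 -> node-v3.24.1.tar
image_tar_name() {
    local ref=$1 name
    name=${ref##*/}                       # drop registry and repository path
    printf '%s.tar\n' "$(printf '%s' "$name" | tr ':' '-')"
}

# Hypothetical helper: save_images DIR IMAGE... runs "docker save" for each
# image into DIR. Set DOCKER to another command for a dry run.
save_images() {
    local dir=$1 img
    shift
    mkdir -p "$dir"
    for img in "$@"; do
        "${DOCKER:-docker}" save -o "$dir/$(image_tar_name "$img")" "$img" || return 1
    done
}
```

For example, `save_images calico calico/node:v3.24.1 calico/cni:v3.24.1 calico/kube-controllers:v3.24.1` produces the same three archives. The same function can export the seven K8s component images into `images-k8s`.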

```bash
[root@localhost ~]# mkdir calico
[root@localhost ~]# docker save -o calico/node-v3.24.1.tar calico/node:v3.24.1
[root@localhost ~]# docker save -o calico/cni-v3.24.1.tar calico/cni:v3.24.1
[root@localhost ~]# docker save -o calico/kube-controllers-v3.24.1.tar calico/kube-controllers:v3.24.1
[root@localhost ~]# ls calico
cni-v3.24.1.tar  kube-controllers-v3.24.1.tar  node-v3.24.1.tar
```

K8s component image archives

- Check which images the K8s deployment needs. The output below was taken from an existing K8s cluster; all that matters here is which image files are required:

```bash
[root@master ~]# docker images
REPOSITORY                                                           TAG       IMAGE ID       CREATED         SIZE
calico/cni                                                           v3.24.1   67fd9ab48451   20 months ago   197MB
calico/node                                                          v3.24.1   75392e3500e3   20 months ago   223MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver            v1.23.0   e6bf5ddd4098   2 years ago   135MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy                v1.23.0   e03484a90585   2 years ago   112MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager   v1.23.0   37c6aeb3663b   2 years ago   125MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler            v1.23.0   56c5af1d00b5   2 years ago   53.5MB
registry.cn-hangzhou.aliyuncs.com/google_containers/etcd                      3.5.1-0   25f8c7f3da61   2 years ago   293MB
registry.cn-hangzhou.aliyuncs.com/google_containers/coredns                   v1.8.6    a4ca41631cc7   2 years ago   46.8MB
registry.cn-hangzhou.aliyuncs.com/google_containers/pause                     3.6       6270bb605e12   2 years ago   683kB
```

- Pull the K8s component images:

```bash
# kube-apiserver
[root@localhost ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.23.0
# kube-proxy
[root@localhost ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.23.0
# kube-controller-manager
[root@localhost ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.23.0
# kube-scheduler
[root@localhost ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.23.0
# etcd
[root@localhost ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0
# coredns
[root@localhost ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.6
# pause
[root@localhost ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
[root@localhost ~]# docker images
REPOSITORY                                                                    TAG       IMAGE ID       CREATED         SIZE
calico/kube-controllers                                                       v3.24.1   f9c3c1813269   20 months ago   71.3MB
calico/cni                                                                    v3.24.1   67fd9ab48451   20 months ago   197MB
calico/node                                                                   v3.24.1   75392e3500e3   20 months ago   223MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver            v1.23.0   e6bf5ddd4098   2 years ago     135MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy                v1.23.0   e03484a90585   2 years ago     112MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager   v1.23.0   37c6aeb3663b   2 years ago     125MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler            v1.23.0   56c5af1d00b5   2 years ago     53.5MB
registry.cn-hangzhou.aliyuncs.com/google_containers/etcd                      3.5.1-0   25f8c7f3da61   2 years ago     293MB
registry.cn-hangzhou.aliyuncs.com/google_containers/coredns                   v1.8.6    a4ca41631cc7   2 years ago     46.8MB
registry.cn-hangzhou.aliyuncs.com/google_containers/pause                     3.6       6270bb605e12   2 years ago     683kB
```

- Export the 7 K8s component images into the images-k8s directory:

```bash
[root@localhost ~]# mkdir images-k8s
# kube-apiserver
[root@localhost ~]# docker save -o images-k8s/kube-apiserver-v1.23.0.tar registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.23.0
# kube-proxy
[root@localhost ~]# docker save -o images-k8s/kube-proxy-v1.23.0.tar registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.23.0
# kube-controller-manager
[root@localhost ~]# docker save -o images-k8s/kube-controller-manager-v1.23.0.tar registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.23.0
# kube-scheduler
[root@localhost ~]# docker save -o images-k8s/kube-scheduler-v1.23.0.tar registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.23.0
# etcd
[root@localhost ~]# docker save -o images-k8s/etcd-3.5.1-0.tar registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0
# coredns
[root@localhost ~]# docker save -o images-k8s/coredns-v1.8.6.tar registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.6
# pause
[root@localhost ~]# docker save -o images-k8s/pause-3.6.tar registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
```

Export the Nginx application image

- Pull the image:

```bash
[root@localhost ~]# docker pull nginx:1.20.2
```

- Export the Nginx image:

```bash
[root@localhost ~]# docker save -o nginx-1.20.2.tar nginx:1.20.2
```

Download the Calico manifest calico.yaml

```bash
[root@localhost ~]# wget https://raw.githubusercontent.com/projectcalico/calico/v3.24.1/manifests/calico.yaml
```

Cluster deployment

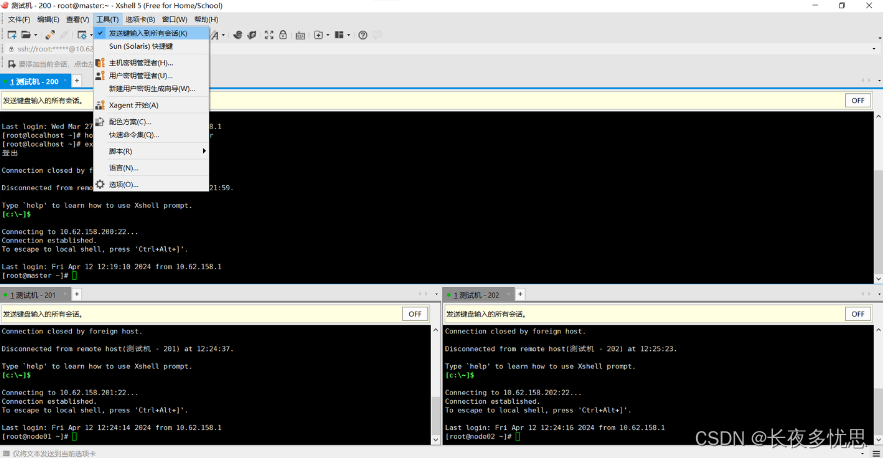

Change each node's hostname according to the cluster plan:

```bash
# Control-plane node (log out and back in so the prompt shows the new name)
[root@localhost ~]# hostnamectl set-hostname master
[root@localhost ~]# exit
# Worker node 01
[root@localhost ~]# hostnamectl set-hostname node01
[root@localhost ~]# exit
# Worker node 02
[root@localhost ~]# hostnamectl set-hostname node02
[root@localhost ~]# exit
```

Note: the environment preparation below must be performed on all nodes. Upload all files from the preparation stage to every host in the cluster.
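One way to upload the files from the preparation host is an `scp` loop. The sketch below makes several assumptions: root SSH access to each node, and the files sitting in the current directory. `distribute_files` and the `SCP` override are hypothetical names used for illustration:

```bash
# Hypothetical helper: copy files/directories to root's home on every host.
# HOSTS is a space-separated list; set SCP to another command for a dry run.
distribute_files() {
    local hosts=$1 host
    shift
    for host in $hosts; do                # word splitting over the host list is intended
        "${SCP:-scp}" -r "$@" "root@${host}:~/" || return 1
    done
}
```

For example: `distribute_files "10.62.158.200 10.62.158.201 10.62.158.202" calico calico.yaml images-k8s nginx-1.20.2.tar docker-ce-20.10.9-3.el7.x86_64.tar.gz ipset.tar.gz ipvsadm.tar.gz kubernetes-1.23.0.tar.gz`.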

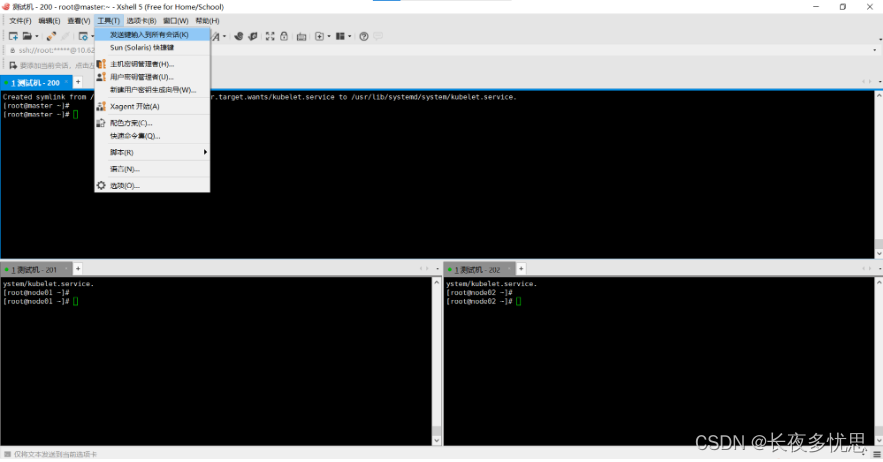

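Configure the three nodes with synchronized commands: type each command once and send it to all three sessions at the same time.

Note: with this approach, make sure the command has finished on every host before moving on to the next step.

Extract the packages

```bash
[root@master ~]# ls
anaconda-ks.cfg  calico  calico.yaml  docker-ce-20.10.9-3.el7.x86_64.tar.gz  images-k8s  ipset.tar.gz  ipvsadm.tar.gz  kubernetes-1.23.0.tar.gz  nginx-1.20.2.tar  sysconfigure.sh
# Extract the packages
[root@master ~]# tar -zxvf docker-ce-20.10.9-3.el7.x86_64.tar.gz
[root@master ~]# tar -zxvf ipset.tar.gz
[root@master ~]# tar -zxvf ipvsadm.tar.gz
[root@master ~]# tar -zxvf kubernetes-1.23.0.tar.gz
```

Offline Docker installation

Enter the Docker package directory:

```bash
[root@master ~]# cd docker-ce-20.10.9-3.el7.x86_64
```

Install Docker offline:

```bash
[root@master docker-ce-20.10.9-3.el7.x86_64]# yum install ./*.rpm -y
```

Set Docker's cgroup driver to systemd, as in the online installation (cgroups limit the CPU, memory and other resources processes may use):

```bash
[root@master docker-ce-20.10.9-3.el7.x86_64]# mkdir -p /etc/docker
[root@master docker-ce-20.10.9-3.el7.x86_64]# cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
```

Start Docker and enable it at boot:

```bash
[root@master docker-ce-20.10.9-3.el7.x86_64]# systemctl enable docker --now
```

Check that Docker is installed.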
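Besides `docker ps`, it is worth confirming that the `daemon.json` change took effect. Kubelet is later configured with `--cgroup-driver=systemd`, and the two drivers should match. `docker info --format '{{.CgroupDriver}}'` prints the active driver. Below is a small check built around it; the function name and the `DOCKER` override are hypothetical:

```bash
# Hypothetical check: succeed only if Docker reports the systemd cgroup driver.
# Set DOCKER to another command for testing.
check_cgroup_driver() {
    local driver
    driver=$("${DOCKER:-docker}" info --format '{{.CgroupDriver}}') || return 1
    if [ "$driver" = "systemd" ]; then
        echo "cgroup driver: systemd"
    else
        echo "unexpected cgroup driver: $driver" >&2
        return 1
    fi
}
```

If it reports `cgroupfs`, check `/etc/docker/daemon.json` and restart Docker with `systemctl restart docker`.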

```bash
[root@master docker-ce-20.10.9-3.el7.x86_64]# docker ps
CONTAINER ID   IMAGE     COMMAND   CREATED   STATUS    PORTS     NAMES
```

Importing the image files

Import the K8s images.
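Every archive is loaded the same way with `docker load -i`, so a loop over a directory avoids typing each file name. Here is a sketch; `load_images` and the `DOCKER` override are hypothetical names:

```bash
# Hypothetical helper: "docker load" every *.tar archive in DIR, in name order.
# Set DOCKER to another command for a dry run.
load_images() {
    local dir=$1 f
    for f in "$dir"/*.tar; do
        [ -e "$f" ] || { echo "no archives in $dir" >&2; return 1; }
        "${DOCKER:-docker}" load -i "$f" || return 1
    done
}
```

`load_images images-k8s && load_images calico` would cover both the K8s and the Calico archives.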

```bash
# Enter the image directory
[root@master ~]# cd images-k8s/
[root@master images-k8s]# ls
coredns-v1.8.6.tar  etcd-3.5.1-0.tar  kube-apiserver-v1.23.0.tar  kube-controller-manager-v1.23.0.tar  kube-proxy-v1.23.0.tar  kube-scheduler-v1.23.0.tar  pause-3.6.tar
# Import the images
[root@master images-k8s]# docker load -i kube-apiserver-v1.23.0.tar
[root@master images-k8s]# docker load -i kube-controller-manager-v1.23.0.tar
[root@master images-k8s]# docker load -i kube-scheduler-v1.23.0.tar
[root@master images-k8s]# docker load -i kube-proxy-v1.23.0.tar
[root@master images-k8s]# docker load -i etcd-3.5.1-0.tar
[root@master images-k8s]# docker load -i coredns-v1.8.6.tar
[root@master images-k8s]# docker load -i pause-3.6.tar
# List the images
[root@master images-k8s]# docker images
REPOSITORY                                                                    TAG       IMAGE ID       CREATED       SIZE
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver            v1.23.0   e6bf5ddd4098   2 years ago   135MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager   v1.23.0   37c6aeb3663b   2 years ago   125MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler            v1.23.0   56c5af1d00b5   2 years ago   53.5MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy                v1.23.0   e03484a90585   2 years ago   112MB
registry.cn-hangzhou.aliyuncs.com/google_containers/etcd                      3.5.1-0   25f8c7f3da61   2 years ago   293MB
registry.cn-hangzhou.aliyuncs.com/google_containers/coredns                   v1.8.6    a4ca41631cc7   2 years ago   46.8MB
registry.cn-hangzhou.aliyuncs.com/google_containers/pause                     3.6       6270bb605e12   2 years ago   683kB
```

Import the Calico images:

```bash
# Enter the image directory
[root@master images-k8s]# cd ..
[root@master ~]# cd calico
# Import the images
[root@master calico]# docker load -i cni-v3.24.1.tar
[root@master calico]# docker load -i node-v3.24.1.tar
[root@master calico]# docker load -i kube-controllers-v3.24.1.tar
# List the images
[root@master calico]# docker images
REPOSITORY                                                                    TAG       IMAGE ID       CREATED         SIZE
calico/cni                                                                    v3.24.1   67fd9ab48451   20 months ago   197MB
calico/node                                                                   v3.24.1   75392e3500e3   20 months ago   223MB
calico/kube-controllers                                                       v3.24.1   f9c3c1813269   20 months ago   71.3MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver            v1.23.0   e6bf5ddd4098   2 years ago     135MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler            v1.23.0   56c5af1d00b5   2 years ago     53.5MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager   v1.23.0   37c6aeb3663b   2 years ago     125MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy                v1.23.0   e03484a90585   2 years ago     112MB
registry.cn-hangzhou.aliyuncs.com/google_containers/etcd                      3.5.1-0   25f8c7f3da61   2 years ago     293MB
registry.cn-hangzhou.aliyuncs.com/google_containers/coredns                   v1.8.6    a4ca41631cc7   2 years ago     46.8MB
registry.cn-hangzhou.aliyuncs.com/google_containers/pause                     3.6       6270bb605e12   2 years ago     683kB
```

Import the Nginx application image:

```bash
# Import the Nginx application image
[root@master calico]# cd ..
[root@master ~]# docker load -i nginx-1.20.2.tar
```

Configure local name resolution between the cluster nodes. The cluster must be able to resolve every node's hostname during initialization.
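Because the same commands are sent to all three nodes at once, an idempotent append can be handier than editing the file in vim. Below is a sketch that adds an entry only when the hostname is not already listed. `add_host` and `HOSTS_FILE` are hypothetical names:

```bash
# Hypothetical helper: append "IP HOSTNAME" to the hosts file unless HOSTNAME
# already appears on a non-comment line, so re-running it is harmless.
# HOSTS_FILE defaults to /etc/hosts; override it to try the function safely.
add_host() {
    local ip=$1 name=$2 file=${HOSTS_FILE:-/etc/hosts}
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$file" 2>/dev/null; then
        return 0                          # already present
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For this cluster: `add_host 10.62.158.200 master; add_host 10.62.158.201 node01; add_host 10.62.158.202 node02`.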

```bash
[root@master ~]# vim /etc/hosts
# Append the following lines:
10.62.158.200 master
10.62.158.201 node01
10.62.158.202 node02
```

Enable bridge netfilter

Add the configuration file:

```bash
cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
```

Load the br_netfilter module so that packets crossing bridge devices are processed by iptables.
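`modprobe` only loads the module until the next reboot. On systemd-based systems such as CentOS 7, `systemd-modules-load` loads the modules listed in `/etc/modules-load.d/*.conf` at boot. One way to make br_netfilter persistent is sketched below. The file name `k8s.conf`, the function name and the `MODULES_DIR` override are assumptions:

```bash
# Sketch: register a kernel module for loading at boot via systemd-modules-load.
# MODULES_DIR defaults to /etc/modules-load.d; override it to test safely.
persist_module() {
    local module=$1 dir=${MODULES_DIR:-/etc/modules-load.d}
    local conf="$dir/k8s.conf"
    mkdir -p "$dir"
    # Append the module name only once, so the function is idempotent.
    grep -qxF "$module" "$conf" 2>/dev/null || echo "$module" >> "$conf"
}
```

Usage: `persist_module br_netfilter`.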

```bash
[root@master ~]# modprobe br_netfilter && lsmod | grep br_netfilter
```

Load the kernel parameters from k8s.conf so that the settings above take effect:

```bash
[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf
```

Configure the IPVS proxy

Install ipset and ipvsadm:

```bash
# Enter the ipset package directory
[root@master ~]# cd ipset
# Install ipset
[root@master ipset]# yum install ./*.rpm -y
# Enter the ipvsadm package directory
[root@master ipset]# cd ..
[root@master ~]# cd ipvsadm
# Install ipvsadm
[root@master ipvsadm]# yum install ./*.rpm -y
```

Write the IPVS-related modules to be loaded into a file:

```bash
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF
```

Make the file executable:

```bash
[root@master ipvsadm]# chmod +x /etc/sysconfig/modules/ipvs.modules
```

Run the file to load the IPVS modules:

```bash
[root@master ipvsadm]# /etc/sysconfig/modules/ipvs.modules
```

Check that IPVS is configured.
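Here is a scripted version of the `lsmod` check that follows. The sketch reads `lsmod` output on stdin and reports which of the modules written to ipvs.modules are missing. The function name is hypothetical:

```bash
# Sketch: report IPVS-related modules missing from lsmod output read on stdin.
# The module list mirrors /etc/sysconfig/modules/ipvs.modules.
check_ipvs_modules() {
    local lsmod_out missing="" m
    lsmod_out=$(cat)
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
        # lsmod prints the module name in the first column
        printf '%s\n' "$lsmod_out" | awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' \
            || missing="$missing $m"
    done
    if [ -n "$missing" ]; then
        echo "missing modules:$missing" >&2
        return 1
    fi
    echo "all IPVS modules loaded"
}
```

Usage: `lsmod | check_ipvs_modules`.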

```bash
[root@master ipvsadm]# lsmod | grep ip_vs
ip_vs_sh               12688  0
ip_vs_wrr              12697  0
ip_vs_rr               12600  0
ip_vs                 145497  6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack          133095  7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4
libcrc32c              12644  4 xfs,ip_vs,nf_nat,nf_conntrack
```

Disable the swap partition

Disable it for the current boot. This step is mandatory, because by default kubelet refuses to start while swap is enabled:

```bash
[root@master ipvsadm]# swapoff -a
```

Disable it permanently.
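The `sed` command that follows comments out every line containing the word swap. That includes lines that are already comments, which then gain a second `#`. The narrower alternative sketched below only comments out active entries whose filesystem type (third field) is `swap`. The function name is hypothetical:

```bash
# Sketch: comment out active swap entries in an fstab-format file.
# Only non-comment lines whose third field (filesystem type) is "swap" change.
disable_swap_entries() {
    local file=${1:-/etc/fstab} tmp
    tmp=$(mktemp) || return 1
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$file" > "$tmp" \
        && cat "$tmp" > "$file"           # rewrite in place, keeping the file's permissions
    rm -f "$tmp"
}
```

Usage: `disable_swap_entries /etc/fstab`.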

```bash
[root@master ipvsadm]# sed -ri 's/.*swap.*/#&/' /etc/fstab
```

Check swap:

```bash
[root@master ipvsadm]# free -h
              total        used        free      shared  buff/cache   available
Mem:           3.8G        177M        315M         11M        3.4G        3.3G
Swap:            0B          0B          0B
```

Deploying the cluster with kubeadm

Install the K8s cluster software:

```bash
# Enter the package directory
[root@master ipvsadm]# cd ..
[root@master ~]# cd kubernetes-1.23.0
# Install the packages
[root@master kubernetes-1.23.0]# yum install ./*.rpm -y
```

Configure kubelet to use the systemd cgroup driver, matching Docker:

```bash
cat > /etc/sysconfig/kubelet <<EOF
KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"
EOF
```

Enable kubelet at boot. It does not need to be started now; it starts automatically once the cluster is initialized:

```bash
[root@master kubernetes-1.23.0]# systemctl enable kubelet
```

Cluster initialization: this is done on the master node only, so turn off the synchronized multi-session input.

List the images the cluster needs:

```bash
[root@master ~]# kubeadm config images list
```

The output lists the component images required to initialize the cluster. They were prepared earlier under the Aliyun mirror names (registry.cn-hangzhou.aliyuncs.com/google_containers/...):

```
W0412 13:05:08.946167   19834 version.go:103] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://cdn.dl.k8s.io/release/stable-1.txt": dial tcp 146.75.113.55:443: i/o timeout (Client.Timeout exceeded while awaiting headers)
W0412 13:05:08.946214   19834 version.go:104] falling back to the local client version: v1.23.0
k8s.gcr.io/kube-apiserver:v1.23.0
k8s.gcr.io/kube-controller-manager:v1.23.0
k8s.gcr.io/kube-scheduler:v1.23.0
k8s.gcr.io/kube-proxy:v1.23.0
k8s.gcr.io/pause:3.6
k8s.gcr.io/etcd:3.5.1-0
k8s.gcr.io/coredns/coredns:v1.8.6
```

Create the cluster initialization configuration file:

```bash
[root@master kubernetes-1.23.0]# cd ..
[root@master ~]# kubeadm config print init-defaults > kubeadm-config.yml
```

Edit the configuration file:

```bash
[root@master ~]# vim kubeadm-config.yml
```

Then change the following settings.
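These edits can also be applied non-interactively. The sketch assumes the placeholders that `kubeadm config print init-defaults` emits in v1.23: `advertiseAddress: 1.2.3.4`, `name: node` and `imageRepository: k8s.gcr.io`. It relies on GNU sed's `-i`. It also points `imageRepository` at the Aliyun mirror. The images loaded earlier are tagged `registry.cn-hangzhou.aliyuncs.com/google_containers/...`, while the default repository would make kubeadm look for `k8s.gcr.io/...` images and try to pull them. The function name is hypothetical:

```bash
# Sketch: patch a kubeadm init-defaults file for this cluster (GNU sed -i).
# Assumes the v1.23 defaults "advertiseAddress: 1.2.3.4", "name: node" and
# "imageRepository: k8s.gcr.io"; adjust the patterns if your file differs.
patch_kubeadm_config() {
    local file=$1 ip=$2 name=$3 repo=$4
    sed -i \
        -e "s|^\( *advertiseAddress:\).*|\1 ${ip}|" \
        -e "s|^\( *name:\) node\$|\1 ${name}|" \
        -e "s|^\( *imageRepository:\).*|\1 ${repo}|" \
        "$file"
}
```

Usage: `patch_kubeadm_config kubeadm-config.yml 10.62.158.200 master registry.cn-hangzhou.aliyuncs.com/google_containers`.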

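```yaml
# IP address of this host
advertiseAddress: 10.62.158.200
# Name of this host
name: master
# Registry prefix of the locally loaded images (the default is k8s.gcr.io)
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
```

`imageRepository` must match the prefix of the images loaded with `docker load`. With the default `k8s.gcr.io`, kubeadm does not find the images locally and tries to pull them, which fails offline. Also note that loading the IPVS modules does not by itself switch kube-proxy to IPVS mode. kube-proxy runs in iptables mode unless a `KubeProxyConfiguration` with `mode: ipvs` is added to this file.

Initialize the cluster:

```bash
[root@master ~]# kubeadm init --config kubeadm-config.yml --upload-certs
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.62.158.200:6443 --token abcdef.0123456789abcdef \
	--discovery-token-ca-cert-hash sha256:172f1c0a0f43fba836fd15a0eb515630ec8b73eccbd6b313b32fad317658e3fa
```

As the output instructs, run the following commands to create the cluster admin kubeconfig:

```bash
[root@master ~]# mkdir -p $HOME/.kube
[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Join the worker nodes using the command from the output, then verify on the master.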
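The join command embeds a bootstrap token. In the init-defaults file this is `abcdef.0123456789abcdef` with a 24-hour TTL. Once the token has expired, `kubeadm token create --print-join-command` on the master prints a fresh join command. If the init output was saved, for example with `kubeadm init ... | tee kubeadm-init.log` (the file name is an assumption), the command can also be recovered from the log. The sketch below prints the first `kubeadm join` command found, including its backslash-continued lines. The function name is hypothetical:

```bash
# Sketch: print the first "kubeadm join" command (with its backslash-continued
# lines) from saved "kubeadm init" output; the default file name is an assumption.
extract_join_cmd() {
    awk '
        /^[ \t]*kubeadm join/ { printing = 1 }
        printing {
            print
            # the last line of the command has no trailing backslash
            if ($0 !~ /\\[ \t]*$/) exit
        }
    ' "${1:-kubeadm-init.log}"
}
```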

Join node01 to the cluster:

```bash
[root@node01 kubernetes-1.23.0]# kubeadm join 10.62.158.200:6443 --token abcdef.0123456789abcdef \
> --discovery-token-ca-cert-hash sha256:172f1c0a0f43fba836fd15a0eb515630ec8b73eccbd6b313b32fad317658e3fa
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
```

Join node02 to the cluster:

```bash
[root@node02 kubernetes-1.23.0]# kubeadm join 10.62.158.200:6443 --token abcdef.0123456789abcdef \
> --discovery-token-ca-cert-hash sha256:172f1c0a0f43fba836fd15a0eb515630ec8b73eccbd6b313b32fad317658e3fa
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
```

Check the status of the three nodes. They report NotReady because no pod network add-on has been installed yet:

```bash
[root@master ~]# kubectl get nodes
NAME     STATUS     ROLES                  AGE     VERSION
master   NotReady   control-plane,master   2m49s   v1.23.0
node01   NotReady   <none>                 70s     v1.23.0
node02   NotReady   <none>                 35s     v1.23.0
```

Deploy the Calico network

Check that calico.yaml has been uploaded:

```bash
[root@master ~]# ls
anaconda-ks.cfg  calico.yaml  docker-ce-20.10.9-3.el7.x86_64.tar.gz  ipset  ipvsadm  kubeadm-config.yml  kubernetes-1.23.0.tar.gz  sysconfigure.sh
calico  docker-ce-20.10.9-3.el7.x86_64  images-k8s  ipset.tar.gz  ipvsadm.tar.gz  kubernetes-1.23.0  nginx-1.20.2.tar
```

Create the Calico network:

```bash
[root@master ~]# kubectl apply -f calico.yaml
```

Check the status of the Calico pods and wait until every pod is Running with all of its containers ready (READY 1/1). The K8s cluster is then complete.
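Rather than re-running the command by hand, the wait can be scripted. This sketch parses `kubectl get pod` output on stdin. It succeeds only when at least one pod is listed and every pod is Running with all containers ready; `all_pods_ready` is a hypothetical name. For nodes, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` achieves a similar effect directly.

```bash
# Sketch: succeed only if every pod in "kubectl get pod" output read on stdin
# has READY x/y with x == y and STATUS Running (and at least one pod is listed).
all_pods_ready() {
    awk '
        $1 == "NAME" { next }                   # skip the header line if present
        NF == 0      { next }                   # ignore blank lines
        {
            n++
            split($2, r, "/")                   # READY column, e.g. "0/1"
            if (r[1] != r[2] || $3 != "Running") { print "not ready: " $1; bad = 1 }
        }
        END { if (n == 0) exit 1; exit (bad ? 1 : 0) }
    '
}
```

For example: `until kubectl get pod -n kube-system | grep calico | all_pods_ready; do sleep 10; done`.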

```bash
# Check the Calico pods
[root@master ~]# kubectl get pod -n kube-system | grep calico
calico-kube-controllers-66966888c4-sjnfg   0/1     Running   0          42s
calico-node-2kdmr                          1/1     Running   0          42s
calico-node-mk4ct                          1/1     Running   0          42s
calico-node-qjgqd                          1/1     Running   0          42s
# The cluster is up
[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES                  AGE     VERSION
master   Ready    control-plane,master   5m19s   v1.23.0
node01   Ready    <none>                 3m40s   v1.23.0
node02   Ready    <none>                 3m5s    v1.23.0
```

Cluster test

Create the nginx manifest nginx.yml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.20.2
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30000
```

Apply the manifest to create the nginx application:

```bash
[root@master ~]# kubectl apply -f nginx.yml
pod/nginx created
service/nginx-svc created
```

The deployment succeeded; check the pod status:

```bash
[root@master ~]# kubectl get pod
NAME    READY   STATUS    RESTARTS   AGE
nginx   1/1     Running   0          29s
```

List the services and their ports:

```bash
[root@master ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        7m23s
nginx-svc    NodePort    10.97.195.147   <none>        80:30000/TCP   17s
```

Open the nginx service through any cluster node, and you are done:

- http://10.62.158.200:30000/
- http://10.62.158.201:30000/
- http://10.62.158.202:30000/
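To check all three nodes from the command line instead of a browser, a `curl` loop can request the NodePort on each node and report the HTTP status. This is a sketch; `check_nodeport` and the `CURL` override are hypothetical names:

```bash
# Sketch: request the NodePort service on every node and report the HTTP status.
# Set CURL to another command for testing; curl prints 000 when it cannot connect.
check_nodeport() {
    local port=$1 ip code rc=0
    shift
    for ip in "$@"; do
        code=$("${CURL:-curl}" -s -o /dev/null -w '%{http_code}' --max-time 5 "http://${ip}:${port}/") || true
        echo "${ip}:${port} -> HTTP ${code:-none}"
        [ "$code" = "200" ] || rc=1
    done
    return $rc
}
```

Usage: `check_nodeport 30000 10.62.158.200 10.62.158.201 10.62.158.202`.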