学习记录

autoDL服务器训练YOLOV8

文章目录

前言

使用autoDL服务器自定义训练yolov8

一、获取autoDL服务器

网址:autoDL

根据需求购买设备(建议选择4/4这种没人租用的,可以独自使用硬盘和cpu,训练速度更快)

自选环境:

从右上角控制台进入购买的实例:

二、通过finalShell连接服务器

下载地址:finalShell

下载安装包时,将该网址加入设置>>Cookie和网站权限>>不安全的内容>>添加入允许,不然可能会下载不了。

安装过程简单略过

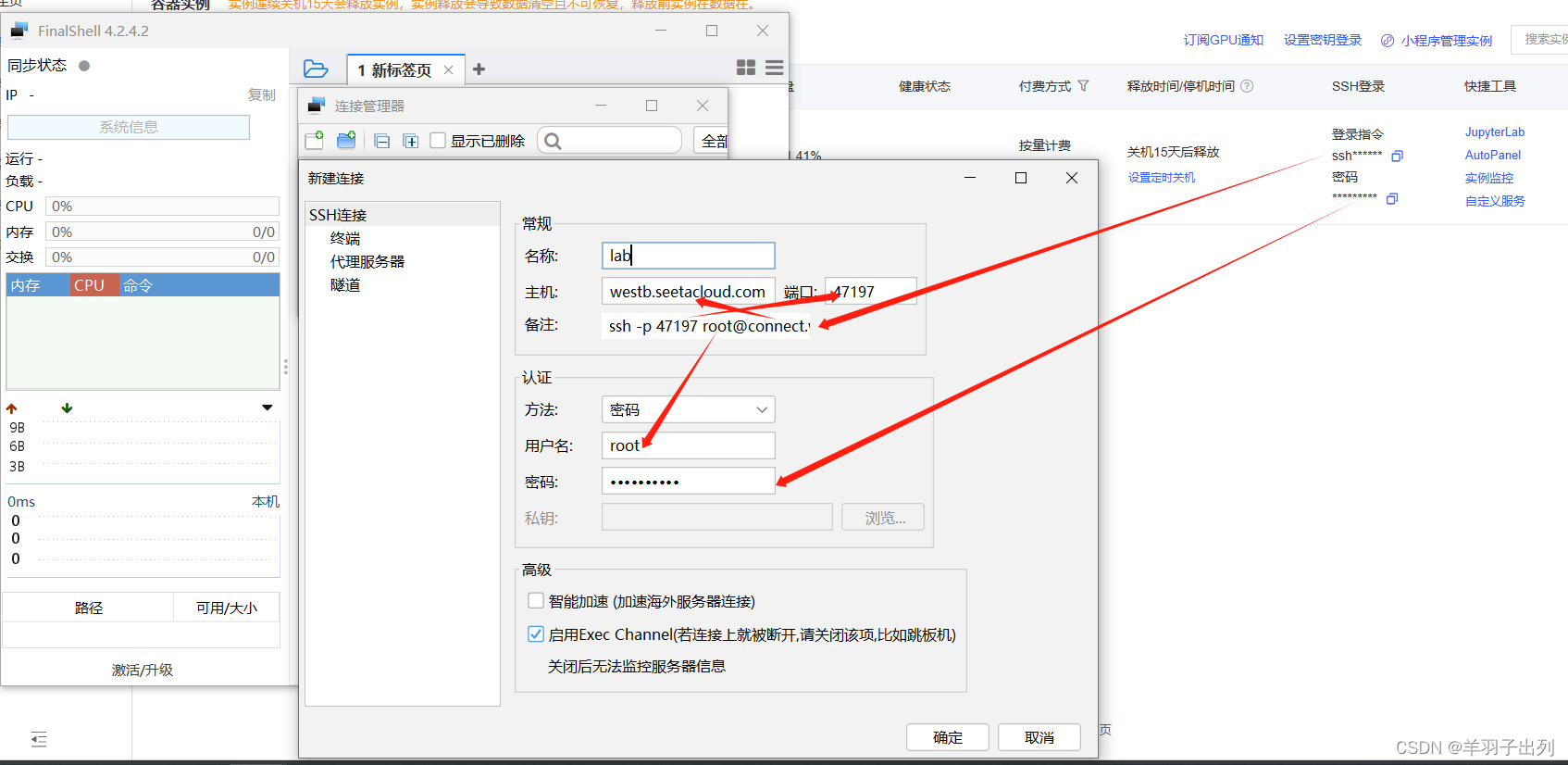

连接:

复制登陆指令到备注,名称随便写,主机复制@后面的部分进去,端口为root前面的数字。用户名为@前面的字符root,密码为登录指令下的密码。

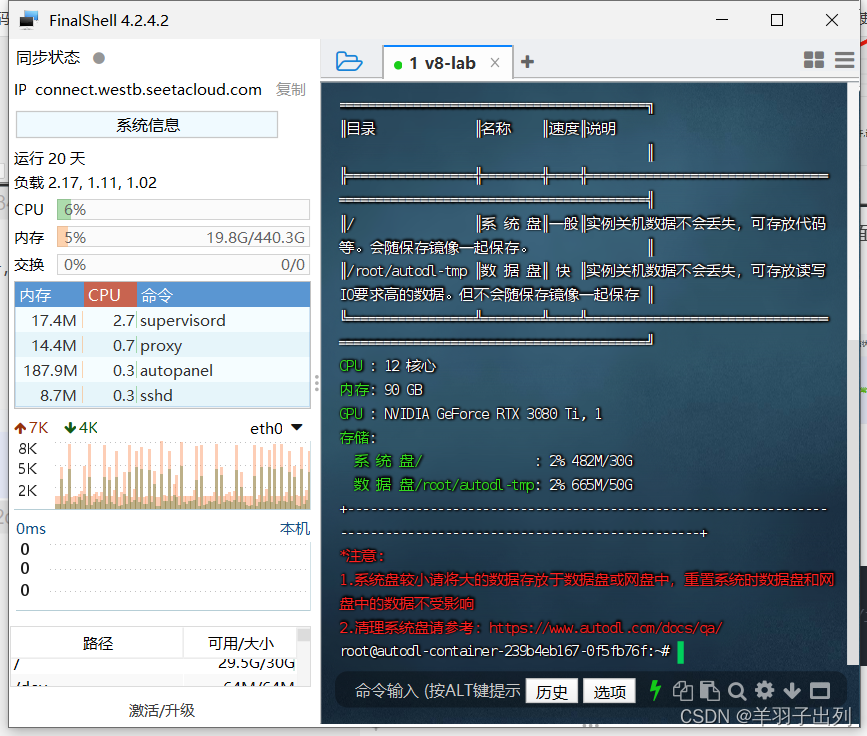

下面是进入后的界面:

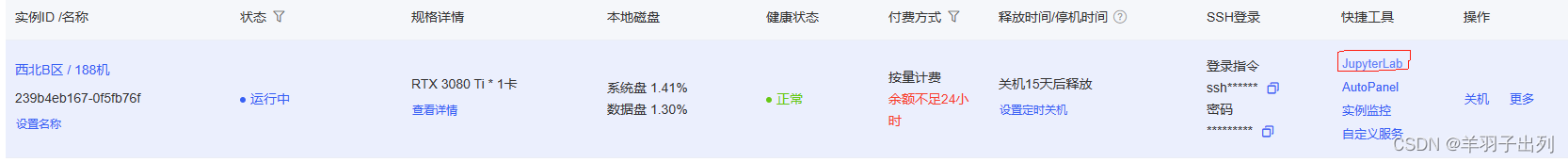

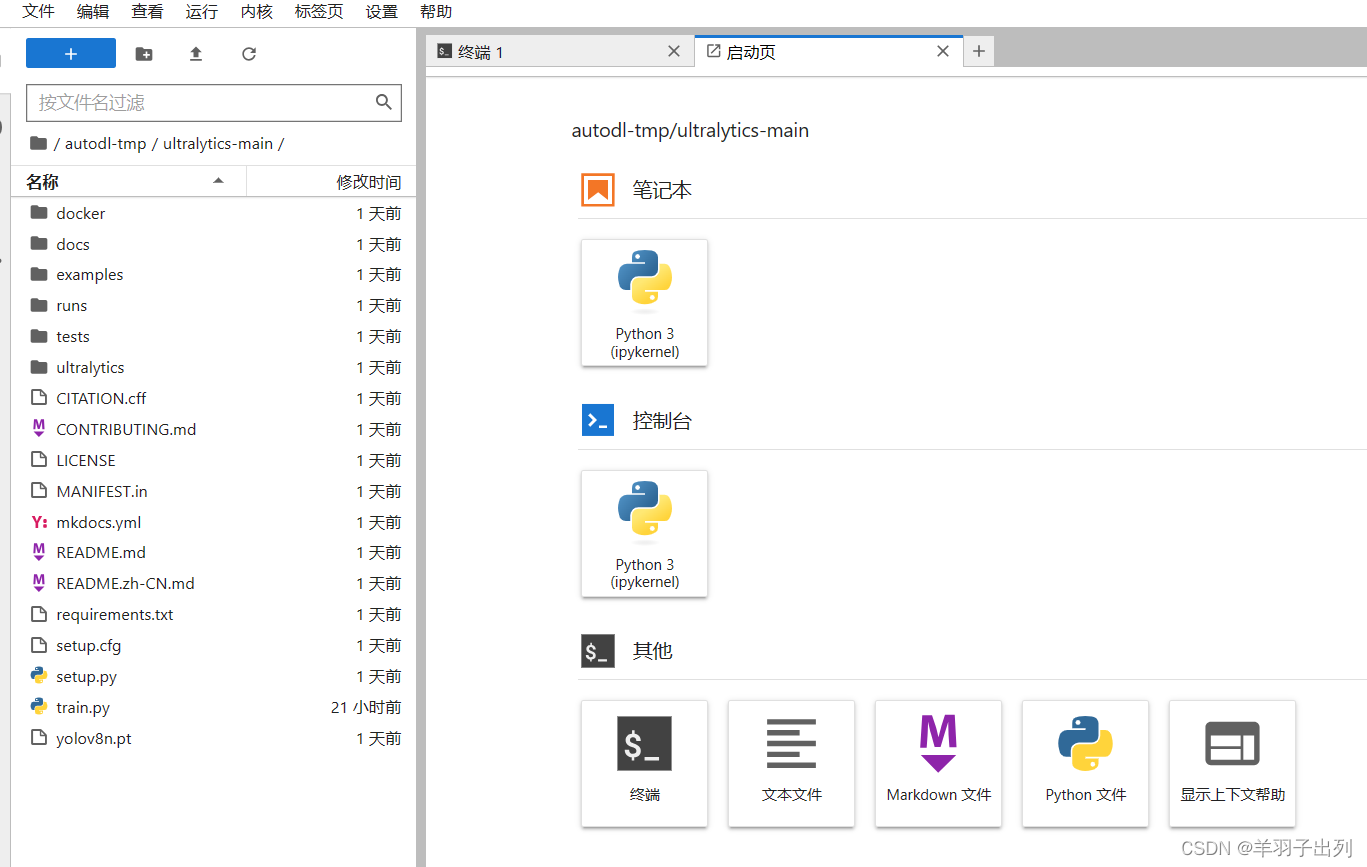

在autoDL实例中点击jupyterlab进入

在autoDL的帮助文档中有基本的教程,大部分问题都有解决案例。

首先设置学术资源加速:

终端输入source /etc/network_turbo

获取ultralytics:(灰色命令为用户输入指令)

root@autodl-container-239b4eb167-0f5fb76f:~# source /etc/network_turbo 设置成功 root@autodl-container-239b4eb167-0f5fb76f:~# ls autodl-pub autodl-tmp miniconda3 tf-logs root@autodl-container-239b4eb167-0f5fb76f:~# cd autodl-tmp root@autodl-container-239b4eb167-0f5fb76f:~/autodl-tmp# git clone https://github.com/ultralytics/ultralytics.git Cloning into 'ultralytics'... remote: Enumerating objects: 16392, done. remote: Counting objects: 100% (107/107), done. remote: Compressing objects: 100% (90/90), done. remote: Total 16392 (delta 49), reused 47 (delta 17), pack-reused 16285 Receiving objects: 100% (16392/16392), 8.74 MiB | 5.47 MiB/s, done. Resolving deltas: 100% (11359/11359), done. root@autodl-container-239b4eb167-0f5fb76f:~/autodl-tmp# pip install ultralytics 用户使用数据一般放在autodl-tmp目录中。

三、训练yolov8

可以通过finalshell图形化界面直接拖动训练数据进入服务器开始训练。

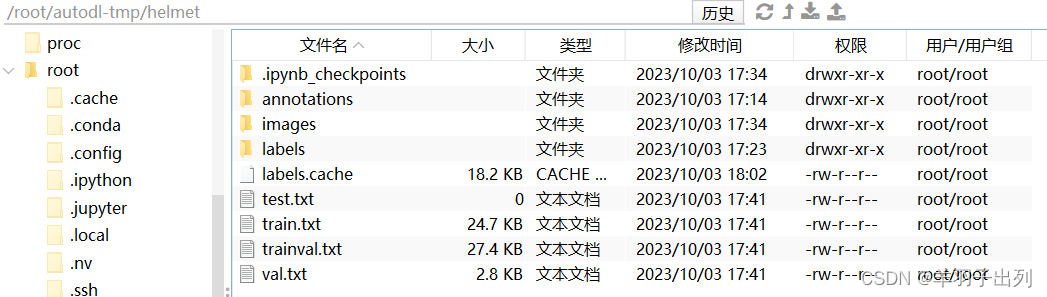

helmet文件即训练训练数据。

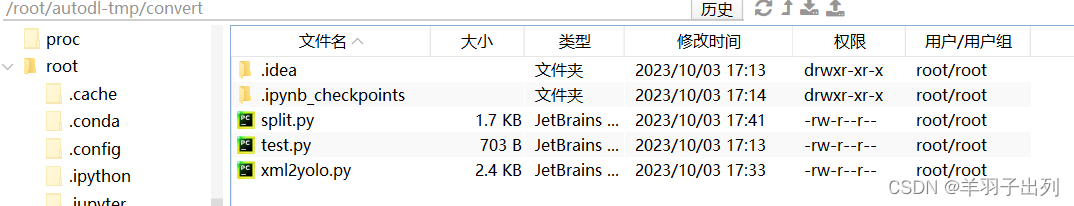

convert文件是格式转换代码,目的是将xml转为yolo的txt,并划分数据集

通过jupyterlab中的终端运行代码:

root@autodl-container-239b4eb167-0f5fb76f:~/autodl-tmp# cd convert root@autodl-container-239b4eb167-0f5fb76f:~/autodl-tmp/convert# ls split.py test.py xml2yolo.py root@autodl-container-239b4eb167-0f5fb76f:~/autodl-tmp/convert# python xml2yolo.py 注意转换路径

xml2yolo:

# -*- coding: utf-8 -*- from xml.dom import minidom import os import glob lut = { 'Without Helmet': 0, 'With Helmet': 1 } def convert_coordinates(size, box): dw = 1.0 / size[0] dh = 1.0 / size[1] x = (box[0] + box[1]) / 2.0 y = (box[2] + box[3]) / 2.0 w = box[1] - box[0] h = box[3] - box[2] x = x * dw w = w * dw y = y * dh h = h * dh return (x, y, w, h) def convert_xml2yolo(lut): for fname in glob.glob("..\helmet/annotations/*.xml"): xmldoc = minidom.parse(fname) fname_out = ('..\helmet\labels/' + os.path.basename(fname).split('.')[0] + '.txt') with open(fname_out, "w") as f: itemlist = xmldoc.getElementsByTagName('object') size = xmldoc.getElementsByTagName('size')[0] width = int((size.getElementsByTagName('width')[0]).firstChild.data) height = int((size.getElementsByTagName('height')[0]).firstChild.data) # if len(itemlist) == 0: # print(fname_out) # if width > 1000: # print(fname_out) for item in itemlist: # get class label classid = (item.getElementsByTagName('name')[0]).firstChild.data if classid in lut: label_str = str(lut[classid]) else: label_str = "-1" print("warning: label '%s' not in look-up table" % classid) # get bbox coordinates xmin = ((item.getElementsByTagName('bndbox')[0]).getElementsByTagName('xmin')[0]).firstChild.data ymin = ((item.getElementsByTagName('bndbox')[0]).getElementsByTagName('ymin')[0]).firstChild.data xmax = ((item.getElementsByTagName('bndbox')[0]).getElementsByTagName('xmax')[0]).firstChild.data ymax = ((item.getElementsByTagName('bndbox')[0]).getElementsByTagName('ymax')[0]).firstChild.data b = (float(xmin), float(xmax), float(ymin), float(ymax)) bb = convert_coordinates((width, height), b) # print(bb) f.write(label_str + " " + " ".join([("%.6f" % a) for a in bb]) + '\n') # print("wrote %s" % fname_out) def main(): convert_xml2yolo(lut) if __name__ == '__main__': main() split:

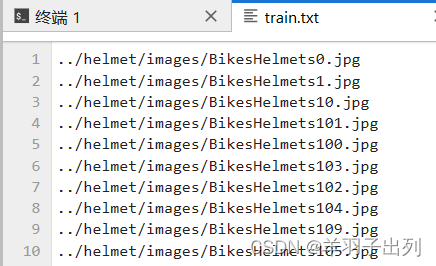

# coding:utf-8 import os import random import argparse parser = argparse.ArgumentParser() #xml文件的地址 parser.add_argument('--xml_path', default=r'../helmet/annotations', type=str, help='input xml label path') #数据集的划分 parser.add_argument('--txt_path', default='../helmet', type=str, help='output txt label path') opt = parser.parse_args() trainval_percent = 1.0 # 训练集和验证集所占比例。 这里没有划分测试集 train_percent = 0.9 # 训练集所占比例,可自己进行调整 xmlfilepath = opt.xml_path txtsavepath = opt.txt_path total_xml = os.listdir(xmlfilepath) if not os.path.exists(txtsavepath): os.makedirs(txtsavepath) num = len(total_xml) list_index = range(num) tv = int(num * trainval_percent) tr = int(tv * train_percent) trainval = random.sample(list_index, tv) train = random.sample(trainval, tr) file_trainval = open(txtsavepath + '/trainval.txt', 'w') file_test = open(txtsavepath + '/test.txt', 'w') file_train = open(txtsavepath + '/train.txt', 'w') file_val = open(txtsavepath + '/val.txt', 'w') for i in list_index: name = '../helmet/images/' + total_xml[i][:-4] + '.jpg' + '\n' if i in trainval: file_trainval.write(name) if i in train: file_train.write(name) else: file_val.write(name) else: file_test.write(name) file_trainval.close() file_train.close() file_val.close() file_test.close() def conv(): for img_name in os.listdir(r"../helmet/images"): path = os.path.join(r"../helmet/images", img_name) os.rename(path, path[: -4] + '.jpg') conv() 生成结果:(本项目只需要train.txt和val.txt)

在路径autodl-tmp/ultralytics/ultralytics/cfg/datasets/创建数据集helmet.yaml文件(注意修改路径):

# Ultralytics YOLO 🚀, AGPL-3.0 license # COCO128 dataset https://www.kaggle.com/ultralytics/coco128 (first 128 images from COCO train2017) by Ultralytics # Example usage: yolo train data=coco128.yaml # parent # ├── ultralytics # └── datasets # └── coco128 ← downloads here (7 MB) # Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..] path: ../autodl-tmp/helmet # dataset root dir train: train.txt # train images (relative to 'path') 128 images val: val.txt # val images (relative to 'path') 128 images test: # test images (optional) # Classes names: 0: without_helmet 1: with_helmet 同时在路径autodl-tmp/ultralytics-main/ultralytics/cfg/models/v8/yolov8.yaml修改所训练模型的yaml文件(根据类别数修改):

nc: 2 # number of classes 现在可以训练了:

在ultralytics的目录下创建train.py来训练

从ultralytics中拷贝代码到train.py:

from ultralytics import YOLO # Load a model model = YOLO("yolov8n.yaml") # build a new model from scratch model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training) # Use the model model.train(data="../ultralytics-main/ultralytics/cfg/datasets/helmet.yaml", epochs=500, imgsz=1280, batch=23) # train the model metrics = model.val() # evaluate model performance on the validation set # results = model("https://ultralytics.com/images/bus.jpg") # predict on an image path = model.export(format="onnx") # export the model to ONNX format 注意修改路径。

通过终端运行train.py

然后可能会出现一个路径错误:

Traceback (most recent call last):

File “train.py”, line 8, in

model.train(data=“…/ultralytics-main/ultralytics/cfg/datasets/helmet.yaml”, epochs=500, imgsz=1280, batch=23) # train the model

File “/root/autodl-tmp/ultralytics-main/ultralytics/engine/model.py”, line 329, in train

self.trainer = (trainer or self._smart_load(‘trainer’))(overrides=args, _callbacks=self.callbacks)

File “/root/autodl-tmp/ultralytics-main/ultralytics/engine/trainer.py”, line 122, in init

raise RuntimeError(emojis(f"Dataset ‘{clean_url(self.args.data)}’ error ❌ {e}")) from e

RuntimeError: Dataset ‘…/ultralytics-main/ultralytics/cfg/datasets/helmet.yaml’ error ❌

Dataset ‘…/ultralytics-main/ultralytics/cfg/datasets/helmet.yaml’ images not found ⚠️, missing path ‘/root/autodl-tmp/ultralytics-main/autodl-tmp/helmet/val.txt’

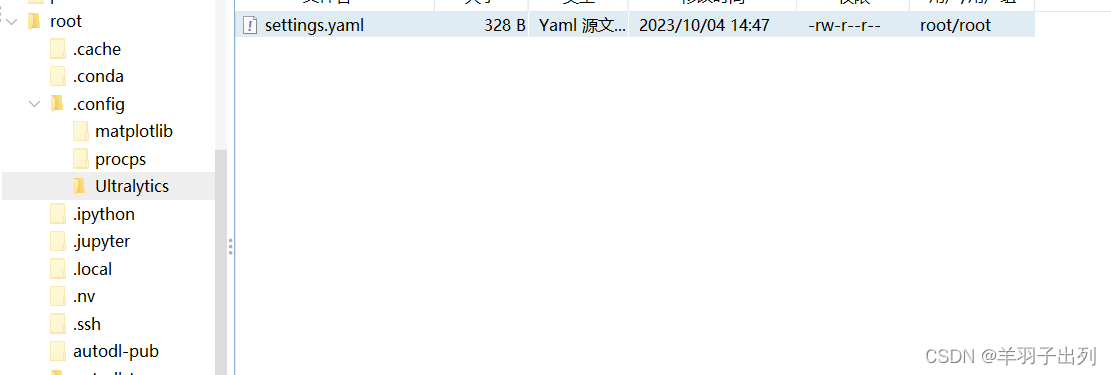

Note dataset download directory is ‘/root/autodl-tmp/ultralytics-main/datasets’. You can update this in '/root/.config/Ultralytics/settings.yaml

通过finalshell对这个root/.config/Ultralytics/settings.yaml中路径的修改:

datasets_dir: /root/autodl-tmp/ultralytics-main/datasets 修改为:

datasets_dir: /root/autodl-tmp Ctrl+s保存

再次运行train.py

开始训练:

注:如果出现其他路径错误请检查train.txt或val.txt的路径:

总结

在使用autoDL时候,经常会出现模型停止训练,但是后台线程未停止,所以需要kill train.py,帮助文档中的常见问题中记录了解决方案,有时候包不全也需要去下载,总之问题基本上都可以通过帮助文档结局。

注:本文仅供本人学习,作为学习记录,流程基本上参考该up主视频