
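Defining the action and observation specifications

Actions and observations are each described by one of two specification types: rlNumericSpec and rlFiniteSetSpec.

- rlNumericSpec: a continuous action or observation.

- rlFiniteSetSpec: a discrete action or observation.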

    obsInfo = rlNumericSpec([3 1],...        % create a 3x1 observation
        'LowerLimit',[-inf -inf 0  ]',...
        'UpperLimit',[ inf  inf inf]');
    obsInfo.Name = 'observations';
    obsInfo.Description = 'integrated error, error, and measured height';
    numObservations = obsInfo.Dimension(1);  % observation dimension (optional line)
    actInfo = rlNumericSpec([1 1]);
    actInfo.Name = 'flow';
    numActions = actInfo.Dimension(1);       % optional line

HRV_guide example:

    numObs = 8;
    ObservationInfo = rlNumericSpec([8 1]);
    ObservationInfo.Name = 'States & Delta';
    ObservationInfo.Description = 'r, V, lon, lat, gamma, psi, delta_lon, delta_lat';
    numAct = 1;
    ActionInfo = rlNumericSpec([1 1],...
        'LowerLimit',[MIN_action]',...
        'UpperLimit',[MAX_action]');         % continuous action (control input) space
    ActionInfo.Name = 'Guidance Action';

Creating the environment

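Create the environment from the observation specification, the action specification, and the environment's step and reset functions:

    env = rlFunctionEnv(obsInfo,actInfo,stepfcn,resetfcn)

Here stepfcn is the step function you write for the environment, and resetfcn is the function that resets it.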

HRV_guide example:

    env = rlFunctionEnv(ObservationInfo,ActionInfo,'Hyper_Guidance_StepFunction','Hyper_Guidance_ResetFunction');

Checking that the environment is valid

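    % Test the environment
    rng(0);                          % fix the random seed
    InitialObs = reset(env)
    % The second argument of step is the action. The scalar simulation_step
    % serves here only as a dummy 1x1 action.
    [NextObs,Reward,IsDone,LoggedSignals] = step(env,simulation_step);
    NextObs
    Reward

If these values print normally, the environment is at least structurally sound.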

Creating the networks

Critic network

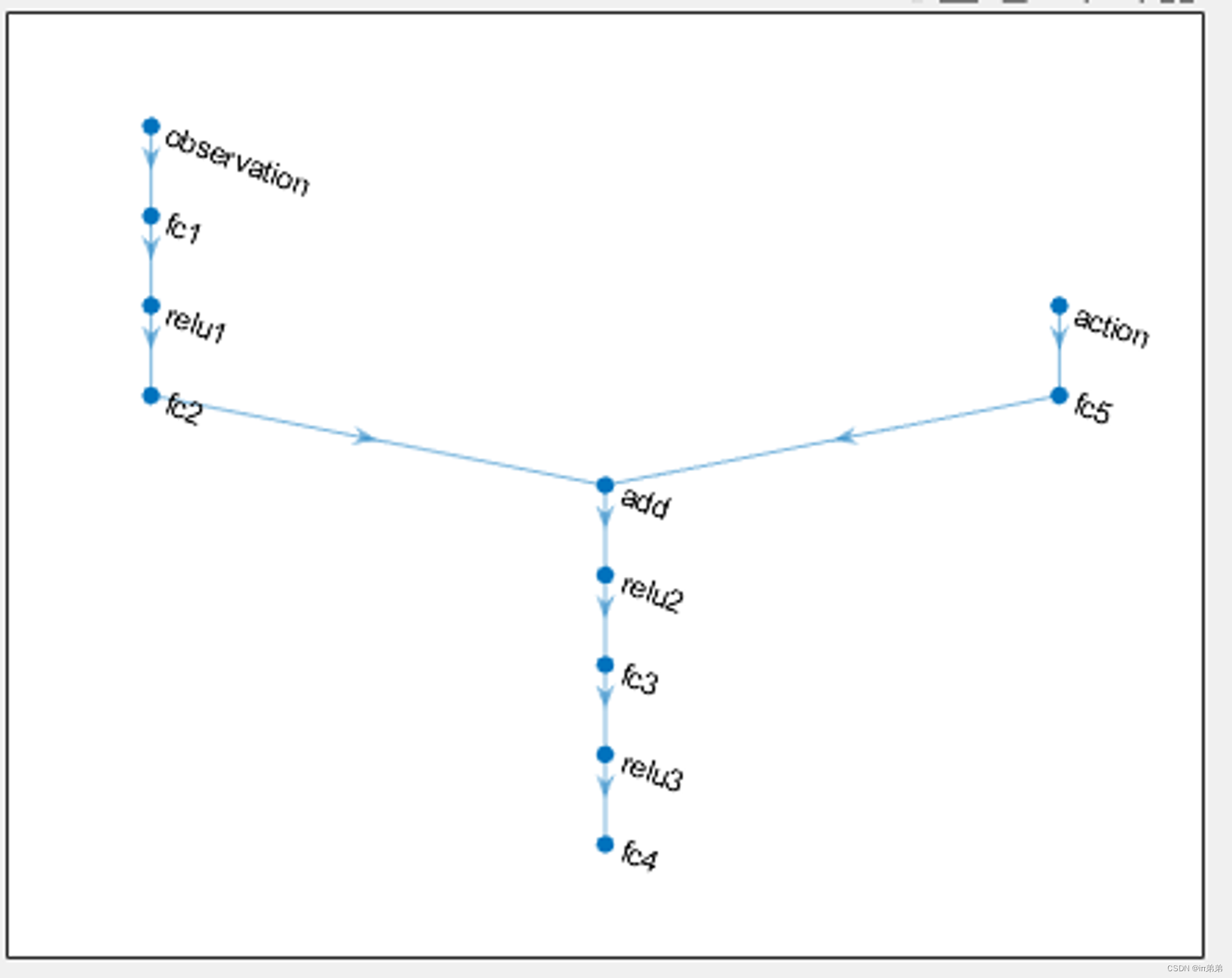

    % Create the networks
    hiddenLayerSize = 128;
    observationPath = [
        imageInputLayer([numObs,1],'Normalization','none','Name','observation')
        fullyConnectedLayer(hiddenLayerSize,'Name','fc1')
        reluLayer('Name','relu1')
        fullyConnectedLayer(hiddenLayerSize,'Name','fc2')
        additionLayer(2,'Name','add')
        reluLayer('Name','relu2')
        fullyConnectedLayer(hiddenLayerSize,'Name','fc3')
        reluLayer('Name','relu3')
        fullyConnectedLayer(1,'Name','fc4')];
    actionPath = [
        imageInputLayer([numAct,1],'Normalization','none','Name','action')
        fullyConnectedLayer(hiddenLayerSize,'Name','fc5')];
    % Create the layer graph.
    criticNetwork = layerGraph(observationPath);
    criticNetwork = addLayers(criticNetwork,actionPath);
    % Connect actionPath to observationPath.
    criticNetwork = connectLayers(criticNetwork,'fc5','add/in2');

MATLAB R2020a does not support featureInputLayer, so imageInputLayer is used instead. additionLayer adds a junction with two inputs to the network. The observation path is first turned into a graph with layerGraph, and the action input path is then attached to that junction with connectLayers(criticNetwork,'fc5','add/in2'). The two inputs are needed because a Q network takes both s and a. The critic network graph is shown in the figure above.

Setting the critic training options

    criticOptions = rlRepresentationOptions('LearnRate',1e-03,'GradientThreshold',1);
    critic = rlQValueRepresentation(criticNetwork,ObservationInfo,ActionInfo,...
        'Observation',{'observation'},'Action',{'action'},criticOptions);

Actor network

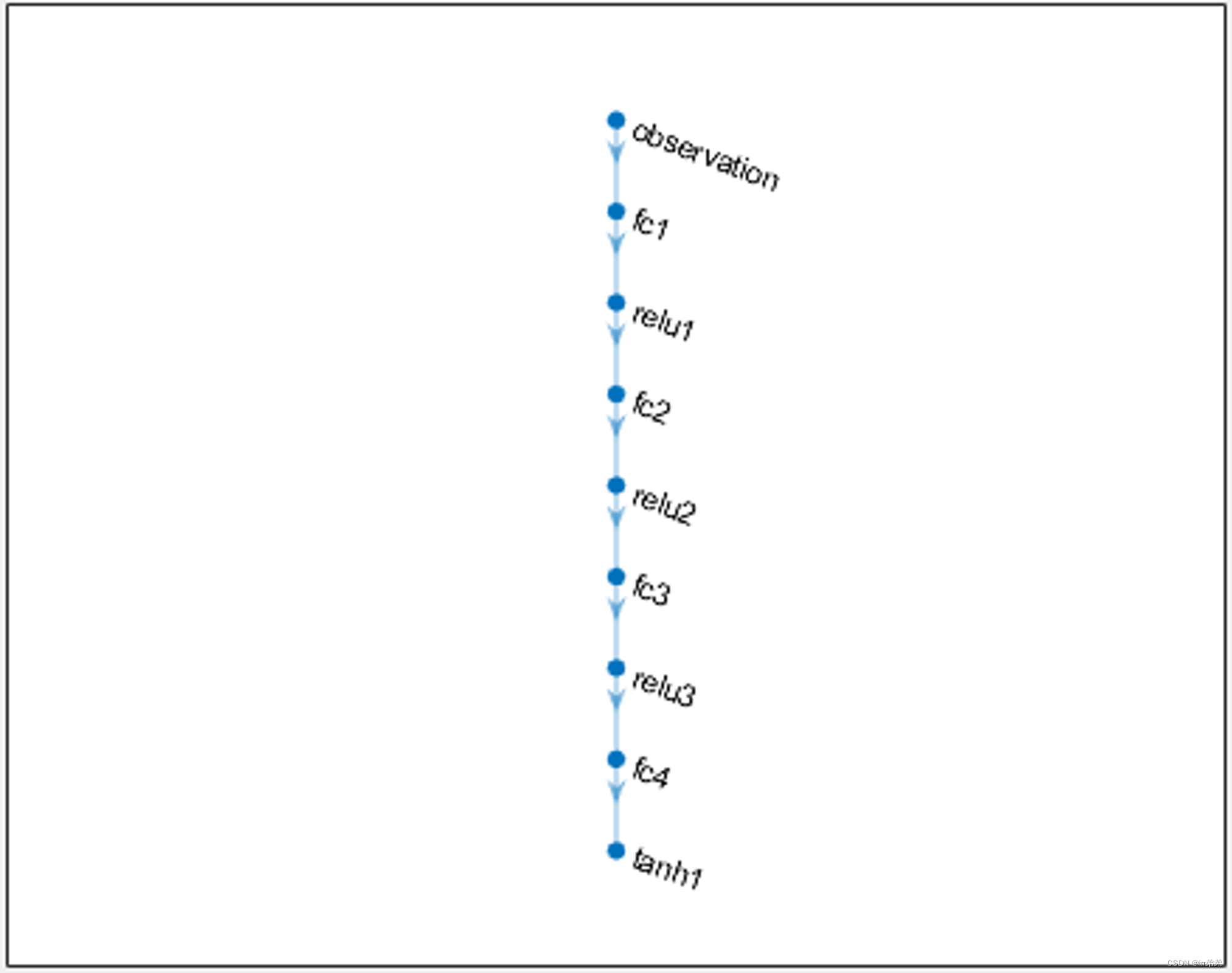

    actorNetwork = [
        imageInputLayer([numObs,1],'Normalization','none','Name','observation')
        fullyConnectedLayer(hiddenLayerSize,'Name','fc1')
        reluLayer('Name','relu1')
        fullyConnectedLayer(hiddenLayerSize,'Name','fc2')
        reluLayer('Name','relu2')
        fullyConnectedLayer(hiddenLayerSize,'Name','fc3')
        reluLayer('Name','relu3')
        fullyConnectedLayer(numAct,'Name','fc4')
        tanhLayer('Name','tanh1')];

Inspect the structure of the actor network a = π(s):

    plot(layerGraph(actorNetwork))

Setting the actor training options

    actorOptions = rlRepresentationOptions('LearnRate',1e-04,'GradientThreshold',1);
    % To train on the GPU, add 'UseDevice',"gpu" to the options above.
    actor = rlDeterministicActorRepresentation(actorNetwork,ObservationInfo,ActionInfo,...
        'Observation',{'observation'},'Action',{'tanh1'},actorOptions);

rlDeterministicActorRepresentation has to be told which layer of the network receives the observation and which layer outputs the action.
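Because the actor ends in a tanh layer, its raw output lies in [-1, 1], while the action specification uses MIN_action and MAX_action as limits. One common way to bridge the two is an affine rescaling, sketched below in plain MATLAB. The limit values are hypothetical placeholders, and whether the original project rescales this way (or at all) is an assumption.

```matlab
% Hypothetical limits standing in for MIN_action / MAX_action.
minAction = -20;
maxAction = 60;

% Affine map from [-1, 1] onto [minAction, maxAction].
actionScale = (maxAction - minAction) / 2;
actionBias  = (maxAction + minAction) / 2;

% Example raw actor outputs close to -1, 0, and 1.
rawOutput = tanh([-10 0 10]);

% Rescaled actions; the middle one lands on the midpoint of the range.
scaledAction = actionScale .* rawOutput + actionBias;
```

The same mapping could be done in the step function, or inside the network with the toolbox's scalingLayer; which of those fits best depends on where the limits are meant to be enforced.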

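Creating the agent

    agentOptions = rlDDPGAgentOptions(...
        'SampleTime',simulation_step,...
        'TargetSmoothFactor',1e-3,...
        'ExperienceBufferLength',1e6,...
        'DiscountFactor',0.99,...
        'MiniBatchSize',256);
    agentOptions.NoiseOptions.Variance = 1e-1;
    agentOptions.NoiseOptions.VarianceDecayRate = 1e-6;
    agent = rlDDPGAgent(actor,critic,agentOptions);

'SampleTime' defaults to 1. I am not yet sure of its exact role here; as far as I can tell from the toolbox documentation, in a MATLAB (non-Simulink) environment it only sets the time interval between consecutive entries of the logged experience.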
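Two of the options above are easier to judge with numbers. As I read the toolbox documentation, the exploration noise variance is multiplied by (1 - VarianceDecayRate) at every agent step, and TargetSmoothFactor is the weight tau in the soft target update thetaTarget = tau*theta + (1 - tau)*thetaTarget. The sketch below is plain MATLAB arithmetic under those two assumptions, not toolbox code, and the parameter vectors are made up.

```matlab
variance0 = 1e-1;   % agentOptions.NoiseOptions.Variance
decayRate = 1e-6;   % agentOptions.NoiseOptions.VarianceDecayRate
tau       = 1e-3;   % TargetSmoothFactor

% Number of steps until the variance has halved,
% assuming variance_k = variance0 * (1 - decayRate)^k.
halfLifeSteps = ceil(log(0.5) / log(1 - decayRate));

% Variance that remains after one million steps.
varianceAfter = variance0 * (1 - decayRate)^1e6;

% One soft update of hypothetical target-network parameters.
theta       = [1.0; 2.0];
thetaTarget = [0.0; 0.0];
thetaTarget = tau .* theta + (1 - tau) .* thetaTarget;
```

Under these assumptions the noise takes on the order of 7e5 steps to halve, so with a decay rate of 1e-6 the exploration noise stays close to its initial level for most of a typical run.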

Setting the training options

    maxepisodes = 1500;
    maxsteps = ceil(Max_simulation_time/simulation_step);
    trainingOptions = rlTrainingOptions(...
        'MaxEpisodes',maxepisodes,...
        'MaxStepsPerEpisode',maxsteps,...
        'StopOnError',"on",...
        'Verbose',false,...
        'Plots',"training-progress",...
        'StopTrainingCriteria',"AverageReward",...
        'StopTrainingValue',415,...
        'ScoreAveragingWindowLength',10,...
        'SaveAgentCriteria',"EpisodeReward",...
        'SaveAgentValue',415);

rlTrainingOptions also has settings for parallel (multi-process) training, but the parallel toolbox has to be installed and configured separately. Setting Verbose to true prints the training reward in the Command Window.

Starting training

    trainingStats = train(agent,env,trainingOptions);

Writing the step and reset function files for MATLAB reinforcement learning

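The reset function file

The function signature is as follows (it takes no inputs):

    function [InitialObservation, LoggedSignal] = Hyper_Guidance_ResetFunction()

As I understand it, LoggedSignal acts like a handle for whatever data needs to be carried along (typically the state); in the example below it is a struct with a State field. InitialObservation is the initial observation, and its size must match the observation dimension defined earlier. An example of the values returned by this function:

    LoggedSignal.State = [INIT_r/r_scale;INIT_V/V_scale;INIT_theta;INIT_phi;INIT_gamma;INIT_psi;];
    InitialObservation = [State_Obs_norm_1;State_Obs_norm_2;State_Obs_norm_3;State_Obs_norm_4;State_Obs_norm_5;State_Obs_norm_6;State_Obs_norm_7;State_Obs_norm_8];

The step function file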

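The function signature is as follows:

    function [NextObs,Reward,IsDone,LoggedSignals] = Hyper_Guidance_StepFunction(Action,LoggedSignals)

The step function also receives LoggedSignals as an input. It can be thought of as a communication channel between steps: the step function updates it, which is why it is also an output. For example, at every step the hypersonic vehicle reads the values of its 6 state variables from LoggedSignals and computes NextObs, the observation at the next time step. Note that the state variables belong to the vehicle's dynamics model, whereas the observation is something we define for the reinforcement learning problem, so there is a mapping s → obs between them.

Action is the action passed in; when using it, remember that its dimension is the one set in the action specification.

Once the reward and the Done condition are defined, the file is complete.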
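To make the two signatures concrete, here is a minimal pair of functions for a made-up one-dimensional point mass rather than the hypersonic vehicle. Every name, constant, and the reward in it is hypothetical; only the input and output lists follow the signatures shown above. Each function, including the helper toyObservation, would be saved in its own .m file named after the function.

```matlab
function [InitialObservation, LoggedSignal] = Toy_ResetFunction()
% Reset: the state is [position; velocity], stored in LoggedSignal.
    LoggedSignal.State = [1.0; 0.0];
    InitialObservation = toyObservation(LoggedSignal.State);
end

function [NextObs, Reward, IsDone, LoggedSignals] = Toy_StepFunction(Action, LoggedSignals)
% Step: read the state, integrate one Euler step, store the new state.
    dt = 0.1;                                   % hypothetical step size
    state = LoggedSignals.State;
    position = state(1) + dt * state(2);
    velocity = state(2) + dt * Action(1);       % Action has the size given in the action spec
    LoggedSignals.State = [position; velocity];

    NextObs = toyObservation(LoggedSignals.State);  % mapping s -> obs
    Reward  = -abs(position);                       % hypothetical reward
    IsDone  = abs(position) > 5;                    % hypothetical termination condition
end

function obs = toyObservation(state)
% Hypothetical mapping from the dynamic state to the RL observation.
    positionScale = 5;
    obs = [state(1) / positionScale; state(2)];
end
```

Keeping the state-to-observation mapping in one helper means the reset and step functions cannot drift apart in how they normalize the observation.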

Note

When creating the environment with

    rlFunctionEnv(ObservationInfo,ActionInfo,'Hyper_Guidance_StepFunction','Hyper_Guidance_ResetFunction');

the function names passed in must match the names of the reset and step files you wrote.

MATLAB reinforcement learning 2 (using PPO as an example)

MATLAB's PPO agent supports both continuous and discrete actions.

    numObs = 3;
    numAct = 2;

For continuous actions:

    actionInfo = rlNumericSpec([numAct 1],'LowerLimit',-1,'UpperLimit',1);  % continuous action space

For discrete actions:

    actionInfo = rlFiniteSetSpec(1:8)  % discrete action space (8g)

The observation is assumed to be continuous here:

    obsInfo = rlNumericSpec([numObs 1],...   % create a 3x1 observation
        'LowerLimit',[-inf -inf 0  ]',...
        'UpperLimit',[ inf  inf inf]');
    obsInfo.Name = 'observations';

Create the environment:

    env = rlFunctionEnv(obsInfo,actionInfo,'StepFunction','ResetFunction');

Full code

    numObs = 3;
    numAct = 2;
    obsInfo = rlNumericSpec([numObs 1],...   % create a 3x1 observation
        'LowerLimit',[-inf -inf 0  ]',...
        'UpperLimit',[ inf  inf inf]');
    obsInfo.Name = 'observations';
    % actionInfo = rlNumericSpec([numAct 1],...
    %     'LowerLimit',-1,...
    %     'UpperLimit',1);                   % continuous action space
    actionInfo = rlFiniteSetSpec(1:numAct);  % discrete action space: integers 1..numAct
    actionInfo.Name = 'Guidance Action';
    env = rlFunctionEnv(obsInfo,actionInfo,'StepFunction','ResetFunction');
    rng(0)
    criticLayerSizes = [200 100];
    actorLayerSizes = [200 100];
    criticNetwork = [
        imageInputLayer([numObs 1 1],'Normalization','none','Name','observation')
        fullyConnectedLayer(criticLayerSizes(1),'Name','CriticFC1')
        reluLayer('Name','CriticRelu1')
        fullyConnectedLayer(criticLayerSizes(2),'Name','CriticFC2')
        reluLayer('Name','CriticRelu2')
        fullyConnectedLayer(1,'Name','CriticOutput')];
    criticOpts = rlRepresentationOptions('LearnRate',1e-3);
    critic = rlValueRepresentation(criticNetwork,env.getObservationInfo, ...
        'Observation',{'observation'},criticOpts);
    actorNetwork = [
        imageInputLayer([numObs 1 1],'Normalization','none','Name','observation')
        fullyConnectedLayer(actorLayerSizes(1),'Name','ActorFC1')
        reluLayer('Name','ActorRelu1')
        fullyConnectedLayer(actorLayerSizes(2),'Name','ActorFC2')
        reluLayer('Name','ActorRelu2')
        fullyConnectedLayer(numAct,'Name','Action')
        tanhLayer('Name','ActorTanh1')];
    % For continuous actions, the last fully connected layer becomes
    % fullyConnectedLayer(2*numAct,'Name','Action')
    actorOptions = rlRepresentationOptions('LearnRate',1e-3);
    actor = rlStochasticActorRepresentation(actorNetwork,env.getObservationInfo,env.getActionInfo,...
        'Observation',{'observation'}, actorOptions);
    opt = rlPPOAgentOptions('ExperienceHorizon',512,...
        'ClipFactor',0.2,...
        'EntropyLossWeight',0.02,...
        'MiniBatchSize',64,...
        'NumEpoch',3,...
        'AdvantageEstimateMethod','gae',...
        'GAEFactor',0.95,...
        'DiscountFactor',0.9995);
    agent = rlPPOAgent(actor,critic,opt);
    trainOpts = rlTrainingOptions(...
        'MaxEpisodes',20000,...
        'MaxStepsPerEpisode',1200,...
        'Verbose',false,...
        'Plots','training-progress',...
        'StopTrainingCriteria','AverageReward',...
        'StopTrainingValue',10000,...
        'ScoreAveragingWindowLength',100,...
        'SaveAgentCriteria',"EpisodeReward",...
        'SaveAgentValue',11000);
    trainingStats = train(agent,env,trainOpts);

If the action space is continuous, the output dimension of the actor network is 2*numAct, twice the action dimension, because each action dimension needs one μ and one σ to define the Gaussian distribution used as the policy π.

The step and reset functions are the same as in the DDPG example. With discrete actions, the Action passed in is an integer in the range 1 to numAct.
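With a discrete action, the step function receives the chosen element of the finite set, so a lookup table is one simple way to turn it into a physical command. The table values below are hypothetical, and the sketch assumes the action set is the integers 1 to numAct.

```matlab
numAct = 2;
commandTable = [-1.0, 1.0];     % hypothetical command for each action index

Action = 2;                     % the value step would receive from the agent

% Guard against anything that is not a valid index into the table.
assert(Action >= 1 && Action <= numAct && Action == round(Action), ...
    'Action must be an integer index in 1..numAct');

command = commandTable(Action); % physical command used by the dynamics
```

If the finite set holds the physical values directly instead of indices, the lookup is unnecessary and Action can be used as is.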

Testing the agent

Simply load the model that was saved automatically under savedAgents; the loaded variable is named saved_agent:

    simSteps = 60;
    simOptions = rlSimulationOptions('MaxSteps',simSteps);
    experience = sim(env,saved_agent,simOptions);
    simActionSeries = reshape(experience.Action.GuidanceAction.Data, simSteps+1, 1);

The code above yields an action sequence of length 61.

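About the PPO actor

With continuous actions, the PPO actor's output has twice as many elements as there are actions. For two continuous actions the output is 4x1: the first and second elements are the action means, and the third and fourth are the standard deviations. MATLAB's built-in sim builds two Gaussian distributions from this 4-dimensional output and samples from them. If you port the policy to C and write the forward pass yourself, you can simply take the two means as the output, which turns it into a deterministic policy.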
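The difference between sampling and taking the mean can be sketched in a few lines of plain MATLAB. The actor output values here are made up, the layout [mean1; mean2; std1; std2] follows the description above, and any clipping to the action limits is left out.

```matlab
numAct = 2;
actorOutput = [0.3; -0.5; 0.1; 0.2];   % hypothetical [mean1; mean2; std1; std2]

% Split the 2*numAct output into means and standard deviations.
actionMean = actorOutput(1:numAct);
actionStd  = actorOutput(numAct+1:end);

% Stochastic policy: one Gaussian sample per action dimension.
sampledAction = actionMean + actionStd .* randn(numAct, 1);

% Deterministic policy for deployment: keep only the means.
deterministicAction = actionMean;
```

For a hand-written forward pass in C, only the rows of the last layer that produce the means are then needed at inference time.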